Google’s AI for Social Good team consists of researchers, engineers, volunteers, and others with a shared focus on positive social impact. Our mission is to demonstrate AI’s societal benefit by enabling real-world value, with projects spanning work in public health, accessibility, crisis response, climate and energy, and nature and society. We believe that the best way to drive positive change in underserved communities is by partnering with change-makers and the organizations they serve.

In this blog post we discuss work done by Project Euphonia, a team within AI for Social Good, that aims to improve automatic speech recognition (ASR) for people with disordered speech. For people with typical speech, an ASR model’s word error rate (WER) can be less than 10%. But for people with disordered speech patterns, such as stuttering, dysarthria and apraxia, the WER could reach 50% or even 90%, depending on the etiology and severity. To help address this problem, we worked with more than 1,000 participants to collect over 1,000 hours of disordered speech samples and used the data to show that ASR personalization is a viable avenue for bridging the performance gap for users with disordered speech. We’ve shown that personalization can be successful with as little as 3-4 minutes of training speech using layer freezing techniques.
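WER is the number of word substitutions (S), deletions (D) and insertions (I) needed to turn a model’s transcript into the reference transcript, divided by the number of reference words N: WER = (S + D + I) / N. Because insertions count, WER can exceed 100%. The following is a minimal sketch of word-level edit distance. The lowercase-and-split normalization is an assumption for illustration, not the text normalization used in the evaluations described here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()  # simplistic normalization (assumption)
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] is the edit distance between the reference words processed so far
    # and the first j hypothesis words; only one DP row is kept at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,                           # deletion
                curr[j - 1] + 1,                       # insertion
                prev[j - 1] + (ref_word != hyp_word),  # substitution or match
            )
        prev = curr
    return prev[-1] / len(ref)
```

For example, `word_error_rate("the cat sat on the mat", "the cat sat on mat")` has one deletion over six reference words, giving a WER of about 0.167.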

This work led to the development of Project Relate for anyone with atypical speech who could benefit from a personalized speech model. Built in partnership with Google’s Speech team, Project Relate enables people who find it hard to be understood by other people and technology to train their own models. People can use these personalized models to communicate more effectively and gain more independence. To make ASR more accessible and usable, we describe how we fine-tuned Google’s Universal Speech Model (USM) to better understand disordered speech out of the box, without personalization, for use with digital assistant technologies, dictation apps, and in conversations.

Addressing the challenges

Working closely with Project Relate users, we found that personalized models can be very useful, but for many users, recording dozens or hundreds of examples can be challenging. In addition, the personalized models did not always perform well in freeform conversation.

To address these challenges, Euphonia’s research efforts have been focusing on speaker-independent ASR (SI-ASR) to make models work better out of the box for people with disordered speech, so that no additional training is necessary.

Prompted Speech dataset for SI-ASR

The first step in building a robust SI-ASR model was to create representative dataset splits. We created the Prompted Speech dataset by splitting the Euphonia corpus into train, validation and test portions, while ensuring that each split spanned a range of speech impairment severity and underlying etiology, and that no speakers or phrases appeared in multiple splits. The training portion consists of over 950k speech utterances from over 1,000 speakers with disordered speech. The test set contains around 5,700 utterances from over 350 speakers. Speech-language pathologists manually reviewed all of the utterances in the test set for transcription accuracy and audio quality.
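The post does not say how the Euphonia splits were produced. The following is one hypothetical way to satisfy the constraints it lists, under several assumptions:

- Speakers are assigned round-robin within each (severity, etiology) stratum, so every split spans the strata.
- Phrases are assigned by a deterministic hash.
- An utterance is kept only when its speaker and its phrase land in the same split.

The `Utterance` record, the speaker pattern and the phrase weights are all illustrative. This scheme guarantees disjointness at the cost of discarding utterances whose speaker and phrase fall in different splits.

```python
import hashlib
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Utterance:
    speaker_id: str
    phrase: str
    severity: str  # hypothetical speaker-level label, e.g. "mild"
    etiology: str  # hypothetical speaker-level label, e.g. "ALS"


SPLITS = ("train", "validation", "test")
# Hypothetical pattern: within each stratum, the 1st speaker goes to test,
# the 2nd to validation, the next 8 to train, then the cycle repeats.
SPEAKER_PATTERN = ("test", "validation") + ("train",) * 8
PHRASE_WEIGHTS = {"train": 0.8, "validation": 0.1, "test": 0.1}  # hypothetical


def phrase_split(phrase: str) -> str:
    """Deterministically map a phrase to a split via a hash value in [0, 1)."""
    digest = hashlib.sha256(phrase.lower().encode("utf-8")).digest()
    x = int.from_bytes(digest[:8], "big") / 2**64
    cumulative = 0.0
    for name, weight in PHRASE_WEIGHTS.items():
        cumulative += weight
        if x < cumulative:
            return name
    return SPLITS[-1]  # guard against floating-point rounding


def split_corpus(utterances):
    # 1. Stratify speakers by (severity, etiology); each speaker gets one split.
    strata = defaultdict(set)
    for u in utterances:
        strata[(u.severity, u.etiology)].add(u.speaker_id)
    speaker_split = {}
    for key in sorted(strata):
        for i, speaker in enumerate(sorted(strata[key])):
            speaker_split[speaker] = SPEAKER_PATTERN[i % len(SPEAKER_PATTERN)]
    # 2. Keep an utterance only if its phrase maps to its speaker's split,
    #    so no speaker and no phrase can appear in two splits.
    splits = {name: [] for name in SPLITS}
    for u in utterances:
        target = speaker_split[u.speaker_id]
        if phrase_split(u.phrase) == target:
            splits[target].append(u)
    return splits
```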

Real Conversation test set

Unprompted or conversational speech differs from prompted speech in several ways. In conversation, people speak faster and enunciate less. They repeat words, repair misspoken words, and use a more expansive vocabulary that is specific and personal to themselves and their community. To improve a model for this use case, we created the Real Conversation test set to benchmark performance.

The Real Conversation test set was created with the help of trusted testers who recorded themselves speaking during conversations. The audio was reviewed, any personally identifiable information (PII) was removed, and the data was then transcribed by speech-language pathologists. The Real Conversation test set contains over 1,500 utterances from 29 speakers.

Adapting USM to disordered speech

We then tuned USM on the training split of the Euphonia Prompted Speech set to improve its performance on disordered speech. Instead of fine-tuning the full model, our tuning was based on residual adapters, a parameter-efficient tuning approach that adds tunable bottleneck layers as residuals between the transformer layers. Only these layers are tuned, while the rest of the model weights are left untouched. We have previously shown that this approach works very well for adapting ASR models to disordered speech. Residual adapters were only added to the encoder layers, and the bottleneck dimension was set to 64.
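The following is a minimal NumPy sketch of a residual adapter in one common formulation: layer norm, down-projection to the bottleneck, ReLU, up-projection, then a residual add. Only the bottleneck size of 64 and the encoder-only placement come from the text. The normalization, activation, initialization and toy `model_dim` are assumptions, and `encode_with_adapters` is a hypothetical helper in which plain functions stand in for frozen USM encoder layers. Zero-initializing the up-projection makes each adapter start as the identity, so tuning begins from the unmodified model’s behavior.

```python
import numpy as np


class ResidualAdapter:
    """Bottleneck adapter added as a residual after a frozen encoder layer."""

    def __init__(self, model_dim: int, bottleneck_dim: int = 64, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Only these parameters would be trained; encoder weights stay frozen.
        self.ln_scale = np.ones(model_dim)
        self.ln_bias = np.zeros(model_dim)
        self.w_down = rng.normal(0.0, 0.02, size=(model_dim, bottleneck_dim))
        self.b_down = np.zeros(bottleneck_dim)
        self.w_up = np.zeros((bottleneck_dim, model_dim))  # zero init: identity at start
        self.b_up = np.zeros(model_dim)

    def _layer_norm(self, x, eps=1e-6):
        mean = x.mean(axis=-1, keepdims=True)
        var = x.var(axis=-1, keepdims=True)
        return (x - mean) / np.sqrt(var + eps) * self.ln_scale + self.ln_bias

    def __call__(self, x):
        h = self._layer_norm(x) @ self.w_down + self.b_down  # project down to bottleneck
        h = np.maximum(h, 0.0)                               # ReLU
        return x + h @ self.w_up + self.b_up                 # project up, add residual

    def num_params(self) -> int:
        params = (self.ln_scale, self.ln_bias, self.w_down,
                  self.b_down, self.w_up, self.b_up)
        return sum(p.size for p in params)


def encode_with_adapters(x, frozen_layers, adapters):
    """Run each frozen encoder layer, then its trainable adapter."""
    for layer, adapter in zip(frozen_layers, adapters):
        x = adapter(layer(x))
    return x
```

With model dimension d and bottleneck 64, each adapter in this sketch has 2·d·64 + 3d + 64 parameters (16,832 for d = 128). That is a tiny fraction of a multi-billion-parameter model, which is what makes the approach parameter-efficient.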

Results

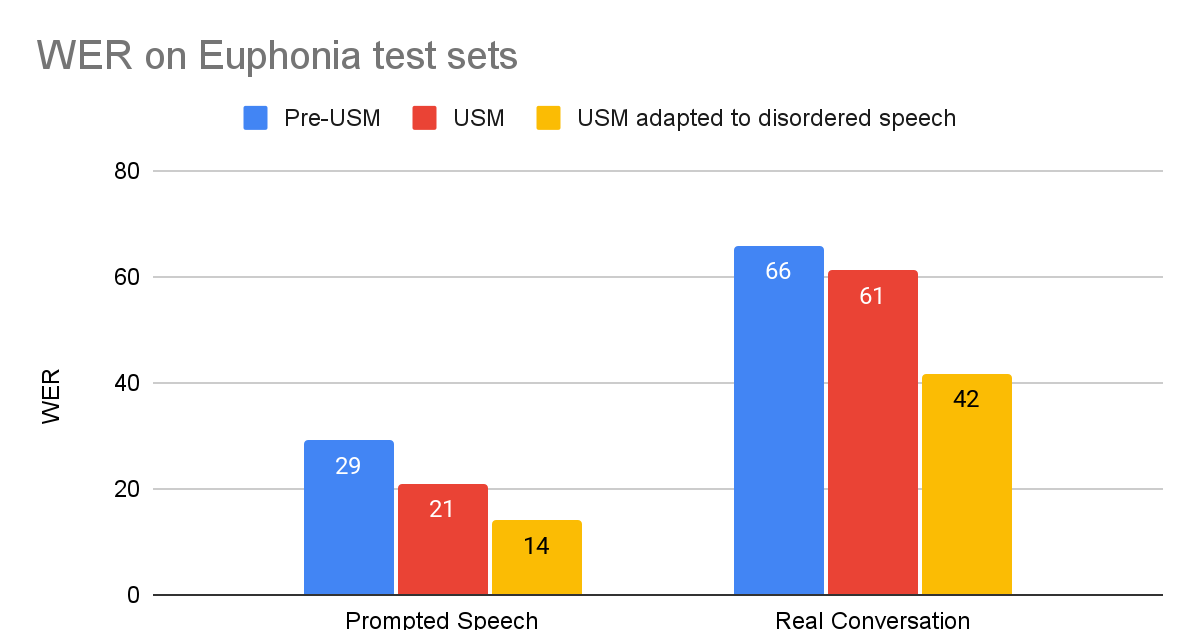

To consider the tailored USM, we in contrast it to older ASR fashions utilizing the 2 take a look at units described above. For every take a look at, we evaluate tailored USM to the pre-USM mannequin greatest suited to that job: (1) For brief prompted speech, we evaluate to Google’s manufacturing ASR mannequin optimized for brief type ASR; (2) for longer Real Conversation speech, we evaluate to a mannequin skilled for lengthy type ASR. USM enhancements over pre-USM fashions will be defined by USM’s relative dimension enhance, 120M to 2B parameters, and different enhancements mentioned within the USM weblog submit.

| Model phrase error charges (WER) for every take a look at set (decrease is best). |

We see that the USM adapted with disordered speech substantially outperforms the pre-USM models. The adapted USM’s WER on Real Conversation is 37% better than the pre-USM model’s, and on the Prompted Speech test set, the adapted USM performs 53% better.
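This sketch assumes “X% better” means a relative reduction in WER, computed as (WER_baseline - WER_adapted) / WER_baseline. The text does not state the absolute WERs, so the 40% baseline below is purely hypothetical. It only shows how a relative figure such as 37% translates into an absolute WER.

```python
def relative_wer_reduction(baseline_wer: float, adapted_wer: float) -> float:
    """Fraction by which adapted_wer is lower than baseline_wer."""
    if baseline_wer <= 0:
        raise ValueError("baseline WER must be positive")
    return (baseline_wer - adapted_wer) / baseline_wer


def adapted_wer_from_reduction(baseline_wer: float, reduction: float) -> float:
    """Inverse: the adapted WER implied by a given relative reduction."""
    return baseline_wer * (1.0 - reduction)


# Hypothetical baseline WER of 40% (not a figure from this post):
print(f"{adapted_wer_from_reduction(0.40, 0.37):.1%}")  # prints 25.2%
```

Note that a relative reduction says nothing about the starting point: the same 37% applies whether the baseline WER was 20% or 80%.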

These findings suggest that the adapted USM is significantly more usable for an end user with disordered speech. We can demonstrate this improvement by looking at transcripts of Real Conversation test set recordings from a trusted tester of Euphonia and Project Relate (see below).

| Ground Truth | Pre-USM ASR | Adapted USM |
|---|---|---|
| I now have an Xbox adaptive controller on my lap. | i now have so much and that guide on my mouth | i now had an xbox adapter controller on my lamp. |
| I’ve been speaking for quite a while now. Let’s see. | quite a while now | i have been speaking for quite a while now. |

Example audio¹ and transcriptions of a trusted tester’s speech from the Real Conversation test set.

A comparison of the pre-USM and adapted USM transcripts revealed some key advantages:

- The first example shows that the adapted USM is better at recognizing disordered speech patterns. The baseline misses key words like “Xbox” and “controller” that are important for a listener to understand what the speaker is trying to say.

- The second example shows how deletions are a primary issue for ASR models that are not trained with disordered speech. Though the baseline model transcribed a portion correctly, a large part of the utterance was not transcribed, losing the speaker’s intended message.
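Breaking WER into its components makes this failure mode visible. The following sketch backtraces one minimum-cost word alignment to count substitutions, deletions and insertions. The normalization (lowercasing and dropping punctuation other than apostrophes) is an assumption. When several minimum-cost alignments exist, the split between error types can depend on tie-breaking, though for the inputs below it is unique.

Applied to the first example, the pre-USM transcript scores as substitutions plus one insertion. A transcript that keeps only the end of an utterance, as in the second example, is scored almost entirely as deletions; this is shown with a hypothetical sentence.

```python
import re


def normalize(text: str) -> list[str]:
    # Assumed normalization: lowercase, drop punctuation except apostrophes.
    return re.sub(r"[^\w\s']", "", text.lower()).split()


def error_counts(reference: str, hypothesis: str) -> dict[str, int]:
    ref, hyp = normalize(reference), normalize(hypothesis)
    n, m = len(ref), len(hyp)
    # d[i][j] = minimum edits turning ref[:i] into hyp[:j]
    d = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        d[i][0] = i
    for j in range(m + 1):
        d[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d[i][j] = min(d[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1]),
                          d[i - 1][j] + 1,
                          d[i][j - 1] + 1)
    # Walk back from the bottom-right corner along one optimal path.
    counts = {"substitutions": 0, "deletions": 0, "insertions": 0}
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and d[i][j] == d[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1]):
            counts["substitutions"] += int(ref[i - 1] != hyp[j - 1])
            i, j = i - 1, j - 1
        elif i > 0 and d[i][j] == d[i - 1][j] + 1:
            counts["deletions"] += 1   # reference word missing from the hypothesis
            i -= 1
        else:
            counts["insertions"] += 1  # extra word in the hypothesis
            j -= 1
    return counts


truth = "I now have an Xbox adaptive controller on my lap."
print(error_counts(truth, "i now have so much and that guide on my mouth"))
# {'substitutions': 5, 'deletions': 0, 'insertions': 1}
print(error_counts("please turn on the kitchen lights", "the kitchen lights"))
# {'substitutions': 0, 'deletions': 3, 'insertions': 0}
```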

Conclusion

We believe that this work is an important step towards making speech recognition more accessible to people with disordered speech. We are continuing to work on improving the performance of our models. With the rapid advancements in ASR, we aim to ensure that people with disordered speech benefit as well.

Acknowledgements

Key contributors to this project include Fadi Biadsy, Michael Brenner, Julie Cattiau, Richard Cave, Amy Chung-Yu Chou, Dotan Emanuel, Jordan Green, Rus Heywood, Pan-Pan Jiang, Anton Kast, Marilyn Ladewig, Bob MacDonald, Philip Nelson, Katie Seaver, Joel Shor, Jimmy Tobin, Katrin Tomanek, and Subhashini Venugopalan. We gratefully acknowledge the support Project Euphonia received from members of the USM research team, including Yu Zhang, Wei Han, Nanxin Chen, and many others. Most importantly, we want to say a huge thank you to the 2,200+ participants who recorded speech samples and the many advocacy groups who helped us connect with these participants.

¹ Audio volume has been adjusted for ease of listening, but the original files would be more consistent with those used in training and would have pauses, silences, variable volume, etc.