Deep learning has recently driven tremendous progress in a wide array of applications, ranging from realistic image generation and impressive retrieval systems to language models that can hold human-like conversations. While this progress is very exciting, the widespread use of deep neural network models requires caution: as guided by Google’s AI Principles, we seek to develop AI technologies responsibly by understanding and mitigating potential risks, such as the propagation and amplification of unfair biases, and by protecting user privacy.

When a user asks for their data to be deleted, fully erasing its influence is challenging. Besides simply deleting the data from the databases where it is stored, one must also erase its influence on other artifacts, such as trained machine learning models. Moreover, recent research [1, 2] has shown that in some cases it may be possible to infer with high accuracy whether an example was used to train a machine learning model, using membership inference attacks (MIAs). This raises privacy concerns: even if an individual’s data is deleted from a database, it may still be possible to infer whether that individual’s data was used to train a model.
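To make the idea of a membership inference attack concrete, here is a minimal sketch of one of the simplest variants, a loss-threshold attack. It guesses that an example was a training member when the model’s loss on it falls below a threshold, exploiting the tendency of trained models to fit their own training data more closely. The function names, the threshold, and the loss values are hypothetical illustrations. Practical attacks are typically more sophisticated.

```python
from typing import List, Sequence


def predict_membership(losses: Sequence[float], threshold: float) -> List[bool]:
    """Loss-threshold MIA: guess 'member' (True) when the per-example loss is low.

    Trained models usually fit their own training examples more closely,
    so an unusually low loss is taken as evidence of membership.
    """
    return [loss < threshold for loss in losses]


def attack_accuracy(member_losses: Sequence[float],
                    nonmember_losses: Sequence[float],
                    threshold: float) -> float:
    """Fraction of examples whose membership the attack guesses correctly."""
    hits_in = sum(predict_membership(member_losses, threshold))
    hits_out = sum(not guess for guess in predict_membership(nonmember_losses, threshold))
    return (hits_in + hits_out) / (len(member_losses) + len(nonmember_losses))


# Hypothetical per-example losses of one model on training members vs. held-out examples.
members = [0.05, 0.10, 0.02, 0.30]
non_members = [0.90, 0.40, 1.20, 0.08]
print(attack_accuracy(members, non_members, threshold=0.2))  # 0.75
```

On a balanced set, an attack accuracy well above 0.5 (random guessing) signals that the model leaks information about which examples it was trained on.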

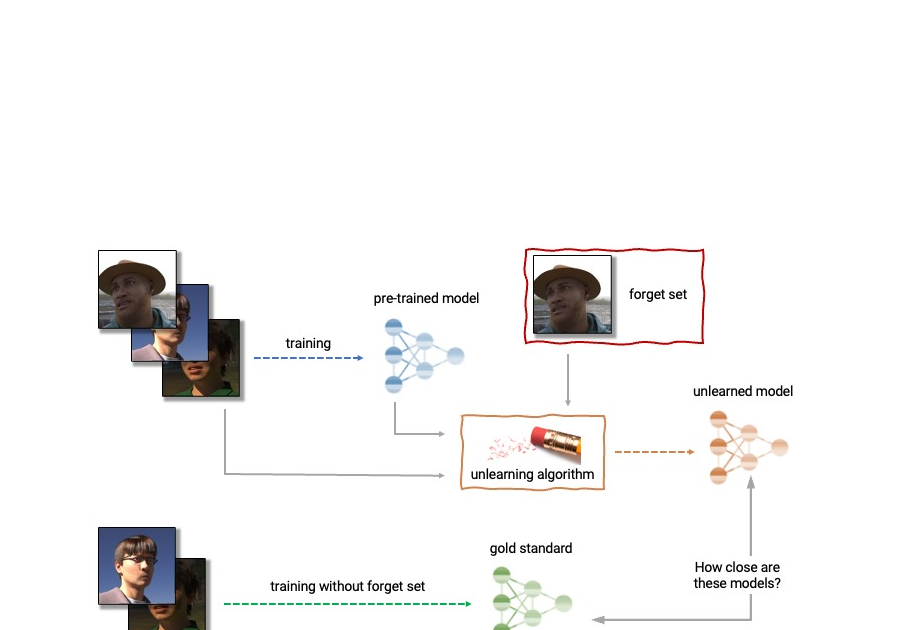

Machine unlearning is an emerging subfield of machine learning that addresses this problem: it aims to remove the influence of a specific subset of training examples (the “forget set”) from a trained model. Furthermore, an ideal unlearning algorithm would remove the influence of those examples while maintaining other beneficial properties, such as accuracy on the rest of the training set (the “retain set”) and generalization to held-out examples. A straightforward way to produce this unlearned model is to retrain the model on an adjusted training set that excludes the samples in the forget set. However, this is not always a viable option, as retraining deep models can be computationally expensive. An ideal unlearning algorithm would instead use the already-trained model as a starting point and efficiently adjust it to remove the influence of the requested data.
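The two strategies in this paragraph can be contrasted on a toy problem. The sketch below is a hypothetical illustration, not a method from the competition: it trains a tiny logistic-regression model with plain SGD in pure Python. `unlearn_by_retraining` is the exact but expensive baseline (train from scratch on the retain set), while `unlearn_by_finetuning` is one common cheap heuristic that starts from the already-trained weights and keeps training on the retain set only. It can run for far fewer epochs, but it offers no guarantee that the forget set’s influence is actually removed.

```python
import math
import random
from typing import List, Sequence, Tuple

Example = Tuple[List[float], int]  # (feature vector, binary label)


def sgd_logistic(data: Sequence[Example], init_w: Sequence[float],
                 epochs: int, lr: float = 0.1, seed: int = 0) -> List[float]:
    """Run plain SGD on the logistic loss, starting from `init_w`."""
    rng = random.Random(seed)
    w = list(init_w)
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            x, y = data[i]
            z = sum(wj * xj for wj, xj in zip(w, x))
            p = 1.0 / (1.0 + math.exp(-z))
            # Gradient of the log loss with respect to w is (p - y) * x.
            w = [wj - lr * (p - y) * xj for wj, xj in zip(w, x)]
    return w


def retain_set(train: Sequence[Example], forget_idx: Sequence[int]) -> List[Example]:
    """Training examples whose indices are not in the forget set."""
    drop = set(forget_idx)
    return [ex for i, ex in enumerate(train) if i not in drop]


def unlearn_by_retraining(train, forget_idx, dim, epochs):
    """Exact but expensive: retrain from scratch without the forget set."""
    return sgd_logistic(retain_set(train, forget_idx), [0.0] * dim, epochs)


def unlearn_by_finetuning(trained_w, train, forget_idx, epochs):
    """Cheap heuristic: keep training the existing model on the retain set only."""
    return sgd_logistic(retain_set(train, forget_idx), trained_w, epochs)


# Toy data: the first feature is a constant bias term, the second decides the label.
train = [([1.0, x / 10.0], int(x > 0)) for x in range(-10, 11) if x != 0]
trained = sgd_logistic(train, [0.0, 0.0], epochs=50)
forget = [0, 1, 2]  # hypothetical indices requested for deletion
retrained = unlearn_by_retraining(train, forget, dim=2, epochs=50)
finetuned = unlearn_by_finetuning(trained, train, forget, epochs=5)
```

Because `unlearn_by_retraining` never reads the forget examples, its output cannot depend on them; the fine-tuned model, by contrast, still starts from weights that were shaped by the forget set.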

Today we’re thrilled to announce that we have teamed up with a broad group of academic and industrial researchers to organize the first Machine Unlearning Challenge. The competition considers a realistic scenario in which, after training, a certain subset of the training images must be forgotten to protect the privacy or rights of the individuals concerned. The competition will be hosted on Kaggle, and submissions will be automatically scored in terms of both forgetting quality and model utility. We hope that this competition will help advance the state of the art in machine unlearning and encourage the development of efficient, effective and ethical unlearning algorithms.

Machine unlearning purposes

Machine unlearning has applications beyond protecting user privacy. For instance, one can use unlearning to erase inaccurate or outdated information from trained models (e.g., due to labeling errors or changes in the environment) or to remove harmful, manipulated, or outlier data.

The field of machine unlearning is related to other areas of machine learning, such as differential privacy, life-long learning, and fairness. Differential privacy aims to guarantee that no particular training example has too large an influence on the trained model, a stronger goal than that of unlearning, which only requires erasing the influence of the designated forget set. Life-long learning research aims to design models that can learn continuously while maintaining previously acquired skills. As work on unlearning progresses, it may also open additional ways to boost fairness in models, by correcting unfair biases or disparate treatment of members belonging to different groups (e.g., demographics, age groups, etc.).

Anatomy of unlearning. An unlearning algorithm takes as input a pre-trained model and one or more samples from the training set to unlearn (the “forget set”). From the model, the forget set, and the retain set (the remaining training examples), the unlearning algorithm produces an updated model. An ideal unlearning algorithm produces a model that is indistinguishable from the model trained without the forget set.

Challenges of machine unlearning

The problem of unlearning is complex and multifaceted, as it involves several conflicting objectives: forgetting the requested data, maintaining the model’s utility (e.g., accuracy on retained and held-out data), and efficiency. Because of this, existing unlearning algorithms make different trade-offs. For example, full retraining achieves successful forgetting without damaging model utility, but with poor efficiency, while adding noise to the weights achieves forgetting at the expense of utility.
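As a rough illustration of the noise-based end of this trade-off, the sketch below perturbs every weight with independent Gaussian noise of scale `sigma`. A larger `sigma` moves the model further from its trained state, which tends to wash out what it learned about individual examples but also degrades accuracy. The linear model, the toy data, and the function names are hypothetical; the sketch does not reproduce any specific published algorithm.

```python
import random
from typing import List, Sequence, Tuple


def add_weight_noise(weights: Sequence[float], sigma: float, seed: int = 0) -> List[float]:
    """Return a copy of `weights` with i.i.d. N(0, sigma^2) noise added to each entry."""
    rng = random.Random(seed)
    return [w + rng.gauss(0.0, sigma) for w in weights]


def linear_accuracy(weights: Sequence[float],
                    data: Sequence[Tuple[Sequence[float], int]]) -> float:
    """Accuracy of the linear classifier sign(w . x) on (features, label) pairs."""
    correct = 0
    for x, y in data:
        score = sum(w * xi for w, xi in zip(weights, x))
        correct += int((score > 0) == bool(y))
    return correct / len(data)


# Hypothetical trained weights [bias, slope] and held-out data.
trained = [0.0, 3.0]
held_out = [([1.0, x / 10.0], int(x > 0)) for x in range(-10, 11) if x != 0]
for sigma in (0.0, 0.5, 2.0, 8.0):
    noisy = add_weight_noise(trained, sigma, seed=1)
    print(sigma, linear_accuracy(noisy, held_out))
```

In practice `sigma` would have to be tuned so that forgetting (measured, for example, with a membership inference attack) is adequate while utility remains acceptable.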

Furthermore, the evaluation of forgetting algorithms in the literature has so far been highly inconsistent. While some works report the classification accuracy on the samples to unlearn, others report the distance to the fully retrained model, and yet others use the error rate of membership inference attacks as a metric for forgetting quality [4, 5, 6].
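The inconsistency is easier to see when the metrics are written out. The hypothetical helpers below compute two of the quantities mentioned above for a linear classifier: accuracy on the forget set, and the Euclidean distance between the unlearned weights and those of a fully retrained model. The third, the error rate of a membership inference attack, is simply one minus the attack’s accuracy. Each number measures something different, so papers that report different ones cannot be compared directly. All names and values here are illustrative assumptions.

```python
import math
from typing import Sequence, Tuple

Example = Tuple[Sequence[float], int]  # (features, binary label)


def forget_set_accuracy(weights: Sequence[float], forget: Sequence[Example]) -> float:
    """Accuracy of sign(w . x) on the examples that were supposed to be forgotten."""
    correct = sum(
        int((sum(w * xi for w, xi in zip(weights, x)) > 0) == bool(y))
        for x, y in forget
    )
    return correct / len(forget)


def distance_to_retrained(unlearned: Sequence[float], retrained: Sequence[float]) -> float:
    """Euclidean distance in weight space to a model retrained without the forget set."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(unlearned, retrained)))


# Hypothetical weights [bias, slope] and a two-example forget set.
forget = [([1.0, 0.5], 1), ([1.0, -0.5], 0)]
unlearned = [0.1, 2.0]
retrained = [0.4, 2.4]
print(forget_set_accuracy(unlearned, forget))  # 1.0
print(distance_to_retrained(unlearned, retrained))
```

A high forget-set accuracy is not by itself evidence of failed unlearning: a model retrained without those examples may still classify them correctly by generalizing from similar data.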

We believe that the inconsistency of evaluation metrics and the lack of a standardized protocol is a serious impediment to progress in the field: we are unable to make direct comparisons between different unlearning methods in the literature. This leaves us with a myopic view of the relative merits and drawbacks of different approaches, as well as of open challenges and opportunities for developing improved algorithms. To address the issue of inconsistent evaluation and to advance the state of the art in machine unlearning, we have teamed up with a broad group of academic and industrial researchers to organize the first unlearning challenge.

Announcing the first Machine Unlearning Challenge

We are pleased to announce the first Machine Unlearning Challenge, which will be held as part of the NeurIPS 2023 Competition Track. The goal of the competition is twofold. First, by unifying and standardizing the evaluation metrics for unlearning, we hope to identify the strengths and weaknesses of different algorithms through apples-to-apples comparisons. Second, by opening this competition to everyone, we hope to foster novel solutions and shed light on open challenges and opportunities.

The competition will be hosted on Kaggle and will run between mid-July 2023 and mid-September 2023. As part of the competition, today we’re announcing the availability of the starting kit. This starting kit provides a foundation for participants to build and test their unlearning models on a toy dataset.

The competition considers a realistic scenario in which an age predictor has been trained on face images and, after training, a certain subset of the training images must be forgotten to protect the privacy or rights of the individuals concerned. For this, we will make available as part of the starting kit a dataset of synthetic faces (samples shown below), and we will also use several real-face datasets to evaluate submissions. Participants are asked to submit code that takes as input the trained predictor and the forget and retain sets, and outputs the weights of a predictor that has unlearned the designated forget set. We will evaluate submissions based on both the strength of the forgetting algorithm and model utility. We will also enforce a hard cut-off that rejects unlearning algorithms that run slower than a fraction of the time it takes to retrain. A valuable outcome of this competition will be to characterize the trade-offs of different unlearning algorithms.
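The submission interface and the runtime cut-off described above can be sketched as a small harness. The signature `unlearn(weights, forget_set, retain_set) -> weights` mirrors the inputs and outputs listed in this paragraph, but the names, the `fraction` value, and the choice to time the call after it finishes (rather than interrupting it) are assumptions for illustration; the actual competition tooling may work differently.

```python
import time
from typing import Any, Callable, Optional, Sequence, Tuple

# Hypothetical interface: (trained weights, forget set, retain set) -> new weights.
Unlearner = Callable[[Any, Sequence[Any], Sequence[Any]], Any]


def run_with_budget(unlearn: Unlearner, weights: Any,
                    forget_set: Sequence[Any], retain_set: Sequence[Any],
                    retrain_seconds: float, fraction: float) -> Tuple[Optional[Any], float]:
    """Run `unlearn` and accept its output only if it beat the time budget.

    The budget is `fraction * retrain_seconds`. Returns (new_weights, elapsed),
    with new_weights set to None when the submission was too slow.
    """
    start = time.perf_counter()
    new_weights = unlearn(weights, forget_set, retain_set)
    elapsed = time.perf_counter() - start
    if elapsed > fraction * retrain_seconds:
        return None, elapsed  # rejected: slower than the allowed fraction of retraining
    return new_weights, elapsed


def identity_unlearner(weights, forget_set, retain_set):
    """Trivial placeholder that returns the weights unchanged (and forgets nothing)."""
    return weights


def slow_unlearner(weights, forget_set, retain_set):
    """Placeholder that just sleeps, to exercise the cut-off."""
    time.sleep(0.05)
    return weights


accepted, _ = run_with_budget(identity_unlearner, [1.0, 2.0], [], [], retrain_seconds=10.0, fraction=0.2)
rejected, _ = run_with_budget(slow_unlearner, [1.0, 2.0], [], [], retrain_seconds=0.1, fraction=0.2)
print(accepted, rejected)  # [1.0, 2.0] None
```

Note that `identity_unlearner` passes the time check while forgetting nothing, which is why runtime alone cannot be the score. A real harness would also need to stop overrunning submissions rather than wait for them, for example by running each one in a separate process with a timeout.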

Excerpt images from the Face Synthetics dataset together with age annotations. The competition considers the scenario in which an age predictor has been trained on face images like these and, after training, a certain subset of the training images must be forgotten.

To evaluate forgetting, we will use tools inspired by MIAs, such as LiRA (the likelihood ratio attack). MIAs were first developed in the privacy and security literature, and their goal is to infer which examples were part of the training set. Intuitively, if unlearning is successful, the unlearned model contains no traces of the forgotten examples, causing MIAs to fail: the attacker would be unable to infer that the forget set was, in fact, part of the original training set. In addition, we will use statistical tests to quantify how different the distribution of unlearned models (produced by a particular submitted unlearning algorithm) is from the distribution of models retrained from scratch. For an ideal unlearning algorithm, these two distributions will be indistinguishable.
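Both evaluation ideas can be sketched on per-example statistics. `lira_style_score` follows the spirit of LiRA: given one model’s confidence on an example, it compares how likely that confidence is under models trained with the example (“in”) versus without it (“out”), modeling each side as a Gaussian over logit-scaled confidences. `ks_statistic` computes the two-sample Kolmogorov-Smirnov distance, one possible statistical test for comparing a statistic across unlearned and retrained models. Both are simplified, hypothetical stand-ins; the competition’s exact tests, and how the “in” and “out” reference models are obtained, are not specified here.

```python
import math
import statistics
from typing import Sequence


def logit(p: float, eps: float = 1e-6) -> float:
    """Logit-scale a confidence, clamped away from 0 and 1 to avoid infinities."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def gaussian_logpdf(x: float, mu: float, sigma: float) -> float:
    """Log-density of N(mu, sigma^2) at x (sigma must be positive)."""
    return -0.5 * math.log(2.0 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2.0 * sigma ** 2)


def lira_style_score(observed_conf: float,
                     in_confs: Sequence[float],
                     out_confs: Sequence[float]) -> float:
    """Log-likelihood ratio of 'example was trained on' vs. 'was not'.

    Needs at least two distinct confidences on each side. Positive values
    suggest membership; for a well-unlearned forget example, the score should
    look like that of an example the model never saw.
    """
    x = logit(observed_conf)
    ins = [logit(c) for c in in_confs]
    outs = [logit(c) for c in out_confs]
    return (gaussian_logpdf(x, statistics.mean(ins), statistics.stdev(ins))
            - gaussian_logpdf(x, statistics.mean(outs), statistics.stdev(outs)))


def ks_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    gap = 0.0
    for v in sorted(set(a) | set(b)):
        while i < len(a) and a[i] <= v:
            i += 1
        while j < len(b) and b[j] <= v:
            j += 1
        gap = max(gap, abs(i / len(a) - j / len(b)))
    return gap


# Hypothetical confidences of reference models trained with / without one example.
in_confs = [0.95, 0.97, 0.93, 0.96]
out_confs = [0.60, 0.70, 0.55, 0.65]
print(lira_style_score(0.96, in_confs, out_confs) > 0)  # True: looks like a member
print(lira_style_score(0.62, in_confs, out_confs) > 0)  # False: looks like a non-member
print(ks_statistic([1, 2, 3], [4, 5, 6]))  # 1.0
```

A KS distance close to zero between, say, the forget-example losses of many unlearned models and those of many retrained models is consistent with the two distributions being indistinguishable, which is the ideal described above.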

Conclusion

Machine unlearning is a powerful tool that has the potential to address several open problems in machine learning. As research in this area continues, we hope to see new methods that are more efficient, effective, and responsible. We are thrilled to have the opportunity, through this competition, to spark interest in this field, and we look forward to sharing our insights and findings with the community.

Acknowledgements

The authors of this post are now part of Google DeepMind. We are writing this blog post on behalf of the organizing team of the Unlearning Competition: Eleni Triantafillou*, Fabian Pedregosa* (*equal contribution), Meghdad Kurmanji, Kairan Zhao, Gintare Karolina Dziugaite, Peter Triantafillou, Ioannis Mitliagkas, Vincent Dumoulin, Lisheng Sun Hosoya, Peter Kairouz, Julio C. S. Jacques Junior, Jun Wan, Sergio Escalera and Isabelle Guyon.