AI-related products and technologies are built and deployed in a societal context: that is, a dynamic and complex collection of social, cultural, historical, political and economic circumstances. Because societal contexts are by nature dynamic, complex, non-linear, contested, subjective, and highly qualitative, they are challenging to translate into the quantitative representations, methods, and practices that dominate standard machine learning (ML) approaches and responsible AI product development practices.

The first phase of AI product development is problem understanding, and this phase has tremendous influence over how problems (e.g., increasing cancer screening availability and accuracy) are formulated for ML systems to solve, as well as over many other downstream decisions, such as the choice of dataset and ML architecture. When the societal context in which a product will operate is not articulated well enough to result in robust problem understanding, the resulting ML solutions can be fragile and can even propagate unfair biases.

When AI product developers lack access to the knowledge and tools necessary to effectively understand and consider societal context during development, they tend to abstract it away. This abstraction leaves them with a shallow, quantitative understanding of the problems they seek to solve, while product users and society stakeholders, who are proximate to these problems and embedded in related societal contexts, tend to have a deep qualitative understanding of those same problems. This qualitative–quantitative divergence in ways of understanding complex problems, which separates product users and society from developers, is what we call the problem understanding chasm.

This chasm has repercussions in the real world: for example, it was the root cause of the racial bias discovered in a widely used healthcare algorithm intended to solve the problem of choosing patients with the most complex healthcare needs for special programs. Incomplete understanding of the societal context in which the algorithm would operate led system designers to form incorrect and oversimplified causal theories about what the key problem factors were. Critical socio-structural factors, including lack of access to healthcare, lack of trust in the health care system, and underdiagnosis due to human bias, were left out, while spending on healthcare was highlighted as a predictor of complex health need.

To bridge the problem understanding chasm responsibly, AI product developers need tools that put community-validated and structured knowledge of societal context about complex societal problems at their fingertips, starting with problem understanding but continuing throughout the product development lifecycle. To that end, Societal Context Understanding Tools and Solutions (SCOUTS), part of the Responsible AI and Human-Centered Technology (RAI-HCT) team within Google Research, is a dedicated research team focused on the mission to “empower people with the scalable, trustworthy societal context knowledge required to realize responsible, robust AI and solve the world’s most complex societal problems.” SCOUTS is motivated by the significant challenge of articulating societal context, and it conducts innovative foundational and applied research to produce structured societal context knowledge and to integrate it into all phases of the AI-related product development lifecycle. Last year we announced that Jigsaw, Google’s incubator for building technology that explores solutions to threats to open societies, leveraged our structured societal context knowledge approach during the data preparation and evaluation phases of model development to scale bias mitigation for their widely used Perspective API toxicity classifier. Going forward, SCOUTS’ research agenda focuses on the problem understanding phase of AI-related product development, with the goal of bridging the problem understanding chasm.

Bridging the AI problem understanding chasm

Bridging the AI problem understanding chasm requires two key ingredients: 1) a reference frame for organizing structured societal context knowledge and 2) participatory, non-extractive methods to elicit community expertise about complex problems and represent it as structured knowledge. SCOUTS has published innovative research in both areas.

An illustration of the problem understanding chasm.

A societal context reference frame

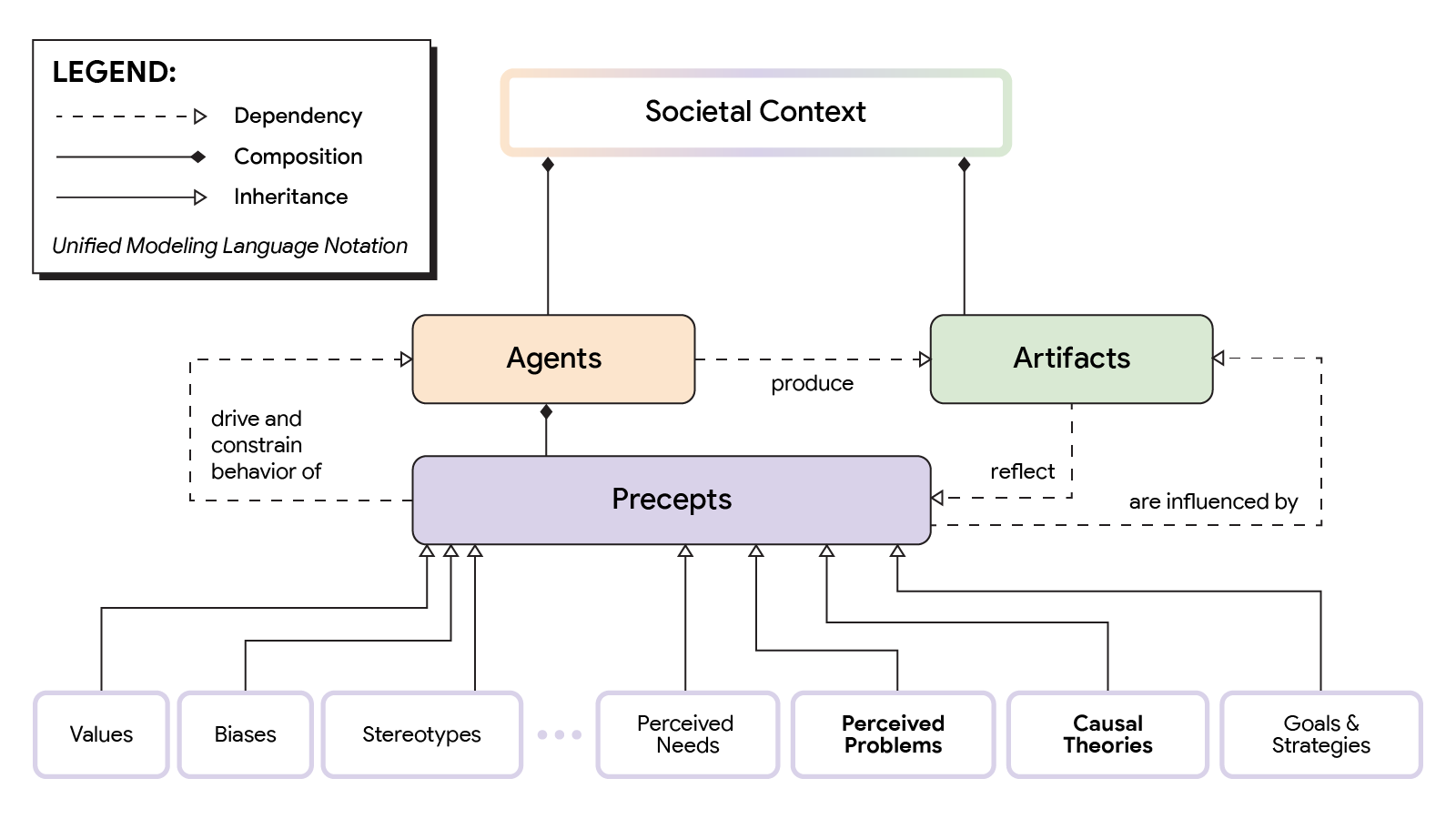

An essential ingredient for producing structured knowledge is a taxonomy that provides the structure for organizing it. SCOUTS collaborated with other RAI-HCT teams (TasC, Impact Lab), Google DeepMind, and external system dynamics experts to develop a taxonomic reference frame for societal context. To contend with the complex, dynamic, and adaptive nature of societal context, we leverage complex adaptive systems (CAS) theory to propose a high-level taxonomic model for organizing societal context knowledge. The model pinpoints three key elements of societal context and the dynamic feedback loops that bind them together: agents, precepts, and artifacts.

- Agents: These can be individuals or institutions.

- Precepts: The preconceptions, including beliefs, values, stereotypes and biases, that constrain and drive the behavior of agents. An example of a basic precept is that “all basketball players are over 6 feet tall.” That limiting assumption can lead to failures in identifying basketball players of smaller stature.

- Artifacts: Agent behaviors produce many kinds of artifacts, including language, data, technologies, societal problems and products.
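One way to make the reference frame concrete is to encode its three elements as data types. The sketch below is a minimal, hypothetical Python model: the class names, fields, and the `act`/`encounter` methods are illustrative assumptions, not a published SCOUTS schema. It shows agents holding precepts, behavior producing artifacts that record the precepts that shaped them, and artifacts feeding back into the precepts of other agents.

```python
from dataclasses import dataclass, field


@dataclass
class Precept:
    # A belief, value, stereotype, bias, or causal theory held by an agent.
    statement: str


@dataclass
class Artifact:
    # Something produced by agent behavior: language, data, a technology, a product.
    description: str
    produced_by: str
    shaped_by: list[Precept] = field(default_factory=list)  # precepts behind the behavior


@dataclass
class Agent:
    # An individual or an institution (the "kind" label is a hypothetical field).
    name: str
    kind: str
    precepts: list[Precept] = field(default_factory=list)

    def act(self, description: str) -> Artifact:
        # Precepts constrain and drive behavior, so the resulting artifact
        # records which precepts shaped it.
        return Artifact(description, self.name, list(self.precepts))

    def encounter(self, artifact: Artifact) -> None:
        # Feedback loop: artifacts in turn shape the precepts of agents who use them.
        for precept in artifact.shaped_by:
            if precept not in self.precepts:
                self.precepts.append(precept)


# Usage with the basketball precept from the list above (agents are hypothetical).
height_precept = Precept("all basketball players are over 6 feet tall")
scout = Agent("talent scout", "individual", [height_precept])
report = scout.act("recruiting list that excludes players under 6 feet")
league = Agent("youth league", "institution")
league.encounter(report)
print([p.statement for p in league.precepts])
```

The point of the design is that precepts are never stored only on agents: they travel with the artifacts agents produce, which is how a single limiting assumption can propagate from one agent to an institution.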

The relationships between these entities are dynamic and complex. Our work hypothesizes that precepts are the most critical element of societal context, and we highlight the problems people perceive and the causal theories they hold about why those problems exist as particularly influential precepts that are core to understanding societal context. For example, in the case of racial bias in the medical algorithm described earlier, the causal theory precept held by designers was that complex health problems would cause healthcare expenditures to go up for all populations. That incorrect precept directly led to the choice of healthcare spending as the proxy variable for the model to predict complex healthcare need, which in turn led to the model being biased against Black patients who, due to societal factors such as lack of access to healthcare and underdiagnosis due to bias, do not on average always spend more on healthcare when they have complex healthcare needs. A key open question is: how can we ethically and equitably elicit causal theories from the people and communities who are most proximate to problems of inequity and transform them into useful structured knowledge?

Illustrative version of the societal context reference frame.

Taxonomic version of the societal context reference frame.
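The mechanism by which the incorrect spending precept produced bias in the healthcare algorithm described earlier can be illustrated with a deliberately tiny, hypothetical simulation. The numbers below (ten patients per group, and a spending factor of 0.55 per unit of need for the group with less access to care) are invented for illustration and are not taken from any study of that algorithm. Both groups have exactly the same distribution of true need; only the need-to-spending relationship differs.

```python
# Hypothetical toy: two groups with identical true health need, but group "B"
# faces less access to care, so the same need produces less spending.
ACCESS = {"A": 1.00, "B": 0.55}  # assumed spending per unit of need


def build_patients():
    patients = []
    for group in ("A", "B"):
        for need in range(1, 11):  # identical need distribution in both groups
            patients.append({"group": group, "need": need,
                             "spending": need * ACCESS[group]})
    return patients


def select(patients, key, k):
    # Enroll the k patients ranked highest by the given variable
    # and count how many come from each group.
    ranked = sorted(patients, key=lambda p: p[key], reverse=True)
    counts = {"A": 0, "B": 0}
    for p in ranked[:k]:
        counts[p["group"]] += 1
    return counts


patients = build_patients()
print("ranked by true need:", select(patients, "need", 6))
print("ranked by spending: ", select(patients, "spending", 6))
```

Ranking by true need enrolls three patients from each group, while ranking by the spending proxy enrolls five from group A and one from group B, even though nothing about need differs between the groups. The proxy is only valid under the precept that need raises spending equally for everyone.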

Working with communities to foster the responsible application of AI to healthcare

Since its inception, SCOUTS has worked to build capacity in historically marginalized communities to articulate the broader societal context of the complex problems that matter to them, using a practice called community based system dynamics (CBSD). System dynamics (SD) is a methodology for articulating causal theories about complex problems, both qualitatively as causal loop diagrams and stock and flow diagrams (CLDs and SFDs, respectively) and quantitatively as simulation models. Its inherent support for visual qualitative tools, quantitative methods, and collaborative model building makes it an ideal ingredient for bridging the problem understanding chasm. CBSD is a community-based, participatory variant of SD specifically focused on building capacity within communities to collaboratively describe and model the problems they face as causal theories, directly and without intermediaries. With CBSD we’ve witnessed community groups learn the basics and begin drawing CLDs within 2 hours.
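To make the quantitative side of SD concrete, the sketch below simulates a single stock with one inflow and one outflow using Euler integration, a common default integration method in system dynamics software. The stock, the target of 100, the adjustment time, and the drain rate are all hypothetical; models built with communities through CBSD usually contain many interacting stocks and feedback loops.

```python
def simulate_stock(initial, inflow, outflow, dt=0.25, steps=200):
    """Euler-integrate one stock: d(stock)/dt = inflow(stock) - outflow(stock)."""
    stock = initial
    history = [stock]
    for _ in range(steps):
        stock += (inflow(stock) - outflow(stock)) * dt
        history.append(stock)
    return history


# Hypothetical balancing loop: the inflow closes a fraction of the gap
# between the stock and a target each time unit, while a fixed fraction drains away.
TARGET, ADJUST_TIME, DRAIN_RATE = 100.0, 4.0, 0.05

history = simulate_stock(
    initial=10.0,
    inflow=lambda s: max(TARGET - s, 0.0) / ADJUST_TIME,
    outflow=lambda s: DRAIN_RATE * s,
)
print(round(history[-1], 2))
```

Because the inflow shrinks as the stock approaches its target while the outflow grows with the stock, the two flows form a balancing loop, and the stock settles where they are equal: (100 - s)/4 = 0.05s, or s = 250/3 ≈ 83.3. A CLD captures the same structure qualitatively as a loop of signed arrows; the SFD adds the quantities needed to simulate it.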

There is huge potential for AI to improve medical diagnosis. But the safety, equity, and reliability of AI-related health diagnostic algorithms depend on diverse and balanced training datasets. An open challenge in the health diagnostic space is the dearth of training sample data from historically marginalized groups. SCOUTS collaborated with the Data 4 Black Lives community and CBSD experts to produce qualitative and quantitative causal theories for the data gap problem. The theories include critical factors that make up the broader societal context surrounding health diagnostics, including cultural memory of death and trust in medical care.

The figure below depicts the causal theory generated during the collaboration described above as a CLD. It hypothesizes that trust in medical care influences all parts of this complex system and is the key lever for increasing screening, which in turn generates data to overcome the data diversity gap.

Causal loop diagram of the health diagnostics data gap.
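CLDs are commonly analyzed by treating each arrow as a signed link and multiplying the signs along a path or loop to find its net polarity; applied to a closed loop, the same rule distinguishes reinforcing (+) from balancing (-) feedback. The sketch below applies it to a hypothetical, much-simplified reading of the chain stated in the text (trust increases screening, screening generates data, and data closes the diversity gap). The variable names and signs are assumptions for illustration, not a transcription of the community’s diagram.

```python
# Hypothetical, simplified encoding of the causal chain described in the text.
# +1 means cause and effect move in the same direction; -1 means opposite directions.
LINKS = {
    ("trust in medical care", "screening"): +1,
    ("screening", "diagnostic data collected"): +1,
    ("diagnostic data collected", "data diversity gap"): -1,
}


def path_polarity(path):
    """Multiply link signs along consecutive variables to get the net effect (+1 or -1)."""
    sign = 1
    for cause, effect in zip(path, path[1:]):
        sign *= LINKS[(cause, effect)]
    return sign


path = ["trust in medical care", "screening",
        "diagnostic data collected", "data diversity gap"]
print(path_polarity(path))  # -1: more trust, smaller data diversity gap
```

The negative net polarity matches the hypothesis in the text: raising trust in medical care is expected to shrink, not widen, the data diversity gap.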

These community-sourced causal theories are a first step toward bridging the problem understanding chasm with trustworthy societal context knowledge.

Conclusion

As discussed in this blog, the problem understanding chasm is a critical open challenge in responsible AI. SCOUTS conducts exploratory and applied research in collaboration with other teams within Google Research, external community partners, and academic partners across multiple disciplines to make meaningful progress in solving it. Going forward, our work will focus on three key elements, guided by our AI Principles:

- Increase awareness and understanding of the problem understanding chasm and its implications through talks, publications, and training.

- Conduct foundational and applied research for representing and integrating societal context knowledge into AI product development tools and workflows, from conception to monitoring, evaluation and adaptation.

- Apply community-based causal modeling methods to the AI health equity domain to realize impact and to build society’s and Google’s capability to produce and leverage global-scale societal context knowledge to realize responsible AI.

SCOUTS flywheel for bridging the problem understanding chasm.

Acknowledgments

Thank you to John Guilyard for graphics development, to everyone in SCOUTS, and to all of our collaborators and sponsors.