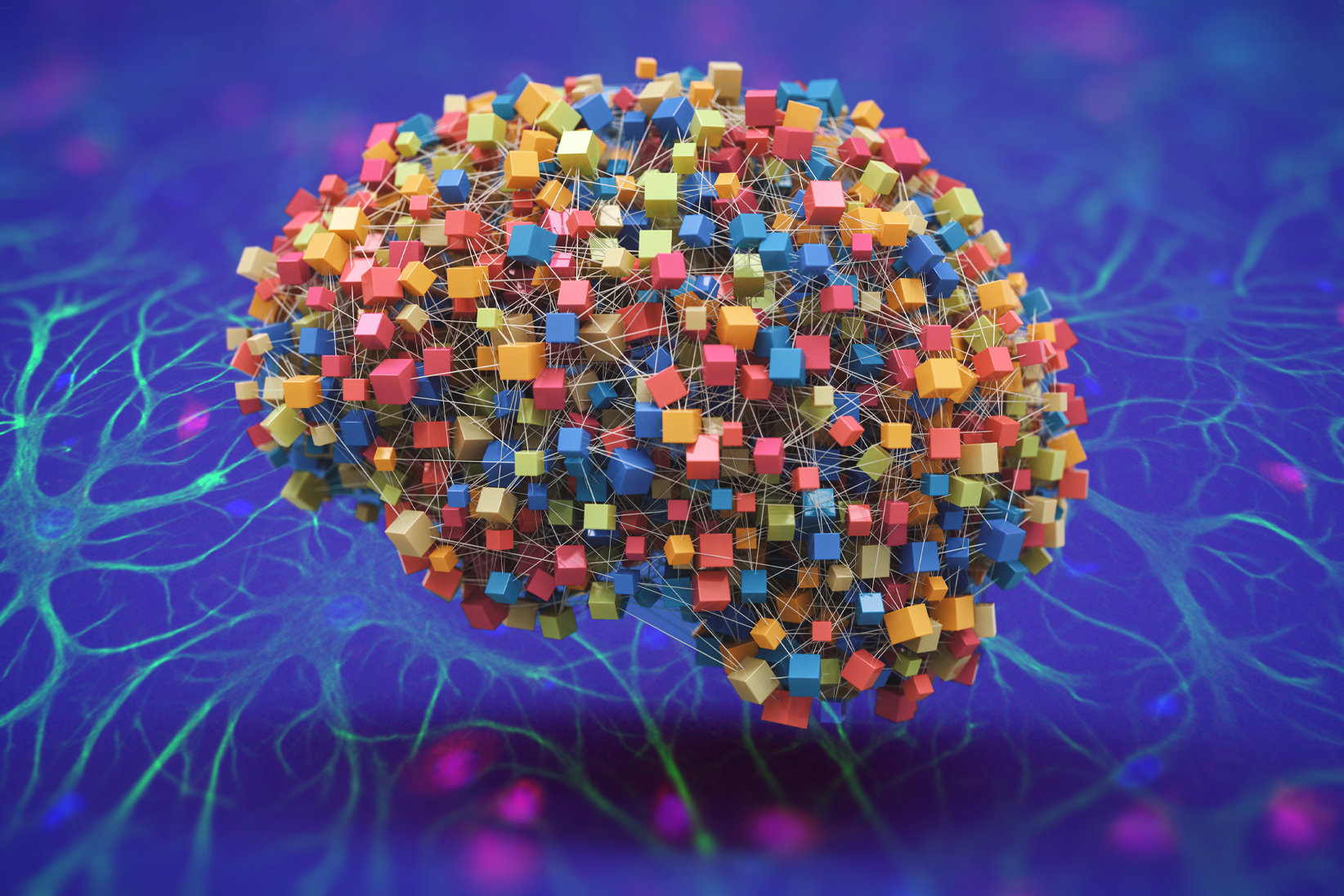

Artificial neural networks, ubiquitous machine-learning models that can be trained to complete many tasks, are so named because their architecture is inspired by the way biological neurons process information in the human brain.

About six years ago, scientists discovered a new type of more powerful neural network model known as a transformer. These models can achieve unprecedented performance, such as by generating text from prompts with near-human-like accuracy. A transformer underlies AI systems such as ChatGPT and Bard, for example. While incredibly effective, transformers are also mysterious: Unlike with other brain-inspired neural network models, it hasn’t been clear how to build them using biological components.

Now, researchers from MIT, the MIT-IBM Watson AI Lab, and Harvard Medical School have produced a hypothesis that may explain how a transformer could be built using biological elements in the brain. They suggest that a biological network composed of neurons and other brain cells called astrocytes could perform the same core computation as a transformer.

Recent research has shown that astrocytes, non-neuronal cells that are abundant in the brain, communicate with neurons and play a role in some physiological processes, like regulating blood flow. But scientists still lack a clear understanding of what these cells do computationally.

With the new study, published this week in open-access format in the Proceedings of the National Academy of Sciences, the researchers explored the role astrocytes play in the brain from a computational perspective, and crafted a mathematical model that shows how they could be used, along with neurons, to build a biologically plausible transformer.

Their hypothesis provides insights that could spark future neuroscience research into how the human brain works. At the same time, it could help machine-learning researchers explain why transformers are so successful across a diverse set of complex tasks.

“The brain is far superior to even the best artificial neural networks that we have developed, but we don’t really know exactly how the brain works. There is scientific value in thinking about connections between biological hardware and large-scale artificial intelligence networks. This is neuroscience for AI and AI for neuroscience,” says Dmitry Krotov, a research staff member at the MIT-IBM Watson AI Lab and senior author of the research paper.

Joining Krotov on the paper are lead author Leo Kozachkov, a postdoc in the MIT Department of Brain and Cognitive Sciences; and Ksenia V. Kastanenka, an assistant professor of neurobiology at Harvard Medical School and an assistant investigator at the Massachusetts General Research Institute.

A biological impossibility becomes plausible

Transformers operate differently than other neural network models. For instance, a recurrent neural network trained for natural language processing would compare each word in a sentence to an internal state determined by the previous words. A transformer, on the other hand, compares all the words in the sentence at once to generate a prediction, a process called self-attention.
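To make the idea of comparing all the words at once concrete, here is a minimal sketch of self-attention in plain Python. It is an illustration only, not the researchers' model: the word vectors are made-up numbers, and each word serves as its own query, key, and value, whereas a real transformer first applies learned projection matrices.

```python
import math

def softmax(scores):
    """Turn raw similarity scores into weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product, used here as the similarity between two word vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(words):
    """Compare every word vector with every word vector in the sentence.

    Simplifying assumption: queries, keys, and values are the word vectors
    themselves, with no learned projections.
    """
    scale = math.sqrt(len(words[0]))
    outputs = []
    for query in words:
        # One weight per word in the sentence, computed in a single pass.
        weights = softmax([dot(query, key) / scale for key in words])
        # Each output is a weighted blend of all the word vectors.
        mixed = [sum(w * value[i] for w, value in zip(weights, words))
                 for i in range(len(query))]
        outputs.append(mixed)
    return outputs

# Hypothetical 2-dimensional embeddings for a three-word sentence.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(sentence)
```

Note that every output depends on the whole sentence, which is why all the words have to be available simultaneously.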

For self-attention to work, the transformer must keep all the words ready in some form of memory, Krotov explains, but this didn’t seem biologically possible due to the way neurons communicate.

However, a few years ago scientists studying a slightly different type of machine-learning model (known as a Dense Associative Memory) realized that this self-attention mechanism could occur in the brain, but only if there were communication between at least three neurons.

“The number three really popped out to me because it is known in neuroscience that these cells called astrocytes, which are not neurons, form three-way connections with neurons, what are called tripartite synapses,” Kozachkov says.

When two neurons communicate, a presynaptic neuron sends chemicals called neurotransmitters across the synapse that connects it to a postsynaptic neuron. Sometimes, an astrocyte is also connected: it wraps a long, thin tentacle around the synapse, creating a tripartite (three-part) synapse. One astrocyte may form hundreds of thousands of tripartite synapses.

The astrocyte collects some neurotransmitters that flow through the synaptic junction. At some point, the astrocyte can signal back to the neurons. Because astrocytes operate on a much longer time scale than neurons (they create signals by slowly elevating their calcium response and then decreasing it), these cells can hold and integrate information communicated to them from neurons. In this way, astrocytes can form a type of memory buffer, Krotov says.
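The role of time scale can be illustrated with a toy leaky integrator. This sketch is not the biophysical model from the paper; the function name, the time constants, and the input signal are all assumptions chosen only to show why a slowly decaying unit retains a trace of past input long after a fast one has forgotten it.

```python
def leaky_trace(inputs, time_constant, dt=1.0):
    """Integrate an input signal with exponential decay (a leaky integrator)."""
    level = 0.0
    trace = []
    for x in inputs:
        # Euler step: decay toward zero at rate 1/time_constant, driven by x.
        level += dt * (-level / time_constant + x)
        trace.append(level)
    return trace

# A brief burst of input followed by a long silence.
signal = [1.0] * 5 + [0.0] * 45

# Hypothetical time constants: a fast, neuron-like unit and a slow,
# astrocyte-like unit.
fast = leaky_trace(signal, time_constant=2.0)
slow = leaky_trace(signal, time_constant=50.0)

print(f"fast unit after silence: {fast[-1]:.6f}")
print(f"slow unit after silence: {slow[-1]:.6f}")
```

After the silence, the fast unit has decayed to essentially zero while the slow unit still carries much of the burst, which is the buffer-like behavior described above.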

“If you think about it from that perspective, then astrocytes are extremely natural for precisely the computation we need to perform the attention operation inside transformers,” he adds.

Building a neuron-astrocyte network

With this insight, the researchers formed their hypothesis that astrocytes could play a role in how transformers compute. Then they set out to build a mathematical model of a neuron-astrocyte network that would operate like a transformer.

They took the core mathematics that comprise a transformer and developed simple biophysical models of what astrocytes and neurons do when they communicate in the brain, based on a deep dive into the literature and guidance from neuroscientist collaborators.

Then they combined the models in certain ways until they arrived at an equation of a neuron-astrocyte network that describes a transformer’s self-attention.

“Sometimes, we found that certain things we wanted to be true couldn’t be plausibly implemented. So, we had to think of workarounds. There are some things in the paper that are very careful approximations of the transformer architecture to be able to match it in a biologically plausible way,” Kozachkov says.

Through their analysis, the researchers showed that their biophysical neuron-astrocyte network theoretically matches a transformer. In addition, they conducted numerical simulations by feeding images and paragraphs of text to transformer models and comparing the responses to those of their simulated neuron-astrocyte network. Both responded to the prompts in similar ways, confirming their theoretical model.

“Having remained electrically silent for over a century of brain recordings, astrocytes are one of the most abundant, yet less explored, cells in the brain. The potential of unleashing the computational power of the other half of our brain is enormous,” says Konstantinos Michmizos, associate professor of computer science at Rutgers University, who was not involved with this work. “This study opens up a fascinating iterative loop, from understanding how intelligent behavior may truly emerge in the brain, to translating disruptive hypotheses into new tools that exhibit human-like intelligence.”

The next step for the researchers is to make the leap from theory to practice. They hope to compare the model’s predictions to those that have been observed in biological experiments, and use this knowledge to refine, or possibly disprove, their hypothesis.

In addition, one implication of their study is that astrocytes may be involved in long-term memory, since the network needs to store information to be able to act on it in the future. Additional research could investigate this idea further, Krotov says.

“For a lot of reasons, astrocytes are extremely important for cognition and behavior, and they operate in fundamentally different ways from neurons. My biggest hope for this paper is that it catalyzes a bunch of research in computational neuroscience toward glial cells, and in particular, astrocytes,” adds Kozachkov.

This research was supported, in part, by the BrightFocus Foundation and the National Institutes of Health.