The US acted aggressively last year to limit China’s ability to develop artificial intelligence for military purposes, blocking the sale there of the most advanced US chips used to train AI systems.

Big advances in the chips used to develop generative AI have meant that the latest US technology on sale in China is more powerful than anything available before. That is despite the fact that the chips have been deliberately hobbled for the Chinese market to limit their capabilities, making them less effective than products available elsewhere in the world.

The result has been soaring Chinese orders for the latest advanced US processors. China’s leading Internet companies have placed orders for $5 billion worth of chips from Nvidia, whose graphics processing units have become the workhorse for training large AI models.

The impact of soaring global demand for Nvidia’s products is likely to underpin the chipmaker’s second-quarter financial results, due to be announced on Wednesday.

Besides reflecting demand for improved chips to train the Internet companies’ latest large language models, the rush has also been prompted by worries that the US might tighten its export controls further, making even these limited products unavailable in the future.

However, Bill Dally, Nvidia’s chief scientist, suggested that the US export controls would have a greater impact in the future.

“As training requirements [for the most advanced AI systems] continue to double every six to 12 months,” the gap between chips sold in China and those available in the rest of the world “will grow quickly,” he said.

Capping processing speeds

Last year’s US export controls on chips were part of a package that included preventing Chinese customers from buying the equipment needed to make advanced chips.

Washington set a cap on the maximum processing speed of chips that could be sold in China, as well as on the rate at which the chips can transfer data. The transfer rate is a critical factor when it comes to training large AI models, a data-intensive job that requires connecting large numbers of chips together.

Nvidia responded by cutting the data transfer rate on its A100 processors, at the time its top-of-the-line GPUs, creating a new product for China called the A800 that satisfied the export controls.

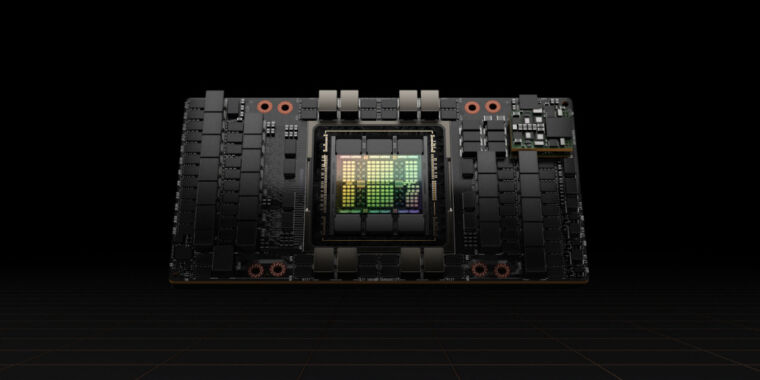

This year, it has followed with data transfer limits on its H100, a new and far more powerful processor that was specially designed to train large language models, creating a version called the H800 for the Chinese market.

The chipmaker has not disclosed the technical capabilities of the made-for-China processors, but computer makers have been open about the details. Lenovo, for instance, advertises servers containing H800 chips that it says are identical in every way to H100s sold elsewhere in the world, except that they have a transfer rate of only 400 gigabytes per second.

That is below the 600GB/s limit the US has set for chip exports to China. By comparison, Nvidia has said its H100, which it began shipping to customers earlier this year, has a transfer rate of 900GB/s.

The lower transfer rate in China means that users of the chips there face longer training times for their AI systems than Nvidia’s customers elsewhere in the world, an important limitation as the models have grown in size.

The longer training times raise costs since chips will need to consume more power, one of the biggest expenses with large models.

However, even with these limits, the H800 chips on sale in China are more powerful than anything available anywhere else before this year, leading to the huge demand.

The H800 chips are five times faster than the A100 chips that had been Nvidia’s most powerful GPUs, according to Patrick Moorhead, a US chip analyst at Moor Insights & Strategy.

That means that Chinese Internet companies that trained their AI models using top-of-the-line chips bought before the US export controls can still expect big improvements by buying the latest semiconductors, he said.

“It appears the US government wants to not shut down China’s AI effort, but make it harder,” said Moorhead.

Cost-benefit

Many Chinese tech companies are still at the stage of pre-training large language models, which consumes a lot of performance from individual GPU chips and demands a high degree of data transfer capability.

Only Nvidia’s chips can provide the efficiency needed for pre-training, say Chinese AI engineers. The individual chip performance of the 800 series, despite the weakened transfer speeds, is still ahead of others on the market.

“Nvidia’s GPUs may seem expensive but are, in fact, the most cost-effective option,” said one AI engineer at a leading Chinese Internet company.

Other GPU vendors quoted lower prices with more timely service, the engineer said, but the company judged that the training and development costs would rack up and that it would have the extra burden of uncertainty.

Nvidia’s offering includes the software ecosystem, with its computing platform Compute Unified Device Architecture, or Cuda, that it set up in 2006 and that has become part of the AI infrastructure.

Industry analysts believe that Chinese companies may soon face limitations in the speed of interconnections between the 800-series chips. This could hinder their ability to deal with the increasing amount of data required for AI training, and they will be hampered as they delve deeper into researching and developing large language models.

Charlie Chai, a Shanghai-based analyst at 86Research, compared the situation with building many factories with congested motorways between them. Even companies that can accommodate the weakened chips might face problems within the next two or three years, he added.

© 2023 The Financial Times Ltd. All rights reserved. Please do not copy and paste FT articles and redistribute by email or post to the web.