This story originally appeared in The Algorithm, our weekly newsletter on AI.

I’m back from a wholesome week off picking blueberries in a forest. So this story we published last week about the messy ethics of AI in warfare is just the antidote, bringing my blood pressure right back up again.

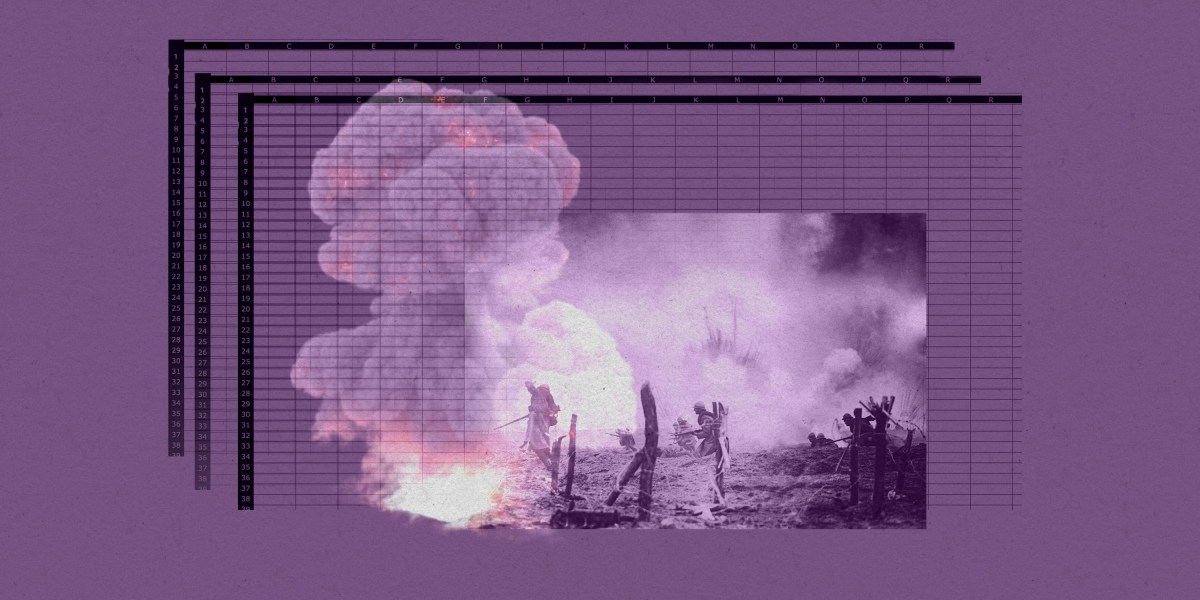

Arthur Holland Michel does a great job looking at the complicated and nuanced ethical questions around warfare and the military’s increasing use of artificial-intelligence tools. There are myriad ways AI could fail catastrophically or be abused in conflict situations, and there don’t seem to be any real rules constraining it yet. Holland Michel’s story illustrates how little there is to hold people accountable when things go wrong.

Last year I wrote about how the war in Ukraine kick-started a new boom in business for defense AI startups. The latest hype cycle has only added to that, as companies, and now the military too, race to embed generative AI in products and services.

Earlier this month, the US Department of Defense announced it is setting up a Generative AI Task Force, aimed at “analyzing and integrating” AI tools such as large language models across the department.

The department sees lots of potential to “improve intelligence, operational planning, and administrative and business processes.”

But Holland Michel’s story highlights why the first two use cases might be a bad idea. Generative AI tools, such as language models, are glitchy and unpredictable, and they make things up. They also have massive security vulnerabilities, privacy problems, and deeply ingrained biases.

Applying these technologies in high-stakes settings could lead to deadly accidents where it’s unclear who or what should be held responsible, or even why the problem occurred. Everyone agrees that humans should make the final call, but that is made harder by technology that acts unpredictably, especially in fast-moving conflict situations.

Some worry that the people lowest in the hierarchy will pay the highest price when things go wrong: “In the event of an accident—regardless of whether the human was wrong, the computer was wrong, or they were wrong together—the person who made the ‘decision’ will absorb the blame and protect everyone else along the chain of command from the full impact of accountability,” Holland Michel writes.

The only ones who seem likely to face no consequences when AI fails in war are the companies supplying the technology.

It helps companies that the rules the US has set to govern AI in warfare are mere recommendations, not laws. That makes it really hard to hold anyone accountable. Even the AI Act, the EU’s sweeping upcoming regulation for high-risk AI systems, exempts military uses, which are arguably the highest-risk applications of them all.

While everyone is looking for exciting new uses for generative AI, I personally can’t wait for it to become boring.

Amid early signs that people are starting to lose interest in the technology, companies might find that these sorts of tools are better suited to mundane, low-risk applications than to solving humanity’s biggest problems.

Applying AI in, for example, productivity software such as Excel, email, or word processing might not be the sexiest idea, but compared with warfare it’s a relatively low-stakes application, and simple enough to have the potential to actually work as advertised. It could help us do the tedious bits of our jobs faster and better.

Boring AI is less likely to break and, most important, won’t kill anyone. Hopefully, we’ll soon forget we’re interacting with AI at all. (It wasn’t that long ago that machine translation was an exciting new thing in AI. Now most people don’t even think about its role in powering Google Translate.)

That’s why I’m more confident that organizations like the DoD will find success applying generative AI in administrative and business processes.

Boring AI is not morally complex. It’s not magic. But it works.

Deeper Learning

AI isn’t great at decoding human emotions. So why are regulators targeting the tech?

Amid all the chatter about ChatGPT, artificial general intelligence, and the prospect of robots taking people’s jobs, regulators in the EU and the US have been ramping up warnings against AI and emotion recognition. Emotion recognition is the attempt to identify a person’s feelings or state of mind using AI analysis of video, facial images, or audio recordings.

But why is this a top concern? Western regulators are particularly concerned about China’s use of the technology and its potential to enable social control. There’s also evidence that it simply doesn’t work properly. Tate Ryan-Mosley dissected the thorny questions around the technology in last week’s edition of The Technocrat, our weekly newsletter on tech policy.

Bits and Bytes

Meta is preparing to launch free code-generating software

A version of its new LLaMA 2 language model that can generate programming code will pose a stiff challenge to similar proprietary code-generating programs from rivals such as OpenAI, Microsoft, and Google. The open-source program is called Code Llama, and its launch is imminent, according to The Information. (The Information)

OpenAI is testing GPT-4 for content moderation

Using the language model to moderate online content could really help alleviate the mental toll content moderation takes on humans. OpenAI says it’s seen some promising first results, although the tech doesn’t outperform highly trained humans. Lots of big, open questions remain, such as whether the tool can be attuned to different cultures and pick up context and nuance. (OpenAI)

Google is working on an AI assistant that offers life advice

The generative AI tools could function as a life coach, offering up ideas, planning instructions, and tutoring tips. (The New York Times)

Two tech luminaries have quit their jobs to build AI systems inspired by bees

Sakana, a new AI research lab, draws inspiration from the animal kingdom. Founded by two prominent industry researchers and former Googlers, the company plans to make multiple smaller AI models that work together, the idea being that a “swarm” of programs could be as powerful as a single large AI model. (Bloomberg)