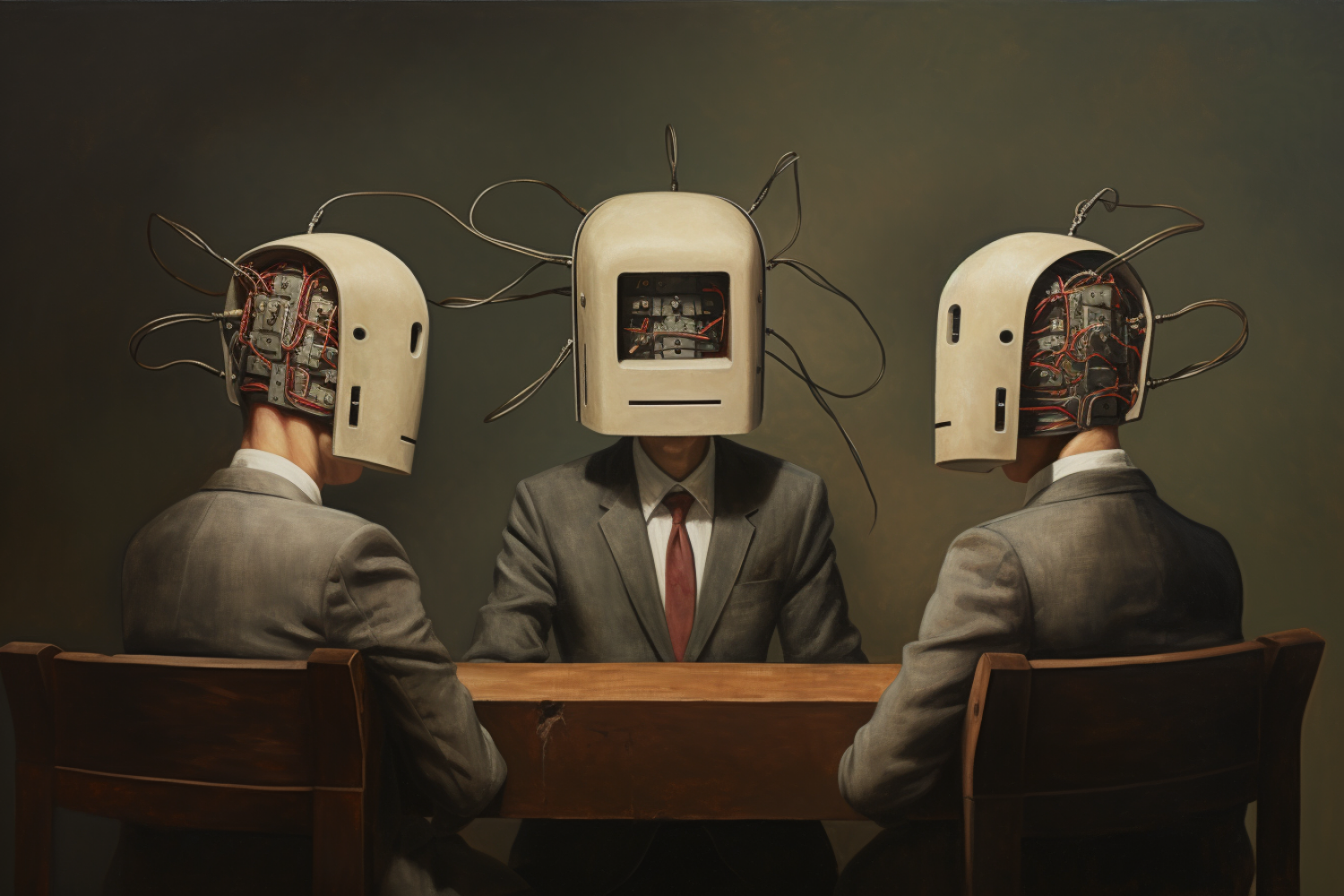

An age-old adage, often introduced to us during our adolescence, is designed to nudge us beyond our self-centered, nascent minds: “Two heads are better than one.” This proverb encourages collaborative thinking and highlights the power of shared intellect.

Fast forward to 2023, and we find that this wisdom holds true even in the realm of artificial intelligence: Multiple language models, working in concert, are better than one.

Recently, a team from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) embodied this ancient wisdom within the frontier of modern technology. They introduced a strategy that leverages multiple AI systems to discuss and argue with each other to converge on a best-possible answer to a given question. This method empowers these expansive language models to heighten their adherence to factual data and refine their decision-making.

The crux of the problem with large language models (LLMs) lies in the inconsistency of their generated responses, leading to potential inaccuracies and flawed reasoning. This new approach lets each agent actively assess every other agent’s responses, and uses this collective feedback to refine its own answer. In technical terms, the process consists of multiple rounds of response generation and critique. Each language model generates an answer to the given question, and then incorporates the feedback from all other agents to update its own response. This iterative cycle culminates in a final output from a majority vote across the models’ solutions. It somewhat mirrors the dynamics of a group discussion, where individuals contribute to reach a unified and well-reasoned conclusion.
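The rounds of generation and critique described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the team’s implementation: the name `debate`, the `Agent` callable type, and the toy agents are hypothetical, each agent is treated as a black-box function from text to text, and answers are compared by exact string match for the vote, which a real system would likely replace with some form of answer extraction.

```python
from collections import Counter
from typing import Callable, Sequence

# Assumption: an agent is any black-box function that takes the question and
# the other agents' latest answers, and returns its own (possibly revised) answer.
Agent = Callable[[str, Sequence[str]], str]


def debate(question: str, agents: Sequence[Agent], rounds: int = 2) -> str:
    """Run several rounds of answer-and-critique, then take a majority vote."""
    if not agents:
        raise ValueError("at least one agent is required")

    # Initial round: every agent answers independently, seeing no peers.
    answers = [agent(question, []) for agent in agents]

    # Debate rounds: each agent sees all *other* agents' previous answers
    # and may update its own. All updates use the previous round's answers.
    for _ in range(rounds):
        answers = [
            agent(question, answers[:i] + answers[i + 1:])
            for i, agent in enumerate(agents)
        ]

    # Final output: majority vote (ties go to the earliest-listed answer).
    return Counter(answers).most_common(1)[0][0]


# Toy stand-ins for language models, for illustration only.
def stubborn(question: str, peers: Sequence[str]) -> str:
    return "4"


def swayable(question: str, peers: Sequence[str]) -> str:
    # Starts with a wrong answer, then adopts whatever most peers said.
    return Counter(peers).most_common(1)[0][0] if peers else "5"


print(debate("What is 2 + 2?", [stubborn, stubborn, swayable]))  # prints 4
```

Because the loop only passes text in and reads text out, the same structure would apply to any model that can be called as a function, without access to its internals.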

One real strength of the approach lies in its seamless application to existing black-box models. As the methodology revolves around generating text, it can also be implemented across various LLMs without needing access to their internal workings. This simplicity, the team says, could help researchers and developers use the tool to improve the consistency and factual accuracy of language model outputs across the board.

“Employing a novel approach, we don’t simply rely on a single AI model for answers. Instead, our process enlists a multitude of AI models, each bringing unique insights to tackle a question. Although their initial responses may seem truncated or may contain errors, these models can sharpen and improve their own answers by scrutinizing the responses offered by their counterparts,” says Yilun Du, an MIT PhD student in electrical engineering and computer science, affiliate of MIT CSAIL, and lead author on a new paper about the work. “As these AI models engage in discourse and deliberation, they’re better equipped to recognize and rectify issues, enhance their problem-solving abilities, and better verify the precision of their responses. Essentially, we’re cultivating an environment that compels them to delve deeper into the crux of a problem. This stands in contrast to a single, solitary AI model, which often parrots content found on the internet. Our method, however, actively stimulates the AI models to craft more accurate and comprehensive solutions.”

The research looked at mathematical problem-solving, including grade school and middle/high school math problems, and saw a significant boost in performance through the multi-agent debate procedure. Additionally, the language models showed enhanced abilities to generate accurate arithmetic evaluations, illustrating potential across different domains.

The method can also help address the issue of “hallucinations” that often plague language models. In an environment where agents critique each other’s responses, the agents were more incentivized to avoid spitting out random information and to prioritize factual accuracy.

Beyond its application to language models, the approach could also be used for integrating diverse models with specialized capabilities. By establishing a decentralized system where multiple agents interact and debate, researchers could potentially apply these comprehensive and efficient problem-solving abilities across various modalities like speech, video, or text.

While the methodology yielded encouraging results, the researchers say that existing language models may face challenges with processing very long contexts, and the critique abilities may not be as refined as desired. Furthermore, the multi-agent debate format, inspired by human group interaction, has yet to incorporate the more complex forms of discussion that contribute to intelligent collective decision-making, a crucial area for future exploration, the team says. Advancing the technique could involve a deeper understanding of the computational foundations behind human debates and discussions, and using those models to enhance or complement existing LLMs.

“Not only does this approach offer a pathway to elevate the performance of existing language models, but it also presents an automatic means of self-improvement. By utilizing the debate process as supervised data, language models can enhance their factuality and reasoning autonomously, reducing reliance on human feedback and offering a scalable approach to self-improvement,” says Du. “As researchers continue to refine and explore this approach, we can get closer to a future where language models not only mimic human-like language but also exhibit more systematic and reliable thinking, forging a new era of language understanding and application.”

“It makes so much sense to use a deliberative process to improve the model’s overall output, and it’s a big step forward from chain-of-thought prompting,” says Anca Dragan, associate professor at the University of California at Berkeley’s Department of Electrical Engineering and Computer Sciences, who was not involved in the work. “I’m excited about where this can go next. Can people better judge the answers coming out of LLMs when they see the deliberation, whether or not it converges? Can people arrive at better answers by themselves deliberating with an LLM? Can a similar idea be used to help a user probe an LLM’s answer in order to arrive at a better one?”

Du wrote the paper with three CSAIL affiliates: Shuang Li SM ’20, PhD ’23; MIT professor of electrical engineering and computer science Antonio Torralba; and MIT professor of computational cognitive science and Center for Brains, Minds, and Machines member Joshua Tenenbaum. Google DeepMind researcher Igor Mordatch was also a co-author.