Generative AI has been the biggest technology story of 2023. Almost everybody’s played with ChatGPT, Stable Diffusion, GitHub Copilot, or Midjourney. A few have even tried out Bard or Claude, or run LLaMA¹ on their laptop. And everyone has opinions about how these language models and art generation programs are going to change the nature of work, usher in the singularity, or perhaps even doom the human race. In enterprises, we’ve seen everything from wholesale adoption to policies that severely restrict or even forbid the use of generative AI.

What’s the reality? We wanted to find out what people are actually doing, so in September we surveyed O’Reilly’s users. Our survey focused on how companies use generative AI, what bottlenecks they see in adoption, and what skills gaps need to be addressed.


Executive Summary

We’ve never seen a technology adopted as fast as generative AI; it’s hard to believe that ChatGPT is barely a year old. As of November 2023:

- Two-thirds (67%) of our survey respondents report that their companies are using generative AI.

- AI users say that AI programming (66%) and data analysis (59%) are the most needed skills.

- Many AI adopters are still in the early stages. 26% have been working with AI for under a year. But 18% already have applications in production.

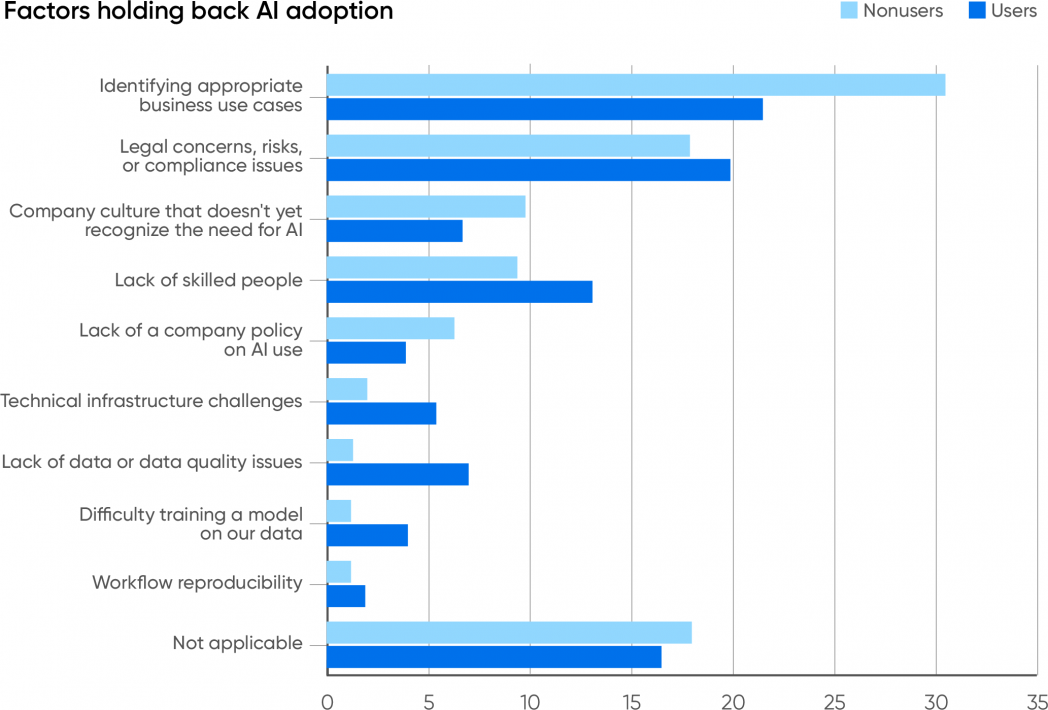

- Difficulty finding appropriate use cases is the biggest bar to adoption for both users and nonusers.

- 16% of respondents working with AI are using open source models.

- Unexpected outcomes, security, safety, fairness and bias, and privacy are the biggest risks for which adopters are testing.

- 54% of AI users expect AI’s biggest benefit will be greater productivity. Only 4% pointed to lower head counts.

Is generative AI at the top of the hype curve? We see plenty of room for growth, particularly as adopters discover new use cases and reimagine how they do business.

Users and Nonusers

AI adoption is in the process of becoming widespread, but it’s still not universal. Two-thirds of our survey’s respondents (67%) report that their companies are using generative AI. 41% say their companies have been using AI for a year or more; 26% say their companies have been using AI for less than a year. And only 33% report that their companies aren’t using AI at all.

Generative AI users represent a two-to-one majority over nonusers, but what does that mean? If we asked whether their companies were using databases or web servers, no doubt 100% of the respondents would have said “yes.” Until AI reaches 100%, it’s still in the process of adoption. ChatGPT was opened to the public on November 30, 2022, roughly a year ago; the art generators, such as Stable Diffusion and DALL-E, are somewhat older. A year after the first web servers became available, how many companies had websites or were experimenting with building them? Certainly not two-thirds of them. Looking only at AI users, over a third (38%) report that their companies have been working with AI for less than a year and are almost certainly still in the early stages: they’re experimenting and working on proof-of-concept projects. (We’ll say more about this later.) Even with cloud-based foundation models like GPT-4, which eliminate the need to develop your own model or provide your own infrastructure, fine-tuning a model for any particular use case is still a major undertaking. We’ve never seen adoption proceed so quickly.
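The 38% figure is the under-a-year group (26% of all respondents) re-expressed as a share of users only (67% of all respondents). A quick check of that arithmetic, using the rounded percentages quoted above; the variable names are ours, and the survey’s unrounded data would shift the result slightly:

```python
# Rounded shares of ALL respondents, as quoted in the text.
users_total = 67        # companies using generative AI
users_under_year = 26   # using AI for less than a year
users_year_plus = 41    # using AI for a year or more

# The two user groups should add up to the user total.
assert users_under_year + users_year_plus == users_total

# Re-express the newcomers as a share of users only.
newcomer_share = 100 * users_under_year / users_total
print(f"{newcomer_share:.1f}% of AI users started within the last year")
```

With rounded inputs the result lands between 38% and 39%, which agrees with the reported figure once rounding of the inputs is taken into account.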

When 26% of a survey’s respondents have been working with a technology for under a year, that’s an important sign of momentum. Yes, it’s conceivable that AI, and specifically generative AI, could be at the peak of the hype cycle, as Gartner has argued. We don’t believe that, even though the failure rate for many of these new projects is undoubtedly high. But while the rush to adopt AI has plenty of momentum, AI will still have to prove its value to those new adopters, and soon. Its adopters expect returns, and if not, well, AI has experienced many “winters” in the past. Are we at the top of the adoption curve, with nowhere to go but down? Or is there still room for growth?

We believe there’s a lot of headroom. Training models and developing complex applications on top of those models is becoming easier. Many of the new open source models are much smaller and not as resource intensive but still deliver good results (especially when trained for a specific application). Some can easily be run on a laptop or even in a web browser. A healthy tools ecosystem has grown up around generative AI, and, as was said about the California Gold Rush, if you want to see who’s making money, don’t look at the miners; look at the people selling shovels. Automating the process of building complex prompts has become common, with patterns like retrieval-augmented generation (RAG) and tools like LangChain. And there are tools for archiving and indexing prompts for reuse, vector databases for retrieving documents that an AI can use to answer a question, and much more. We’re already moving into the second (if not the third) generation of tooling. A roller-coaster ride into Gartner’s “trough of disillusionment” is unlikely.
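To make the RAG pattern concrete, here is a minimal sketch of its two steps: retrieve the documents most relevant to a question, then build a prompt around them. It is a toy under stated assumptions, not how LangChain or any vector database works internally: the function names and the tiny document list are hypothetical, and simple word-count similarity stands in for the learned embeddings a real system would use.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, documents, k=2):
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(documents,
                    key=lambda d: cosine(q, Counter(tokenize(d))),
                    reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=2):
    """Assemble a prompt that places retrieved context ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")

# Hypothetical document store; a real system would use a vector database.
DOCS = [
    "ChatGPT was opened to the public on November 30, 2022.",
    "Llama 2 is offered as a service on Google Cloud and Microsoft Azure.",
    "Prompt injection is a vulnerability specific to generative AI.",
]

prompt = build_prompt("When was ChatGPT opened to the public?", DOCS, k=1)
print(prompt)  # this string would then be sent to a language model
```

The point of the pattern is that the model answers from the retrieved text rather than from whatever it memorized in training, which is why the retrieval step matters as much as the model.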

What’s Holding AI Back?

It was important for us to learn why companies aren’t using AI, so we asked respondents whose companies aren’t using AI a single obvious question: “Why isn’t your company using AI?” We asked a similar question to users who said their companies are using AI: “What’s the main bottleneck holding back further AI adoption?” Both groups were asked to select from the same group of answers. The most common reason, by a significant margin, was difficulty finding appropriate business use cases (31% for nonusers, 22% for users). We could argue that this reflects a lack of imagination, but that’s not only ungracious, it also presumes that applying AI everywhere without careful thought is a good idea. The consequences of “Move fast and break things” are still playing out across the world, and it isn’t pretty. Badly thought-out and poorly implemented AI solutions can be damaging, so most companies should think carefully about how to use AI appropriately. We’re not encouraging skepticism or fear, but companies should start AI products with a clear understanding of the risks, especially those risks that are specific to AI. What use cases are appropriate, and what aren’t? The ability to distinguish between the two is important, and it’s an issue for both companies that use AI and companies that don’t. We also have to recognize that many of these use cases will challenge traditional ways of thinking about businesses. Recognizing use cases for AI and understanding how AI allows you to reimagine the business itself will go hand in hand.

The second most common reason was concern about legal issues, risk, and compliance (18% for nonusers, 20% for users). This worry certainly belongs to the same story: risk has to be considered when thinking about appropriate use cases. The legal consequences of using generative AI are still unknown. Who owns the copyright for AI-generated output? Can the creation of a model violate copyright, or is it a “transformative” use that’s protected under US copyright law? We don’t know right now; the answers will be worked out in the courts in the years to come. There are other risks too, including reputational damage when a model generates inappropriate output, new security vulnerabilities, and many more.

Another piece of the same puzzle is the lack of a policy for AI use. Such policies would be designed to mitigate legal problems and require regulatory compliance. This isn’t as significant an issue; it was cited by 6.3% of users and 3.9% of nonusers. Corporate policies on AI use will be appearing and evolving over the next year. (At O’Reilly, we have just put our policy for workplace use into place.) Late in 2023, we suspect that relatively few companies have a policy. And of course, companies that don’t use AI don’t need an AI use policy. But it’s important to think about which is the cart and which is the horse. Does the lack of a policy prevent the adoption of AI? Or are individuals adopting AI on their own, exposing the company to unknown risks and liabilities? Among AI users, the absence of company-wide policies isn’t holding back AI use; that’s self-evident. But this probably isn’t a good thing. Again, AI brings with it risks and liabilities that should be addressed rather than ignored. Willful ignorance can only lead to unfortunate consequences.

Another factor holding back the use of AI is a company culture that doesn’t recognize the need (9.8% for nonusers, 6.7% for users). In some respects, not recognizing the need is similar to not finding appropriate business use cases. But there’s also an important difference: the word “appropriate.” AI entails risks, and finding use cases that are appropriate is a legitimate concern. A culture that doesn’t recognize the need is dismissive and could indicate a lack of imagination or forethought: “AI is just a fad, so we’ll just continue doing what has always worked for us.” Is that the issue? It’s hard to imagine a business where AI couldn’t be put to use, and it can’t be healthy to a company’s long-term success to ignore that promise.

We’re sympathetic to companies that worry about the lack of skilled people, an issue that was reported by 9.4% of nonusers and 13% of users. People with AI skills have always been hard to find and are often expensive. We don’t expect that situation to change much in the near future. While experienced AI developers are starting to leave powerhouses like Google, OpenAI, Meta, and Microsoft, not enough are leaving to meet demand, and most of them will probably gravitate to startups rather than adding to the AI talent within established companies. However, we’re also surprised that this issue doesn’t figure more prominently. Companies that are adopting AI are clearly finding staff somewhere, whether through hiring or training their existing staff.

A small percentage (3.7% of nonusers, 5.4% of users) report that “infrastructure issues” are an issue. Yes, building AI infrastructure is difficult and expensive, and it isn’t surprising that the AI users feel this problem more keenly. We’ve all read about the shortage of the high-end GPUs that power models like ChatGPT. This is an area where cloud providers already bear much of the burden, and will continue to bear it in the future. Right now, very few AI adopters maintain their own infrastructure; most are shielded from infrastructure issues by their providers. In the long term, these issues may slow AI adoption. We suspect that many API services are being offered as loss leaders, with the major providers intentionally setting prices low to buy market share. That pricing won’t be sustainable, particularly as hardware shortages drive up the cost of building infrastructure. How will AI adopters react when the cost of renting infrastructure from AWS, Microsoft, or Google rises? Given the cost of equipping a data center with high-end GPUs, they probably won’t attempt to build their own infrastructure. But they may back off on AI development.

Few nonusers (2%) report that lack of data or data quality is an issue, and only 1.3% report that the difficulty of training a model is a problem. In hindsight, this was predictable: these are problems that only appear after you’ve started down the road to generative AI. AI users are definitely facing these problems: 7% report that data quality has hindered further adoption, and 4% cite the difficulty of training a model on their data. But while data quality and the difficulty of training a model are clearly important issues, they don’t appear to be the biggest barriers to building with AI. Developers are learning how to find quality data and build models that work.

How Companies Are Using AI

We asked several specific questions about how respondents are working with AI, and whether they’re “using” it or just “experimenting.”

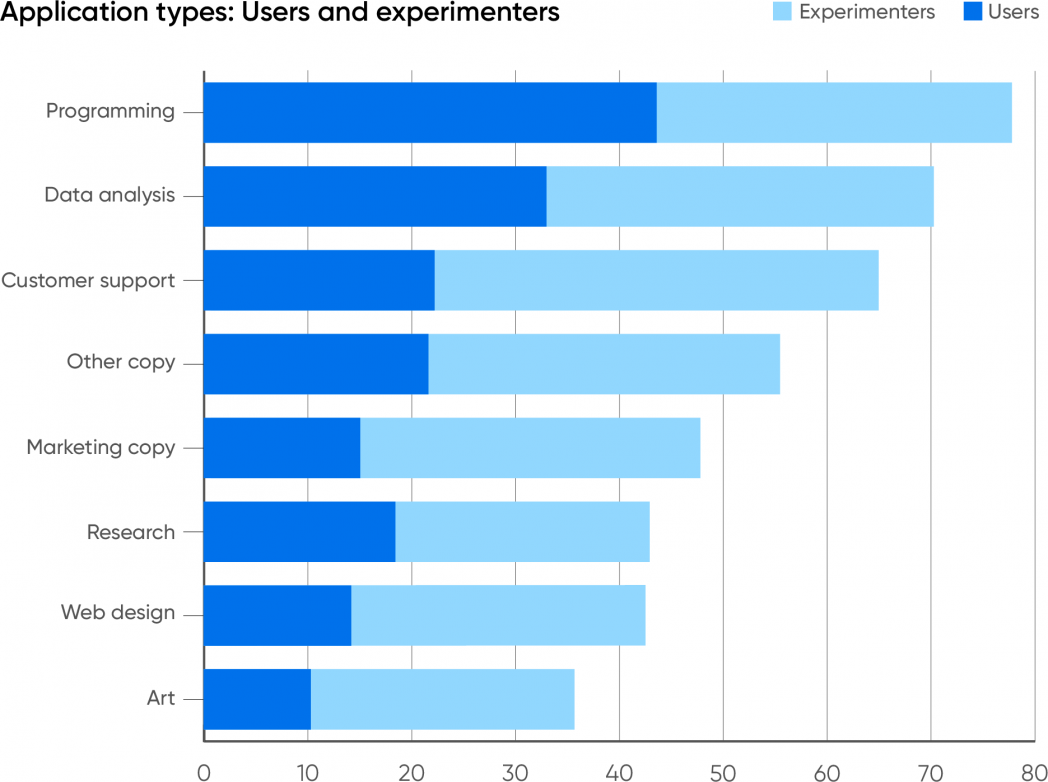

We aren’t surprised that the most common application of generative AI is in programming, using tools like GitHub Copilot or ChatGPT. However, we are surprised at the level of adoption: 77% of respondents report using AI as an aid in programming; 34% are experimenting with it, and 44% are already using it in their work. Data analysis showed a similar pattern: 70% total; 32% using AI, 38% experimenting with it. The higher percentage of users that are experimenting may reflect OpenAI’s addition of Advanced Data Analysis (formerly Code Interpreter) to ChatGPT’s repertoire of beta features. Advanced Data Analysis does a decent job of exploring and analyzing datasets, though we expect data analysts to be careful about checking AI’s output and to distrust software that’s labeled as “beta.”

Using generative AI tools for tasks related to programming (including data analysis) is nearly universal. It will certainly become universal for organizations that don’t explicitly prohibit its use. And we expect that programmers will use AI even in organizations that prohibit its use. Programmers have always developed tools that would help them do their jobs, from test frameworks to source control to integrated development environments. And they’ve always adopted these tools whether or not they had management’s permission. From a programmer’s perspective, code generation is just another labor-saving tool that keeps them productive in a job that’s constantly becoming more complex. In the early 2000s, some studies of open source adoption found that a large majority of staff said that they were using open source, even though a large majority of CIOs said their companies weren’t. Clearly those CIOs either didn’t know what their employees were doing or were willing to look the other way. We’ll see that pattern repeat itself: programmers will do what’s necessary to get the job done, and managers will be blissfully unaware as long as their teams are more productive and goals are being met.

After programming and data analysis, the next most common use for generative AI was applications that interact with customers, including customer support: 65% of all respondents report that their companies are experimenting with (43%) or using AI (22%) for this purpose. While companies have long been talking about AI’s potential to improve customer support, we didn’t expect to see customer service rank so high. Customer-facing interactions are very risky: incorrect answers, bigoted or sexist behavior, and many other well-documented problems with generative AI quickly lead to damage that’s hard to undo. Perhaps that’s why such a large percentage of respondents are experimenting with this technology rather than using it (more than for any other kind of application). Any attempt at automating customer service needs to be very carefully tested and debugged. We interpret our survey results as “cautious but excited adoption.” It’s clear that automating customer service could go a long way to cut costs and even, if done well, make customers happier. No one wants to be left behind, but at the same time, no one wants a highly visible PR disaster or a lawsuit on their hands.

A moderate number of respondents report that their companies are using generative AI to generate copy (written text). 47% are using it specifically to generate marketing copy, and 56% are using it for other kinds of copy (internal memos and reports, for example). While rumors abound, we’ve seen few reports of people who have actually lost their jobs to AI, but those reports have been almost entirely from copywriters. AI isn’t yet at the point where it can write as well as an experienced human, but if your company needs catalog descriptions for hundreds of items, speed may be more important than polished prose. And there are many other applications for machine-generated text: AI is good at summarizing documents. When coupled with a speech-to-text service, it can do a passable job of creating meeting notes or even podcast transcripts. It’s also well suited to writing a quick email.

The applications of generative AI with the fewest users were web design (42% total; 28% experimenting, 14% using) and art (36% total; 25% experimenting, 11% using). This no doubt reflects O’Reilly’s developer-centric audience. However, several other factors are in play. First, there are already a lot of low-code and no-code web design tools, many of which feature AI but aren’t yet using generative AI. Generative AI will face significant entrenched competition in this crowded market. Second, while OpenAI’s GPT-4 announcement last March demoed generating website code from a hand-drawn sketch, that capability wasn’t available until after the survey closed. Third, while roughing out the HTML and JavaScript for a simple website makes a great demo, that isn’t really the problem web designers need to solve. They want a drag-and-drop interface that can be edited on-screen, something that generative AI models don’t yet have. Those applications will be built soon; tldraw is a very early example of what they might be. Design tools suitable for professional use don’t exist yet, but they will appear very soon.

An even smaller percentage of respondents say that their companies are using generative AI to create art. While we’ve read about startup founders using Stable Diffusion and Midjourney to create company or product logos on the cheap, that’s still a specialized application and something you don’t do frequently. But that isn’t all the art that a company needs: “hero images” for blog posts, designs for reports and whitepapers, edits to publicity photos, and more are all necessary. Is generative AI the answer? Perhaps not yet. Take Midjourney for example: while its capabilities are impressive, the tool can also make silly mistakes, like getting the number of fingers (or arms) on subjects incorrect. While the latest version of Midjourney is much better, it hasn’t been out for long, and many artists and designers would prefer not to deal with the errors. They’d also prefer to avoid legal liability. Among generative art vendors, Shutterstock, Adobe, and Getty Images indemnify users of their tools against copyright claims. Microsoft, Google, IBM, and OpenAI have offered more general indemnification.

We also asked whether the respondents’ companies are using AI to create some other kind of application, and if so, what. While many of these write-in applications duplicated features already available from big AI providers like Microsoft, OpenAI, and Google, others covered a very impressive range. Many of the applications involved summarization: news, legal documents and contracts, veterinary medicine, and financial information stand out. Several respondents also mentioned working with video: analyzing video data streams, video analytics, and generating or editing videos.

Other applications that respondents listed included fraud detection, teaching, customer relations management, human resources, and compliance, along with more predictable applications like chat, code generation, and writing. We can’t tally and tabulate all the responses, but it’s clear that there’s no shortage of creativity and innovation. It’s also clear that there are few industries that won’t be touched: AI will become an integral part of almost every profession.

Generative AI will take its place as the ultimate office productivity tool. When this happens, it may no longer be recognized as AI; it will just be a feature of Microsoft Office or Google Docs or Adobe Photoshop, all of which are integrating generative AI models. GitHub Copilot and Google’s Codey have both been integrated into Microsoft and Google’s respective programming environments. They will simply be part of the environment in which software developers work. The same thing happened to networking 20 or 25 years ago: wiring an office or a house for ethernet used to be a big deal. Now we expect wireless everywhere, and even that’s not correct. We don’t “expect” it; we assume it, and if it’s not there, it’s a problem. We expect mobile to be everywhere, including map services, and it’s a problem if you get lost in a location where the cell signals don’t reach. We expect search to be everywhere. AI will be the same. It won’t be expected; it will be assumed, and an important part of the transition to AI everywhere will be understanding how to work when it isn’t available.

The Builders and Their Tools

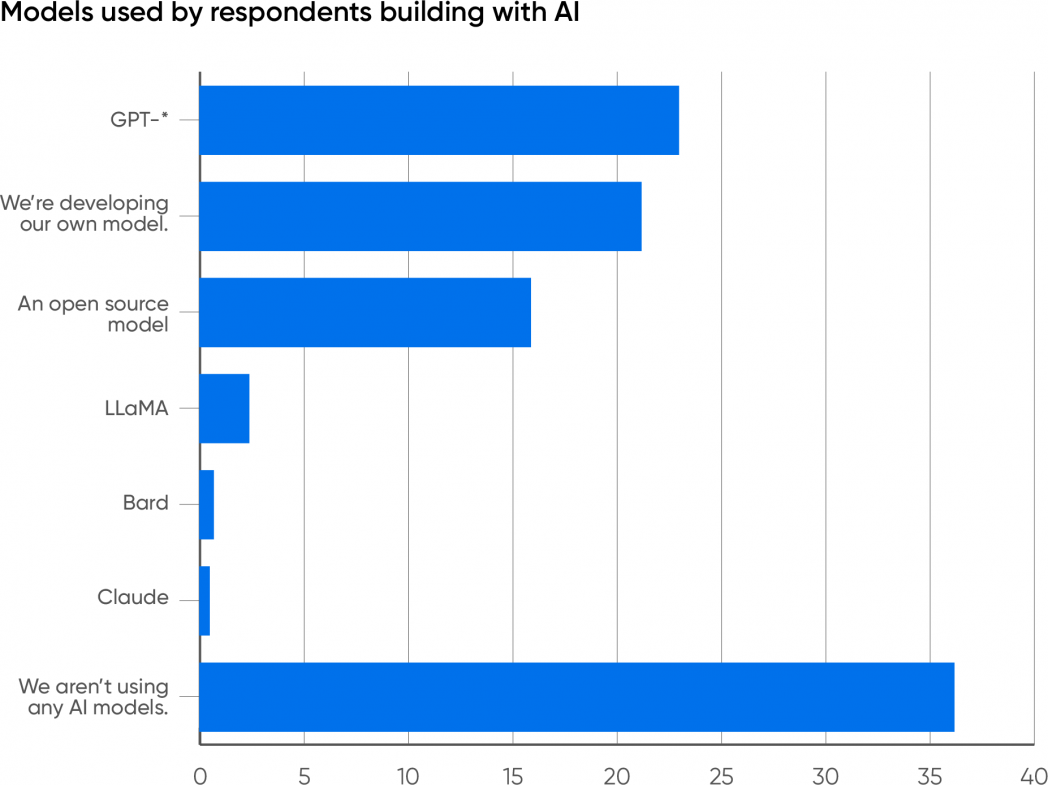

To get a different take on what our customers are doing with AI, we asked what models they’re using to build custom applications. 36% indicated that they aren’t building a custom application. Instead, they’re working with a prepackaged application like ChatGPT, GitHub Copilot, the AI features integrated into Microsoft Office and Google Docs, or something similar. The remaining 64% have shifted from using AI to developing AI applications. This transition represents a big leap forward: it requires investment in people, in infrastructure, and in education.

Which Model?

While the GPT models dominate most of the online chatter, the number of models available for building applications is increasing rapidly. We read about a new model almost every day (certainly every week), and a quick look at Hugging Face will show you more models than you can count. (As of November, the number of models in its repository is approaching 400,000.) Developers clearly have choices. But what choices are they making? Which models are they using?

It’s no surprise that 23% of respondents report that their companies are using one of the GPT models (2, 3.5, 4, and 4V), more than any other model. It’s a bigger surprise that 21% of respondents are developing their own model; that task requires substantial resources in staff and infrastructure. It will be worth watching how this evolves: will companies continue to develop their own models, or will they use AI services that allow a foundation model (like GPT-4) to be customized?

16% of the respondents report that their companies are building on top of open source models. Open source models are a large and diverse group. One important subsection consists of models derived from Meta’s LLaMA: llama.cpp, Alpaca, Vicuna, and many others. These models are typically smaller (7 to 14 billion parameters) and easier to fine-tune, and they can run on very limited hardware; many can run on laptops, cell phones, or nanocomputers such as the Raspberry Pi. Training requires much more hardware, but the ability to run in a limited environment means that a finished model can be embedded within a hardware or software product. Another subsection of models has no relationship to LLaMA: RedPajama, Falcon, MPT, Bloom, and many others, most of which are available on Hugging Face. The number of developers using any specific model is relatively small, but the total is impressive and demonstrates a vital and active world beyond GPT. These “other” models have attracted a significant following. Be careful, though: while this group of models is frequently called “open source,” many of them restrict what developers can build from them. Before working with any so-called open source model, look carefully at the license. Some limit the model to research work and prohibit commercial applications; some prohibit competing with the model’s developers; and more. We’re stuck with the term “open source” for now, but where AI is concerned, open source often isn’t what it seems to be.

Only 2.4% of the respondents are building with LLaMA and Llama 2. While the source code and weights for the LLaMA models are available online, the LLaMA models don’t yet have a public API backed by Meta, although there appear to be several APIs developed by third parties, and both Google Cloud and Microsoft Azure offer Llama 2 as a service. The LLaMA-family models also fall into the “so-called open source” category that restricts what you can build.

Only 1% are building with Google’s Bard, which perhaps has less exposure than the others. A number of writers have claimed that Bard gives worse results than the LLaMA and GPT models; that may be true for chat, but I’ve found that Bard is often correct when GPT-4 fails. For app developers, the biggest problem with Bard probably isn’t accuracy or correctness; it’s availability. In March 2023, Google announced a public beta program for the Bard API. However, as of November, questions about API availability are still answered by links to the beta announcement. Use of the Bard API is undoubtedly hampered by the relatively small number of developers who have access to it. Even fewer are using Claude, a very capable model developed by Anthropic. Claude doesn’t get as much news coverage as the models from Meta, OpenAI, and Google, which is unfortunate: Anthropic’s Constitutional AI approach to AI safety is a unique and promising attempt to solve the biggest problems troubling the AI industry.

What Stage?

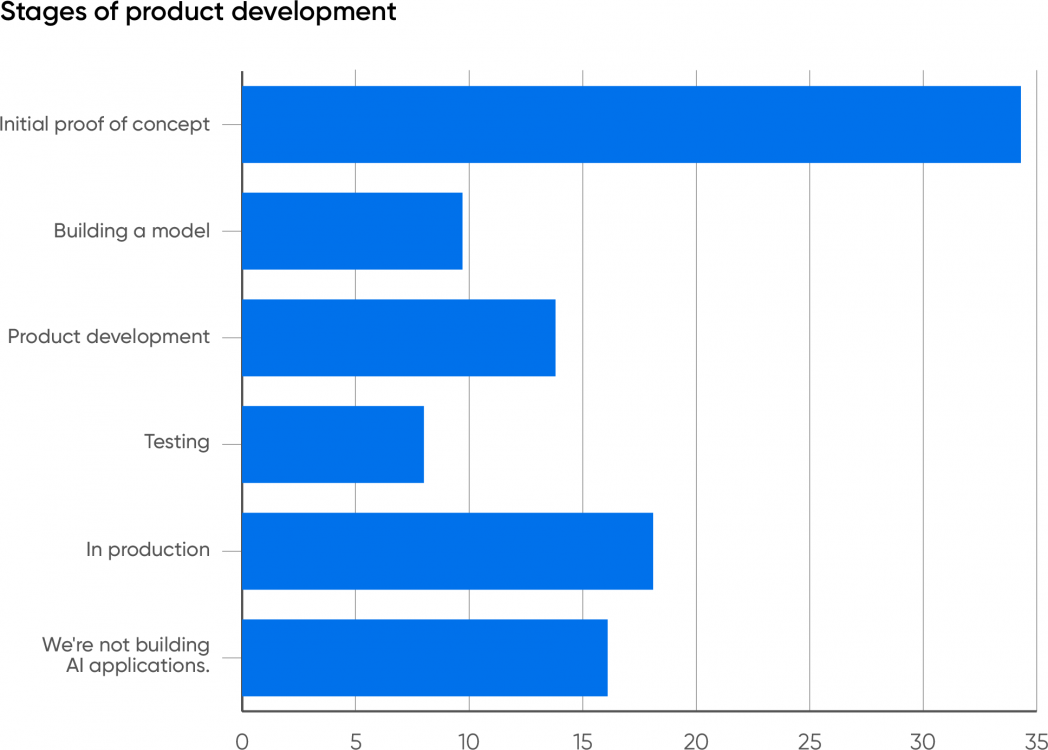

When asked what stage companies are at in their work, most respondents shared that they’re still in the early stages. Given that generative AI is relatively new, that isn’t news. If anything, we should be surprised that generative AI has penetrated so deeply and so quickly. 34% of respondents are working on an initial proof of concept. 14% are in product development, presumably after developing a PoC; 10% are building a model, also an early stage activity; and 8% are testing, which presumes that they’ve already built a proof of concept and are moving toward deployment: they have a model that at least appears to work.

What stands out is that 18% of the respondents work for companies that have AI applications in production. Given that the technology is new and that many AI projects fail,² it’s surprising that 18% report that their companies already have generative AI applications in production. We’re not being skeptics; this is evidence that while most respondents report companies that are working on proofs of concept or in other early stages, generative AI is being adopted and is doing real work. We’ve already seen some significant integrations of AI into existing products, including our own. We expect others to follow.

Risks and Tests

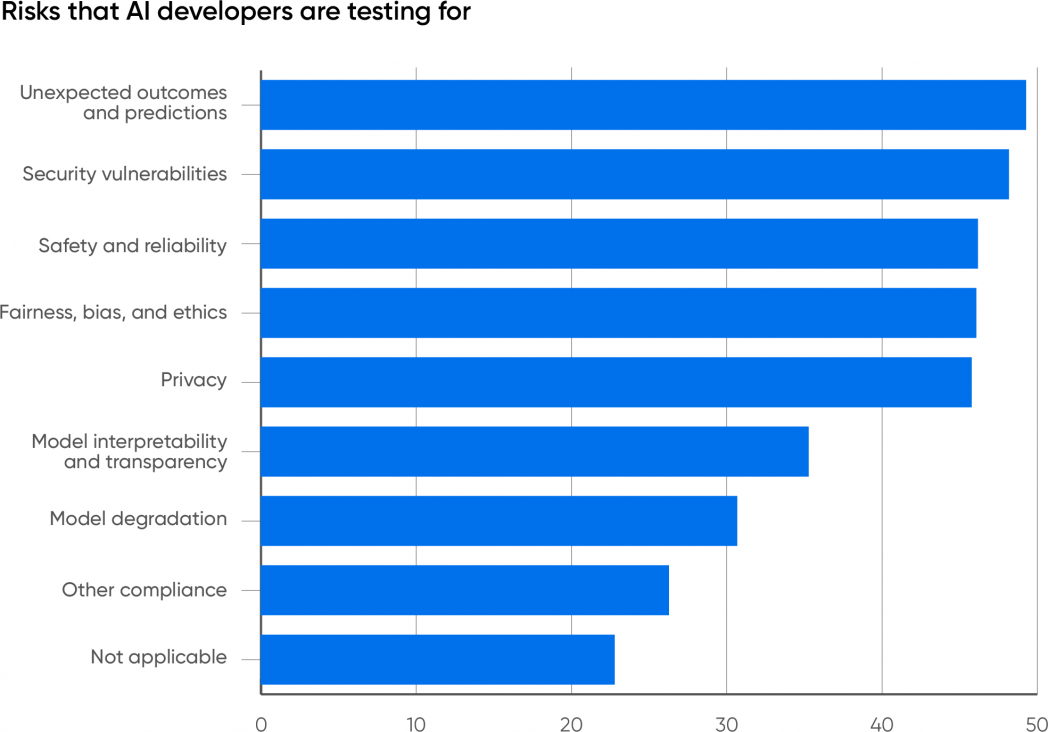

We asked the respondents whose companies are working with AI what risks they’re testing for. The top five responses clustered between 45 and 50%: unexpected outcomes (49%), security vulnerabilities (48%), safety and reliability (46%), fairness, bias, and ethics (46%), and privacy (46%).

It’s important that almost half of respondents selected “unexpected outcomes,” more than any other answer: anyone working with generative AI needs to know that incorrect results (often called hallucinations) are common. If there’s a surprise here, it’s that this answer wasn’t chosen by 100% of the participants. Unexpected, incorrect, or inappropriate results are almost certainly the biggest single risk associated with generative AI.

We’d like to see more companies test for fairness. There are many applications (for example, medical applications) where bias is among the most important things to test for and where getting rid of historical biases in the training data is very difficult and of utmost importance. It’s important to realize that unfair or biased output can be very subtle, particularly if application developers don’t belong to groups that experience bias, and what’s “subtle” to a developer is often very unsubtle to a user. A chat application that doesn’t understand a user’s accent is an obvious problem (search for “Amazon Alexa doesn’t understand Scottish accent”). It’s also important to look for applications where bias isn’t an issue. ChatGPT has driven a focus on personal use cases, but there are many applications where problems of bias and fairness aren’t major issues: for example, examining images to tell whether crops are diseased or optimizing a building’s heating and air conditioning for maximum efficiency while maintaining comfort.

It’s good to see issues like safety and security near the top of the list. Companies are gradually waking up to the idea that security is a serious issue, not just a cost center. In many applications (for example, customer service), generative AI is in a position to do significant reputational damage, in addition to creating legal liability. Furthermore, generative AI has its own vulnerabilities, such as prompt injection, for which there is still no known solution. Model leeching, in which an attacker uses specially designed prompts to reconstruct the data on which the model was trained, is another attack that’s unique to AI. While 48% isn’t bad, we would like to see even greater awareness of the need to test AI applications for security.

Model interpretability (35%) and model degradation (31%) aren’t as big concerns. Unfortunately, interpretability remains a research problem for generative AI. At least with the current language models, it’s very difficult to explain why a generative model gave a specific answer to any question. Interpretability might not be a requirement for most current applications. If ChatGPT writes a Python script for you, you may not care why it wrote that particular script rather than something else. (It’s also worth remembering that if you ask ChatGPT why it produced any response, its answer will not be the reason for the previous response, but, as always, the most likely response to your question.) But interpretability is critical for diagnosing problems of bias and will be extremely important when cases involving generative AI end up in court.

Model degradation is a different concern. The performance of any AI model degrades over time, and as far as we know, large language models are no exception. One hotly debated study argues that the quality of GPT-4’s responses has dropped over time. Language changes in subtle ways; the questions users ask shift and may not be answerable with older training data. Even the existence of an AI answering questions might cause a change in what questions are asked. Another fascinating issue is what happens when generative models are trained on data generated by other generative models. Is “model collapse” real, and what impact will it have as models are retrained?

If you’re simply building an application on top of an existing model, you may not be able to do anything about model degradation. Model degradation is a much bigger issue for developers who are building their own model or doing additional training to fine-tune an existing model. Training a model is expensive, and it’s likely to be an ongoing process.
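One simple way to watch for degradation is to re-run a fixed evaluation set periodically and compare recent scores with a baseline. The sketch below is only an illustration of that idea: the function name `has_degraded`, the 0.05 tolerance, and the score values are all assumptions, not something the survey or any particular tool prescribes.

```python
from statistics import mean


def has_degraded(baseline_scores, recent_scores, tolerance=0.05):
    """Return True if the recent mean score fell more than `tolerance` below the baseline mean."""
    if not baseline_scores or not recent_scores:
        raise ValueError("both score lists must be non-empty")
    return mean(baseline_scores) - mean(recent_scores) > tolerance


# Hypothetical scores: fraction of a fixed test set answered correctly per run.
baseline = [0.82, 0.80, 0.81]
recent = [0.74, 0.72, 0.73]

print(has_degraded(baseline, recent))
```

A real monitoring setup would likely need a larger evaluation set and a proper statistical test, since small differences between runs can be noise rather than degradation.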

Missing Skills

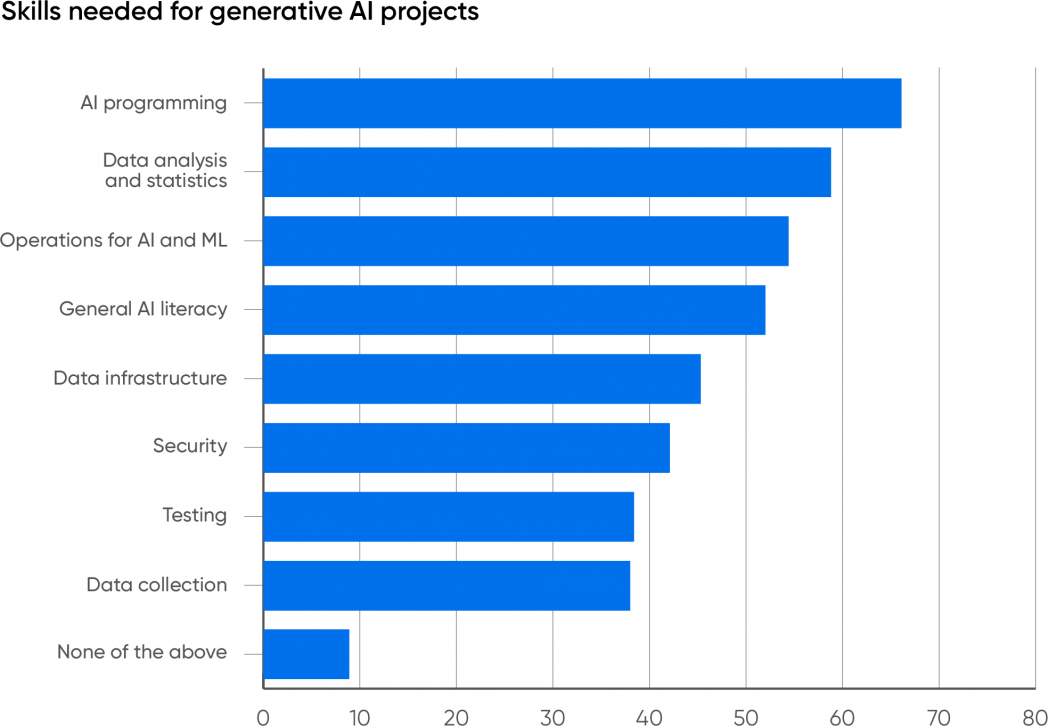

One of the biggest challenges facing companies developing with AI is expertise. Do they have staff with the necessary skills to build, deploy, and manage these applications? To find out where the skills deficits are, we asked our respondents what skills their organizations need to acquire for AI projects. We weren’t surprised that AI programming (66%) and data analysis (59%) are the two most needed. AI is the next generation of what we called “data science” a few years back, and data science represented a merger between statistical modeling and software development. The field may have evolved from traditional statistical analysis to artificial intelligence, but its overall shape hasn’t changed much.

The next most needed skill is operations for AI and ML (54%). We’re glad to see people recognize this; we’ve long thought that operations was the “elephant in the room” for AI and ML. Deploying and managing AI products isn’t simple. These products differ in many ways from more traditional applications, and while practices like continuous integration and deployment have been very effective for traditional software applications, AI requires a rethinking of these code-centric methodologies. The model, not the source code, is the most important part of any AI application, and models are large binary files that are not amenable to source control tools like Git. And unlike source code, models grow stale over time and require constant monitoring and testing. The statistical behavior of most models means that simple, deterministic testing won’t work; you can’t guarantee that, given the same input, a model will generate the same output. The result is that AI operations is a specialty of its own, one that requires a deep understanding of AI and its requirements in addition to more traditional operations. What kinds of deployment pipelines, repositories, and test frameworks do we need to put AI applications into production? We don’t know; we’re still developing the tools and practices needed to deploy and manage AI successfully.
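Because the same input can produce different outputs, one possible alternative to exact-match assertions is to sample the model several times and require that a sufficient fraction of the outputs satisfy some property. The following is a minimal sketch under stated assumptions: `pass_rate`, `make_toy_model`, the 50 trials, and the 0.95 threshold are hypothetical choices, and the toy model merely stands in for a real one.

```python
import random


def pass_rate(model, prompt, is_acceptable, trials=50):
    """Call `model` repeatedly and return the fraction of outputs that pass the check."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    passed = sum(1 for _ in range(trials) if is_acceptable(model(prompt)))
    return passed / trials


def make_toy_model(seed=0):
    """Hypothetical stand-in for a real model: its wording varies from call to call."""
    rng = random.Random(seed)

    def model(prompt):
        return rng.choice(["Paris", "The capital is Paris.", "paris, France"])

    return model


# Check a property of the output (it mentions Paris) rather than an exact string.
rate = pass_rate(
    make_toy_model(),
    "What is the capital of France?",
    lambda text: "paris" in text.lower(),
)
assert rate >= 0.95, f"pass rate too low: {rate:.2f}"
```

The design choice here is to test properties and rates instead of exact strings; how many trials and what threshold are appropriate depends on the application.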

Infrastructure engineering, a choice selected by 45% of respondents, doesn’t rank as high. This is a bit of a puzzle: running AI applications in production can require huge resources, as companies as large as Microsoft are finding out. However, most organizations aren’t yet running AI on their own infrastructure. They’re either using APIs from an AI provider like OpenAI, Microsoft, Amazon, or Google, or they’re using a cloud provider to run a homegrown application. But in both cases, some other provider builds and manages the infrastructure. OpenAI in particular offers enterprise services, which include APIs for training custom models along with stronger guarantees about keeping corporate data private. However, with cloud providers operating near full capacity, it makes sense for companies investing in AI to start thinking about their own infrastructure and acquiring the capacity to build it.

Over half of the respondents (52%) included general AI literacy as a needed skill. While the number could be higher, we’re glad that our users recognize that familiarity with AI and the way AI systems behave (or misbehave) is essential. Generative AI has a great wow factor: with a simple prompt, you can get ChatGPT to tell you about Maxwell’s equations or the Peloponnesian War. But simple prompts don’t get you very far in business. AI users soon learn that good prompts are often very complex, describing in detail the result they want and how to get it. Prompts can be very long, and they can include all the resources needed to answer the user’s question. Researchers debate whether this level of prompt engineering will be necessary in the future, but it will clearly be with us for the next few years. AI users also need to expect incorrect answers and to be equipped to check virtually all the output that an AI produces. This is often called critical thinking, but it’s much more like the process of discovery in law: an exhaustive search of all possible evidence. Users also need to know how to create a prompt for an AI system that will generate a useful answer.
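To make the idea of a long prompt that carries its own resources concrete, here is a small sketch of one way such a prompt might be assembled. The function `build_prompt`, the section labels, and the sample documents are all hypothetical; no particular model or API is assumed, and real prompt formats vary.

```python
def build_prompt(question, documents, instructions):
    """Assemble one prompt: instructions first, then reference material, then the question."""
    sections = [instructions.strip(), "Reference material:"]
    for number, text in enumerate(documents, start=1):
        sections.append(f"[Document {number}]\n{text.strip()}")
    sections.append(f"Question: {question.strip()}")
    return "\n\n".join(sections)


# Hypothetical inputs for illustration only.
prompt = build_prompt(
    question="What is our refund window for annual plans?",
    documents=[
        "Refund policy: annual plans may be refunded within 30 days of purchase.",
        "Support hours: weekdays, 9:00 to 17:00.",
    ],
    instructions=(
        "Answer using only the reference material below. "
        "If the answer is not in the material, say so."
    ),
)
print(prompt)
```

Even with the resources included, the output still needs to be checked against the source material before it is relied on.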

Finally, the Business

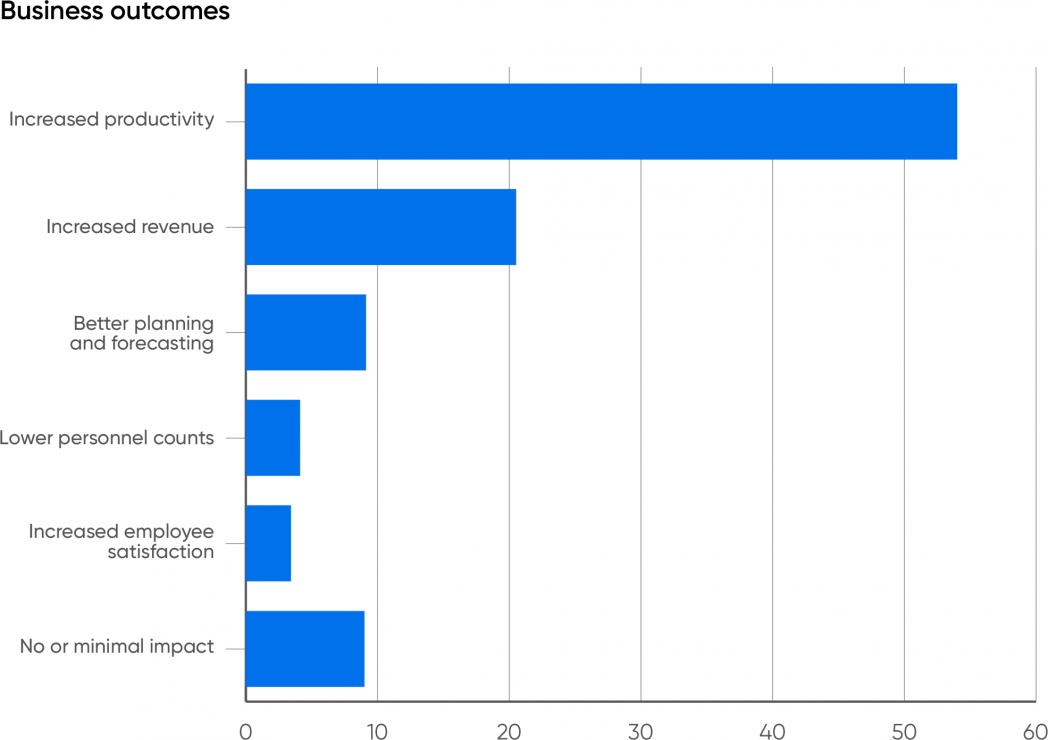

So what’s the bottom line? How do businesses benefit from AI? Over half (54%) of the respondents expect their businesses to benefit from increased productivity. 21% expect increased revenue, which might indeed be the result of increased productivity. Together, that’s three-quarters of the respondents. Another 9% say that their companies would benefit from better planning and forecasting.

Only 4% believe that the primary benefit will be lower personnel counts. We’ve long thought that the fear of losing your job to AI was exaggerated. While there will be some short-term dislocation as a few jobs become obsolete, AI will also create new jobs, as has almost every significant new technology, including computing itself. Most jobs rely on a multitude of individual skills, and generative AI can only substitute for a few of them. Most employees are also willing to use tools that will make their jobs easier, boosting productivity in the process. We don’t believe that AI will replace people, and neither do our respondents. On the other hand, employees will need training to use AI-driven tools effectively, and it’s the responsibility of the employer to provide that training.

We’re optimistic about generative AI’s future. It’s hard to realize that ChatGPT has only been around for a year; the technology world has changed so much in that short period. We’ve never seen a new technology command so much attention so quickly: not personal computers, not the internet, not the web. It’s certainly possible that we’ll slide into another AI winter if the investments being made in generative AI don’t pan out. There are definitely problems that need to be solved (correctness, fairness, bias, and security are among the biggest), and some early adopters will ignore these hazards and suffer the consequences. On the other hand, we believe that worrying about a general AI deciding that humans are unnecessary is either an affliction of those who read too much science fiction or a strategy to encourage regulation that gives the current incumbents an advantage over startups.

It’s time to start learning about generative AI, thinking about how it can improve your company’s business, and planning a strategy. We can’t tell you what to do; developers are pushing AI into almost every aspect of business. But companies will need to invest in training, both for software developers and for AI users; they’ll need to invest in the resources required to develop and run applications, whether in the cloud or in their own data centers; and they’ll need to think creatively about how they can put AI to work, realizing that the answers may not be what they expect.

AI won’t replace humans, but companies that take advantage of AI will replace companies that don’t.

Footnotes

- Meta has dropped the odd capitalization for Llama 2. In this report, we use LLaMA to refer to the LLaMA models generically: LLaMA, Llama 2, and Llama n, when future versions exist. Although capitalization changes, we use Claude to refer both to the original Claude and to Claude 2, and Bard to refer to Google’s Bard model and its successors.

- Many articles quote Gartner as saying that the failure rate for AI projects is 85%. We haven’t found the source, although in 2018, Gartner wrote that 85% of AI projects “deliver erroneous outcomes.” That’s not the same as failure, and 2018 significantly predates generative AI. Generative AI is certainly prone to “erroneous outcomes,” and we suspect the failure rate is high. 85% might be a reasonable estimate.

Appendix

Methodology and Demographics

This survey ran from September 14, 2023, to September 27, 2023. It was publicized through O’Reilly’s learning platform to all our users, both corporate and individual. We received 4,782 responses, of which 2,857 answered all the questions. As we usually do, we eliminated incomplete responses (users who dropped out partway through the questions). Respondents who indicated they weren’t using generative AI were asked a final question about why they weren’t using it, and were then considered complete.

Any survey only gives a partial picture, and it’s very important to think about biases. The biggest bias by far is the nature of O’Reilly’s audience, which is predominantly North American and European. 42% of the respondents were from North America, 32% were from Europe, and 21% were from the Asia-Pacific region. Relatively few respondents were from South America or Africa, although we are aware of very interesting applications of AI on these continents.

The responses are also skewed by the industries that use our platform most heavily. 34% of all respondents who completed the survey were from the software industry, and another 11% worked on computer hardware, together making up almost half of the respondents. 14% were in financial services, which is another area where our platform has many users. 5% of the respondents were from telecommunications, 5% from the public sector and the government, 4.4% from the healthcare industry, and 3.7% from education. These are still healthy numbers: there were over 100 respondents in each group. The remaining 22% represented other industries, ranging from mining (0.1%) and construction (0.2%) to manufacturing (2.6%).

These percentages change very little if you look only at respondents whose employers use AI rather than all respondents who completed the survey. This suggests that AI usage doesn’t depend a lot on the specific industry; the differences between industries reflect the population of O’Reilly’s user base.
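As a rough sanity check on the industry breakdown, the reported percentages can be converted back into approximate respondent counts. This sketch assumes the percentages apply to the 2,857 complete responses; because the published percentages are rounded, the counts are approximations rather than the survey’s actual tallies. The names `COMPLETE_RESPONSES`, `shares`, and `approximate_count` are illustrative.

```python
COMPLETE_RESPONSES = 2857  # complete responses reported in the methodology

# Percentages as reported for the named industry groups.
shares = {
    "software": 34.0,
    "computer hardware": 11.0,
    "financial services": 14.0,
    "telecommunications": 5.0,
    "public sector and government": 5.0,
    "healthcare": 4.4,
    "education": 3.7,
}


def approximate_count(percent, total=COMPLETE_RESPONSES):
    """Convert a rounded percentage into an approximate number of respondents."""
    return round(total * percent / 100)


counts = {name: approximate_count(percent) for name, percent in shares.items()}
for name, count in counts.items():
    print(f"{name}: about {count}")
```

Under that assumption, even the smallest named group (education) comes out above 100 respondents, which is consistent with the statement in the text.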