The physician-patient conversation is a cornerstone of medicine, in which skilled and intentional communication drives diagnosis, management, empathy and trust. AI systems capable of such diagnostic dialogues could increase availability, accessibility, quality and consistency of care by being useful conversational partners to clinicians and patients alike. But approximating clinicians’ considerable expertise is a significant challenge.

Recent progress in large language models (LLMs) outside the medical domain has shown that they can plan, reason, and use relevant context to hold rich conversations. However, many aspects of good diagnostic dialogue are unique to the medical domain. An effective clinician takes a complete “clinical history” and asks intelligent questions that help to derive a differential diagnosis. They wield considerable skill to foster an effective relationship, provide information clearly, make joint and informed decisions with the patient, respond empathically to their emotions, and support them in the next steps of care. While LLMs can accurately perform tasks such as medical summarization or answering medical questions, there has been little work specifically aimed at developing these kinds of conversational diagnostic capabilities.

Inspired by this challenge, we developed Articulate Medical Intelligence Explorer (AMIE), a research AI system based on an LLM and optimized for diagnostic reasoning and conversations. We trained and evaluated AMIE along many dimensions that reflect quality in real-world clinical consultations from the perspective of both clinicians and patients. To scale AMIE across a multitude of disease conditions, specialties and scenarios, we developed a novel self-play based simulated diagnostic dialogue environment with automated feedback mechanisms to enrich and accelerate its learning process. We also introduced an inference time chain-of-reasoning strategy to improve AMIE’s diagnostic accuracy and conversation quality. Finally, we tested AMIE prospectively in real examples of multi-turn dialogue by simulating consultations with trained actors.

| AMIE was optimized for diagnostic conversations, asking questions that help to reduce its uncertainty and improve diagnostic accuracy, while also balancing this with other requirements of effective clinical communication, such as empathy, fostering a relationship, and providing information clearly. |

Evaluation of conversational diagnostic AI

Besides developing and optimizing AI systems themselves for diagnostic conversations, how to assess such systems is also an open question. Inspired by accepted tools used to measure consultation quality and clinical communication skills in real-world settings, we constructed a pilot evaluation rubric to assess diagnostic conversations along axes pertaining to history-taking, diagnostic accuracy, clinical management, clinical communication skills, relationship fostering and empathy.

We then designed a randomized, double-blind crossover study of text-based consultations with validated patient actors interacting either with board-certified primary care physicians (PCPs) or the AI system optimized for diagnostic dialogue. We set up our consultations in the style of an objective structured clinical examination (OSCE), a practical assessment commonly used in the real world to examine clinicians’ skills and competencies in a standardized and objective way. In a typical OSCE, clinicians might rotate through multiple stations, each simulating a real-life clinical scenario where they perform tasks such as conducting a consultation with a standardized patient actor (trained carefully to emulate a patient with a particular condition). Consultations were performed using a synchronous text-chat tool, mimicking the interface familiar to most consumers using LLMs today.

| AMIE is a research AI system based on LLMs for diagnostic reasoning and dialogue. |

AMIE: an LLM-based conversational diagnostic research AI system

We trained AMIE on real-world datasets comprising medical reasoning, medical summarization and real-world clinical conversations.

It is feasible to train LLMs using real-world dialogues developed by passively collecting and transcribing in-person clinical visits. However, two substantial challenges limit the effectiveness of such dialogues in training LLMs for medical conversations. First, existing real-world data often fails to capture the vast range of medical conditions and scenarios, which hinders scalability and comprehensiveness. Second, the data derived from real-world dialogue transcripts tends to be noisy, containing ambiguous language (including slang, jargon, humor and sarcasm), interruptions, ungrammatical utterances, and implicit references.

To address these limitations, we designed a self-play based simulated learning environment with automated feedback mechanisms for diagnostic medical dialogue in a virtual care setting, enabling us to scale AMIE’s knowledge and capabilities across many medical conditions and contexts. We used this environment to iteratively fine-tune AMIE with an evolving set of simulated dialogues in addition to the static corpus of real-world data described above.

This process consisted of two self-play loops: (1) an “inner” self-play loop, where AMIE leveraged in-context critic feedback to refine its behavior on simulated conversations with an AI patient simulator; and (2) an “outer” self-play loop, where the set of refined simulated dialogues was incorporated into subsequent fine-tuning iterations. The resulting new version of AMIE could then participate in the inner loop again, creating a virtuous continuous learning cycle.
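The two loops can be sketched roughly as follows. This is a minimal illustration of the control flow only, not AMIE's implementation: every name here (`Agent`, `simulate_dialogue`, `critique`, `refine`, `fine_tune`) is a hypothetical placeholder, and the stub bodies stand in for calls to real models.

```python
from dataclasses import dataclass
from typing import List

# All names below are hypothetical placeholders, not AMIE's actual API.
Dialogue = List[str]


@dataclass
class Agent:
    """Stand-in for a model checkpoint; `version` counts fine-tuning rounds."""
    version: int = 0


def simulate_dialogue(agent: Agent, scenario: str) -> Dialogue:
    """Placeholder: the agent converses with an AI patient simulator."""
    return [f"[v{agent.version}] first-pass consultation for: {scenario}"]


def critique(dialogue: Dialogue) -> str:
    """Placeholder: in-context critic feedback on a simulated dialogue."""
    return "clarify symptom onset; acknowledge the patient's concern"


def refine(agent: Agent, dialogue: Dialogue, feedback: str) -> Dialogue:
    """Placeholder: the agent revises its behavior given the feedback."""
    return dialogue + [f"[v{agent.version}] revised using feedback: {feedback}"]


def fine_tune(agent: Agent, static_corpus: List[Dialogue],
              simulated: List[Dialogue]) -> Agent:
    """Placeholder: train a new checkpoint on static plus simulated data."""
    return Agent(version=agent.version + 1)


def inner_loop(agent: Agent, scenario: str, n_refinements: int = 2) -> Dialogue:
    """Inner loop: simulate, get critic feedback, refine; repeat."""
    dialogue = simulate_dialogue(agent, scenario)
    for _ in range(n_refinements):
        dialogue = refine(agent, dialogue, critique(dialogue))
    return dialogue


def outer_loop(agent: Agent, scenarios: List[str],
               static_corpus: List[Dialogue], n_iterations: int = 3) -> Agent:
    """Outer loop: fold refined dialogues into the next fine-tuning round."""
    for _ in range(n_iterations):
        simulated = [inner_loop(agent, s) for s in scenarios]
        agent = fine_tune(agent, static_corpus, simulated)
    return agent
```

Each pass of `outer_loop` hands the newly fine-tuned agent back to `inner_loop`, which is the "virtuous cycle" described above. The number of refinements and iterations are illustrative defaults, not values from the study.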

Further, we also employed an inference time chain-of-reasoning strategy, which enabled AMIE to progressively refine its response conditioned on the current conversation to arrive at an informed and grounded reply.
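As a rough sketch of what progressive refinement at inference time could look like, the snippet below drafts a reply and then revises it a fixed number of times, always conditioning on the conversation so far. The specific steps, the prompt wording, and the `generate` callable are assumptions made for illustration; the post does not describe AMIE's actual reasoning steps.

```python
from typing import Callable, List


def chain_of_reasoning_reply(generate: Callable[[str], str],
                             conversation: List[str],
                             n_steps: int = 2) -> str:
    """Draft a reply, then revise it `n_steps` times.

    `generate` is a hypothetical text-in, text-out model call; the prompts
    are illustrative and not taken from AMIE.
    """
    history = "\n".join(conversation)
    # First pass: produce an initial draft from the conversation alone.
    draft = generate(f"Conversation so far:\n{history}\n\nDraft the next reply.")
    # Later passes: revise the draft while still conditioning on the conversation.
    for _ in range(n_steps):
        draft = generate(
            f"Conversation so far:\n{history}\n\n"
            f"Current draft:\n{draft}\n\n"
            "Revise the draft so it is grounded in the conversation."
        )
    return draft
```

Passing the model in as a plain callable keeps the sketch independent of any particular LLM client library.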

| AMIE uses a novel self-play based simulated dialogue learning environment to improve the quality of diagnostic dialogue across a multitude of disease conditions, specialties and patient contexts. |

We tested performance in consultations with simulated patients (played by trained actors), compared to those performed by 20 real PCPs using the randomized approach described above. AMIE and PCPs were assessed from the perspectives of both specialist attending physicians and our simulated patients in a randomized, blinded crossover study that included 149 case scenarios from OSCE providers in Canada, the UK and India in a diverse range of specialties and diseases.
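To make the crossover idea concrete, here is a small sketch of how such a schedule might be generated: every scenario is seen by both arms, and the order of the arms is randomized per scenario. The per-scenario randomization, the function names, and the label format are assumptions for illustration and are not taken from the study protocol.

```python
import random
from typing import Dict, List, Tuple

ARMS = ("AMIE", "PCP")


def crossover_schedule(scenario_ids: List[str],
                       seed: int = 0) -> Dict[str, Tuple[str, str]]:
    """Assign both arms to every scenario, in a random order per scenario."""
    rng = random.Random(seed)  # seeded so the schedule is reproducible
    schedule = {}
    for scenario_id in scenario_ids:
        order = list(ARMS)
        rng.shuffle(order)
        schedule[scenario_id] = (order[0], order[1])
    return schedule


def blinded_label(scenario_id: str, position: int) -> str:
    """Label shown to raters; it hides which arm produced the consultation."""
    return f"{scenario_id}-consultation-{position + 1}"
```

For example, `crossover_schedule([f"case-{i}" for i in range(149)])` yields one randomized arm order for each of 149 hypothetical case identifiers.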

Notably, our study was not designed to emulate either traditional in-person OSCE evaluations or the ways clinicians usually use text, email, chat or telemedicine. Instead, our experiment mirrored the most common way consumers interact with LLMs today, a potentially scalable and familiar mechanism for AI systems to engage in remote diagnostic dialogue.

| Overview of the randomized study design to perform a virtual remote OSCE with simulated patients via online multi-turn synchronous text chat. |

Performance of AMIE

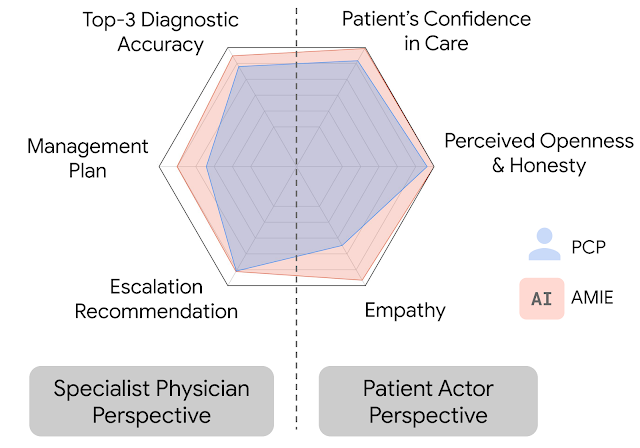

In this setting, we observed that AMIE performed simulated diagnostic conversations at least as well as PCPs when both were evaluated along multiple clinically-meaningful axes of consultation quality. AMIE had greater diagnostic accuracy and superior performance for 28 of 32 axes from the perspective of specialist physicians, and 24 of 26 axes from the perspective of patient actors.

| AMIE outperformed PCPs on multiple evaluation axes for diagnostic dialogue in our evaluations. |

| Specialist-rated top-k diagnostic accuracy. AMIE and PCP top-k differential diagnosis (DDx) accuracy are compared across 149 scenarios with respect to the ground truth diagnosis (a) and all diagnoses listed within the accepted differential diagnoses (b). Bootstrapping (n=10,000) confirms that all top-k differences between AMIE and PCP DDx accuracy are significant with p < 0.05 after false discovery rate (FDR) correction. |
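For readers unfamiliar with these statistics, the sketch below shows one common way to compute top-k accuracy, a paired bootstrap p-value, and an FDR adjustment. It is a generic illustration under stated assumptions: the post does not specify the exact bootstrap test or FDR procedure, so the percentile-style two-sided p-value and the Benjamini-Hochberg correction used here are stand-ins, and the exact string match in `top_k_accuracy` simplifies what was a specialist rating in the study.

```python
import random
from typing import List, Sequence


def top_k_accuracy(ddx_lists: Sequence[Sequence[str]],
                   truths: Sequence[str], k: int) -> float:
    """Fraction of cases whose true diagnosis is in the first k DDx entries."""
    hits = [truth in ddx[:k] for ddx, truth in zip(ddx_lists, truths)]
    return sum(hits) / len(hits)


def paired_bootstrap_p(hits_a: List[int], hits_b: List[int],
                       n_boot: int = 10_000, seed: int = 0) -> float:
    """Two-sided bootstrap p-value for the paired difference in accuracy.

    Cases are resampled with replacement; this particular test is an
    assumption, chosen for simplicity.
    """
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(hits_a, hits_b)]
    at_or_below = at_or_above = 0
    for _ in range(n_boot):
        total = sum(rng.choices(diffs, k=len(diffs)))
        at_or_below += total <= 0
        at_or_above += total >= 0
    return min(1.0, 2 * min(at_or_below, at_or_above) / n_boot)


def benjamini_hochberg(p_values: List[float]) -> List[float]:
    """Benjamini-Hochberg adjusted p-values (one standard FDR correction)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

In this setup, one p-value would be computed per value of k and the whole set passed through `benjamini_hochberg` before comparing against 0.05.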

| Diagnostic conversation and reasoning qualities as assessed by specialist physicians. On 28 out of 32 axes, AMIE outperformed PCPs while being comparable on the rest. |

Limitations

Our research has several limitations and should be interpreted with appropriate caution. Firstly, our evaluation technique likely underestimates the real-world value of human conversations, as the clinicians in our study were limited to an unfamiliar text-chat interface, which permits large-scale LLM-patient interactions but is not representative of usual clinical practice. Secondly, any research of this type must be seen as only a first exploratory step on a long journey. Transitioning from the LLM research prototype that we evaluated in this study to a safe and robust tool that could be used by people and those who provide care for them will require significant additional research. There are many important limitations to be addressed, including experimental performance under real-world constraints and dedicated exploration of such important topics as health equity and fairness, privacy, robustness, and many more, to ensure the safety and reliability of the technology.

AMIE as an aid to clinicians

In a recently released preprint, we evaluated the ability of an earlier iteration of the AMIE system to generate a DDx alone or as an aid to clinicians. Twenty (20) generalist clinicians evaluated 303 challenging, real-world medical cases sourced from the New England Journal of Medicine (NEJM) ClinicoPathologic Conferences (CPCs). Each case report was read by two clinicians randomized to one of two assistive conditions: either assistance from search engines and standard medical resources, or AMIE assistance in addition to these tools. All clinicians provided a baseline, unassisted DDx prior to using the respective assistive tools.

| Assisted randomized reader study setup to investigate the assistive effect of AMIE for clinicians in solving complex diagnostic case challenges from the New England Journal of Medicine. |

AMIE exhibited standalone performance that exceeded that of unassisted clinicians (top-10 accuracy 59.1% vs. 33.6%, p=0.04). Comparing the two assisted study arms, the top-10 accuracy was higher for clinicians assisted by AMIE, compared to clinicians without AMIE assistance (24.6%, p<0.01) and clinicians with search (5.45%, p=0.02). Further, clinicians assisted by AMIE arrived at more comprehensive differential lists than those without AMIE assistance.

| In addition to strong standalone performance, using the AMIE system led to a significant assistive effect and improvements in the diagnostic accuracy of clinicians in solving these complex case challenges. |

It’s worth noting that NEJM CPCs are not representative of everyday clinical practice. They are unusual case reports in only a few hundred individuals, so they offer limited scope for probing important issues like equity or fairness.

Bold and responsible research in healthcare: the art of the possible

Access to clinical expertise remains scarce around the world. While AI has shown great promise in specific clinical applications, engagement in the dynamic, conversational diagnostic journeys of clinical practice requires many capabilities not yet demonstrated by AI systems. Doctors wield not only knowledge and skill but a dedication to myriad principles, including safety and quality, communication, partnership and teamwork, trust, and professionalism. Realizing these attributes in AI systems is an inspiring challenge that should be approached responsibly and with care. AMIE is our exploration of the “art of the possible”, a research-only system for safely exploring a vision of the future where AI systems might be better aligned with attributes of the skilled clinicians entrusted with our care. It is early experimental-only work, not a product, and has several limitations that we believe merit rigorous and extensive further scientific studies in order to envision a future in which conversational, empathic and diagnostic AI systems might become safe, helpful and accessible.

Acknowledgements

The research described here is joint work across many teams at Google Research and Google DeepMind. We are grateful to all our co-authors: Tao Tu, Mike Schaekermann, Anil Palepu, Daniel McDuff, Jake Sunshine, Khaled Saab, Jan Freyberg, Ryutaro Tanno, Amy Wang, Brenna Li, Mohamed Amin, Sara Mahdavi, Karan Singhal, Shekoofeh Azizi, Nenad Tomasev, Yun Liu, Yong Cheng, Le Hou, Albert Webson, Jake Garrison, Yash Sharma, Anupam Pathak, Sushant Prakash, Philip Mansfield, Shwetak Patel, Bradley Green, Ewa Dominowska, Renee Wong, Juraj Gottweis, Dale Webster, Katherine Chou, Christopher Semturs, Joelle Barral, Greg Corrado and Yossi Matias. We also thank Sami Lachgar, Lauren Winer and John Guilyard for their support with narratives and the visuals. Finally, we are grateful to Michael Howell, James Manyika, Jeff Dean, Karen DeSalvo, Zoubin Ghahramani and Demis Hassabis for their support during the course of this project.