Ryan Haines / Android Authority

Conventional wisdom has it that smartphone specs don’t really matter all that much anymore. Whether you’re looking at the very best flagships or a plucky mid-ranger, they’re all more than capable of daily tasks, playing the latest mobile games, and even taking stonkingly good snaps. It’s quite difficult to find outright bad mobile hardware unless you’re scraping the absolute budget end of the market.

Case in point, consumers and pundits alike are enamored with the Pixel 8 series, even though it benchmarks well behind the iPhone 15 and other Android rivals. Similarly, Apple’s and Samsung’s latest flagships barely move the needle on camera hardware yet continue to be highly regarded for photography.

Specs simply don’t automatically equate to the best smartphone anymore. And yet, Google’s Pixel 8 series and the upcoming Samsung Galaxy S24 range have shoved their foot in that closing door. In fact, we could well be about to embark on a new specs arms race. I’m talking, of course, about AI and the increasingly heated debate about the pros and cons of on-device versus cloud-based processing.

AI features are quickly making our phones even better, but many require cloud processing.

In a nutshell, running AI requests is quite different from the traditional general-purpose CPU and graphics workloads we’ve come to associate with and benchmark for across mobile, laptop, and other consumer gadgets.

For starters, machine learning (ML) models are big, requiring large pools of memory to load up even before we get to running them. Even compressed models occupy several gigabytes of RAM, giving them a bigger memory footprint than many mobile games. Secondly, running an ML model efficiently requires more unique arithmetic logic blocks than your typical CPU or GPU, as well as support for small integer number formats like INT8 and INT4. In other words, you ideally need a specialized processor to run these models in real-time.
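To put rough numbers on that memory footprint, here is a minimal back-of-the-envelope sketch. The 7-billion-parameter model size is an assumption picked purely for illustration and does not refer to any specific model mentioned here. The function name `weight_footprint_gb` is likewise hypothetical. It counts only the stored weights and ignores activations and runtime overhead, so real-world usage would be higher.

```python
# Bits used to store a single weight in each number format.
BITS_PER_WEIGHT = {"FP32": 32, "FP16": 16, "INT8": 8, "INT4": 4}


def weight_footprint_gb(num_params: int, fmt: str) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    total_bits = num_params * BITS_PER_WEIGHT[fmt]
    return total_bits / 8 / 1e9


# ASSUMPTION: a hypothetical 7-billion-parameter model, for illustration only.
ASSUMED_PARAMS = 7_000_000_000

for fmt in BITS_PER_WEIGHT:
    print(f"{fmt}: {weight_footprint_gb(ASSUMED_PARAMS, fmt):.1f} GB")
```

Under these assumptions, dropping from 32-bit floats to INT4 shrinks the weights from roughly 28 GB to roughly 3.5 GB, which is why small integer formats matter so much on phones with limited RAM.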

For example, try running Stable Diffusion image generation on a powerful modern desktop-grade CPU; it takes several minutes to produce a result. That works, but it’s not useful if you want an image in a hurry. Older and lower-power CPUs, like those found in phones, just aren’t cut out for this sort of real-time work. There’s a reason why NVIDIA is in the business of selling AI accelerator cards and why flagship smartphone processors increasingly tout their AI capabilities. However, smartphones remain constrained by their small power budgets and limited thermal headroom, meaning there’s a limit on what can currently be done on device.

Damien Wilde / Android Authority

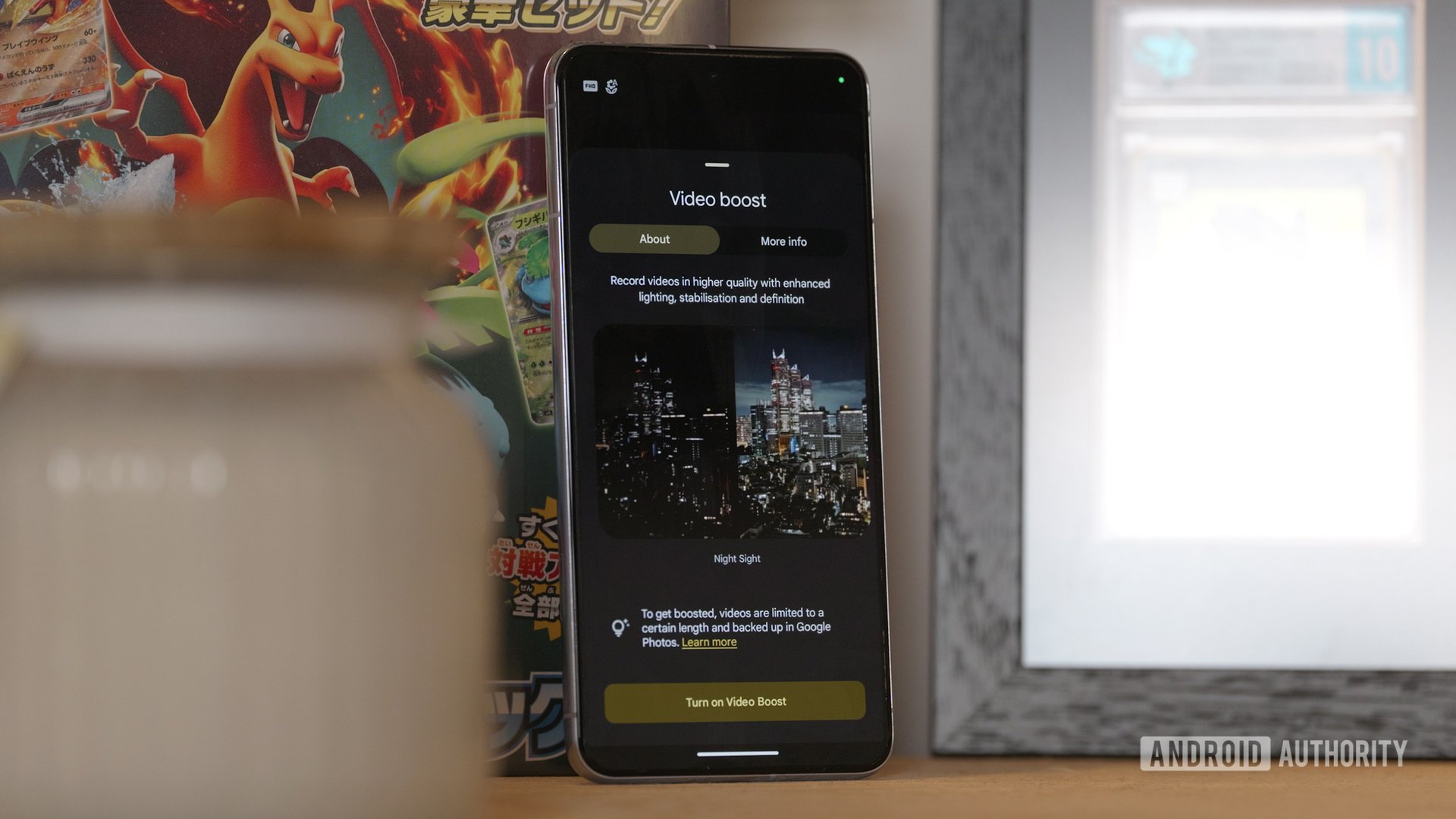

Nowhere is this more evident than in the latest Pixel and upcoming Galaxy smartphones. Both rely on new AI features to distinguish the new models from their predecessors and sport AI-accelerated processors to run useful tools, such as Call Screening and Magic Eraser, without the cloud. However, peer at the small print, and you’ll find that an internet connection is required for cloud processing for several of the more demanding AI features. Google’s Video Boost is a prime example, and Samsung has already clarified that some upcoming Galaxy AI features will run in the cloud, too.

Leveraging server power to perform tasks that can’t be done on our phones is obviously a useful tool, but there are a few limitations. The first is that these tools require an internet connection (obviously) and consume data, which might not be suitable on slow connections, limited data plans, or when roaming. Real-time language translation, for example, is no good on a connection with very high latency.

Local AI processing is more reliable and secure, but requires more advanced hardware.

Second, transmitting data, particularly personal information like your conversations or pictures, is a security risk. The big names claim to keep your data secure from third parties, but that’s never a guarantee. In addition, you’ll have to read the fine print to know if they’re using your uploads to train their algorithms further.

Third, these features can be revoked at any time. If Google decides that Video Boost is too expensive to run long-term or not popular enough to support, it could pull the plug, and a feature you bought the phone for is gone. Of course, the inverse is true: Companies can more easily add new cloud AI capabilities to devices, even those that lack strong AI hardware. So it’s not all bad.

Still, ideally, it’s faster, cheaper, and more secure to run AI tasks locally where possible. Plus, you get to keep the features for as long as the phone continues to work. On-device is better, which is why the ability to compress and run large language models, image generation, and other machine learning models on your phone is a prize that chip vendors are rushing to claim. Qualcomm’s latest flagship Snapdragon 8 Gen 3, MediaTek’s Dimensity 9300, Google’s Tensor G3, and Apple’s A17 Pro all talk a bigger AI game than previous models.

Cloud processing is a boon for affordable phones, but they might end up left behind in the on-device arms race.

However, these are all expensive flagship chips. While AI is already here for the latest flagship phones, mid-range phones are missing out, mainly because they lack the high-end AI silicon to run many features on-device. It will be a few years before the best AI capabilities come to mid-range chips.

Thankfully, mid-range devices can leverage cloud processing to bypass this deficit, but we haven’t seen an indication that brands are in a hurry to push these features down the price tiers yet. Google, for instance, baked the cost of its cloud features into the price of the Pixel 8 Pro, but the cheaper Pixel 8 is left without many of these tools (for now). While the gap between mid-range and flagship phones for day-to-day tasks has really narrowed in recent years, there’s a growing divide in the realm of AI capabilities.

The bottom line is that if you want the latest and greatest AI tools to run on-device (and you should!), we need even more powerful smartphone silicon. Thankfully, the latest flagship chips and smartphones, like the upcoming Samsung Galaxy S24 series, allow us to run a selection of powerful AI tools on-device. This will only become more common as the AI processor arms race heats up.