In SQL: The Universal Solvent for REST APIs we noticed how Steampipe’s suite of open-source plugins that translate REST API calls straight into SQL tables. These plugins had been, till not too long ago, tightly certain to the open-source engine and to the occasion of Postgres that it launches and controls. That led members of the Steampipe neighborhood to ask: “Can we use the plugins in our own Postgres databases?” Now the reply is sure—and extra—however let’s deal with Postgres first.

NOTE: Each Steampipe plugin ecosystem is now additionally a standalone foreign-data-wrapper extension for Postgres, a virtual-table extension for SQLite, and an export instrument.

Learn quicker. Dig deeper. See farther.

Using a Steampipe Plugin as a Standalone Postgres Foreign Data Wrapper (FDW)

Visit Steampipe downloads to seek out the installer on your OS, and run it to amass the Postgres FDW distribution of a plugin—on this case, the GitHub plugin. It’s considered one of (presently) 140 plugins obtainable on the Steampipe hub. Each plugin supplies a set of tables that map API calls to database tables—within the case of the GitHub plugin, 55 such tables. Each desk can seem in a FROM or JOIN clause; right here’s a question to pick columns from the GitHub challenge, filtering on a repository and creator.

choose

state,

updated_at,

title,

url

from

github_issue

the place

repository_full_name="turbot/steampipe"

and author_login = 'judell'

order by

updated_at descIf you’re utilizing Steampipe you possibly can set up the GitHub plugin like this:

steampipe plugin set up githubthen run the question within the Steampipe CLI, or in any Postgres consumer that may hook up with Steampipe’s occasion of Postgres.

But if you wish to do the identical factor in your personal occasion of Postgres you possibly can set up the plugin another way.

$ sudo /bin/sh -c "$(

curl -fsSL https://steampipe.io/set up/postgres.sh)"

Enter the plugin identify: github

Enter the model (newest):

Discovered:

- PostgreSQL model: 14

- PostgreSQL location: /usr/lib/postgresql/14

- Operating system: Linux

- System structure: x86_64

Based on the above, steampipe_postgres_github.pg14.linux_amd64.tar.gz

can be downloaded, extracted and put in at: /usr/lib/postgresql/14

Proceed with putting in Steampipe PostgreSQL FDW for model 14 at

/usr/lib/postgresql/14?

- Press 'y' to proceed with the present model.

- Press 'n' to customise your PostgreSQL set up listing

and choose a special model. (Y/n):

Downloading steampipe_postgres_github.pg14.linux_amd64.tar.gz...

###############################################################

############################ 100.0%

steampipe_postgres_github.pg14.linux_amd64/

steampipe_postgres_github.pg14.linux_amd64/steampipe_postgres_

github.so

steampipe_postgres_github.pg14.linux_amd64/steampipe_postgres_

github.management

steampipe_postgres_github.pg14.linux_amd64/steampipe_postgres_

github--1.0.sql

steampipe_postgres_github.pg14.linux_amd64/set up.sh

steampipe_postgres_github.pg14.linux_amd64/README.md

Download and extraction accomplished.

Installing steampipe_postgres_github in /usr/lib/postgresql/14...

Successfully put in steampipe_postgres_github extension!

Files have been copied to:

- Library listing: /usr/lib/postgresql/14/lib

- Extension listing: /usr/share/postgresql/14/extension/Now hook up with your server as standard, utilizing psql or one other consumer, most usually because the postgres consumer. Then run these instructions that are typical for any Postgres international knowledge wrapper. As with all Postgres extensions, you begin like this:

CREATE EXTENSION steampipe_postgres_fdw_github;To use a international knowledge wrapper, you first create a server:

CREATE SERVER steampipe_github FOREIGN DATA WRAPPER

steampipe_postgres_github OPTIONS (config 'token="ghp_..."');Use OPTIONS to configure the extension to make use of your GitHub entry token. (Alternatively, the usual surroundings variables used to configure a Steampipe plugin—it’s simply GITHUB_TOKEN on this case—will work should you set them earlier than beginning your occasion of Postgres.)

The tables offered by the extension will stay in a schema, so outline one:

CREATE SCHEMA github;Now import the schema outlined by the international server into the native schema you simply created:

IMPORT FOREIGN SCHEMA github FROM SERVER steampipe_github INTO github;Now run a question!

The international tables offered by the extension stay within the github schema, so by default you’ll discuss with tables like github.github_my_repository. If you set search_path="github", although, the schema turns into non-compulsory and you possibly can write queries utilizing unqualified desk names. Here’s a question we confirmed final time. It makes use of the github_search_repository which encapsulates the GitHub API for looking out repositories.

Suppose you’re in search of repos associated to PySpark. Here’s a question to seek out repos whose names match pyspark, and report just a few metrics that will help you gauge exercise and reputation.

choose

name_with_owner,

updated_at, -- how not too long ago up to date?

stargazer_count -- how many individuals starred the repo?

from

github_search_repository

the place

question = 'pyspark in:identify'

order by

stargazer_count desc

restrict 10;

+---------------------------------------+------------+---------------+

|name_with_owner |updated_at |stargazer_count|

+---------------------------------------+------------+---------------+

| AlexIoannides/pyspark-example-project | 2024-02-09 | 1324 |

| mahmoudparsian/pyspark-tutorial | 2024-02-11 | 1077 |

| spark-examples/pyspark-examples | 2024-02-11 | 1007 |

| palantir/pyspark-style-guide | 2024-02-12 | 924 |

| pyspark-ai/pyspark-ai | 2024-02-12 | 791 |

| lyhue1991/eat_pyspark_in_10_days | 2024-02-01 | 719 |

| CityInstitute/pyspark-tutorials | 2024-01-21 | 400 |

| krishnaik06/Pyspark-With-Python | 2024-02-11 | 400 |

| ekampf/PySpark-Boilerplate | 2024-02-11 | 388 |

| commoncrawl/cc-pyspark | 2024-02-12 | 361 |

+---------------------------------------+------------+---------------+If you’ve gotten plenty of repos, the primary run of that question will take just a few seconds. The second run will return outcomes immediately, although, as a result of the extension features a highly effective and refined cache.

And that’s all there may be to it! Every Steampipe plugin is now additionally a international knowledge wrapper that works precisely like this one. You can load a number of extensions with a view to be a part of throughout APIs. Of course you possibly can be a part of any of those API-sourced international tables with your personal Postgres tables. And to save lots of the outcomes of any question, you possibly can prepend “create table NAME as” or “create materialized view NAME as” to a question to persist outcomes as a desk or view.

Using a Steampipe Plugin as a SQLite Extension that Provides Virtual Tables

Visit Steampipe downloads to seek out the installer on your OS and run it to amass the SQLite distribution of the identical plugin.

$ sudo /bin/sh -c "$(curl -fsSL https://steampipe.io/set up/sqlite.sh)"

Enter the plugin identify: github

Enter model (newest):

Enter location (present listing):

Downloading steampipe_sqlite_github.linux_amd64.tar.gz...

############################################################

################ 100.0%

steampipe_sqlite_github.so

steampipe_sqlite_github.linux_amd64.tar.gz downloaded and

extracted efficiently at /dwelling/jon/steampipe-sqlite.Here’s the setup, and you possibly can place this code in ~/.sqliterc if you wish to run it each time you begin sqlite.

.load /dwelling/jon/steampipe-sqlite/steampipe_sqlite_github.so

choose steampipe_configure_github('

token="ghp_..."

');Now you possibly can run the identical question as above. Here, too, the outcomes are cached, so a second run of the question can be immediate.

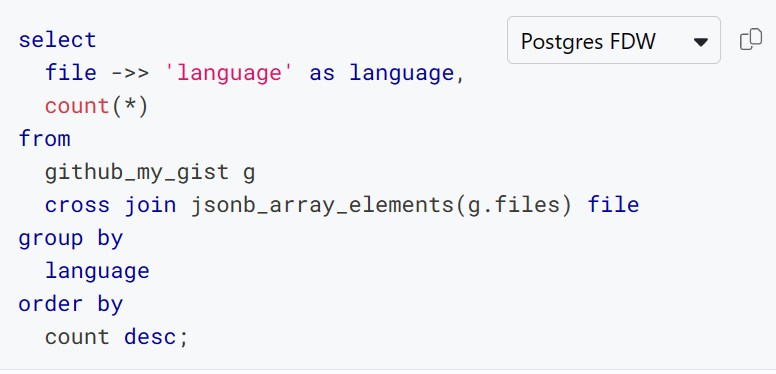

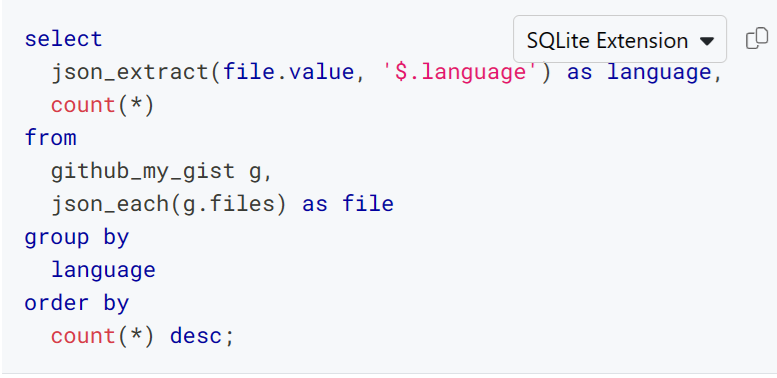

What concerning the variations between Postgres-flavored and SQLite-flavored SQL? The Steampipe hub is your good friend! For instance, listed below are Postgres and SQLite variants of a question that accesses a discipline inside a JSON column with a view to tabulate the languages related along with your gists.

Postgres

SQLite

The github_my_gist desk studies particulars about gists that belong to the GitHub consumer who’s authenticated to Steampipe. The language related to every gist lives in a JSONB column referred to as information, which accommodates a listing of objects like this.

{

"measurement": 24541,

"sort": "textual content/markdown",

"raw_url": "https://gist.githubusercontent.com/judell/49d66ca2a5d2a3b

"filename": "steampipe-readme-update.md",

"language": "Markdown"

}The capabilities wanted to venture that record as rows differ: in Postgres you employ jsonb_array_elements and in SQLite it’s json_each.

As with Postgres extensions, you possibly can load a number of SQLite extensions with a view to be a part of throughout APIs. You can be a part of any of those API-sourced international tables with your personal SQLite tables. And you possibly can prepend create desk NAME as to a question to persist outcomes as a desk.

Using a Steampipe Plugin as a Standalone Export Tool

Visit Steampipe downloads to seek out the installer on your OS, and run it to amass the export distribution of a plugin—once more, we’ll illustrate utilizing the GitHub plugin.

$ sudo /bin/sh -c "$(curl -fsSL https://steampipe.io/set up/export.sh)"

Enter the plugin identify: github

Enter the model (newest):

Enter location (/usr/native/bin):

Created short-term listing at /tmp/tmp.48QsUo6CLF.

Downloading steampipe_export_github.linux_amd64.tar.gz...

##########################################################

#################### 100.0%

Deflating downloaded archive

steampipe_export_github

Installing

Applying needed permissions

Removing downloaded archive

steampipe_export_github was put in efficiently to

/usr/native/bin

$ steampipe_export_github -h

Export knowledge utilizing the github plugin.

Find detailed utilization data together with desk names,

column names, and examples on the Steampipe Hub:

https://hub.steampipe.io/plugins/turbot/github

Usage:

steampipe_export_github TABLE_NAME [flags]

Flags:

--config string Config file knowledge

-h, --help assist for steampipe_export_github

--limit int Limit knowledge

--output string Output format: csv, json or jsonl

(default "csv")

--select strings Column knowledge to show

--where stringArray the place clause knowledgeThere’s no SQL engine within the image right here, this instrument is only an exporter. To export all of your gists to a JSON file:

steampipe_export_github github_my_gist --output json > gists.jsonTo choose just some columns and export to a CSV file:

steampipe_export_github github_my_gist --output csv --select

"description,created_at,html_url" > gists.csvYou can use --limit to restrict the rows returned, and --where to filter them, however largely you’ll use this instrument to shortly and simply seize knowledge that you simply’ll therapeutic massage elsewhere, for instance, in a spreadsheet.

Tap into the Steampipe Plugin Ecosystem

Steampipe plugins aren’t simply uncooked interfaces to underlying APIs. They use tables to mannequin these APIs in helpful methods. For instance, the github_my_repository desk exemplifies a design sample that applies constantly throughout the suite of plugins. From the GitHub plugin’s documentation:

You can personal repositories individually, or you possibly can share possession of repositories with different individuals in a company. The

github_my_repositorydesk will record repos that you simply personal, that you simply collaborate on, or that belong to your organizations. To question ANY repository, together with public repos, use thegithub_repositorydesk.

Other plugins comply with the identical sample. For instance, the Microsoft 365 plugin supplies each microsoft_my_mail_message and microsoft_mail_message, and the plugin supplies googleworkspace_my_gmail_message and googleworkspace_gmail. Where doable, plugins consolidate views of sources from the attitude of an authenticated consumer.

While plugins usually present tables with mounted schemas, that’s not at all times the case. Dynamic schemas, carried out by the Airtable, CSV, Kubernetes, and Salesforce plugins (amongst others) are one other key sample. Here’s a CSV instance utilizing a standalone Postgres FDW.

IMPORT FOREIGN SCHEMA csv FROM SERVER steampipe_csv INTO csv

OPTIONS(config 'paths=["/home/jon/csv"]');Now all of the .csv information in /dwelling/jon/csv will automagically be Postgres international tables. Suppose you retain observe of legitimate homeowners of EC2 cases in a file referred to as ec2_owner_tags. Here’s a question towards the corresponding desk.

choose * from csv.ec2_owner_tags;

proprietor | _ctx

----------------+----------------------------

Pam Beesly | {"connection_name": "csv"}

Dwight Schrute | {"connection_name": "csv"}You may be a part of that desk with the AWS plugin’s aws_ec2_instance desk to report proprietor tags on EC2 cases which can be (or usually are not) listed within the CSV file.

choose

ec2.proprietor,

case

when csv.proprietor is null then 'false'

else 'true'

finish as is_listed

from

(choose distinct tags ->> 'proprietor' as proprietor

from aws.aws_ec2_instance) ec2

left be a part of

csv.ec2_owner_tags csv on ec2.proprietor = csv.proprietor;

proprietor | is_listed

----------------+-----------

Dwight Schrute | true

Michael Scott | falseAcross the suite of plugins there are greater than 2,300 pre-defined fixed-schema tables that you should utilize in these methods, plus a limiteless variety of dynamic tables. And new plugins are always being added by Turbot and by Steampipe’s open-source neighborhood. You can faucet into this ecosystem utilizing Steampipe or Turbot Pipes, from your personal Postgres or SQLite database, or straight from the command line.