In biomedicine, segmentation involves annotating pixels from an important structure in a medical image, like an organ or cell. Artificial intelligence models can help clinicians by highlighting pixels that may show signs of a certain disease or anomaly.

However, these models typically provide only one answer, while the problem of medical image segmentation is often far from black and white. Five expert human annotators might provide five different segmentations, perhaps disagreeing on the existence or extent of the borders of a nodule in a lung CT image.
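To make that disagreement concrete, a segmentation can be stored as a binary mask (1 for pixels inside the structure, 0 outside), and several annotators' masks can be compared pixel by pixel. The Python sketch below is purely illustrative and is not part of Tyche: the function names and the toy five-annotator data are hypothetical, and NumPy is assumed to be available.

```python
import numpy as np


def agreement_map(annotations: np.ndarray) -> np.ndarray:
    """Per-pixel fraction of annotators who marked the pixel as foreground.

    annotations: binary array of shape (num_annotators, H, W).
    Values strictly between 0 and 1 flag pixels the annotators disagree on.
    """
    return annotations.astype(float).mean(axis=0)


def disputed_fraction(annotations: np.ndarray) -> float:
    """Among pixels marked by at least one annotator, the share not marked by all."""
    agree = agreement_map(annotations)
    marked = agree > 0
    disputed = marked & (agree < 1)
    return float(disputed.sum() / max(int(marked.sum()), 1))


# Hypothetical toy data: five annotators outline a "nodule" on a 1 x 8 strip.
masks = np.array([
    [[0, 0, 1, 1, 1, 0, 0, 0]],
    [[0, 0, 1, 1, 1, 1, 0, 0]],
    [[0, 1, 1, 1, 1, 0, 0, 0]],
    [[0, 0, 0, 0, 0, 0, 0, 0]],  # this annotator sees no nodule at all
    [[0, 0, 1, 1, 0, 0, 0, 0]],
])
print(agreement_map(masks))
print(disputed_fraction(masks))  # 1.0
```

Because one annotator sees no nodule at all, every marked pixel is disputed here, which mirrors the disagreement about existence, not just extent, described above.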

“Having options can help in decision-making. Even just seeing that there is uncertainty in a medical image can influence someone’s decisions, so it is important to take this uncertainty into account,” says Marianne Rakic, an MIT computer science PhD candidate.

Rakic is lead author of a paper with others at MIT, the Broad Institute of MIT and Harvard, and Massachusetts General Hospital that introduces a new AI tool that can capture the uncertainty in a medical image.

Known as Tyche (named for the Greek divinity of chance), the system provides multiple plausible segmentations that each highlight slightly different areas of a medical image. A user can specify how many options Tyche outputs and select the most appropriate one for their purpose.

Importantly, Tyche can tackle new segmentation tasks without needing to be retrained. Training is a data-intensive process that involves showing a model many examples and requires extensive machine-learning experience.

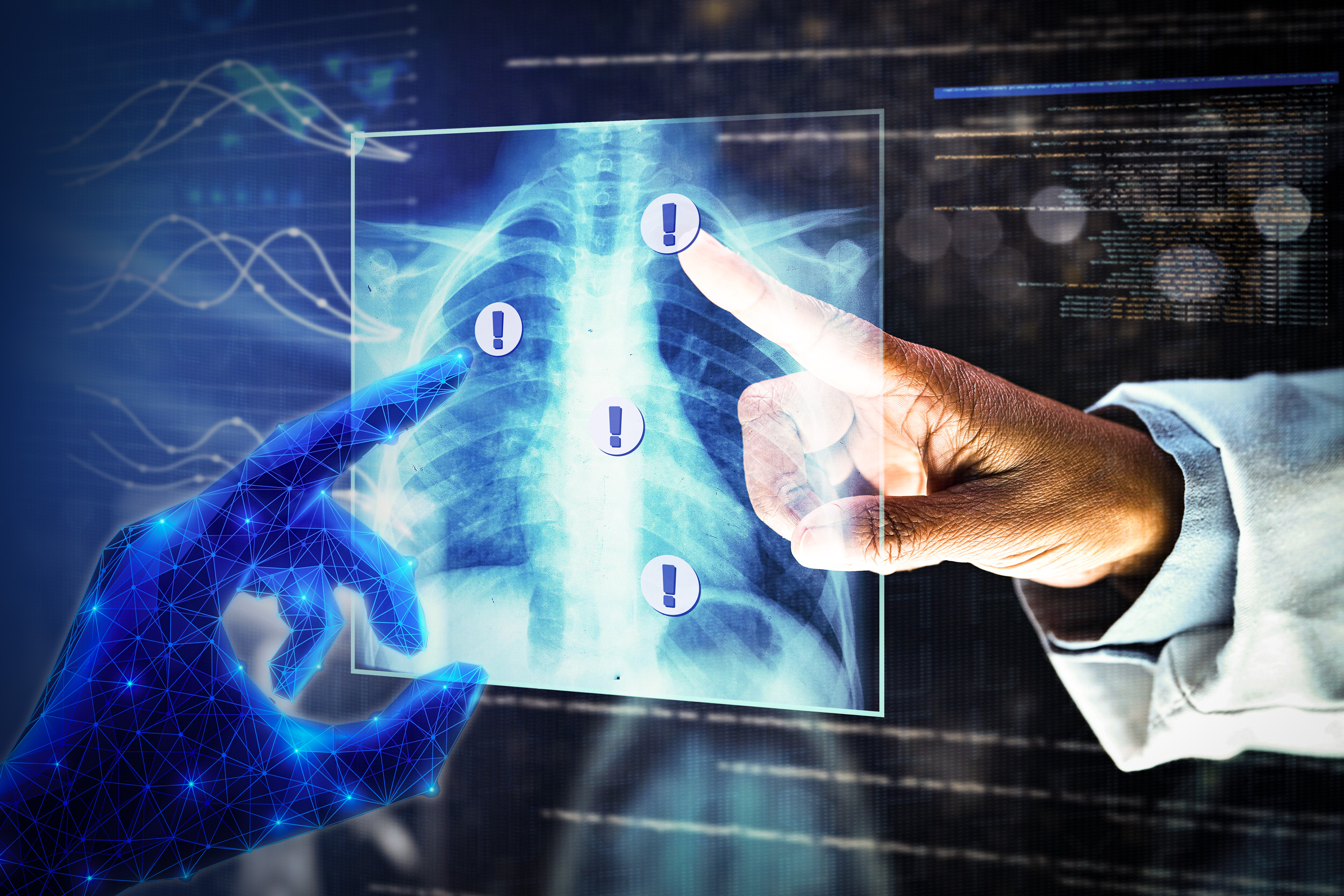

Because it doesn’t need retraining, Tyche could be easier for clinicians and biomedical researchers to use than some other methods. It could be applied “out of the box” for a variety of tasks, from identifying lesions in a lung X-ray to pinpointing anomalies in a brain MRI.

Ultimately, this system could improve diagnoses or aid in biomedical research by calling attention to potentially crucial information that other AI tools might miss.

“Ambiguity has been understudied. If your model completely misses a nodule that three experts say is there and two experts say is not, that is probably something you should pay attention to,” adds senior author Adrian Dalca, an assistant professor at Harvard Medical School and MGH, and a research scientist in the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL).

Their co-authors include Hallee Wong, a graduate student in electrical engineering and computer science; Jose Javier Gonzalez Ortiz PhD ’23; Beth Cimini, associate director for bioimage analysis at the Broad Institute; and John Guttag, the Dugald C. Jackson Professor of Computer Science and Electrical Engineering. Rakic will present Tyche at the IEEE Conference on Computer Vision and Pattern Recognition, where Tyche has been selected as a highlight.

Addressing ambiguity

AI systems for medical image segmentation typically use neural networks. Loosely based on the human brain, neural networks are machine-learning models comprising many interconnected layers of nodes, or neurons, that process data.

After speaking with collaborators at the Broad Institute and MGH who use these systems, the researchers realized that two major issues limit their effectiveness: the models cannot capture uncertainty, and they must be retrained for even a slightly different segmentation task.

Some methods try to overcome one pitfall, but tackling both problems with a single solution has proven especially tricky, Rakic says.

“If you want to take ambiguity into account, you often have to use an extremely complicated model. With the method we propose, our goal is to make it easy to use with a relatively small model so that it can make predictions quickly,” she says.

The researchers built Tyche by modifying a straightforward neural network architecture.

A user first feeds Tyche a few examples that show the segmentation task. For instance, examples could include several images of lesions in a heart MRI that have been segmented by different human experts, so the model can learn the task and see that there is ambiguity.

The researchers found that just 16 example images, called a “context set,” are enough for the model to make good predictions, but there is no limit to the number of examples one can use. The context set enables Tyche to solve new tasks without retraining.
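One way to picture a context set is as a list of (image, expert mask) pairs that travels alongside the new image to be segmented. The sketch below shows only how such inputs might be packaged: `ContextSet` and `build_inputs` are hypothetical names, and pairing the query with each example along the channel axis is an assumption for illustration, not a description of Tyche's actual input layer. The same image can appear several times with masks from different experts, which is how ambiguity would enter the context set.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class ContextSet:
    """Hypothetical container for the example images that define a task."""
    images: np.ndarray  # (N, H, W) example images
    masks: np.ndarray   # (N, H, W) expert segmentations of those images

    def __post_init__(self) -> None:
        if self.images.shape != self.masks.shape:
            raise ValueError("images and masks must have the same shape")


def build_inputs(query: np.ndarray, context: ContextSet) -> np.ndarray:
    """Pair the query image with every context example.

    Returns shape (N, 3, H, W): channel 0 is the query image, channel 1 a
    context image, channel 2 that image's mask. A network that processes the
    N pairs and pools over them accepts any N, so a new task only needs a new
    context set, not new weights (an assumption of this sketch).
    """
    n = context.images.shape[0]
    q = np.broadcast_to(query, (n,) + query.shape)
    return np.stack([q, context.images, context.masks], axis=1)


rng = np.random.default_rng(0)
ctx = ContextSet(
    images=rng.random((16, 64, 64)),
    masks=(rng.random((16, 64, 64)) > 0.5).astype(float),
)
x = build_inputs(rng.random((64, 64)), ctx)
print(x.shape)  # (16, 3, 64, 64)
```

In this layout, supplying 16 examples or 64 changes only the leading dimension of the input array.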

For Tyche to capture uncertainty, the researchers modified the neural network so it outputs multiple predictions based on one medical image input and the context set. They adjusted the network’s layers so that, as data move from layer to layer, the candidate segmentations produced at each step can “talk” to each other and to the examples in the context set.

In this way, the model can ensure that the candidate segmentations are all a bit different but still solve the task.

“It is like rolling dice. If your model can roll a two, three, or four, but doesn’t know you have a two and a four already, then either one might appear again,” she says.
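The article does not specify exactly how the candidates “talk.” Below is a minimal sketch of one common set-interaction pattern, assuming each of K candidates carries its own feature map: at every layer, each candidate receives the average of all candidates' features as extra channels, so the next layer can react to what its siblings currently look like, much like knowing which dice values have already come up. The mean pooling, the `interact` and `toy_layer` names, and the 1x1 channel-mixing layer are illustrative assumptions in NumPy, not Tyche's architecture.

```python
import numpy as np


def interact(candidates: np.ndarray) -> np.ndarray:
    """One hypothetical 'communication' step across K candidate feature maps.

    candidates: (K, C, H, W). Each candidate gets the mean of all K candidates
    appended as extra channels. Output shape: (K, 2C, H, W).
    """
    shared = candidates.mean(axis=0, keepdims=True)     # (1, C, H, W)
    shared = np.broadcast_to(shared, candidates.shape)  # (K, C, H, W)
    return np.concatenate([candidates, shared], axis=1)


def toy_layer(x: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """1x1 'convolution' that mixes channels, then ReLU. weights: (C_out, C_in)."""
    return np.maximum(np.einsum("oc,kchw->kohw", weights, x), 0.0)


rng = np.random.default_rng(0)
K, C, H, W = 5, 4, 32, 32
# Independent random features per candidate let the K candidates start out different.
feats = rng.normal(size=(K, C, H, W))
w = rng.normal(size=(C, 2 * C))
out = toy_layer(interact(feats), w)
print(out.shape)  # (5, 4, 32, 32)
```

Without the `interact` step, each candidate would be processed in isolation and nothing would discourage two of them from converging on the same answer.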

They also modified the training process so that the model is rewarded for maximizing the quality of its best prediction.
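Rewarding only the best prediction can be written as a “best-candidate” loss: score every candidate against an expert mask and keep only the lowest loss. The NumPy sketch below assumes soft Dice as the quality measure, which the article does not name, and a real training loop would use a differentiable framework rather than NumPy.

```python
import numpy as np


def soft_dice(pred: np.ndarray, target: np.ndarray, eps: float = 1e-6) -> float:
    """Soft Dice score between a probability map and a binary mask (1 = perfect)."""
    inter = float((pred * target).sum())
    return (2 * inter + eps) / (float(pred.sum() + target.sum()) + eps)


def best_candidate_loss(candidates: np.ndarray, target: np.ndarray) -> float:
    """Loss = 1 - Dice of the single best of K candidates.

    candidates: (K, H, W) probability maps; target: (H, W) expert mask.
    Only the best candidate determines the loss, so the others are free to
    cover other plausible readings of the image.
    """
    scores = [soft_dice(c, target) for c in candidates]
    return 1.0 - max(scores)


target = np.array([[1, 1, 0, 0]], dtype=float)
cands = np.array([[[1, 1, 0, 0]], [[0, 0, 1, 1]]], dtype=float)
print(best_candidate_loss(cands, target))  # 0.0
```

Averaging the two candidates' losses instead would give about 0.5 here and pull both candidates toward the same mask; taking the best one leaves the second candidate free to represent a different plausible segmentation.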

If the user asked for five predictions, at the end they can see all five medical image segmentations Tyche produced, even though one might be better than the others.

The researchers also developed a version of Tyche that can be used with an existing, pretrained model for medical image segmentation. In this case, Tyche enables the model to output multiple candidates by making slight transformations to images.
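This version resembles test-time augmentation: run the same network several times on slightly altered copies of the image, undo each alteration on the output, and keep every result as a candidate. In the sketch below, the choice of horizontal flips plus small Gaussian noise is an assumption (the article says only “slight transformations”), and `threshold_model` is a hypothetical stand-in for a real pretrained segmentation network.

```python
from typing import Callable

import numpy as np

# Assumed interface: a pretrained model maps an (H, W) image to an (H, W)
# foreground-probability map. A real network would be wrapped to match.
Model = Callable[[np.ndarray], np.ndarray]


def tta_candidates(model: Model, image: np.ndarray, k: int,
                   noise_std: float = 0.05, seed: int = 0) -> np.ndarray:
    """Return k candidate segmentations of shape (k, H, W) from one model.

    Candidate i sees the image with small Gaussian noise added and, for odd i,
    a horizontal flip. Each flip is undone on the output so all candidates
    stay aligned with the original image.
    """
    rng = np.random.default_rng(seed)
    outs = []
    for i in range(k):
        flip = i % 2 == 1
        x = image[:, ::-1] if flip else image
        x = x + rng.normal(scale=noise_std, size=x.shape)
        y = model(x)
        outs.append(y[:, ::-1] if flip else y)
    return np.stack(outs)


def threshold_model(x: np.ndarray) -> np.ndarray:
    """Stand-in for a pretrained network: foreground wherever intensity > 0.5."""
    return (x > 0.5).astype(float)


img = np.tile(np.linspace(0.0, 1.0, 32), (16, 1))  # left-to-right intensity ramp
tta = tta_candidates(threshold_model, img, k=5)
print(tta.shape)  # (5, 16, 32)
print(tta.mean(axis=0)[0, 14:18])  # per-pixel agreement near the 0.5 boundary
```

Pixels far from the intensity boundary come out the same in every candidate, while pixels near 0.5 can flip between candidates, so averaging the candidates gives a rough per-pixel uncertainty map.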

Better, faster predictions

When the researchers tested Tyche with datasets of annotated medical images, they found that its predictions captured the diversity of human annotators, and that its best predictions were better than any from the baseline models. Tyche also ran faster than most of the models.

“Outputting multiple candidates and ensuring they are different from one another really gives you an edge,” Rakic says.

The researchers also observed that Tyche could outperform more complex models that had been trained using a large, specialized dataset.

For future work, they plan to try using a more flexible context set, perhaps including text or multiple types of images. In addition, they want to explore methods that could improve Tyche’s worst predictions and enhance the system so it can recommend the best segmentation candidates.

This research is funded, in part, by the National Institutes of Health, the Eric and Wendy Schmidt Center at the Broad Institute of MIT and Harvard, and Quanta Computer.