Imagine driving through a tunnel in an autonomous vehicle, but unbeknownst to you, a crash has stopped traffic up ahead. Normally, you'd need to rely on the car in front of you to know you should start braking. But what if your vehicle could see around the car ahead and apply the brakes even sooner?

Researchers from MIT and Meta have developed a computer vision technique that could someday enable an autonomous vehicle to do just that.

They have introduced a method that creates physically accurate 3D models of an entire scene, including areas blocked from view, using images from a single camera position. Their technique uses shadows to determine what lies in obstructed portions of the scene.

They call their approach PlatoNeRF, based on Plato's allegory of the cave, a passage from the Greek philosopher's "Republic" in which prisoners chained in a cave discern the reality of the outside world based on shadows cast on the cave wall.

By combining lidar (light detection and ranging) technology with machine learning, PlatoNeRF can generate more accurate reconstructions of 3D geometry than some existing AI techniques. Additionally, PlatoNeRF is better at smoothly reconstructing scenes where shadows are hard to see, such as those with high ambient light or dark backgrounds.

In addition to improving the safety of autonomous vehicles, PlatoNeRF could make AR/VR headsets more efficient by enabling a user to model the geometry of a room without the need to walk around taking measurements. It could also help warehouse robots find items in cluttered environments faster.

“Our key idea was taking these two things that have been done in different disciplines before and pulling them together: multibounce lidar and machine learning. It turns out that when you bring these two together, that is when you find a lot of new opportunities to explore and get the best of both worlds,” says Tzofi Klinghoffer, an MIT graduate student in media arts and sciences, affiliate of the MIT Media Lab, and lead author of a paper on PlatoNeRF.

Klinghoffer wrote the paper with his advisor, Ramesh Raskar, associate professor of media arts and sciences and leader of the Camera Culture Group at MIT; senior author Rakesh Ranjan, a director of AI research at Meta Reality Labs; as well as Siddharth Somasundaram at MIT, and Xiaoyu Xiang, Yuchen Fan, and Christian Richardt at Meta. The research will be presented at the Conference on Computer Vision and Pattern Recognition.

Shedding light on the problem

Reconstructing a full 3D scene from one camera viewpoint is a complex problem.

Some machine-learning approaches employ generative AI models that try to guess what lies in the occluded regions, but these models can hallucinate objects that aren't really there. Other approaches attempt to infer the shapes of hidden objects using shadows in a color image, but these methods can struggle when shadows are hard to see.

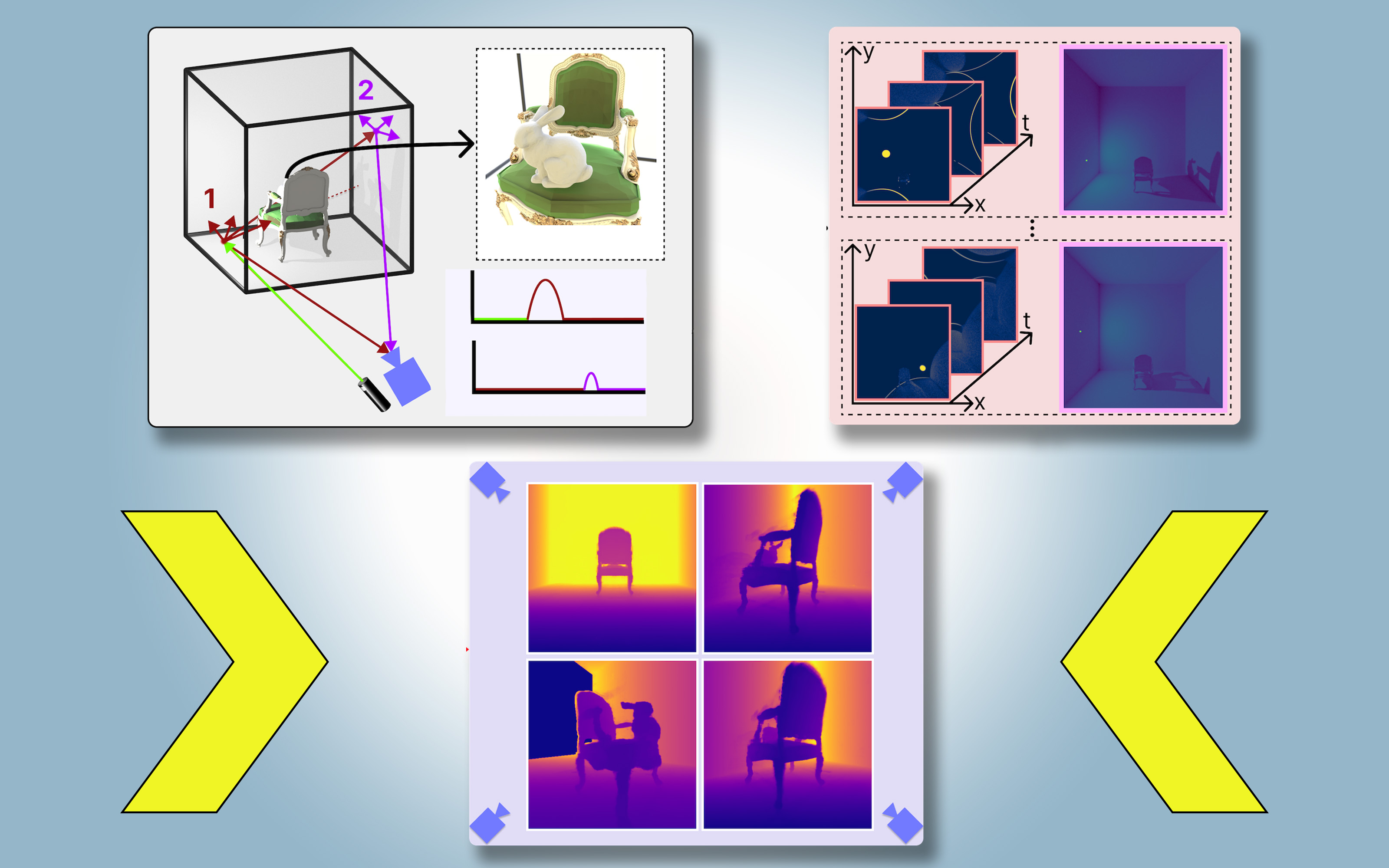

For PlatoNeRF, the MIT researchers built off these approaches using a new sensing modality called single-photon lidar. Lidars map a 3D scene by emitting pulses of light and measuring the time it takes that light to bounce back to the sensor. Because single-photon lidars can detect individual photons, they provide higher-resolution data.
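The time-of-flight principle described here reduces to a short formula: the distance to a surface is the speed of light times the round-trip time, divided by two, because the pulse travels out and back. A single-photon detector records individual photon arrival times rather than one clean echo, so a common way to recover the echo is to histogram those times and take the most populated bin. The Python sketch below illustrates both steps; the bin width, function names, and sample timestamps are illustrative assumptions, not parameters from the PlatoNeRF work.

```python
from collections import Counter

# Speed of light in a vacuum, in meters per second.
SPEED_OF_LIGHT = 299_792_458.0


def depth_from_round_trip(time_s: float) -> float:
    """One-way distance for a direct return: the pulse goes out and back,
    so the surface is half the total path length away."""
    if time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * time_s / 2.0


def depth_from_photon_timestamps(timestamps_s, bin_width_s=100e-12):
    """Estimate depth from individual photon arrival times (hypothetical helper).

    Photons are binned into a time-of-flight histogram; the most populated
    bin is taken as the surface return and its center is converted to depth.
    """
    if not timestamps_s:
        raise ValueError("need at least one photon timestamp")
    histogram = Counter(int(t // bin_width_s) for t in timestamps_s)
    peak_bin, _count = histogram.most_common(1)[0]
    peak_time = (peak_bin + 0.5) * bin_width_s
    return depth_from_round_trip(peak_time)


# Three photons cluster near 20 ns; two stray detections (ambient light or noise) do not.
photons = [20.01e-9, 20.02e-9, 20.03e-9, 5e-9, 35e-9]
print(f"estimated depth: {depth_from_photon_timestamps(photons):.3f} m")  # roughly 3 m
```

Taking the histogram peak is what lets isolated ambient-light detections be ignored; a real system would also model the pulse shape and detector timing jitter, which this sketch omits.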

The researchers use a single-photon lidar to illuminate a target point in the scene. Some light bounces off that point and returns directly to the sensor. However, most of the light scatters and bounces off other objects before returning to the sensor. PlatoNeRF relies on these second bounces of light.

By calculating how long it takes light to bounce twice and then return to the lidar sensor, PlatoNeRF captures additional information about the scene, including depth. The second bounce of light also contains information about shadows.
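The two-bounce timing can be made concrete with a little geometry. Assume the laser and sensor positions are known, the illuminated point has already been located from its direct return, and each sensor pixel looks along a known unit direction. The two-bounce arrival time then fixes the total path from the laser to the lit point, on to a scene point, and back to the sensor, which leaves one unknown: how far along the pixel's ray the second-bounce point sits. Squaring the path-length equation gives a closed-form answer. This is a minimal, noise-free sketch of that relationship; the function and parameter names are hypothetical, and it is not the PlatoNeRF implementation.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _norm(a):
    return math.sqrt(_dot(a, a))


def depth_from_two_bounce(t, laser_origin, lit_point, sensor_origin, pixel_dir):
    """Distance r along a pixel ray to the second-bounce point x.

    Path: laser -> lit_point (1st bounce) -> x (2nd bounce) -> sensor,
    with x = sensor_origin + r * pixel_dir and pixel_dir of unit length.
    Solving |lit_point - x| + r = D for r, where D is the path length left
    after the known laser -> lit_point leg, gives
        r = (D**2 - |q|**2) / (2 * (D - pixel_dir . q)),  q = lit_point - sensor_origin.
    """
    remaining = SPEED_OF_LIGHT * t - _norm(_sub(lit_point, laser_origin))
    q = _sub(lit_point, sensor_origin)
    denom = 2.0 * (remaining - _dot(pixel_dir, q))
    if denom <= 0:
        raise ValueError("timing is inconsistent with this pixel ray")
    r = (remaining ** 2 - _dot(q, q)) / denom
    # Squaring can admit a spurious root; the lit_point -> x leg must be non-negative.
    if r < 0 or r > remaining:
        raise ValueError("no physical second-bounce point for this timing")
    return r


# Laser and sensor co-located at the origin; the laser lights a point 2 m ahead.
origin = (0.0, 0.0, 0.0)
lit = (0.0, 0.0, 2.0)
pixel = (1 / math.sqrt(2), 0.0, 1 / math.sqrt(2))  # unit direction of one sensor pixel
# Simulated arrival time for a scene point at (1, 0, 1): path = 2 + sqrt(2) + sqrt(2) meters.
t_two_bounce = (2 + 2 * math.sqrt(2)) / SPEED_OF_LIGHT
print(depth_from_two_bounce(t_two_bounce, origin, lit, origin, pixel))  # about 1.414 m
```

Geometrically, a single two-bounce time constrains the scene point to an ellipsoid whose foci are the lit point and the sensor; the pixel direction picks out one point on it, which is why each pixel yields depth.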

The system traces the secondary rays of light (those that bounce off the target point to other points in the scene) to determine which points lie in shadow, indicated by an absence of light. Based on the location of these shadows, PlatoNeRF can infer the geometry of hidden objects.
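Whether a scene point falls in shadow is, at heart, a visibility test: the point receives second-bounce light only if the straight segment from the lit point to it is unobstructed. The sketch below shows that forward test in Python, with hidden objects idealized as spheres purely for simplicity. PlatoNeRF instead learns the occluding geometry, so the sphere occluders, the epsilon tolerance, and the function names here are illustrative assumptions.

```python
import math


def segment_hits_sphere(a, b, center, radius, eps=1e-9):
    """True if the segment from a to b passes through a sphere.

    Points on the segment are a + s * (b - a) for s in [0, 1]. Substituting into
    |p - center|**2 = radius**2 gives a quadratic in s; the segment is blocked if
    the interval between its roots overlaps the open range (0, 1).
    """
    d = [bi - ai for ai, bi in zip(a, b)]
    f = [ai - ci for ai, ci in zip(a, center)]
    qa = sum(x * x for x in d)
    qb = 2.0 * sum(x * y for x, y in zip(f, d))
    qc = sum(x * x for x in f) - radius ** 2
    disc = qb * qb - 4.0 * qa * qc
    if qa == 0.0 or disc < 0.0:
        return False
    root = math.sqrt(disc)
    s1 = (-qb - root) / (2.0 * qa)
    s2 = (-qb + root) / (2.0 * qa)
    # eps keeps the surfaces at the segment endpoints from shadowing themselves.
    return s1 < 1.0 - eps and s2 > eps


def in_shadow(lit_point, scene_point, occluders):
    """A scene point is shadowed if any occluder blocks the lit point -> scene point path."""
    return any(segment_hits_sphere(lit_point, scene_point, c, r) for c, r in occluders)


# One hypothetical hidden sphere sitting between the lit point and a floor point.
occluders = [((2.0, 0.0, 0.0), 0.5)]
print(in_shadow((0.0, 0.0, 0.0), (4.0, 0.0, 0.0), occluders))  # True
print(in_shadow((0.0, 0.0, 0.0), (4.0, 3.0, 0.0), occluders))  # False
```

Reconstruction runs this test in reverse: the measurements say which points are dark, and the system searches for geometry that would make exactly those segments blocked.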

The lidar sequentially illuminates 16 points, capturing multiple images that are used to reconstruct the entire 3D scene.

“Every time we illuminate a point in the scene, we are creating new shadows. Because we have all these different illumination sources, we have a lot of light rays shooting around, so we are carving out the region that is occluded and lies beyond the visible eye,” Klinghoffer says.
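Klinghoffer's description of "carving out" the occluded region resembles classical shadow or space carving: every light path observed to arrive must have crossed empty space, so each new illumination point removes more candidate volume, and whatever no lit path ever crosses remains a candidate for hidden geometry. The Python sketch below shows that classical idea on a coarse 2D grid. It is a swapped-in illustration, not PlatoNeRF's method, which represents the scene with a NeRF rather than a voxel grid; the grid size, sampling density, illumination layout, and the `is_lit` callback are all hypothetical.

```python
def cells_on_segment(a, b, samples=200):
    """Grid cells (i, j) touched by the 2D segment a -> b, found by dense sampling.

    Cell (i, j) covers [i, i + 1) x [j, j + 1). Sampling can miss a cell that the
    segment only clips at a corner; an exact grid traversal would avoid that.
    """
    cells = set()
    for k in range(samples + 1):
        s = k / samples
        x = a[0] + s * (b[0] - a[0])
        y = a[1] + s * (b[1] - a[1])
        cells.add((int(x), int(y)))
    return cells


def carve_free_space(light_points, scene_points, is_lit):
    """Mark as empty every cell crossed by a path on which light was observed.

    is_lit(p, x) stands in for the measurement: True if second-bounce light from
    illumination point p was detected at scene point x (x is not in shadow).
    """
    free = set()
    for p in light_points:
        for x in scene_points:
            if is_lit(p, x):
                free |= cells_on_segment(p, x)
    return free


# Hypothetical 10 x 10 room with one hidden occupied cell; illumination points along
# the bottom wall, observed scene points along the top wall.
occupied = {(5, 5)}


def simulated_measurement(p, x):
    return not (cells_on_segment(p, x) & occupied)


lights = [(i + 0.5, 0.5) for i in range(1, 10, 2)]
scene = [(i + 0.5, 9.5) for i in range(10)]
free = carve_free_space(lights, scene, simulated_measurement)

print((5, 5) in free)  # False: no lit path ever crosses the hidden cell
print(len(free), "of 100 cells carved as empty")
```

Cells left uncarved are only candidates: with few illumination points, unobserved empty space is indistinguishable from hidden geometry, which is why adding more illumination points tightens the reconstruction.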

A winning combination

Key to PlatoNeRF is the combination of multibounce lidar with a special type of machine-learning model known as a neural radiance field (NeRF). A NeRF encodes the geometry of a scene into the weights of a neural network, which gives the model a strong ability to interpolate, or estimate, novel views of a scene.

This ability to interpolate also leads to highly accurate scene reconstructions when combined with multibounce lidar, Klinghoffer says.
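In a NeRF, a network maps a 3D position (and, for color, a viewing direction) to a density and a color, and geometry is read out by accumulating density along rays. The sketch below shows the standard NeRF volume-rendering quadrature that turns per-sample densities along one ray into an expected ray-termination depth. The densities are hand-written stand-ins for network outputs, and the sketch does not reproduce how PlatoNeRF renders two-bounce lidar measurements, which the article does not describe.

```python
import math


def render_depth(sample_ts, densities, far_delta=1e10):
    """Expected depth along one ray from densities at increasing distances.

    Standard NeRF quadrature:
        alpha_i = 1 - exp(-sigma_i * delta_i)
        T_i     = prod_{j < i} (1 - alpha_j)      # light surviving to sample i
        w_i     = T_i * alpha_i                    # chance the ray stops at sample i
        depth   = sum_i w_i * t_i
    """
    if len(sample_ts) != len(densities):
        raise ValueError("need one density per sample")
    weights = []
    transmittance = 1.0
    for i, sigma in enumerate(densities):
        # Spacing to the next sample; the last sample gets a very large interval.
        delta = sample_ts[i + 1] - sample_ts[i] if i + 1 < len(sample_ts) else far_delta
        alpha = 1.0 - math.exp(-sigma * delta)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    depth = sum(w * t for w, t in zip(weights, sample_ts))
    return depth, weights


# Empty space everywhere except a dense surface at t = 2.0 m (a stand-in for what a
# trained network would predict along this ray).
ts = [0.5 * k for k in range(1, 11)]  # 0.5, 1.0, ..., 5.0 m
sigmas = [1000.0 if t == 2.0 else 0.0 for t in ts]
depth, weights = render_depth(ts, sigmas)
print(round(depth, 3))  # 2.0
```

Because the density comes from a continuous function rather than a fixed grid, it can be queried at any point, including points no measurement constrained directly; that is the interpolation ability the passage refers to.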

“The biggest challenge was figuring out how to combine these two things. We really had to think about the physics of how light is transporting with multibounce lidar and how to model that with machine learning,” he says.

They compared PlatoNeRF to two common alternative methods, one that only uses lidar and the other that only uses a NeRF with a color image.

They found that their method was able to outperform both techniques, especially when the lidar sensor had lower resolution. This would make their approach more practical to deploy in the real world, where lower-resolution sensors are common in commercial devices.

“About 15 years ago, our group invented the first camera to ‘see’ around corners, that works by exploiting multiple bounces of light, or ‘echoes of light.’ Those techniques used special lasers and sensors, and used three bounces of light. Since then, lidar technology has become more mainstream, that led to our research on cameras that can see through fog. This new work uses only two bounces of light, which means the signal to noise ratio is very high, and 3D reconstruction quality is impressive,” Raskar says.

In the future, the researchers want to try tracking more than two bounces of light to see how that could improve scene reconstructions. In addition, they are interested in applying more deep learning techniques and combining PlatoNeRF with color image measurements to capture texture information.

“While camera images of shadows have long been studied as a means to 3D reconstruction, this work revisits the problem in the context of lidar, demonstrating significant improvements in the accuracy of reconstructed hidden geometry. The work shows how clever algorithms can enable extraordinary capabilities when combined with ordinary sensors, including the lidar systems that many of us now carry in our pocket,” says David Lindell, an assistant professor in the Department of Computer Science at the University of Toronto, who was not involved with this work.