For more than 30 years, science photographer Felice Frankel has helped MIT professors, researchers, and students communicate their work visually. Over that time, she has seen many tools emerge to support the creation of compelling images. Some are helpful, and some are antithetical to the effort of producing a trustworthy and complete representation of the research. In a recent opinion piece published in the journal Nature, Frankel discusses the growing use of generative artificial intelligence (GenAI) in images and the challenges and implications it raises for communicating research. On a more personal note, she asks whether there will still be a place for a science photographer in the research community.

Q: You’ve mentioned that as soon as a photo is taken, the image can be considered “manipulated.” You have also manipulated some of your own images to create visuals that communicate the intended message more successfully. Where is the line between acceptable and unacceptable manipulation?

A: In the broadest sense, the decisions about how to frame and structure the content of an image, along with the choice of tools used to create it, are already a manipulation of reality. We need to remember that the image is merely a representation of the thing, not the thing itself. Decisions have to be made when creating the image. The critical issue is not to manipulate the data, and for most images, the data is the structure. For example, in an image I made some time ago, I digitally removed the petri dish in which a yeast colony was growing, to draw attention to the colony’s stunning morphology. The data in that image is the morphology of the colony, and I did not manipulate it. However, I always state in the text when I have done something to an image. I discuss the idea of image enhancement in my handbook, (*3*).

Q: What can researchers do to make sure their research is communicated accurately and ethically?

A: With the advent of AI, I see three main issues concerning visual representation. The first is the difference between illustration and documentation. The second is the ethics of digital manipulation. The third is a continuing need for researchers to be trained in visual communication. For years, I have been trying to develop a visual literacy program for current and future classes of science and engineering researchers. MIT has a communication requirement that mostly addresses writing, but what about the visual, which is no longer tangential to a journal submission? I’ll bet that most readers of scientific articles go straight to the figures after they read the abstract.

We need to require students to learn how to look critically at a published graph or image and decide whether something odd is going on with it. We need to discuss the ethics of “nudging” an image to look a certain predetermined way. In the article, I describe an incident in which a student altered one of my images (without asking me) to match what the student wanted to communicate visually. Of course I did not allow it, and I was disappointed that the ethics of such an alteration had not been considered. At the very least, we need to start conversations on campus. Better still, we should create a visual literacy requirement alongside the writing requirement.

Q: Generative AI is not going away. What do you see as the future of communicating science visually?

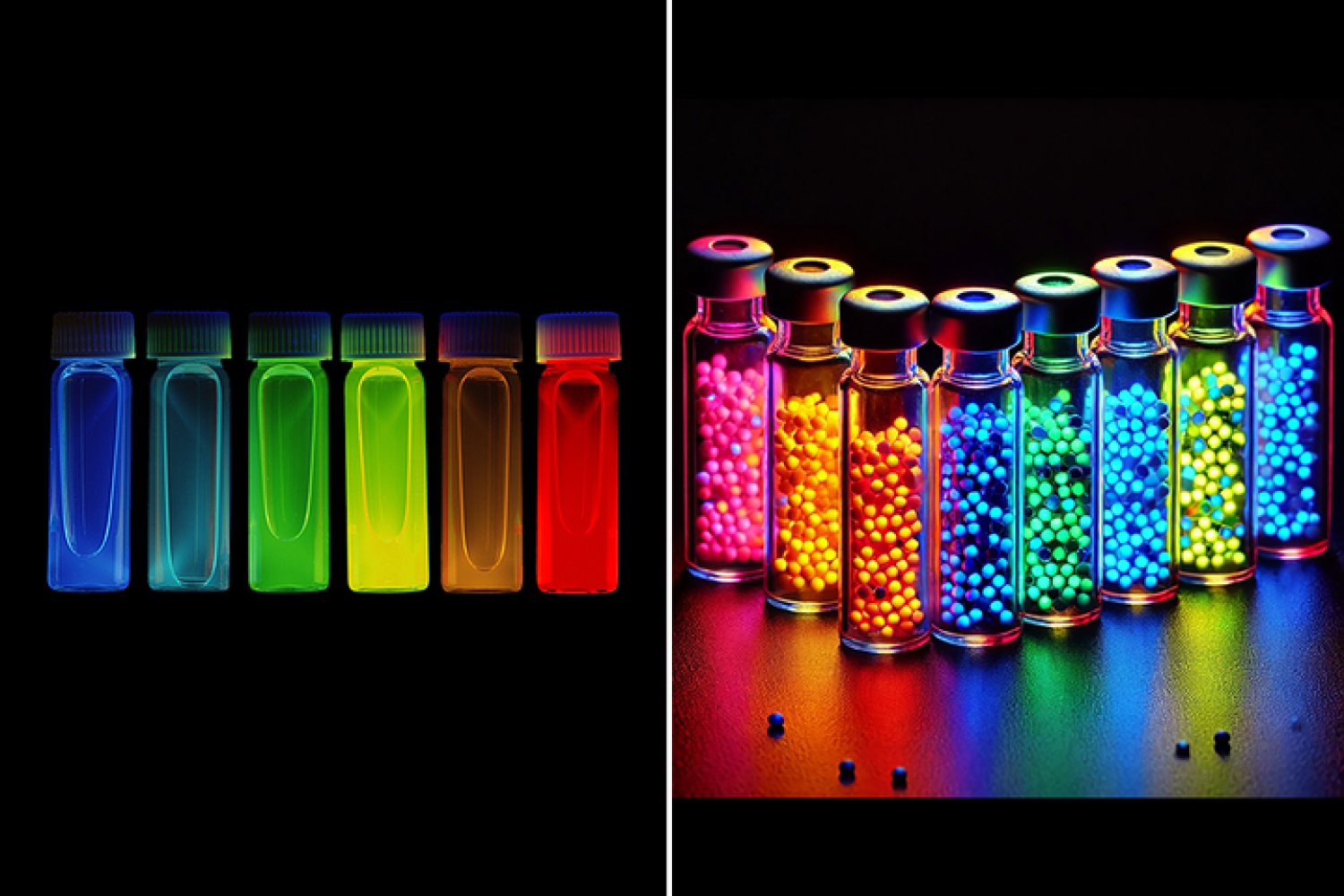

A: For the Nature article, I decided that a powerful way to question the use of AI in generating images was by example. I used one of the diffusion models to create an image with the following prompt:

“Create a photo of Moungi Bawendi’s nano crystals in vials against a black background, fluorescing at different wavelengths, depending on their size, when excited with UV light.”

My AI experiments often produced cartoon-like images that could hardly pass for reality, let alone serve as documentation. But there will come a time when they can. In conversations with colleagues in the research and computer-science communities, everyone agrees that we should have clear standards on what is and isn’t allowed. Most importantly, a GenAI visual should never be allowed as documentation.

But AI-generated visuals will, in fact, be useful for illustration. If an AI-generated visual is submitted to a journal (or, for that matter, shown in a presentation), I believe the researcher MUST

- clearly label whether an image was created by an AI model;

- indicate which model was used;

- include the prompt that was used; and

- include the image, if there is one, that was used to help with the prompt.