Last week Google revealed it’s going all in on generative AI. At its annual I/O conference, the company announced it plans to embed AI tools into virtually all of its products, from Google Docs to coding and online search. (Read my story here.)

Google’s announcement is a huge deal. Billions of people will now get access to powerful, cutting-edge AI models to help them do all sorts of tasks, from generating text to answering queries to writing and debugging code. As MIT Technology Review’s editor in chief, Mat Honan, writes in his analysis of I/O, it’s clear AI is now Google’s core product.

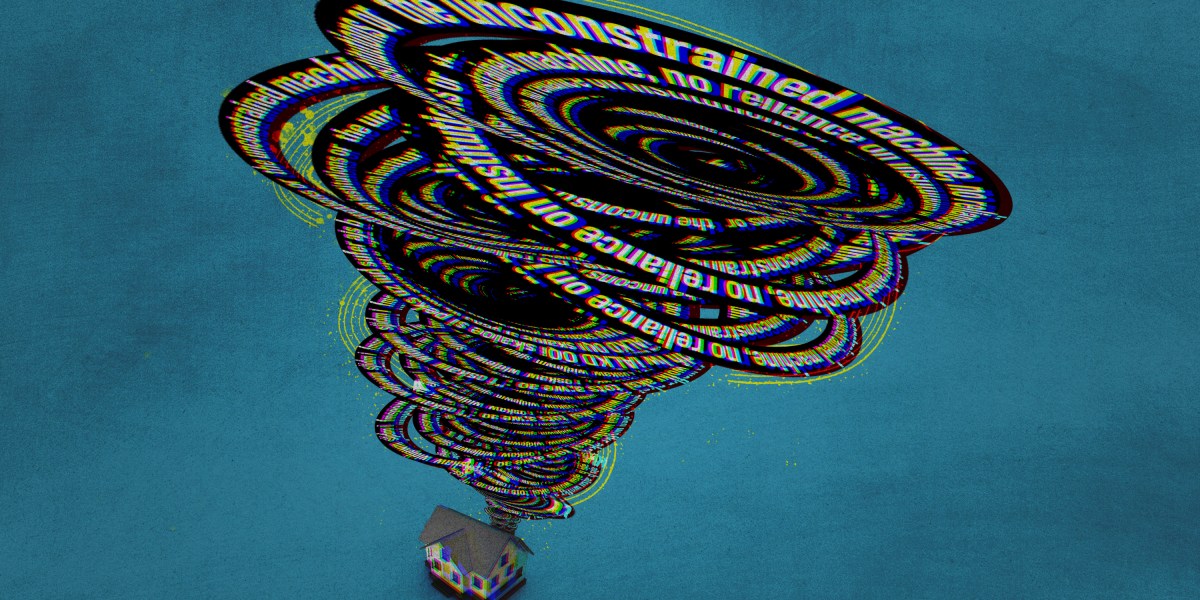

Google’s approach is to introduce these new capabilities into its products gradually. But it will most likely be just a matter of time before things start to go awry. The company has not solved any of the common problems with these AI models. They still make stuff up. They are still easy to manipulate into breaking their own rules. They are still vulnerable to attacks. There is very little stopping them from being used as tools for disinformation, scams, and spam.

Because these kinds of AI tools are relatively new, they still operate in a largely regulation-free zone. But that doesn’t feel sustainable. Calls for regulation are growing louder as the post-ChatGPT euphoria wears off, and regulators are starting to ask tough questions about the technology.

US regulators are trying to find a way to govern powerful AI tools. This week, OpenAI CEO Sam Altman will testify in the US Senate (after a cozy “educational” dinner with politicians the night before). The hearing follows a meeting last week between Vice President Kamala Harris and the CEOs of Alphabet, Microsoft, OpenAI, and Anthropic.

In a statement, Harris said the companies have an “ethical, moral, and legal responsibility” to ensure that their products are safe. Senator Chuck Schumer of New York, the majority leader, has proposed legislation to regulate AI, which could include a new agency to enforce the rules.

“Everybody wants to be seen to be doing something. There’s a lot of social anxiety about where all this is going,” says Jennifer King, a privacy and data policy fellow at the Stanford Institute for Human-Centered Artificial Intelligence.

Getting bipartisan support for a new AI bill will be difficult, King says: “It will depend on to what extent [generative AI] is being seen as a real, societal-level threat.” But the chair of the Federal Trade Commission, Lina Khan, has come out “guns blazing,” she adds. Earlier this month, Khan wrote an op-ed calling for AI regulation now to prevent the errors that arose from being too lax with the tech sector in the past. She signaled that in the US, regulators are more likely to use existing laws already in their tool kit to regulate AI, such as antitrust and commercial practices laws.

Meanwhile, in Europe, lawmakers are edging closer to a final deal on the AI Act. Last week members of the European Parliament signed off on a draft regulation that called for a ban on facial recognition technology in public places. It also bans predictive policing, emotion recognition, and the indiscriminate scraping of biometric data online.

The EU is set to create more rules to constrain generative AI too, and the parliament wants companies creating large AI models to be more transparent. These measures include labeling AI-generated content, publishing summaries of copyrighted data that was used to train the model, and setting up safeguards that would prevent models from generating illegal content.

But here’s the catch: the EU is still a long way away from implementing rules on generative AI, and a lot of the proposed elements of the AI Act are not going to make it to the final version. There are still tough negotiations left between the parliament, the European Commission, and the EU member countries. It will be years until we see the AI Act in force.

While regulators struggle to get their act together, prominent voices in tech are starting to push the Overton window. Speaking at an event last week, Microsoft’s chief economist, Michael Schwarz, said that we should wait until we see “meaningful harm” from AI before we regulate it. He compared it to driver’s licenses, which were introduced after many dozens of people were killed in accidents. “There has to be at least a little bit of harm so that we see what is the real problem,” Schwarz said.

This statement is outrageous. The harm caused by AI has been well documented for years. There has been bias and discrimination, AI-generated fake news, and scams. Other AI systems have led to innocent people being arrested, people being trapped in poverty, and tens of thousands of people being wrongfully accused of fraud. These harms are likely to grow exponentially as generative AI is integrated deeper into our society, thanks to announcements like Google’s.

The question we should be asking ourselves is: How much harm are we willing to see? I’d say we’ve seen enough.

Deeper Learning

The open-source AI boom is built on Big Tech’s handouts. How long will it last?

New open-source large language models (alternatives to Google’s Bard or OpenAI’s ChatGPT that researchers and app developers can study, build on, and modify) are dropping like candy from a piñata. These are smaller, cheaper versions of the best-in-class AI models created by the big firms that (almost) match them in performance, and they’re shared for free.

The future of how AI is made and used is at a crossroads. On one hand, greater access to these models has helped drive innovation. It can also help catch their flaws. But this open-source boom is precarious. Most open-source releases still stand on the shoulders of giant models put out by big firms with deep pockets. If OpenAI and Meta decide they’re closing up shop, a boomtown could become a backwater. Read more from Will Douglas Heaven.

Bits and Bytes

Amazon is working on a secret home robot with ChatGPT-like features

Leaked documents show plans for an updated version of the Astro robot that can remember what it’s seen and understood, allowing people to ask it questions and give it commands. But Amazon has to solve a lot of problems before these models are safe to deploy inside people’s homes at scale. (Insider)

Stability AI has released a text-to-animation model

The company that created the open-source text-to-image model Stable Diffusion has released another tool that lets people create animations using text, image, and video prompts. Copyright problems aside, these could become powerful tools for creatives, and the fact that they’re open source makes them accessible to more people. It’s also a stopgap before the inevitable next step, open-source text-to-video. (Stability AI)

AI is getting sucked into culture wars: see the Hollywood writers’ strike

One of the disputes between the Writers Guild of America and Hollywood studios is whether people should be allowed to use AI to write film and television scripts. With wearying predictability, the US culture-war brigade has stepped into the fray. Online trolls are gleefully telling striking writers that AI will replace them. (New York Magazine)

Watch: An AI-generated trailer for Lord of the Rings … but make it Wes Anderson

This was cute.