Imagine a slime-like robot that can seamlessly change its shape to squeeze through narrow spaces, which could be deployed inside the human body to remove an unwanted item.

While such a robot doesn’t yet exist outside a laboratory, researchers are working to develop reconfigurable soft robots for applications in health care, wearable devices, and industrial systems.

But how can one control a squishy robot that doesn’t have joints, limbs, or fingers that can be manipulated, and instead can drastically alter its entire shape at will? MIT researchers are working to answer that question.

They developed a control algorithm that can autonomously learn how to move, stretch, and shape a reconfigurable robot to complete a specific task, even when that task requires the robot to change its morphology multiple times. The team also built a simulator to test control algorithms for deformable soft robots on a series of challenging, shape-changing tasks.

Their method completed each of the eight tasks they evaluated while outperforming other algorithms. The technique worked especially well on multifaceted tasks. For instance, in one test, the robot had to reduce its height while growing two tiny legs to squeeze through a narrow pipe, and then un-grow those legs and extend its torso to open the pipe’s lid.

While reconfigurable soft robots are still in their infancy, such a technique could someday enable general-purpose robots that can adapt their shapes to accomplish diverse tasks.

“When people think about soft robots, they tend to think about robots that are elastic, but return to their original shape. Our robot is like slime and can actually change its morphology. It is very striking that our method worked so well because we are dealing with something very new,” says Boyuan Chen, an electrical engineering and computer science (EECS) graduate student and co-author of a paper on this approach.

Chen’s co-authors include lead author Suning Huang, an undergraduate student at Tsinghua University in China who completed this work while a visiting student at MIT; Huazhe Xu, an assistant professor at Tsinghua University; and senior author Vincent Sitzmann, an assistant professor of EECS at MIT who leads the Scene Representation Group in the Computer Science and Artificial Intelligence Laboratory. The research will be presented at the International Conference on Learning Representations.

Controlling dynamic movement

Scientists often teach robots to complete tasks using a machine-learning approach known as reinforcement learning, which is a trial-and-error process in which the robot is rewarded for actions that move it closer to a goal.

This can be effective when the robot’s moving parts are consistent and well-defined, like a gripper with three fingers. With a robotic gripper, a reinforcement learning algorithm might move one finger slightly, learning by trial and error whether that motion earns it a reward. Then it would move on to the next finger, and so on.
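To make the one-finger-at-a-time idea concrete, here is a toy sketch of trial and error on a three-finger gripper. It is random hill climbing, a much simpler relative of reinforcement learning, and the reward function, target pose, and function names are hypothetical illustrations rather than anything from the researchers’ work. Each step nudges a single finger and keeps the nudge only if the reward goes up.

```python
import random

def reward(finger_angles, target):
    # Hypothetical reward: higher (closer to 0) when fingers are near a target pose.
    return -sum((a - t) ** 2 for a, t in zip(finger_angles, target))

def per_finger_trial_and_error(target, steps, step_size, rng):
    """Toy random hill climbing: cycle through the fingers, try a small
    random move on one of them, and keep it only if reward improves."""
    angles = [0.0] * len(target)
    for step in range(steps):
        i = step % len(angles)  # focus on one finger at a time
        candidate = list(angles)
        candidate[i] += rng.choice([-step_size, step_size])
        if reward(candidate, target) > reward(angles, target):
            angles = candidate  # the trial "earned a reward", so keep it
    return angles

rng = random.Random(1)
print(per_finger_trial_and_error([0.5, -0.3, 1.0], steps=3000, step_size=0.05, rng=rng))
```

With three fingers this converges quickly, but the loop visits parts one by one, so its cost grows with the number of independently controlled parts, which is the scaling problem the shape-shifting robots below run into.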

But shape-shifting robots, which are controlled by magnetic fields, can dynamically squish, bend, or elongate their entire bodies.

Image: Courtesy of the researchers

“Such a robot could have thousands of small pieces of muscle to control, so it is very hard to learn in a traditional way,” says Chen.

To solve this problem, he and his collaborators had to think about it differently. Rather than moving each tiny muscle individually, their reinforcement learning algorithm begins by learning to control groups of adjacent muscles that work together.

Then, after the algorithm has explored the space of possible actions by focusing on groups of muscles, it drills down into finer detail to optimize the policy, or action plan, it has learned. In this way, the control algorithm follows a coarse-to-fine methodology.

“Coarse-to-fine means that when you take a random action, that random action is likely to make a difference. The change in the outcome is likely very significant because you coarsely control several muscles at the same time,” Sitzmann says.
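A minimal sketch of what a coarse-to-fine action could look like, assuming actions live on a square 2D grid of “muscle” points. This is not the authors’ implementation: the grid sizes, the nearest-neighbor upsampling, and the names `upsample_nearest` and `coarse_to_fine_action` are hypothetical, and both stages are filled with random values instead of a trained policy so the structure is easy to see. One coarse value drives a whole block of adjacent points, and a small per-point residual adds fine detail on top.

```python
import random

def upsample_nearest(coarse, factor):
    """Repeat each coarse cell into a factor x factor block, so one coarse
    value drives a whole group of adjacent fine-grid action points."""
    fine = []
    for row in coarse:
        expanded = [value for value in row for _ in range(factor)]
        for _ in range(factor):
            fine.append(list(expanded))  # copy so rows can be edited independently
    return fine

def coarse_to_fine_action(coarse_size, factor, residual_scale, rng):
    """Hypothetical two-stage action: a coarse grid sets group-level motion,
    then a small per-point residual adds fine detail."""
    # Coarse stage: one random value per group of adjacent "muscles".
    coarse = [[rng.uniform(-1.0, 1.0) for _ in range(coarse_size)]
              for _ in range(coarse_size)]
    action = upsample_nearest(coarse, factor)
    # Fine stage: independent small corrections for every action point.
    for row in action:
        for c in range(len(row)):
            row[c] += residual_scale * rng.uniform(-1.0, 1.0)
    return coarse, action

rng = random.Random(0)
coarse, action = coarse_to_fine_action(coarse_size=4, factor=8, residual_scale=0.05, rng=rng)
print(len(action), len(action[0]))  # 32 32
```

With `residual_scale=0`, every 8×8 block of the 32×32 grid moves in lockstep, so a single random coarse action already changes a large region of the body, which matches Sitzmann’s point that random coarse actions are likely to make a difference. In a learning setting, one would expect the fine residual to matter only later, once the coarse behavior is in place.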

To enable this, the researchers treat a robot’s action space, or how it can move in a certain area, like an image.

Their machine-learning model uses images of the robot’s environment to generate a 2D action space, which includes the robot and the area around it. They simulate robot motion using what is known as the material point method, where the action space is covered by points, like image pixels, and overlaid with a grid.

In the same way that nearby pixels in an image are related (like the pixels that form a tree in a photo), they built their algorithm to understand that nearby action points have stronger correlations. Points around the robot’s “shoulder” will move similarly when it changes shape, while points on the robot’s “leg” will also move similarly, but in a different way than those on the “shoulder.”
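One common way to get an action field in which nearby points are strongly correlated is to blur independent per-point noise with a Gaussian kernel, the same way an image filter blends neighboring pixels. The sketch below uses that swapped-in technique purely as an illustration; it is not the researchers’ model, and the grid size, `sigma`, and function names are hypothetical. It then measures how correlated two action points are as a function of their horizontal distance.

```python
import math
import random

def gaussian_kernel(radius, sigma):
    """Normalized 2D Gaussian weights on a (2*radius+1) x (2*radius+1) window."""
    weights = {}
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            weights[(dy, dx)] = math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma))
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}

def smooth_action_field(height, width, sigma, rng):
    """Sample i.i.d. noise per action point, then blur it so nearby points
    share most of their value (spatially correlated actions)."""
    radius = int(3 * sigma)
    kernel = gaussian_kernel(radius, sigma)
    # Pad the noise so every output point sees a full kernel window.
    noise = [[rng.gauss(0.0, 1.0) for _ in range(width + 2 * radius)]
             for _ in range(height + 2 * radius)]
    return [[sum(w * noise[y + radius + dy][x + radius + dx]
                 for (dy, dx), w in kernel.items())
             for x in range(width)]
            for y in range(height)]

def correlation(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    vx = sum((a - mx) ** 2 for a in xs)
    vy = sum((b - my) ** 2 for b in ys)
    return cov / math.sqrt(vx * vy)

def horizontal_lag_correlation(field, d):
    """Correlation between action points that are d cells apart horizontally."""
    xs = [row[x] for row in field for x in range(len(row) - d)]
    ys = [row[x + d] for row in field for x in range(len(row) - d)]
    return correlation(xs, ys)

field = smooth_action_field(48, 48, sigma=2.0, rng=random.Random(42))
print(round(horizontal_lag_correlation(field, 1), 2),
      round(horizontal_lag_correlation(field, 12), 2))
```

Neighboring points (one cell apart) come out highly correlated, like two points on the same “shoulder,” while points twelve cells apart are close to independent, like a shoulder point and a leg point. `sigma` plays the role of how large a group of points tends to move together.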

In addition, the researchers use the same machine-learning model to look at the environment and predict the actions the robot should take, which makes it more efficient.

Building a simulator

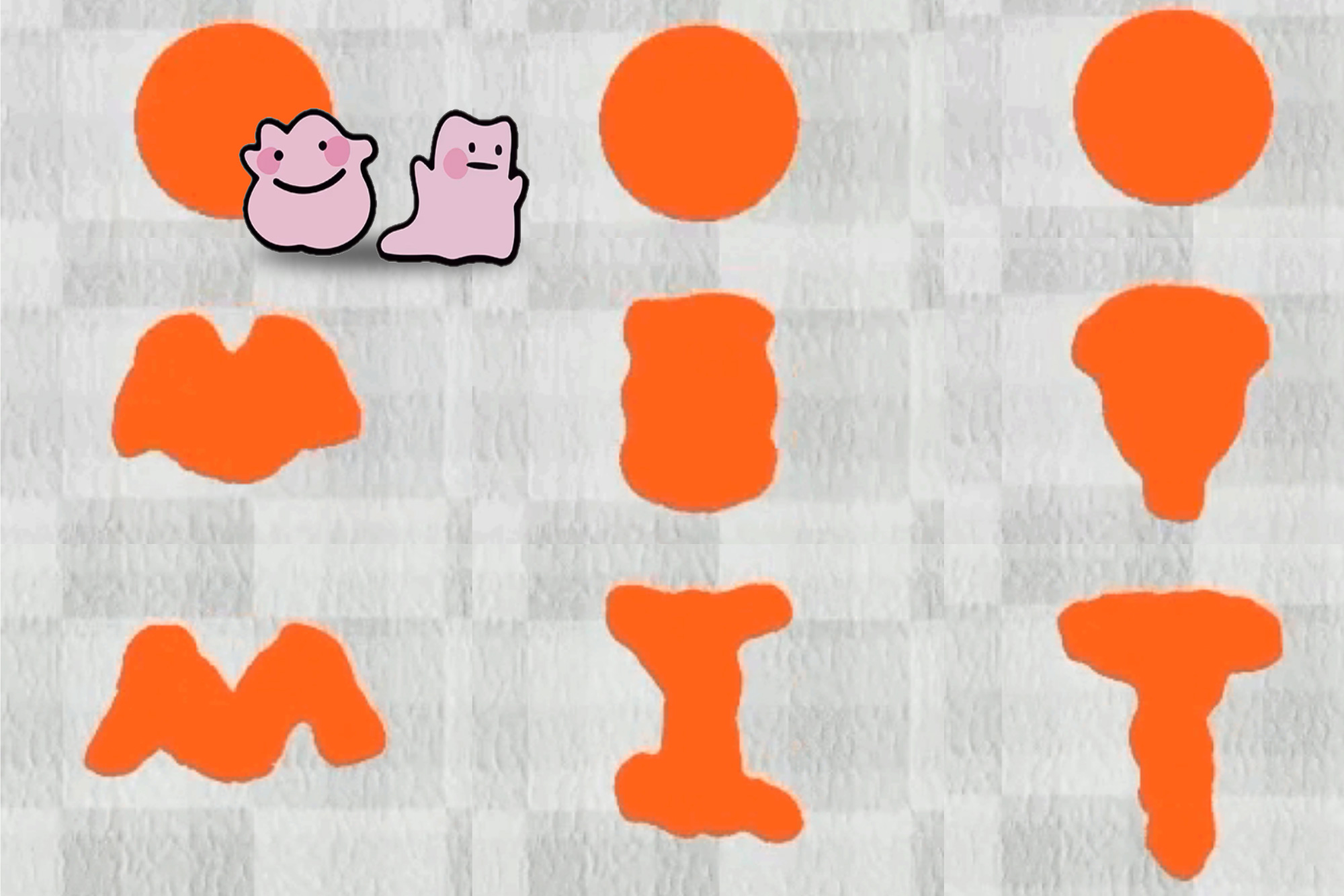

After developing this approach, the researchers needed a way to test it, so they created a simulation environment called DittoGym.

DittoGym features eight tasks that evaluate a reconfigurable robot’s ability to dynamically change shape. In one, the robot must elongate and curve its body so it can weave around obstacles to reach a target point. In another, it must change its shape to mimic letters of the alphabet.

Image: Courtesy of the researchers

“Our task selection in DittoGym follows both generic reinforcement learning benchmark design principles and the specific needs of reconfigurable robots. Each task is designed to represent certain properties that we deem important, such as the capability to navigate through long-horizon explorations, the ability to analyze the environment, and interact with external objects,” Huang says. “We believe they together can give users a comprehensive understanding of the flexibility of reconfigurable robots and the effectiveness of our reinforcement learning scheme.”

Their algorithm outperformed baseline methods and was the only technique suitable for completing multistage tasks that required several shape changes.

“We have a stronger correlation between action points that are closer to each other, and I think that is key to making this work so well,” says Chen.

While it may be several years before shape-shifting robots are deployed in the real world, Chen and his collaborators hope their work inspires other scientists not only to study reconfigurable soft robots but also to think about leveraging 2D action spaces for other complex control problems.