Seeing his words on the printed page is a big deal to Andrew Leland, as it is to all writers. But the sight of his thoughts in written form is even more precious to him than to most scribes. Leland is gradually losing his vision because of a congenital condition called retinitis pigmentosa, which slowly kills off the rods and cones that are the eyes’ light receptors. There will come a point when the largest type, the faces of his loved ones, and even the sun in the sky won’t be visible to him. So, who better to have written the newly released book The Country of the Blind: A Memoir at the End of Sight, which presents a history of blindness that touches on events and advances in social, political, artistic, and technological realms? Leland has beautifully woven in the gleanings from three years of deteriorating sight. And, to his credit, he has done so without being the least bit doleful or self-pitying.

Leland says he started the book project as a thought experiment that would allow him to figure out how he could best manage the transition from the world of the sighted to the community of the blind and visually impaired. IEEE Spectrum spoke with him about the role technology has played in helping the visually impaired navigate the world around them and enjoy the written word as much as sighted people can.

IEEE Spectrum: What are the bread-and-butter technologies that most visually impaired people rely on for carrying out the activities of daily living?

Andrew Leland: It’s not electrons like I know you’re looking for, but the fundamental technology of blindness is the white cane. That is the first step of mobility and orientation for blind people.

It’s funny…. I’ve heard from blind technologists who will often be pitched new technology that’s like, “Oh, we came up with this laser cane and it’s got lidar sensors on it.” There are tools like that which are really useful for blind people. But I’ve heard super techy blind people say, “You know what? We don’t need a laser cane. We’re just as good with the ancient technology of a really long stick.”

That’s all you need. So, I’d say that’s No. 1. No. 2 is about literacy. Braille is another old-school technology, but there is, of course, a modern version of it in the form of a refreshable Braille display.

How does the Braille display work?

Leland: So, imagine a Kindle, where you turn the page and all the electronic ink reconfigures itself into a new page of text. The Braille display does a similar thing. It’s got anywhere between like 14 and 80 cells. So, I guess I need to explain what a cell is. The way a Braille cell works is there are as many as six dots arranged on a two-by-three grid. Depending on the permutation of those dots, that’s what the letter is. So, if it’s just a single dot in the upper left space, that’s the letter a. If it’s dots one and two, which appear in the top two spaces on the left column, that’s the letter b. And so, in a Braille cell on the refreshable Braille display there are little holes that are drilled in, and each cell is the size of a finger pad. When a line of text appears on the display, different configurations of little soft dots will pop up through the drilled holes. And then when you’re ready to scroll to the next line, you just hit a panning key and they all drop down and then pop back up in a new configuration.
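The cell layout Leland describes can be sketched in a few lines of Python. This is only an illustration, not how any particular display's firmware works: the function names and the five-letter table are hypothetical, and the sketch assumes the standard dot numbering (dots 1 to 3 down the left column, 4 to 6 down the right) along with the Unicode Braille Patterns block, where dot n sets bit n-1 above U+2800.

```python
# Illustrative subset of the alphabet, using standard six-dot numbering:
# dots 1-3 run down the left column, dots 4-6 down the right column.
LETTER_DOTS = {
    "a": (1,),
    "b": (1, 2),
    "c": (1, 4),
    "d": (1, 4, 5),
    "e": (1, 5),
}


def dots_to_unicode(dots):
    """Return the Unicode Braille character for a set of raised dots."""
    mask = 0
    for dot in dots:
        mask |= 1 << (dot - 1)  # dot n maps to bit n-1
    return chr(0x2800 + mask)


def render_cell(dots):
    """Draw one cell as three rows of two positions ('o' raised, '.' flat)."""
    rows = []
    for left, right in ((1, 4), (2, 5), (3, 6)):
        rows.append(("o" if left in dots else ".") + ("o" if right in dots else "."))
    return "\n".join(rows)


def to_braille(text):
    """Translate text made of the letters in LETTER_DOTS into Braille cells."""
    return "".join(dots_to_unicode(LETTER_DOTS[ch]) for ch in text)


def pan_lines(cells, width=14):
    """Split a run of cells into display-sized lines, one per press of a panning key."""
    return [cells[i:i + width] for i in range(0, len(cells), width)]


if __name__ == "__main__":
    print(render_cell(LETTER_DOTS["b"]))  # dots 1 and 2: top two spaces, left column
    print(to_braille("abc"))
```

The `width` default of 14 simply mirrors the smallest display size mentioned above; a real display would also handle spaces, punctuation, and contracted Braille, which this sketch leaves out.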

They call it a Braille display because you can hook it up to a computer so that any text that’s appearing on the computer screen, and thus in the screen reader, you can read in Braille. That’s a really important feature for deafblind people, for example, who can’t use a screen reader with audio. They can do all of their computing through Braille.

And that brings up the third really important technology for blind people, which is the screen reader. It’s a piece of software that sits on your phone or computer and takes all of the text on the screen and turns it into synthetic speech, or, in the example I just mentioned, text to Braille. These days, the speech is a good synthetic voice. Imagine the Siri voice or the Alexa voice; it’s like that, but rather than being an AI that you’re having a conversation with, it moves all the functionality of the computer from the mouse. If you think about the blind person, having a mouse isn’t very useful because they can’t see where the pointer is. The screen reader pulls the page navigation into the keyboard. You have a series of hot keys, so you can navigate around the screen. And wherever the focus of the screen reader is, it reads the text aloud in a synthetic voice.

So, if I’m going into my email, it might say, “112 messages.” And then I move the focus with the keyboard or with the touch screen on my phone with a swipe, and it’ll say “Message 1 from Willie Jones, sent 2 p.m.” Everything that a sighted person can see visually, you can hear aurally with a screen reader.
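The focus-and-announce behavior described here can be modeled with a toy Python class. It is a rough sketch under stated assumptions, not the design of any real screen reader: `FocusReader`, its methods, and the second inbox entry are hypothetical, and `speak` stands in for a speech synthesizer (it defaults to `print`).

```python
class FocusReader:
    """Toy model of a screen reader's focus moving through a list of messages."""

    def __init__(self, items, speak=print):
        self.items = list(items)
        self.index = None   # nothing is focused until the user moves
        self.speak = speak  # stand-in for a speech synthesizer

    def announce_summary(self):
        """Say how many messages there are, as when the inbox is opened."""
        self.speak(f"{len(self.items)} messages")

    def move(self, step):
        """Move focus by step (+1 for next, -1 for previous) and read the item aloud."""
        if not self.items:
            self.speak("No messages")
            return
        if self.index is None:
            self.index = 0 if step > 0 else len(self.items) - 1
        else:
            # Clamp so focus stops at the first and last items.
            self.index = max(0, min(len(self.items) - 1, self.index + step))
        self.speak(f"Message {self.index + 1} {self.items[self.index]}")


if __name__ == "__main__":
    inbox = FocusReader([
        "from Willie Jones, sent 2 p.m.",
        "from a second sender, sent 3 p.m.",  # made-up entry for illustration
    ])
    inbox.announce_summary()
    inbox.move(+1)  # a hot key or swipe would trigger this
```

Passing a different `speak` callable is the one design choice worth noting: the same focus logic could feed a Braille display instead of a voice, which matches the way the interview describes screen readers driving both outputs.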

You rely a great deal on your screen reader. What would the effort of writing your book have been like with your present level of sightedness if you had been trying to do it in the technological world of, say, the 1990s?

Leland: That’s a good question. But I’d maybe suggest pulling back even further and say, like, the 1960s. In the 1990s, screen readers were around. They weren’t as powerful as they are now. They were more expensive and harder to find. And I’d have had to do a lot more work to find specialists who would install it on my computer for me. And I’d probably need an external sound card that would run it rather than having a computer that already had a sound card in it that could handle all the speech synthesis.

There was screen-magnification software, which I also rely on a lot. I’m also really sensitive to glare, and black text on a white screen doesn’t really work for me anymore.

All that stuff was around by the 1990s. But if you had asked me that question in the 1960s or ’70s, my answer would be completely different because then I might have had to write the book longhand with a really big magic marker and fill up hundreds of notebooks with giant print, basically making my own DIY 30-point font instead of having it on my computer.

Or I might have had to use a Braille typewriter. I’m so slow at Braille that I don’t know if I actually would have been able to write the book that way. Maybe I could have dictated it. Maybe I could have bought a really expensive reel-to-reel recorder (or if we’re talking 1980s, a cassette recorder) and recorded a verbal draft. I’d then have to have that transcribed and hire someone to read the manuscript back to me as I made revisions. That’s not too different from what John Milton [the 17th-century English poet who wrote Paradise Lost] had to do. He was writing in an era even before Braille was invented, and he composed lines in his head overnight when he was on his own. In the morning, his daughters (or his cousin or friends) would come and, as he put it, they would “milk” him and take down dictation.

What were the important breakthroughs that made the screen reader you’re using now possible?

Leland: One really important one touches on the Moore’s Law phenomenon: the work done on optical character recognition, or OCR. There have been versions of it stretching back shockingly far, even to the early 20th century, like the 1910s and ’20s. They used a light-sensitive material, selenium, to create a device in the ’20s called the optophone. The technique was called musical print. In essence, it was the first scanner technology where you could take a piece of text and put it under the eye of a machine with this really sensitive material and it would convert the ink-based letter forms into sound.

I imagine there was no Siri or Alexa voice coming out of this machine you’re describing.

Leland: Not even close. Imagine the capital letter V. If you passed that under the machine’s eye, it would sound musical. You would hear the tones descend and then rise. The reader could say, “Oh, okay. That was a V,” and then they’d listen for the tone combination signaling the next letter. Some blind people read entire books that way. But that’s extremely laborious and an odd and difficult way to read.

Researchers, engineers, and scientists were pushing this kind of proto-scanning technology forward, and it really comes to a breakthrough, I think, with Ray Kurzweil in the 1970s when he invented the flatbed scanner and perfected this OCR technology that was nascent at the time. For the first time in history, a blind person could pull a book off the shelf, [not just what’s] printed in a specialized typeface designed in a [computer science] lab but any old book in the library. The Kurzweil Reading Machine that he developed was not instantaneous, but in the course of a couple minutes, converted text to synthetic speech. This was a real game changer for blind people, who, up until that point, had to rely on manual transcription into Braille. Blind college students would have to hire somebody to record books for them (first on reel-to-reel, then later on cassettes) if there wasn’t a special prerecorded audiobook.

Audrey Marquez, 12, listens to a taped voice from the Kurzweil Reading Machine in the early 1980s. Dave Buresh/The Denver Post/Getty Images

So, with the Kurzweil Reading Machine, all of a sudden the entire world of print really starts to open up. Granted, at that time the machine cost like a quarter million dollars and wasn’t widely available, but Stevie Wonder bought one, and it started to appear in libraries at schools for the blind. Then, with a lot of the other technological advances of which Kurzweil himself was a popular sort of prophet, these machines became more efficient and smaller. To the point where now I can take my iPhone and snap a picture of a restaurant menu, and it will OCR that restaurant menu for me automatically.

So, what’s the next logical step in this progression?

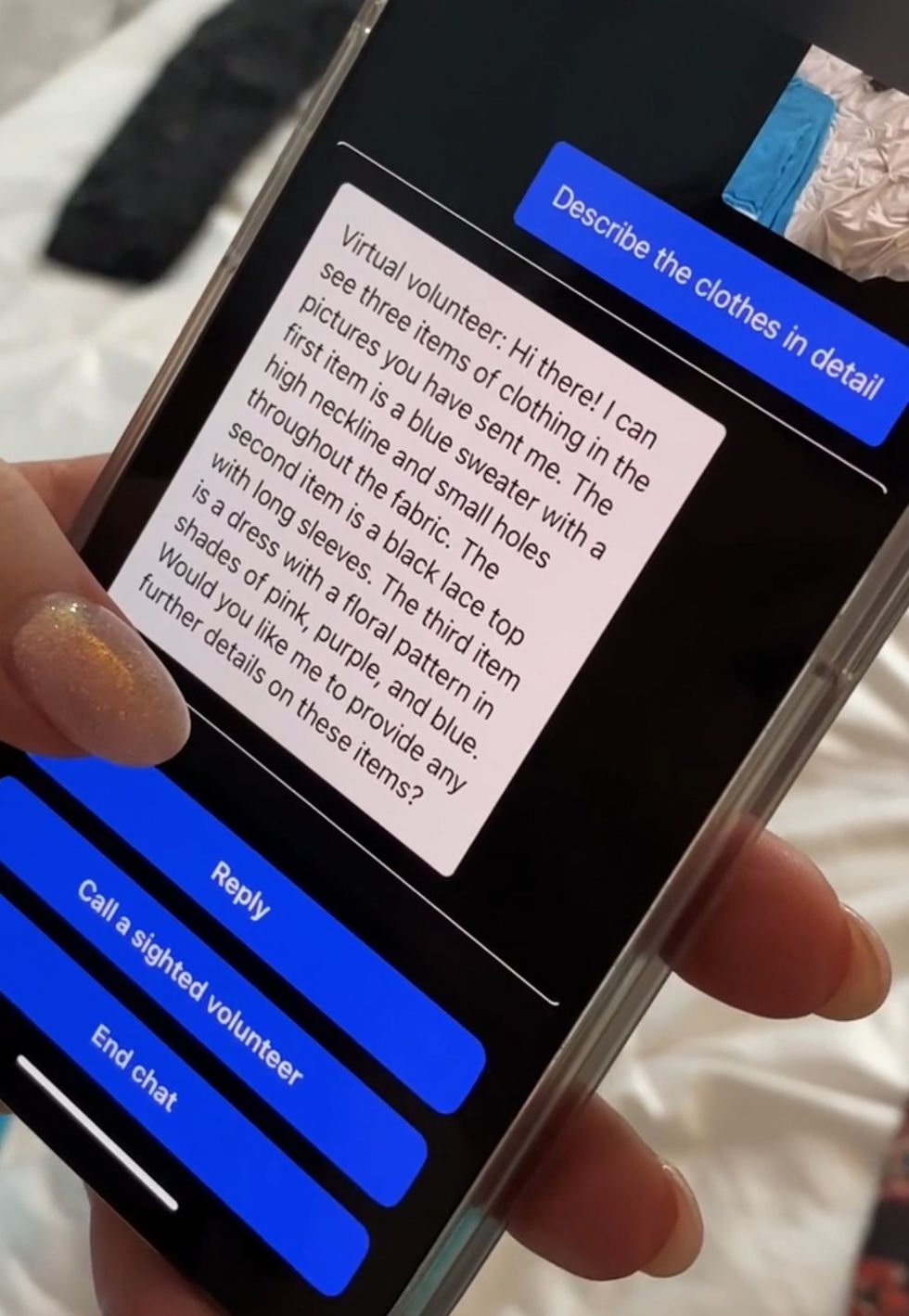

Leland: Now you have ChatGPT machine vision, where I can hold up my phone’s camera and have it tell me what it’s seeing. There’s a visual interpreter app called Be My Eyes. The eponymous company that produced the app has partnered with OpenAI, so now a blind person can hold their phone up to their fridge and say “What’s in this fridge?” and it will say “You have three-quarters of a 250 milliliter jug of orange juice that expires in two days; you have six bananas and two of them look rotten.”

So, that’s the sort of capsule version of the progression of machine vision and the power of machine vision for blind people.

What do you think or hope advances in AI will do next to make the world more navigable by people who can’t rely on their eyes?

Virtual Volunteer uses OpenAI’s GPT-4 technology. Be My Eyes

Leland: [The next big breakthrough will come from] AI machine vision like we see with the Be My Eyes Virtual Volunteer that uses OpenAI’s GPT-4 technology. Right now, it’s only in beta and only available to a few blind people who have been serving as testers. But I’ve listened to a couple of demos that they posted on a podcast, and to a person, they talk about it as an absolute watershed moment in the history of technology for blind people.

Is this digital interpreter scheme a completely new idea?

Leland: Yes and no. Visual interpreters have been available for a while. But the way Be My Eyes traditionally worked is, let’s say you’re a totally blind person, with no light perception, and you want to know if your shirt matches your pants. You would use the app and it would connect you with a sighted volunteer who could then see what’s in your phone’s camera.

So, you hold the camera up, you stand in front of a mirror, and they say, “Oh, those are two different kinds of plaids. Maybe you should pick a different pair of pants.” That’s been amazing for blind people. I know a lot of people who love this app, because it’s super helpful. For example, say you’re on an accessible website, but the screen reader’s not working [as intended] because the checkout button isn’t labeled. So you just hear “Button button.” You don’t know how you’re going to check out. You can pull up Be My Eyes, hold your phone up to your screen, and the human volunteer will say “Okay, tab over to that third button. There you go. That’s the one you want.”

And the breakthrough that’s happened now is that OpenAI and Be My Eyes have rolled out this technology called the Virtual Volunteer. Instead of having you connect with a human who says your shirt doesn’t match your pants, you now have GPT-4 machine vision AI, and it’s incredible. And you can do things like what happened in a demo I recently listened to. A blind man had visited Disneyland with his family. Obviously, he couldn’t see the photos, but with the iPhone’s image-recognition capabilities, he asked the phone to describe one of the photos. It said, “Image may contain adults standing in front of a building.” Then GPT did it: “There are three adult men standing in front of Disney’s princess castle in Anaheim, California. All three of the men are wearing t-shirts that say blah blah.” And you can ask follow-up questions, like, “Did any of the men have mustaches?” or “Is there anything else in the background?” Getting a taste of GPT-4’s image-recognition capabilities, it’s easy to understand why blind people are so excited about it.