There has been great progress in adapting large language models (LLMs) to accommodate multimodal inputs for tasks including image captioning, visual question answering (VQA), and open vocabulary recognition. Despite such achievements, current state-of-the-art visual language models (VLMs) perform inadequately on visual information seeking datasets, such as Infoseek and OK-VQA, where external knowledge is required to answer the questions.

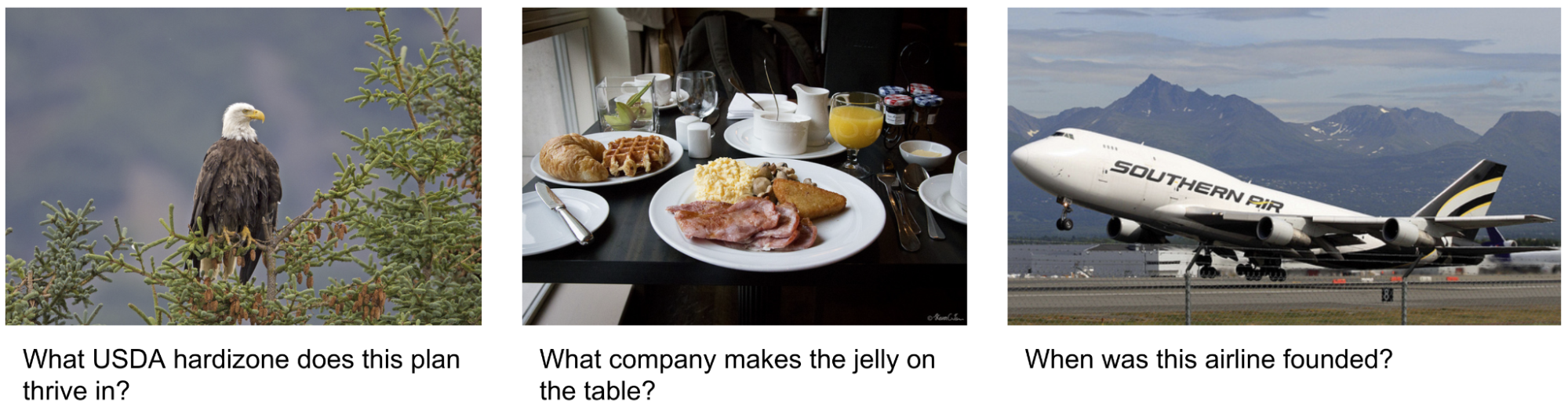

Examples of visual information seeking queries where external knowledge is required to answer the question. Images are taken from the OK-VQA dataset.

In “AVIS: Autonomous Visual Information Seeking with Large Language Models”, we introduce a novel method that achieves state-of-the-art results on visual information seeking tasks. Our method integrates LLMs with three types of tools: (i) computer vision tools for extracting visual information from images, (ii) a web search tool for retrieving open world knowledge and facts, and (iii) an image search tool to glean relevant information from metadata associated with visually similar images. AVIS employs an LLM-powered planner to choose tools and queries at each step. It also uses an LLM-powered reasoner to analyze tool outputs and extract key information. A working memory component retains information throughout the process.

An example of AVIS’s generated workflow for answering a challenging visual information seeking question. The input image is taken from the Infoseek dataset.

Comparison to earlier work

Recent studies (e.g., Chameleon, ViperGPT and MM-ReAct) explored adding tools to LLMs for multimodal inputs. These systems follow a two-stage process: planning (breaking down questions into structured programs or instructions) and execution (using tools to gather information). Despite success in basic tasks, this approach often falters in complex real-world scenarios.

There has also been a surge of interest in applying LLMs as autonomous agents (e.g., WebGPT and ReAct). These agents interact with their environment, adapt based on real-time feedback, and achieve goals. However, these methods do not restrict the tools that can be invoked at each stage, leading to an immense search space. Consequently, even the most advanced LLMs today can fall into infinite loops or propagate errors. AVIS tackles this through guided LLM use, informed by human decisions collected in a user study.

Informing LLM decision making with a user study

Many of the visual questions in datasets such as Infoseek and OK-VQA pose a challenge even for humans, often requiring the assistance of various tools and APIs. An example question from the OK-VQA dataset is shown below. We conducted a user study to understand human decision-making when using external tools.

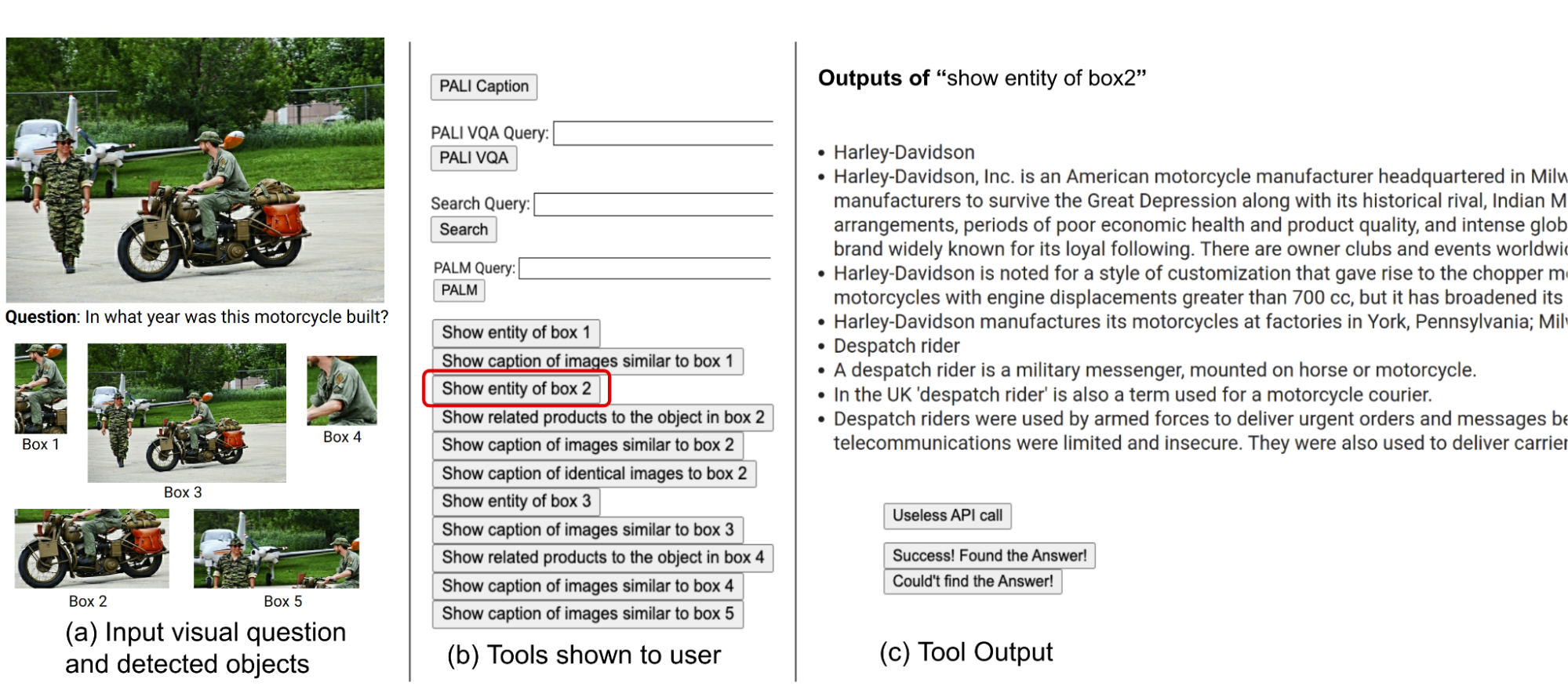

We conducted a user study to understand human decision-making when using external tools. The image is taken from the OK-VQA dataset.

The users were equipped with the same set of tools as our method, including PaLI, PaLM, and web search. They received input images, questions, detected object crops, and buttons linked to image search results. These buttons offered diverse information about the detected object crops, such as knowledge graph entities, similar image captions, related product titles, and identical image captions.

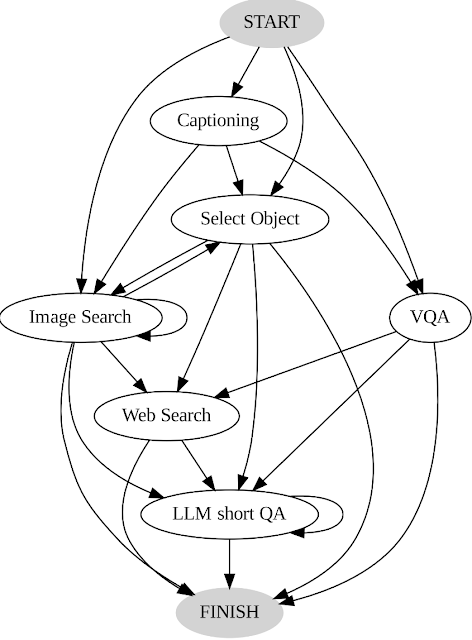

We record user actions and outputs and use them as a guide for our system in two key ways. First, we construct a transition graph (shown below) by analyzing the sequence of decisions made by users. This graph defines distinct states and restricts the available set of actions at each state. For example, at the start state, the system can take only one of these three actions: PaLI caption, PaLI VQA, or object detection. Second, we use the examples of human decision-making as relevant in-context instances that guide our planner and reasoner, improving the performance and effectiveness of our system.
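A transition graph of this kind can be represented as a mapping from each state to the set of actions allowed there. The sketch below is a minimal illustration, not the AVIS implementation. Only the start-state actions come from the text. The other states, edges, and action names (`web_search`, `image_search`, `llm_qa`, and so on) are hypothetical, and each state is assumed to be labeled by the last action taken.

```python
# Hypothetical transition graph in the spirit of AVIS.
# Only the START entry comes from the text; every other state and edge is an
# illustrative assumption. A state is assumed to be labeled by the last action.
START = "start"

TRANSITION_GRAPH: dict[str, set[str]] = {
    # From the text: at the start state, only these three actions are allowed.
    START: {"pali_caption", "pali_vqa", "object_detection"},
    # Assumed edges below, for illustration only.
    "pali_caption": {"object_detection", "web_search"},
    "pali_vqa": {"object_detection", "web_search"},
    "object_detection": {"image_search", "pali_vqa"},
    "image_search": {"web_search", "llm_qa"},
    "web_search": {"llm_qa"},
    "llm_qa": set(),
}


def allowed_actions(state: str) -> set[str]:
    """Return a copy of the actions permitted from `state` (empty if unknown)."""
    return set(TRANSITION_GRAPH.get(state, set()))


print(sorted(allowed_actions(START)))
# ['object_detection', 'pali_caption', 'pali_vqa']
```

Returning a copy keeps callers from accidentally mutating the shared graph when they prune actions later.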

AVIS transition graph.

General framework

Our approach employs a dynamic decision-making strategy designed to respond to visual information-seeking queries. Our system has three primary components. First, a planner determines the next action, including the appropriate API call and the query it needs to process. Second, a working memory retains information about the results obtained from API executions. Last, a reasoner processes the outputs from the API calls. It determines whether the obtained information is sufficient to produce the final response, or whether additional data retrieval is required.
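The working memory can be sketched as an append-only log of tool calls that is rendered as text for the planner and reasoner prompts. This is a minimal sketch under that assumption. The class and method names (`ToolCall`, `WorkingMemory`, `record`, `used_tools`, `as_context`) are hypothetical, as is the tool name in the example.

```python
from dataclasses import dataclass, field


@dataclass
class ToolCall:
    """One executed tool call (hypothetical record format)."""
    tool: str    # e.g. "pali_caption"; tool names are illustrative
    query: str   # query sent to the tool
    output: str  # what the tool returned (or a summary of it)


@dataclass
class WorkingMemory:
    """Hypothetical working memory: an append-only log of tool calls."""
    calls: list[ToolCall] = field(default_factory=list)

    def record(self, tool: str, query: str, output: str) -> None:
        self.calls.append(ToolCall(tool, query, output))

    def used_tools(self) -> set[str]:
        # Tools already invoked; a planner can use this to skip repeats.
        return {c.tool for c in self.calls}

    def as_context(self) -> str:
        # Render the log as plain text for inclusion in an LLM prompt.
        return "\n".join(f"[{c.tool}] {c.query} -> {c.output}" for c in self.calls)


memory = WorkingMemory()
memory.record("pali_caption", "Describe the image.", "a tall iron lattice tower")
print(memory.as_context())
# [pali_caption] Describe the image. -> a tall iron lattice tower
```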

The planner follows a series of steps each time it must decide which tool to use and what query to send to it. Based on the current state, the planner generates a range of potential next actions. This action space may be so large that the search becomes intractable. To address this issue, the planner consults the transition graph to eliminate irrelevant actions. The planner also excludes actions that have already been taken and are stored in the working memory.
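The pruning step amounts to a set difference: the actions the transition graph permits from the current state, minus those already recorded in the working memory. Below is a minimal sketch. It assumes actions are identified by tool name and uses a hypothetical one-state graph whose start-state actions follow the text. `candidate_actions` is an illustrative name.

```python
def candidate_actions(
    state: str,
    graph: dict[str, set[str]],
    taken: set[str],
) -> list[str]:
    """Actions allowed from `state` by the graph, minus those already taken.

    Sorted so the candidate list (and any prompt built from it) is deterministic.
    """
    return sorted(graph.get(state, set()) - taken)


# Hypothetical one-state graph; the start-state actions follow the text above.
EXAMPLE_GRAPH = {"start": {"pali_caption", "pali_vqa", "object_detection"}}

print(candidate_actions("start", EXAMPLE_GRAPH, taken={"pali_caption"}))
# ['object_detection', 'pali_vqa']
```

Sorting is a choice made for this sketch only. It makes the pruned list reproducible, which helps when the list is later inserted into an LLM prompt.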

Next, the planner collects a set of relevant in-context examples assembled from the decisions previously made by humans during the user study. Using these examples and the working memory, which holds data collected from past tool interactions, the planner formulates a prompt. The prompt is then sent to the LLM, which returns a structured answer specifying the next tool to activate and the query to dispatch to it. This design allows the planner to be invoked multiple times throughout the process, enabling dynamic decision-making that gradually leads to an answer to the input query.
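The prompt assembly and the structured reply could look roughly like the following. The prompt wording and the JSON reply format are assumptions for illustration, because the text only says that the LLM returns a structured answer naming the next tool and its query. The function names `build_planner_prompt` and `parse_planner_reply` are hypothetical.

```python
import json

# Hypothetical prompt layout and reply format: the text only says the LLM
# returns a structured answer naming the next tool and its query.


def build_planner_prompt(
    question: str,
    candidates: list[str],
    examples: list[str],
    memory_context: str,
) -> str:
    """Assemble a planner prompt from in-context examples and working memory."""
    return "\n".join([
        "Choose the next tool for answering a visual question.",
        "Examples of human decisions:",
        *examples,
        "Information gathered so far:",
        memory_context or "(none)",
        f"Question: {question}",
        "Allowed tools: " + ", ".join(candidates),
        'Reply with JSON: {"tool": "<allowed tool>", "query": "<query>"}',
    ])


def parse_planner_reply(reply: str, candidates: list[str]) -> tuple[str, str]:
    """Parse the LLM's JSON reply and reject tools outside the pruned set."""
    data = json.loads(reply)
    tool, query = data["tool"], data["query"]
    if tool not in candidates:
        raise ValueError(f"planner chose a disallowed tool: {tool!r}")
    return tool, query


reply = '{"tool": "web_search", "query": "year the Eiffel Tower was completed"}'
print(parse_planner_reply(reply, ["web_search", "image_search"]))
# ('web_search', 'year the Eiffel Tower was completed')
```

Validating the chosen tool against the pruned candidates keeps the LLM from selecting an action that the transition graph or working memory has ruled out.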

We employ a reasoner to analyze the output of the tool execution, extract the useful information, and decide which category the tool output falls into: informative, uninformative, or final answer. Our method uses the LLM with appropriate prompting and in-context examples to perform the reasoning. If the reasoner concludes that it is ready to provide an answer, it outputs the final response, concluding the task. If it determines that the tool output is uninformative, it returns control to the planner, which selects another action based on the current state. If it finds the tool output useful, it updates the state and transfers control back to the planner, which makes a new decision at the new state.
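The reasoner's three outcomes map naturally onto a control loop. The sketch below assumes that `plan`, `execute`, and `reason` are caller-supplied functions, which in AVIS would be LLM prompts and tool calls. It also adds a `max_steps` cap that the text does not mention, as a guard against the infinite loops discussed earlier. `run_loop` and `Verdict` are hypothetical names.

```python
from enum import Enum
from typing import Callable, Optional


class Verdict(Enum):
    """The three categories the reasoner assigns to a tool output."""
    INFORMATIVE = "informative"
    UNINFORMATIVE = "uninformative"
    FINAL_ANSWER = "final_answer"


def run_loop(
    plan: Callable[[list[str], list[tuple[str, str]]], tuple[str, str]],
    execute: Callable[[str, str], str],
    reason: Callable[[str], tuple[Verdict, str]],
    max_steps: int = 10,  # assumed safety cap, not from the text
) -> Optional[str]:
    """Alternate planner and reasoner until a final answer or the step cap."""
    state: list[str] = []              # key information extracted so far
    tried: list[tuple[str, str]] = []  # (tool, query) pairs already executed
    for _ in range(max_steps):
        tool, query = plan(state, tried)
        tried.append((tool, query))
        verdict, extracted = reason(execute(tool, query))
        if verdict is Verdict.FINAL_ANSWER:
            return extracted           # task concluded
        if verdict is Verdict.INFORMATIVE:
            state.append(extracted)    # update the state, then re-plan
        # UNINFORMATIVE: state unchanged; the planner picks another action
    return None                        # cap reached without an answer
```

For example, suppose the stub functions return an uninformative output, then an informative one, then a final answer. The loop calls the planner three times and returns that final answer. The extracted fact appears in the state only on the third call.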

AVIS employs a dynamic decision-making strategy to respond to visual information-seeking queries.

Results

We evaluate AVIS on the Infoseek and OK-VQA datasets. As shown below, even strong visual-language models, such as OFA and PaLI, fail to achieve high accuracy when fine-tuned on Infoseek. Our approach (AVIS), without fine-tuning, achieves 50.7% accuracy on the unseen entity split of this dataset.

AVIS visual question answering results on the Infoseek dataset. AVIS achieves higher accuracy than previous baselines based on PaLI, PaLM and OFA.

Our results on the OK-VQA dataset are shown below. AVIS with few-shot in-context examples achieves an accuracy of 60.2%, higher than most of the previous works. AVIS achieves lower but comparable accuracy relative to the PaLI model fine-tuned on OK-VQA. This differs from Infoseek, where AVIS outperforms fine-tuned PaLI, because most question-answer examples in OK-VQA rely on common sense knowledge rather than fine-grained knowledge. PaLI is therefore able to encode such generic knowledge in its model parameters and does not require external knowledge.

Visual question answering results on A-OKVQA. AVIS achieves higher accuracy than previous works that use few-shot or zero-shot learning, including Flamingo, PaLI and ViperGPT. AVIS also achieves higher accuracy than most of the previous works that are fine-tuned on the OK-VQA dataset, including REVEAL, ReVIVE, KAT and KRISP, and achieves results close to those of the fine-tuned PaLI model.

Conclusion

We present a novel approach that equips LLMs with the ability to use a variety of tools for answering knowledge-intensive visual questions. Our methodology is anchored in human decision-making data collected from a user study. It employs a structured framework in which an LLM-powered planner dynamically decides on tool selection and query formation. An LLM-powered reasoner processes the output of the selected tool and extracts key information from it. Our method iteratively employs the planner and reasoner to leverage different tools until all the information needed to answer the visual question has been gathered.

Acknowledgements

This research was conducted by Ziniu Hu, Ahmet Iscen, Chen Sun, Kai-Wei Chang, Yizhou Sun, David A. Ross, Cordelia Schmid and Alireza Fathi.