Legal concerns have been raised about large language models (LMs) because they are often trained on copyrighted content. At the heart of this issue lies an inherent tradeoff between legal risk and model performance. Training only on permissively licensed or public-domain data severely hurts accuracy. Common LM corpora cover a much wider range of topics, while permissive data is scarce and restricted to sources such as copyright-expired books, government records, and permissively licensed code.

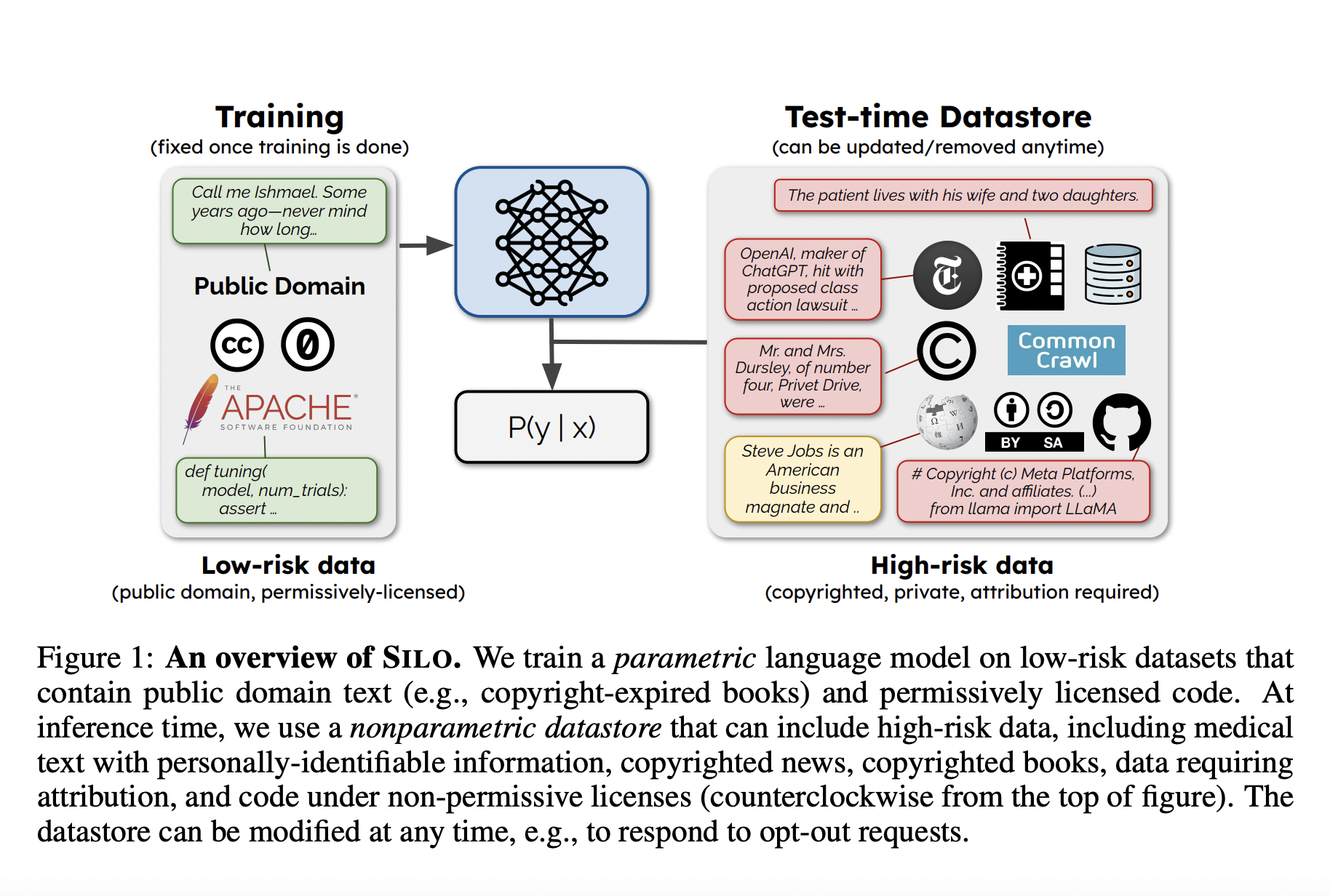

A new study by the University of Washington, UC Berkeley, and the Allen Institute for AI shows that splitting training data into parametric and nonparametric subsets improves the risk-performance tradeoff. The team trains the LM's parameters on low-risk data and places high-risk data in a nonparametric component (a datastore) that is only used during inference. High-risk data can be retrieved from the datastore to improve model predictions without ever entering the training phase. Model developers can remove data from the datastore completely, down to the level of individual examples, and the datastore can be updated at any moment. The approach also assigns credit to data contributors by attributing model predictions down to the sentence level. Thanks to these features, the model can be aligned more precisely with various data-use restrictions. Parametric models, by contrast, make it impossible to remove high-risk data once training is complete, and they make it hard to attribute data at scale.
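The three datastore properties described above (removal of individual examples, updates at any time, and attribution of predictions to sources) can be illustrated with a toy datastore. The sketch below is a minimal illustration under stated assumptions, not SILO's implementation: the `Entry`/`Datastore` names, the idea of storing a context embedding alongside the next token and a `source_id`, and the brute-force Euclidean search are all hypothetical choices made for clarity. A production system would use an approximate nearest-neighbor index.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Entry:
    key: list[float]   # embedding of the context preceding the token (hypothetical)
    next_token: str    # token that followed that context in the source text
    source_id: str     # which document or contributor the entry came from


@dataclass
class Datastore:
    """Toy nonparametric datastore; a real system would use an ANN index."""
    entries: list[Entry] = field(default_factory=list)

    def add(self, key: list[float], next_token: str, source_id: str) -> None:
        # Updating the datastore is just appending; the LM weights are untouched.
        self.entries.append(Entry(list(key), next_token, source_id))

    def remove_source(self, source_id: str) -> int:
        """Opt-out: drop every entry from one source without retraining the LM."""
        before = len(self.entries)
        self.entries = [e for e in self.entries if e.source_id != source_id]
        return before - len(self.entries)

    def search(self, query: list[float], k: int) -> list[tuple[float, Entry]]:
        """Brute-force k nearest entries by Euclidean distance."""
        scored = [(math.dist(query, e.key), e) for e in self.entries]
        scored.sort(key=lambda pair: pair[0])
        return scored[:k]

    def attribute(self, query: list[float], k: int) -> list[str]:
        """Attribution: list the sources of the entries retrieved for this query."""
        return [e.source_id for _, e in self.search(query, k)]
```

For example, after adding entries from a hypothetical `gov-doc-1` and `news-article-7`, calling `remove_source("news-article-7")` makes that article's entries unretrievable immediately, while the parametric model stays exactly as it was. This is the contrast with a purely parametric LM, where the same data would be baked into the weights.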

They developed SILO, a new nonparametric language model, to implement their proposal. The parametric component of SILO is trained on the Open License Corpus (OLC), a new pretraining corpus that spans a variety of domains. Its distribution is heavily skewed toward code and government text, which makes it unlike other pretraining corpora. As a result, the researchers face an extreme domain generalization problem: a model trained on very narrow domains must generalize well beyond them. Three 1.3B-parameter LMs are trained on different subsets of OLC. A test-time datastore that can include high-risk data is then built, and its contents are retrieved and used during inference. The study compares two ways of using the datastore: a retrieval-in-context approach (RIC-LM), which retrieves text blocks and feeds them to the parametric LM as context, and a nearest-neighbors approach (kNN-LM), which uses a nonparametric next-token prediction function.
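The difference between the two retrieval methods can be sketched in a few lines. The kNN-LM functions below follow the standard kNN-LM formulation: retrieved neighbors vote for their stored next token with weight proportional to exp(-distance / temperature), and the resulting distribution is interpolated with the parametric LM's distribution using a weight λ. The RIC-LM function simply prepends retrieved blocks to the prompt. All function names, the `temperature` and `lam` parameters, and the choice of distance are assumptions for illustration; the article does not give SILO's exact settings.

```python
import math
from collections import defaultdict


def knn_distribution(neighbors: list[tuple[float, str]],
                     temperature: float = 1.0) -> dict[str, float]:
    """p_kNN(y | x): softmax over -distance / temperature, summed per next token."""
    d_min = min(d for d, _ in neighbors)  # shift distances for numerical stability
    weights = [math.exp(-(d - d_min) / temperature) for d, _ in neighbors]
    total = sum(weights)
    probs: dict[str, float] = defaultdict(float)
    for w, (_, token) in zip(weights, neighbors):
        probs[token] += w / total
    return dict(probs)


def knn_lm(p_lm: dict[str, float], p_knn: dict[str, float],
           lam: float) -> dict[str, float]:
    """kNN-LM: lam * p_kNN + (1 - lam) * p_LM over the union of both vocabularies."""
    vocab = set(p_lm) | set(p_knn)
    return {t: lam * p_knn.get(t, 0.0) + (1 - lam) * p_lm.get(t, 0.0) for t in vocab}


def ric_lm_prompt(retrieved_blocks: list[str], context: str) -> str:
    """RIC-LM: prepend retrieved text blocks to the context the parametric LM reads."""
    return "\n\n".join(retrieved_blocks + [context])
```

The sketch makes the structural contrast visible: kNN-LM changes the output distribution directly from datastore entries, whereas RIC-LM only changes the input and still depends on the parametric LM to make use of the retrieved text.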

Language modeling perplexity is measured across 14 domains, including in-domain (OLC-specific) data. The researchers compare SILO against Pythia, a parametric LM that shares some features with SILO but was trained largely on high-risk data. They first confirm the difficulty of extreme domain generalization by showing that parametric-only SILO performs competitively on domains covered by OLC but poorly outside them. Supplementing SILO with an inference-time datastore addresses this problem. Both kNN-LM and RIC-LM considerably improve out-of-domain performance, but kNN-LM generalizes better, allowing SILO to close the gap with the Pythia baseline by an average of 90% across all domains. Analysis shows that the nonparametric next-token prediction in kNN-LM is robust to domain shift and that kNN-LM benefits substantially from growing the datastore.
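Perplexity is the exponential of the average negative log-likelihood a model assigns to the actual tokens, so lower is better. The article does not say exactly how "closing the gap by 90%" is computed. One natural reading, assumed here, is the fraction of the perplexity gap between parametric-only SILO and Pythia that the datastore removes, averaged over domains. The function names and the example numbers in the usage note are hypothetical and are not results from the paper.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """exp of the average negative log-likelihood assigned to each gold token."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


def gap_closed(ppl_parametric: float, ppl_with_datastore: float,
               ppl_baseline: float) -> float:
    """Assumed metric: share of the gap to the baseline closed by the datastore.

    1.0 means the retrieval-augmented model matches the baseline;
    0.0 means no improvement over the parametric-only model.
    """
    return (ppl_parametric - ppl_with_datastore) / (ppl_parametric - ppl_baseline)


def mean_gap_closed(per_domain: list[tuple[float, float, float]]) -> float:
    """Average gap closure over domains, each given as
    (parametric-only ppl, ppl with datastore, baseline ppl)."""
    return sum(gap_closed(*row) for row in per_domain) / len(per_domain)
```

With made-up numbers, a parametric-only perplexity of 30, a retrieval-augmented perplexity of 21, and a baseline of 20 give `gap_closed(30.0, 21.0, 20.0)` equal to 0.9, i.e. 90% of the gap closed in that domain.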

Overall, this work indicates that growing the datastore and further improving the nonparametric model could likely close the remaining gaps in the few domains where SILO has not yet reached Pythia's performance.

Check out the Paper and GitHub. All credit for this research goes to the researchers on this project.

Dhanshree Shenwai is a Computer Science Engineer with solid experience at FinTech companies covering the Financial, Cards & Payments, and Banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements in today's evolving world that make everyone's life easier.