Adaptive computation refers to the ability of a machine learning system to adjust its behavior in response to changes in the environment. While conventional neural networks have a fixed function and computation capacity, i.e., they spend the same number of FLOPs processing every input, a model with adaptive and dynamic computation modulates the computational budget it dedicates to each input, depending on that input's complexity.

Adaptive computation in neural networks is appealing for two key reasons. First, the mechanism that introduces adaptivity provides an inductive bias that can play a key role in solving some challenging tasks. For instance, allowing different numbers of computational steps for different inputs can be crucial for solving arithmetic problems that require modeling hierarchies of varying depths. Second, it gives practitioners the ability to tune the cost of inference through the greater flexibility offered by dynamic computation, as these models can be adjusted to spend more FLOPs processing a new input.

Neural networks can be made adaptive by using different functions or computation budgets for different inputs. A deep neural network can be thought of as a function that outputs a result based on both the input and its parameters. To implement adaptive function types, a subset of parameters is selectively activated based on the input, a process referred to as conditional computation. Adaptivity based on function type has been explored in studies on mixture-of-experts, where the sparsely activated parameters for each input sample are determined through routing.
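To make "sparsely activated parameters determined through routing" concrete, here is a minimal sketch of top-k routing as it is commonly used in mixture-of-experts layers. The router weights, the toy experts, and the function names are hypothetical and chosen only for illustration; real MoE layers use learned dense experts and additional load-balancing machinery that is omitted here.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def route_top_k(token, router_weights, k=1):
    """Return (expert_index, gate) pairs for the k highest-scoring experts."""
    probs = softmax([dot(token, w) for w in router_weights])
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in chosen)
    # Renormalize the gates over the selected experts only.
    return [(i, probs[i] / total) for i in chosen]


def moe_layer(token, router_weights, experts, k=1):
    """Run only the k routed experts; the other experts' parameters stay inactive."""
    out = [0.0] * len(token)
    for i, gate in route_top_k(token, router_weights, k):
        y = experts[i](token)
        out = [o + gate * v for o, v in zip(out, y)]
    return out


# Hypothetical router (one weight vector per expert) and toy experts.
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
experts = [
    lambda x: [2 * v for v in x],
    lambda x: [-v for v in x],
    lambda x: [v + 1 for v in x],
]
output = moe_layer([3.0, 0.5], router_weights, experts, k=1)
```

Each token touches only k of the experts, so the function applied (and the parameters used) depends on the input, while the per-token cost stays roughly fixed.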

Another area of research in adaptive computation involves dynamic computation budgets. Unlike standard neural networks, such as T5, GPT-3, PaLM, and ViT, whose computation budget is fixed across samples, recent research has demonstrated that adaptive computation budgets can improve performance on tasks where transformers fall short. Many of these works achieve adaptivity by using dynamic depth to allocate the computation budget. For example, the Adaptive Computation Time (ACT) algorithm was proposed to provide an adaptive computational budget for recurrent neural networks. The Universal Transformer extends the ACT algorithm to transformers by making the computation budget dependent on the number of transformer layers used for each input example or token. Recent studies, like PonderNet, follow a similar approach while improving the dynamic halting mechanisms.
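The halting rule at the heart of ACT can be sketched as follows. This is a minimal illustration that assumes the per-step halting probabilities are already given (in ACT they come from a sigmoid unit applied to the hidden state); the function names and the default `epsilon` are choices made for this sketch.

```python
def act_halting(halting_probs, epsilon=0.01):
    """Return (num_steps, step_weights) under an ACT-style halting rule.

    halting_probs[n - 1] is the halting probability emitted at step n.
    Computation stops at the first step N where the running sum reaches
    1 - epsilon (or at the last available step). Earlier steps keep their
    own probability as weight; step N gets the remainder R = 1 - sum(earlier).
    """
    if not halting_probs:
        raise ValueError("need at least one step")
    weights, cumulative = [], 0.0
    for n, h in enumerate(halting_probs, start=1):
        if cumulative + h >= 1.0 - epsilon or n == len(halting_probs):
            weights.append(1.0 - cumulative)  # remainder R
            return n, weights
        weights.append(h)
        cumulative += h


def combine_states(states, weights):
    """The output is the halting-weighted mean of the per-step states."""
    d = len(states[0])
    return [sum(w * s[j] for w, s in zip(weights, states)) for j in range(d)]


easy_steps, _ = act_halting([0.995])               # confident early: 1 step
hard_steps, w = act_halting([0.2, 0.3, 0.4, 0.9])  # needs 4 steps
ponder_cost = hard_steps + w[-1]                   # N + R, penalized in training
```

An "easy" input that emits a high halting probability early stops after one step, while a "hard" one keeps computing; that per-input step count is the dynamic depth the paragraph describes.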

In the paper “Adaptive Computation with Elastic Input Sequence”, we introduce a new model that uses adaptive computation, called AdaTape. AdaTape is a Transformer-based architecture that uses a dynamic set of tokens to create elastic input sequences, offering a new perspective on adaptivity compared to previous works. AdaTape uses an adaptive tape reading mechanism to determine a varying number of tape tokens that are added to each input based on the input's complexity. AdaTape is very simple to implement, provides an effective knob to increase accuracy when needed, and is also much more efficient than other adaptive baselines because it injects adaptivity directly into the input sequence instead of the model depth. Finally, AdaTape offers better performance on standard tasks, like image classification, as well as on algorithmic tasks, while maintaining a favorable quality and cost tradeoff.

Adaptive computation transformer with elastic input sequence

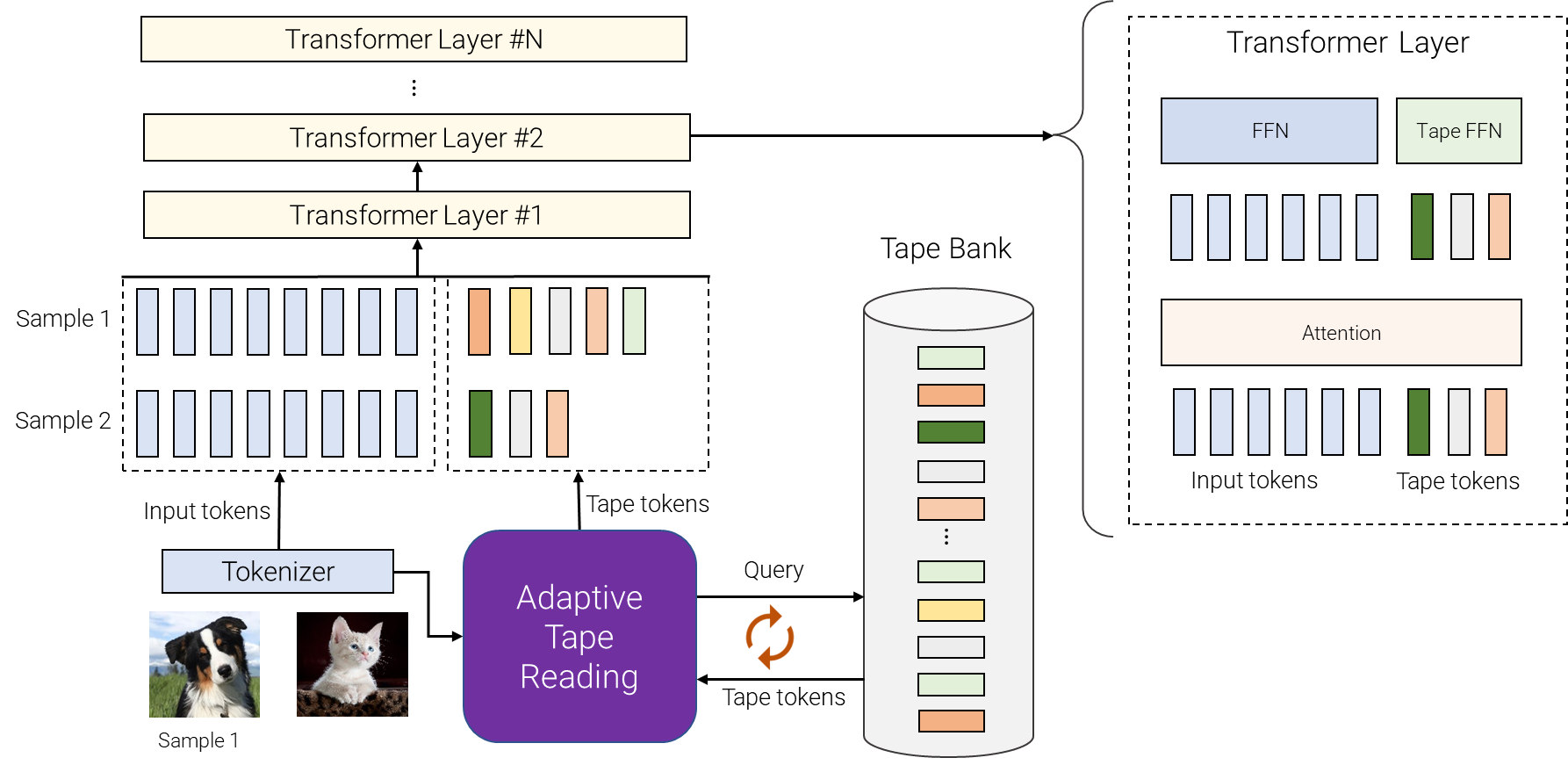

AdaTape uses both adaptive function types and a dynamic computation budget. Specifically, for a batch of input sequences after tokenization (e.g., a linear projection of non-overlapping patches from an image in the vision transformer), AdaTape uses a vector representing each input to dynamically select a variable-sized sequence of tape tokens.
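The selection step can be illustrated with a hypothetical, heavily simplified tape reader. Everything specific here is an assumption for illustration, not the paper's exact mechanism: the query is the mean of the input tokens, bank tokens are scored by dot product, one token is picked per step, and selection stops with an ACT-style cumulative halting score computed from `halt_w` and `halt_b`.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def mean(vectors):
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def read_tape(input_tokens, bank, halt_w, halt_b=0.0, max_tokens=8, epsilon=0.01):
    """Return indices of the bank tokens selected for one input (hypothetical sketch)."""
    query = mean(input_tokens)  # one vector summarizing the whole input
    selected, cumulative = [], 0.0
    while len(selected) < min(max_tokens, len(bank)):
        # Greedily pick the highest-scoring bank token not chosen yet.
        candidates = [i for i in range(len(bank)) if i not in selected]
        best = max(candidates, key=lambda i: dot(query, bank[i]))
        selected.append(best)
        # Lightweight recurrence: fold the chosen token back into the query.
        query = [q + t for q, t in zip(query, bank[best])]
        # Accumulate a halting score; stop once it is close enough to 1.
        cumulative += sigmoid(dot(halt_w, query) + halt_b)
        if cumulative >= 1.0 - epsilon:
            break
    return selected


bank = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
inputs = [[1.0, 0.2], [0.8, 0.0]]
tape = read_tape(inputs, bank, halt_w=[0.5, -0.5], halt_b=-2.0)
```

Because the halting score depends on the evolving query, different inputs stop after different numbers of tape tokens, which is what makes the resulting sequence length elastic.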

AdaTape uses a bank of tokens, called a “tape bank”, to store all the candidate tape tokens that interact with the model through the adaptive tape reading mechanism. We explore two different methods for creating the tape bank: an input-driven bank and a learnable bank.

The general idea of the input-driven bank is to extract a bank of tokens from the input while using a different approach than the original model tokenizer for mapping the raw input to a sequence of input tokens. This enables dynamic, on-demand access to information from the input that is obtained from a different point of view, e.g., a different image resolution or a different level of abstraction.
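One way to picture an input-driven bank for images is to tokenize the same image twice at different granularities: coarse patches for the main input sequence and finer patches for the bank. The patch sizes below (4 for the input, 2 for the bank) and the 8×8 toy image are hypothetical, and the linear projection that would map each flattened patch to the model width is omitted.

```python
def patchify(image, patch):
    """Split a 2D grid (list of rows) into flattened, non-overlapping patch x patch tokens."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for r in range(0, h, patch):          # patches in row-major order
        for c in range(0, w, patch):
            tokens.append([image[r + i][c + j] for i in range(patch) for j in range(patch)])
    return tokens


image = [[r * 8 + c for c in range(8)] for r in range(8)]  # toy 8x8 "image"
input_tokens = patchify(image, 4)  # coarse view: 4 tokens of 16 values each
tape_bank = patchify(image, 2)     # finer view: 16 candidate tape tokens
```

The bank holds the same pixels as the input, only viewed at a finer level of detail, so the model can pull in extra fine-grained information on demand.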

In some cases, tokenization at a different level of abstraction is not possible, so an input-driven tape bank is not feasible, such as when it is difficult to further split each node in a graph transformer. To address this issue, AdaTape offers a more general approach for generating the tape bank by using a set of trainable vectors as tape tokens. This approach is referred to as the learnable bank and can be viewed as an embedding layer from which the model can dynamically retrieve tokens based on the complexity of the input example. The learnable bank enables AdaTape to generate a more flexible tape bank, giving it the ability to dynamically adjust its computation budget based on the complexity of each input example. For example, more complex examples retrieve more tokens from the bank, which lets the model not only use the knowledge stored in the bank, but also spend more FLOPs processing it, since the input is now larger.
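The claim that a longer input means more FLOPs can be made concrete with a back-of-the-envelope count for one encoder layer. This is a rough sketch under common counting conventions (one multiply-add = 2 FLOPs; softmax, layer norm, and biases ignored), and it assumes tape tokens have the same width and FFN size as input tokens. The ViT-B/16-like sizes in the usage lines (197 tokens, width 768, FFN 3072) are illustrative.

```python
def encoder_layer_flops(seq_len, d_model, d_ff):
    """Rough forward FLOPs for one Transformer encoder layer (1 multiply-add = 2 FLOPs)."""
    n, d = seq_len, d_model
    projections = 2 * 4 * n * d * d  # Q, K, V and output projections
    attention = 2 * 2 * n * n * d    # QK^T scores plus the attention-weighted sum of V
    ffn = 2 * 2 * n * d * d_ff       # two dense layers: d -> d_ff -> d
    return projections + attention + ffn


base = encoder_layer_flops(197, 768, 3072)
for k in (0, 16, 64):  # number of tape tokens appended to the input
    ratio = encoder_layer_flops(197 + k, 768, 3072) / base
    print(f"{k:3d} tape tokens -> {ratio:.3f}x the FLOPs of the plain input")
```

Most terms grow linearly with the sequence length and the attention term grows quadratically, so each retrieved tape token adds a predictable amount of compute; this is the knob that lets harder examples spend more.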

Finally, the selected tape tokens are appended to the original input and fed to the subsequent transformer layers. In each transformer layer, the same multi-head attention is used across all input and tape tokens. However, two different feed-forward networks (FFN) are used: one for all tokens from the original input and the other for all tape tokens. We observed slightly better quality when using separate feed-forward networks for input and tape tokens.
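The layer structure described above (shared attention, separate FFNs by token type) can be sketched in a few lines. For brevity this sketch assumes single-head attention with no learned Q/K/V projections and no layer norm, and it takes the two FFNs as plain Python functions; it shows the routing pattern, not AdaTape's actual layer.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head dot-product attention over all tokens (projections omitted)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens])
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return out


def adatape_layer(input_tokens, tape_tokens, ffn_input, ffn_tape):
    """Shared attention over input + tape tokens, then a separate FFN per token type."""
    tokens = input_tokens + tape_tokens
    n_input = len(input_tokens)
    # Residual connection around attention; every token attends to every other token.
    mixed = [[x + a for x, a in zip(t, att)] for t, att in zip(tokens, self_attention(tokens))]
    out = []
    for i, t in enumerate(mixed):
        ffn = ffn_input if i < n_input else ffn_tape  # chosen by token type, not content
        out.append([x + f for x, f in zip(t, ffn(t))])  # residual around the FFN
    return out[:n_input], out[n_input:]


def zero_ffn(t):
    return [0.0] * len(t)


def one_ffn(t):
    return [1.0] * len(t)


inputs = [[1.0, 0.0], [0.0, 1.0]]
tape = [[0.5, 0.5]]  # any number of tape tokens can be appended per example
new_inputs, new_tape = adatape_layer(inputs, tape, zero_ffn, one_ffn)
```

Since attention is shared, tape tokens still influence the input tokens, while each token type gets its own FFN parameters.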

An overview of AdaTape. For different samples, we pick a variable number of different tokens from the tape bank. The tape bank can be driven by the input, e.g., by extracting some extra fine-grained information, or it can be a set of trainable vectors. Adaptive tape reading is used to recursively select different sequences of tape tokens, with variable lengths, for different inputs. These tokens are then simply appended to the inputs and fed to the transformer encoder.

AdaTape provides a helpful inductive bias

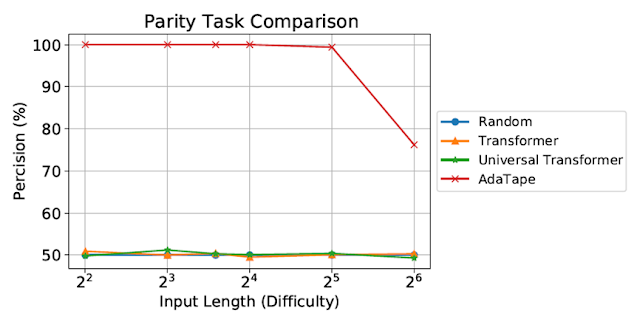

We evaluate AdaTape on parity, a very challenging task for the standard Transformer, to study the effect of the inductive biases in AdaTape. In the parity task, given a sequence of 1s, 0s, and -1s, the model has to predict whether the number of 1s in the sequence is even or odd. Parity is the simplest non-counter-free or periodic regular language, but perhaps surprisingly, the task is unsolvable by the standard Transformer.
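A small generator makes the task definition concrete. The uniform sampling of 1, 0, and -1, the fixed sequence length, and the function names are assumptions for this sketch, not the paper's data pipeline; the label follows the definition above (1 for an odd number of 1s, 0 for an even number).

```python
import random


def parity_label(seq):
    """1 if the sequence contains an odd number of 1s, else 0 (0s and -1s are distractors)."""
    return seq.count(1) % 2


def make_parity_dataset(num_examples, length, seed=0):
    """Sample sequences over {1, 0, -1} uniformly and pair each with its parity label."""
    rng = random.Random(seed)
    data = []
    for _ in range(num_examples):
        seq = [rng.choice([1, 0, -1]) for _ in range(length)]
        data.append((seq, parity_label(seq)))
    return data


examples = make_parity_dataset(num_examples=4, length=10)
```

Flipping any single 1 to a 0 or -1 flips the label, so the model cannot rely on local features and has to track information across the whole sequence.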

Evaluation on the parity task. The standard Transformer and Universal Transformer were unable to perform this task, both showing performance at the level of a random-guessing baseline.

Despite being evaluated on short, simple sequences, both the standard Transformer and Universal Transformers were unable to perform the parity task, as they are unable to maintain a counter within the model. However, AdaTape outperforms all baselines, as it incorporates a lightweight recurrence within its input selection mechanism, providing an inductive bias that enables the implicit maintenance of a counter, which is not possible in standard Transformers.
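Why a recurrence helps can be seen from the smallest possible solver: a left-to-right scan that carries one bit of state and flips it on every 1. This is an illustration of the counter argument above, not AdaTape's mechanism; the function name is hypothetical.

```python
import random


def parity_by_recurrence(seq):
    """Scan left to right with a single bit of state that flips on every 1."""
    state = 0
    for x in seq:
        state ^= int(x == 1)  # counting modulo 2 is all parity requires
    return state


def check_against_count(num_trials=200, max_len=50, seed=0):
    """Compare the recurrent solver with the direct count-based definition."""
    rng = random.Random(seed)
    for _ in range(num_trials):
        seq = [rng.choice([1, 0, -1]) for _ in range(rng.randint(0, max_len))]
        if parity_by_recurrence(seq) != seq.count(1) % 2:
            return False
    return True
```

The same one-bit update works for sequences of any length, which is the kind of per-step state a recurrence can maintain and a fixed stack of standard Transformer layers, per the result above, does not.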

Evaluation on picture classification

We also evaluate AdaTape on image classification. To do so, we trained AdaTape on ImageNet-1K from scratch. The figure below shows the accuracy of AdaTape and the baseline methods, including A-ViT and the Universal Transformer ViT (UViT and U2T), versus their speed (measured as the number of images processed per core per second). In terms of the quality and cost tradeoff, AdaTape performs much better than the alternative adaptive transformer baselines. In terms of efficiency, larger AdaTape models (in terms of parameter count) are faster than smaller baselines. These results are consistent with the finding from previous work that adaptive-depth architectures are not well suited to many accelerators, like the TPU.

We evaluate AdaTape by training on ImageNet from scratch. For A-ViT, we not only report the results from their paper but also re-implement A-ViT by training from scratch, i.e., A-ViT(Ours).

A study of AdaTape's behavior

In addition to its performance on the parity task and ImageNet-1K, we also evaluated the token selection behavior of AdaTape with an input-driven bank on the JFT-300M validation set. To better understand the model's behavior, we visualized the token selection results on the input-driven bank as heatmaps, where lighter colors mean that a position is selected more frequently. The heatmaps reveal that AdaTape picks the central patches more frequently. This aligns with our prior knowledge, as central patches are typically more informative, especially in datasets of natural images, where the main object tends to be in the middle of the image. This result suggests that AdaTape can effectively identify and prioritize the more informative patches to improve its performance.
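Heatmaps like these can be produced by counting how often each bank position is selected across a dataset and normalizing the counts. The sketch below assumes bank patches are indexed in row-major order on a `grid_h` × `grid_w` grid and normalizes by the most frequent position, so 1.0 marks the most frequently selected patch; both choices are assumptions for illustration.

```python
def selection_heatmap(selected_per_example, grid_h, grid_w):
    """Turn per-example lists of selected patch indices into a normalized frequency grid."""
    counts = [[0] * grid_w for _ in range(grid_h)]
    for indices in selected_per_example:
        for idx in indices:
            r, c = divmod(idx, grid_w)  # row-major patch order
            counts[r][c] += 1
    peak = max(max(row) for row in counts) or 1  # avoid dividing by zero
    return [[v / peak for v in row] for row in counts]


# Hypothetical selections from three examples on a 3x3 grid of bank patches.
heat = selection_heatmap([[4], [4, 1], [4]], grid_h=3, grid_w=3)
```

Plotting `heat` with any image library gives the kind of map shown below; a bright center corresponds to the central-patch preference described above.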

We visualize the tape token selection heatmaps of AdaTape-B/32 (left) and AdaTape-B/16 (right). A hotter / lighter color means the patch at that position is selected more frequently.

Conclusion

AdaTape is characterized by elastic sequence lengths generated by the adaptive tape reading mechanism. This also introduces a new inductive bias that gives AdaTape the potential to solve tasks that are challenging for both standard transformers and existing adaptive transformers. Through comprehensive experiments on image recognition benchmarks, we demonstrate that AdaTape outperforms standard transformers and adaptive-architecture transformers when computation is held constant.

Acknowledgments

One of the authors of this submit, Mostafa Dehghani, is now at Google DeepMind.