Someone’s prior beliefs about an artificial intelligence agent, like a chatbot, have a significant effect on their interactions with that agent and their perception of its trustworthiness, empathy, and effectiveness, according to a new study.

Researchers from MIT and Arizona State University found that priming users (by telling them that a conversational AI agent for mental health support was either empathetic, neutral, or manipulative) influenced their perception of the chatbot and shaped how they communicated with it, even though they were speaking to the exact same chatbot.

Most users who were told the AI agent was caring believed that it was, and they also gave it higher performance ratings than those who believed it was manipulative. At the same time, less than half of the users who were told the agent had manipulative motives thought the chatbot was actually malicious, indicating that people may try to “see the good” in AI the same way they do in their fellow humans.

The study revealed a feedback loop between users’ mental models, or their perception of an AI agent, and that agent’s responses. The sentiment of user-AI conversations became more positive over time if the user believed the AI was empathetic, while the opposite was true for users who thought it was nefarious.

“From this study, we see that to some extent, the AI is the AI of the beholder,” says Pat Pataranutaporn, a graduate student in the Fluid Interfaces group of the MIT Media Lab and co-lead author of a paper describing this study. “When we describe to users what an AI agent is, it does not just change their mental model, it also changes their behavior. And since the AI responds to the user, when the person changes their behavior, that changes the AI, as well.”

Pataranutaporn is joined by co-lead author and fellow MIT graduate student Ruby Liu; Ed Finn, associate professor in the Center for Science and Imagination at Arizona State University; and senior author Pattie Maes, professor of media technology and head of the Fluid Interfaces group at MIT.

The study, published today in Nature Machine Intelligence, highlights the importance of studying how AI is presented to society, since the media and popular culture strongly influence our mental models. The authors also raise a cautionary flag, since the same types of priming statements in this study could be used to deceive people about an AI’s motives or capabilities.

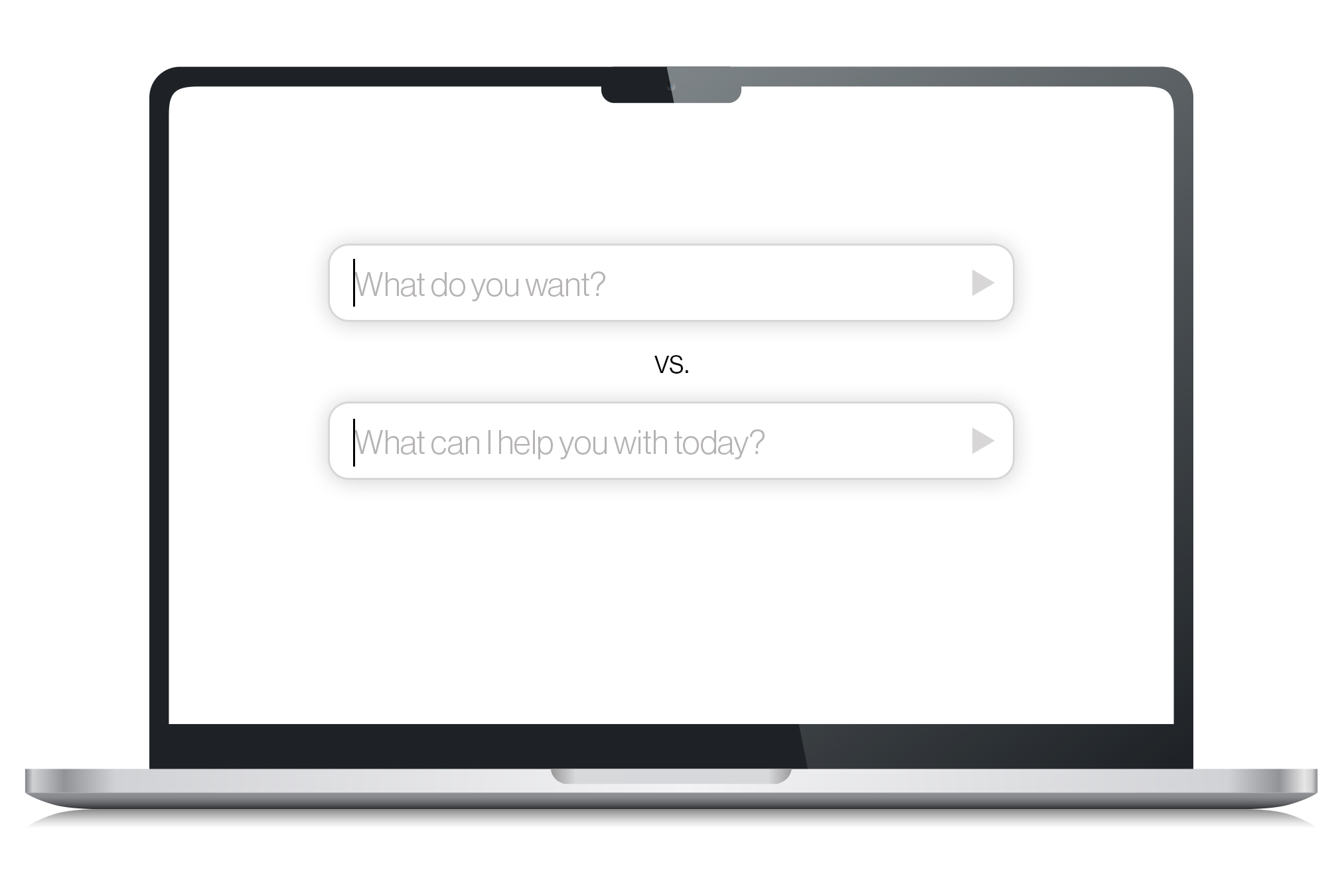

“A lot of people think of AI as only an engineering problem, but the success of AI is also a human factors problem. The way we talk about AI, even the name that we give it in the first place, can have an enormous impact on the effectiveness of these systems when you put them in front of people. We have to think more about these issues,” Maes says.

AI friend or foe?

In this study, the researchers sought to determine how much of the empathy and effectiveness people see in AI is based on their subjective perception and how much is based on the technology itself. They also wanted to explore whether one could manipulate someone’s subjective perception with priming.

“The AI is a black box, so we tend to associate it with something else that we can understand. We make analogies and metaphors. But what is the right metaphor we can use to think about AI? The answer is not straightforward,” Pataranutaporn says.

They designed a study in which humans interacted with a conversational AI mental health companion for about 30 minutes to determine whether they would recommend it to a friend, and then rated the agent and their experiences. The researchers recruited 310 participants and randomly split them into three groups, which were each given a priming statement about the AI.

One group was told the agent had no motives, the second group was told the AI had benevolent intentions and cared about the user’s well-being, and the third group was told the agent had malicious intentions and would try to deceive users. While it was challenging to settle on only three primers, the researchers chose statements they thought fit the most common perceptions about AI, Liu says.

Half the participants in each group interacted with an AI agent based on the generative language model GPT-3, a powerful deep-learning model that can generate human-like text. The other half interacted with an implementation of the chatbot ELIZA, a less sophisticated rule-based natural language processing program developed at MIT in the 1960s.
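The design described above amounts to a three-by-two layout: three priming statements crossed with two chatbots. The following is a minimal sketch of how such a random assignment could be done, not the researchers’ actual procedure. The article does not report exact group sizes or how the randomization was carried out, so the labels, the function name `assign_conditions`, the fixed seed, and the round-robin balancing are all assumptions made for illustration.

```python
import random
from collections import Counter

# Hypothetical labels for the three priming statements and two chatbots.
PRIMERS = ["neutral", "positive", "negative"]  # no motives / benevolent / malicious
AGENTS = ["gpt3", "eliza"]


def assign_conditions(n_participants, seed=0):
    """Randomly assign participant IDs to (primer, agent) cells.

    Participants are shuffled, then dealt round-robin into the six cells,
    so cell sizes differ by at most one. This balancing scheme is an
    assumption; the article only says the split was random.
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    ids = list(range(n_participants))
    rng.shuffle(ids)
    cells = [(primer, agent) for primer in PRIMERS for agent in AGENTS]
    return {pid: cells[i % len(cells)] for i, pid in enumerate(ids)}


assignment = assign_conditions(310)
cell_sizes = Counter(assignment.values())
for cell, size in sorted(cell_sizes.items()):
    print(cell, size)
```

Because 310 is not divisible by six, the cells cannot be exactly equal in this sketch; dealing from a shuffled list keeps them within one participant of each other.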

Molding mental models

Post-survey results revealed that simple priming statements can strongly influence a user’s mental model of an AI agent, and that the positive primers had a greater effect. Only 44 percent of those given negative primers believed them, while 88 percent of those in the positive group and 79 percent of those in the neutral group believed the AI was empathetic or neutral, respectively.

“With the negative priming statements, rather than priming them to believe something, we were priming them to form their own opinion. If you tell someone to be suspicious of something, then they might just be more suspicious in general,” Liu says.

But the capabilities of the technology do play a role, since the effects were more significant for the more sophisticated GPT-3 based conversational chatbot.

The researchers were surprised to see that users rated the effectiveness of the chatbots differently based on the priming statements. Users in the positive group awarded their chatbots higher marks for giving mental health advice, despite the fact that all agents were identical.

Interestingly, they also saw that the sentiment of conversations changed based on how users were primed. People who believed the AI was caring tended to interact with it in a more positive way, making the agent’s responses more positive. The negative priming statements had the opposite effect. This impact on sentiment was amplified as the conversation progressed, Maes adds.

The results of the study suggest that because priming statements can have such a strong impact on a user’s mental model, one could use them to make an AI agent seem more capable than it is, which might lead users to place too much trust in an agent and follow incorrect advice.

“Maybe we should prime people more to be careful and to understand that AI agents can hallucinate and are biased. How we talk about AI systems will ultimately have a big effect on how people respond to them,” Maes says.

In the future, the researchers want to see how AI-user interactions would be affected if the agents were designed to counteract some user bias. For instance, perhaps someone with a highly positive perception of AI is given a chatbot that responds in a neutral or even a slightly negative way so the conversation stays more balanced.

They also want to use what they’ve learned to enhance certain AI applications, like mental health treatments, where it could be beneficial for the user to believe an AI is empathetic. In addition, they want to conduct a longer-term study to see how a user’s mental model of an AI agent changes over time.

This research was funded, in part, by the Media Lab, the Harvard-MIT Program in Health Sciences and Technology, Accenture, and KBTG.