A researcher from Johannes Kepler University has introduced GateLoop, a novel sequence model that leverages the potential of linear recurrence for efficient long-sequence modeling. It generalizes linear recurrent models and outperforms them in auto-regressive language modeling. GateLoop offers a low-cost recurrent mode and an efficient parallel mode, and it introduces a surrogate attention mode that has implications for Transformer architectures. It provides data-controlled relative-positional information to Attention, emphasizing the importance of data-controlled cumulative products for more robust sequence models, beyond the traditional cumulative sums used in existing models.

GateLoop is a versatile sequence model that extends the capabilities of linear recurrent models like S4, S5, LRU, and RetNet by employing data-controlled state transitions. GateLoop excels in auto-regressive language modeling, offering both a cost-efficient recurrent mode and a highly efficient parallel mode. It introduces a surrogate attention mode with implications for Transformer architectures. The study discusses key aspects like prefix-cumulative-product pre-computation, operator associativity, and non-data-controlled parameterization. GateLoop is empirically validated with lower perplexity scores on the WikiText-103 dataset. Existing models are shown to underutilize linear recurrence’s potential, which GateLoop addresses with data-controlled transitions and complex cumulative products.

Sequences with long-range dependencies pose challenges in machine learning and have traditionally been tackled with Recurrent Neural Networks (RNNs). However, RNNs suffer from vanishing and exploding gradients, which hinders their stability on long sequences. Gated variants like LSTM and GRU alleviate these problems but still need to be more efficient. Transformers introduced attention mechanisms for global dependencies, eliminating recurrence. Although they enable efficient parallel training and global pairwise dependencies, their quadratic complexity limits their use with long sequences. Linear Recurrent Models (LRMs) offer an alternative, with GateLoop as a foundational sequence model that generalizes LRMs through data-controlled state transitions, excels in auto-regressive language modeling, and provides versatile operational modes.

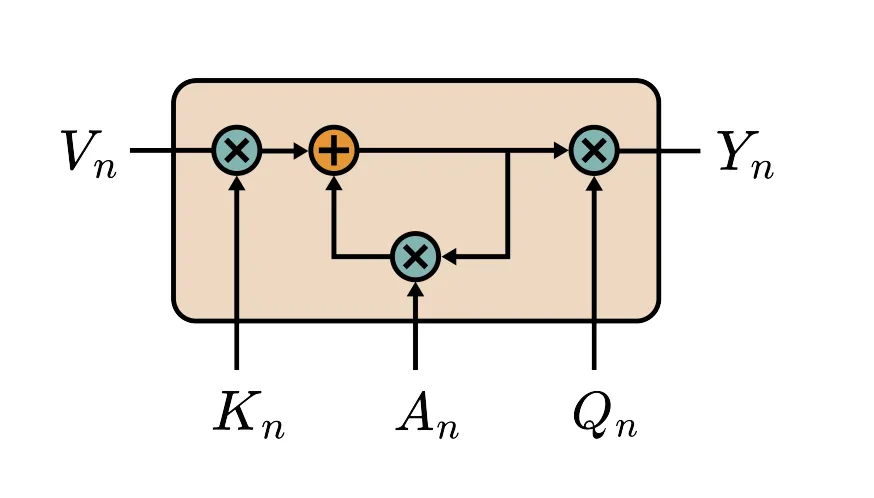

GateLoop offers an efficient O(l) recurrent mode, an optimized O(l log₂ l) parallel mode, and an O(l²) surrogate attention mode that provides data-controlled relative-positional information to Attention. Experiments on the WikiText-103 benchmark demonstrate GateLoop’s strength in autoregressive natural language modeling. A synthetic task confirms the empirical advantage of data-controlled over non-data-controlled state transitions. Key aspects include prefix-cumulative-product pre-computation and non-data-controlled parameterization to prevent variable blow-up.
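To make the link between the recurrent and parallel modes concrete, here is a minimal sketch, not the paper's implementation. It assumes a scalar (possibly complex) state updated as h_t = a_t · h_{t-1} + b_t, where a_t stands for the state transition and b_t for the gated input. The function names and the choice of a Hillis-Steele style scan are illustrative assumptions. The point is that composing two update steps is associative, so the sequence can be processed in about log₂ l rounds instead of l sequential steps.

```python
# Illustrative sketch only: scalar state, hypothetical function names.

def combine(left, right):
    """Compose two steps of the form h -> a*h + b; `left` is applied first."""
    a1, b1 = left
    a2, b2 = right
    return (a1 * a2, a2 * b1 + b2)


def recurrent_scan(a, b):
    """Sequential O(l) reference: h_t = a_t * h_{t-1} + b_t, with h_0 = 0."""
    h, out = 0j, []
    for a_t, b_t in zip(a, b):
        h = a_t * h + b_t
        out.append(h)
    return out


def parallel_scan(a, b):
    """Hillis-Steele inclusive scan: about log2(l) rounds of l combines each."""
    elems = list(zip(a, b))
    offset = 1
    while offset < len(elems):
        # Each position reads only values from the previous round, so all
        # combines inside one round are independent and could run in parallel.
        elems = [
            combine(elems[i - offset], elems[i]) if i >= offset else elems[i]
            for i in range(len(elems))
        ]
        offset *= 2
    # Because h_0 = 0, the accumulated offset term is the hidden state itself.
    return [b_t for _, b_t in elems]


a = [0.9 + 0.1j, 0.5j, 1.0 + 0j, -0.3 + 0.4j, 0.8 + 0j]
b = [1.0 + 0j, 2.0 + 0j, -1.0 + 0j, 0.5 + 0j, 3.0 + 0j]
seq = recurrent_scan(a, b)
par = parallel_scan(a, b)
assert all(abs(x - y) < 1e-9 for x, y in zip(seq, par))
```

This scan performs l combines in each of roughly log₂ l rounds, which is one way to arrive at an O(l log₂ l) cost. Work-efficient scan variants exist as well, and the sketch does not claim to match the scan used in the paper.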

GateLoop, a sequence model incorporating data-controlled state transitions, excels in auto-regressive language modeling, as demonstrated in experiments on the WikiText-103 benchmark. It achieves a lower test perplexity than other models, highlighting the practical benefits of data-controlled state transitions in sequence modeling. GateLoop’s ability to forget memories input-dependently allows it to manage its hidden state effectively and retain relevant information. The paper outlines future research directions, including exploring initialization strategies, amplitude and phase activations, and the interpretability of learned state transitions for deeper model understanding.
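Input-dependent forgetting can be illustrated with a toy example. The sketch below is an assumption-laden simplification: the state is a single real number, the `RESET` marker and the hand-written gate rule are hypothetical, and in GateLoop the gates would be learned from the input rather than hard-coded. It contrasts a gate that depends on the current token with a fixed, input-independent decay.

```python
# Toy illustration only: hypothetical RESET token and hand-written gates.
RESET = None  # marker meaning "forget everything seen so far"


def linear_recurrence(gates, inputs):
    """Recurrent mode: h_t = a_t * h_{t-1} + x_t, starting from h_0 = 0."""
    h, states = 0.0, []
    for a_t, x_t in zip(gates, inputs):
        h = a_t * h + x_t
        states.append(h)
    return states


def data_controlled_gates(tokens):
    """Gate chosen from the input: 0 erases the state, 1 keeps it."""
    return [0.0 if tok is RESET else 1.0 for tok in tokens]


def fixed_gates(tokens, decay=0.9):
    """Input-independent gate: the same decay at every position."""
    return [decay for _ in tokens]


tokens = [1.0, 2.0, RESET, 4.0, 5.0]
inputs = [0.0 if tok is RESET else tok for tok in tokens]

# The data-controlled gate clears the state at the RESET token, so the
# final state reflects only the inputs that came after it.
print(linear_recurrence(data_controlled_gates(tokens), inputs))
# → [1.0, 3.0, 0.0, 4.0, 9.0]

# With a fixed decay, the earlier inputs still leak into the final state.
print(linear_recurrence(fixed_gates(tokens), inputs))
```

With a fixed transition the model can only fade old content at a constant rate, whereas a gate computed from the input can drop it exactly when the data calls for it.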

GateLoop, a fully data-controlled linear RNN, extends existing linear recurrent models through data-controlled gating of inputs, outputs, and state transitions. It excels in auto-regressive language modeling, outperforming other models. GateLoop’s mechanism provides relative positional information to Attention and can be reformulated in an equivalent surrogate attention mode with O(l²) complexity. Empirical results validate the efficacy of fully data-controlled linear recurrence in autoregressive language modeling. The model can forget memories input-dependently, making room for pertinent information. Future research avenues include exploring different initialization strategies, amplitude and phase activations, and enhancing the interpretability of learned state transitions.
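The equivalence between the recurrent form and a surrogate attention form can be checked numerically on a small example. The following sketch rests on assumptions not spelled out in this article: scalar queries, keys, and values, a recurrence of the form h_t = a_t · h_{t-1} + k_t · v_t with output y_t = q_t · h_t, and nonzero gates so that ratios of prefix cumulative products are defined. All names are hypothetical.

```python
import cmath


def recurrent_mode(q, k, v, a):
    """O(l) reference: h_t = a_t * h_{t-1} + k_t * v_t, y_t = q_t * h_t."""
    h, ys = 0j, []
    for q_t, k_t, v_t, a_t in zip(q, k, v, a):
        h = a_t * h + k_t * v_t
        ys.append(q_t * h)
    return ys


def surrogate_attention_mode(q, k, v, a):
    """O(l^2) form: y_t = sum over s <= t of q_t * (a_{s+1}...a_t) * k_s * v_s."""
    # Prefix cumulative products P_t = a_1 * ... * a_t, computed once up front.
    prefix, running = [], 1 + 0j
    for a_t in a:
        running *= a_t
        prefix.append(running)
    ys = []
    for t in range(len(q)):
        total = 0j
        for s in range(t + 1):
            # P_t / P_s is the accumulated transition from position s to t.
            # It plays the role of a data-controlled relative-position weight.
            # Assumes every gate is nonzero so the division is defined.
            weight = q[t] * (prefix[t] / prefix[s]) * k[s]
            total += weight * v[s]
        ys.append(total)
    return ys


# Gates given by amplitude and phase (arbitrary illustrative values).
a = [cmath.rect(0.9, 0.3), cmath.rect(0.5, -1.0),
     cmath.rect(0.8, 2.0), cmath.rect(0.95, 0.1)]
q = [1.0, 0.5, -1.0, 2.0]
k = [0.3, 1.0, 0.7, -0.2]
v = [1.0, -2.0, 0.5, 3.0]

rec = recurrent_mode(q, k, v, a)
att = surrogate_attention_mode(q, k, v, a)
assert all(abs(x - y) < 1e-9 for x, y in zip(rec, att))
```

In this form, the pairwise weight between positions s and t is a cumulative product of input-dependent gates rather than a fixed function of the distance t − s, which is one way to read the statement that the mechanism supplies data-controlled relative-positional information to Attention.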

Check out the Paper. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.