Image retrieval performs an important function in serps. Typically, their customers depend on both image or textual content as a question to retrieve a desired goal image. However, text-based retrieval has its limitations, as describing the goal image precisely utilizing words may be difficult. For occasion, when looking out for a vogue merchandise, customers might want an merchandise whose particular attribute, e.g., the colour of a emblem or the brand itself, is totally different from what they discover in an internet site. Yet looking out for the merchandise in an present search engine isn’t trivial since exactly describing the style merchandise by textual content may be difficult. To deal with this truth, composed image retrieval (CIR) retrieves photos based mostly on a question that mixes each an image and a textual content pattern that gives directions on how to modify the image to match the supposed retrieval goal. Thus, CIR permits exact retrieval of the goal image by combining image and textual content.

However, CIR strategies require giant quantities of labeled information, i.e., triplets of a 1) question image, 2) description, and three) goal image. Collecting such labeled information is dear, and fashions educated on this information are sometimes tailor-made to a selected use case, limiting their capacity to generalize to totally different datasets.

To deal with these challenges, in “Pic2Word: Mapping Pictures to Words for Zero-shot Composed Image Retrieval”, we suggest a job known as zero-shot CIR (ZS-CIR). In ZS-CIR, we purpose to construct a single CIR mannequin that performs a wide range of CIR duties, corresponding to object composition, attribute modifying, or area conversion, with out requiring labeled triplet information. Instead, we suggest to prepare a retrieval mannequin utilizing large-scale image-caption pairs and unlabeled photos, that are significantly simpler to accumulate than supervised CIR datasets at scale. To encourage reproducibility and additional advance this house, we additionally launch the code.

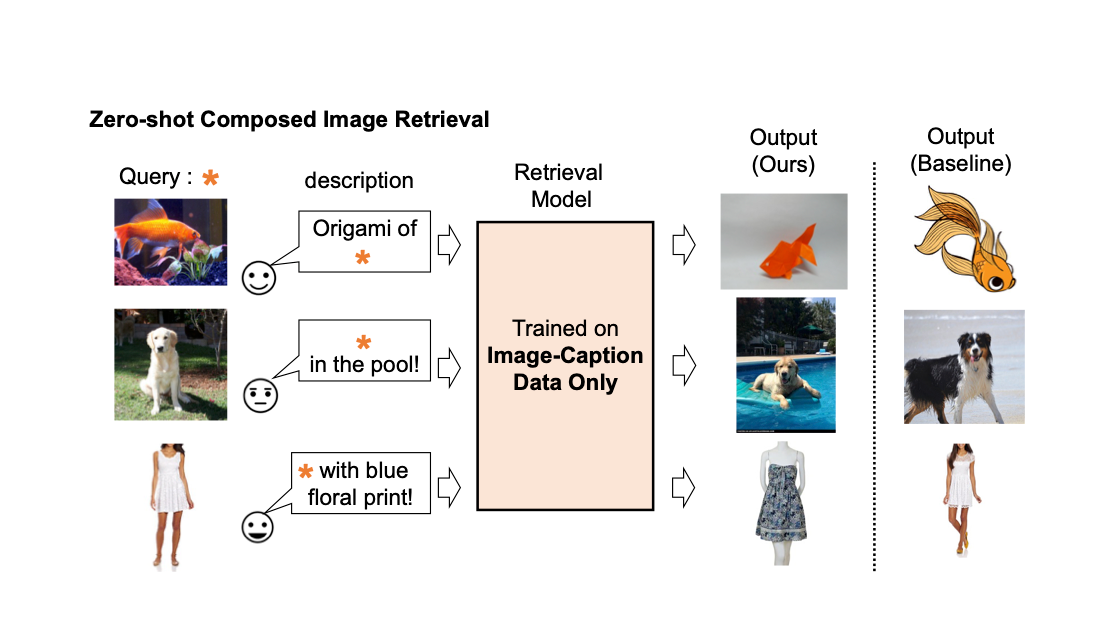

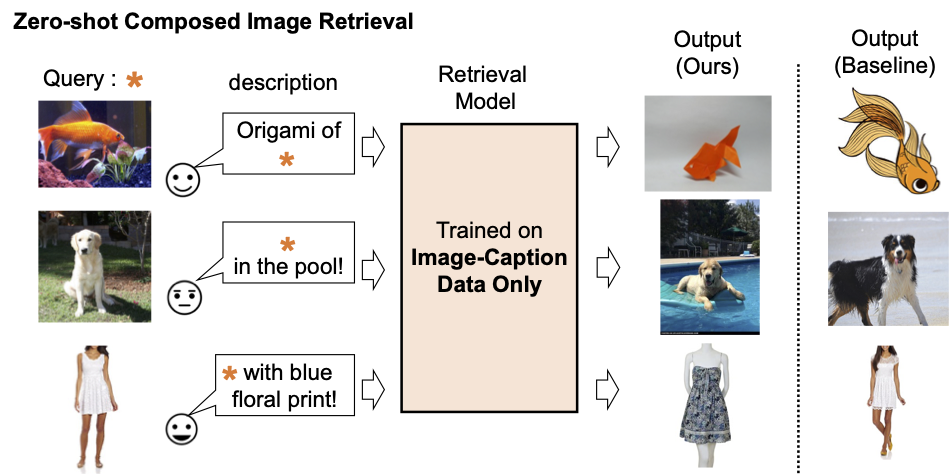

|

| Description of present composed image retrieval mannequin. |

|

| We prepare a composed image retrieval mannequin utilizing image-caption information solely. Our mannequin retrieves photos aligned with the composition of the question image and textual content. |

Method overview

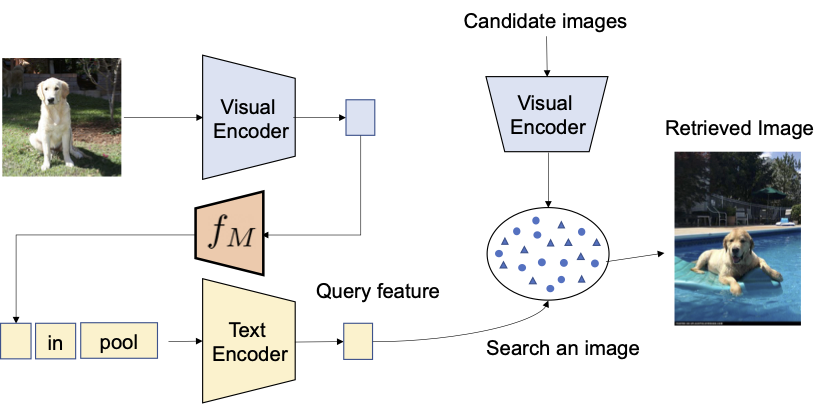

We suggest to leverage the language capabilities of the language encoder within the contrastive language-image pre-trained mannequin (CLIP), which excels at producing semantically significant language embeddings for a variety of textual ideas and attributes. To that finish, we use a light-weight mapping sub-module in CLIP that’s designed to map an enter image (e.g., a photograph of a cat) from the image embedding house to a phrase token (e.g., “cat”) within the textual enter house. The entire community is optimized with the vision-language contrastive loss to once more make sure the visible and textual content embedding areas are as shut as attainable given a pair of an image and its textual description. Then, the question image may be handled as if it’s a phrase. This allows the versatile and seamless composition of question image options and textual content descriptions by the language encoder. We name our technique Pic2Word and supply an summary of its coaching course of within the determine under. We need the mapped token s to characterize the enter image within the type of phrase token. Then, we prepare the mapping community to reconstruct the image embedding within the language embedding, p. Specifically, we optimize the contrastive loss proposed in CLIP computed between the visible embedding v and the textual embedding p.

|

| Training of the mapping community (fM) utilizing unlabeled photos solely. We optimize solely the mapping community with a frozen visible and textual content encoder. |

Given the educated mapping community, we will regard an image as a phrase token and pair it with the textual content description to flexibly compose the joint image-text question as proven within the determine under.

|

| With the educated mapping community, we regard the image as a phrase token and pair it with the textual content description to flexibly compose the joint image-text question. |

Evaluation

We conduct a wide range of experiments to consider Pic2Word’s efficiency on a wide range of CIR duties.

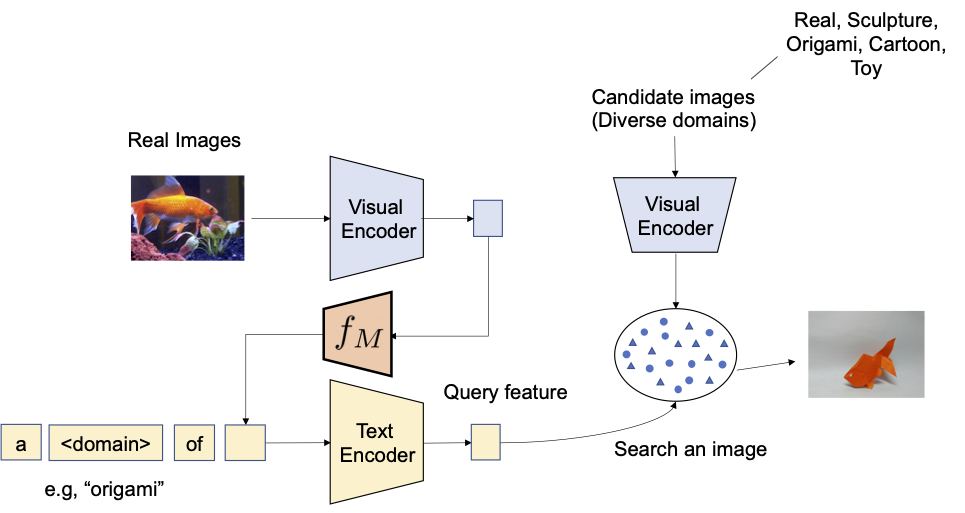

Domain conversion

We first consider the aptitude of compositionality of the proposed technique on area conversion — given an image and the specified new image area (e.g., sculpture, origami, cartoon, toy), the output of the system ought to be an image with the identical content material however within the new desired image area or model. As illustrated under, we consider the power to compose the class data and area description given as an image and textual content, respectively. We consider the conversion from actual photos to 4 domains utilizing ImageInternet and ImageInternet-R.

To examine with approaches that don’t require supervised coaching information, we choose three approaches: (i) image solely performs retrieval solely with visible embedding, (ii) textual content solely employs solely textual content embedding, and (iii) image + textual content averages the visible and textual content embedding to compose the question. The comparability with (iii) reveals the significance of composing image and textual content utilizing a language encoder. We additionally examine with Combiner, which trains the CIR mannequin on Fashion-IQ or CIRR.

|

| We purpose to convert the area of the enter question image into the one described with textual content, e.g., origami. |

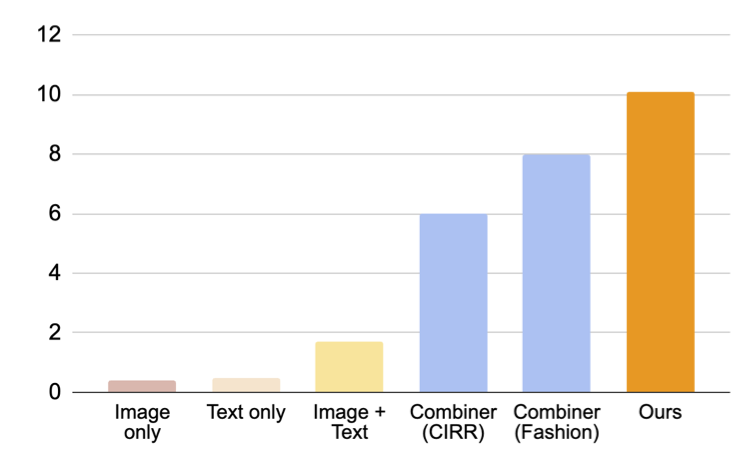

As proven in determine under, our proposed method outperforms baselines by a big margin.

|

| Results (recall@10, i.e., the proportion of related situations within the first 10 photos retrieved.) on composed image retrieval for area conversion. |

Fashion attribute composition

Next, we consider the composition of vogue attributes, corresponding to the colour of fabric, emblem, and size of sleeve, utilizing the Fashion-IQ dataset. The determine under illustrates the specified output given the question.

|

| Overview of CIR for vogue attributes. |

In the determine under, we current a comparability with baselines, together with supervised baselines that utilized triplets for coaching the CIR mannequin: (i) CB makes use of the identical structure as our method, (ii) CIRPLANT, ALTEMIS, MAAF use a smaller spine, corresponding to ResNet50. Comparison to these approaches will give us the understanding on how properly our zero-shot method performs on this job.

Although CB outperforms our method, our technique performs higher than supervised baselines with smaller backbones. This consequence means that by using a strong CLIP mannequin, we will prepare a extremely efficient CIR mannequin with out requiring annotated triplets.

|

| Results (recall@10, i.e., the proportion of related situations within the first 10 photos retrieved.) on composed image retrieval for Fashion-IQ dataset (greater is healthier). Light blue bars prepare the mannequin utilizing triplets. Note that our method performs on par with these supervised baselines with shallow (smaller) backbones. |

Qualitative outcomes

We present a number of examples within the determine under. Compared to a baseline technique that doesn’t require supervised coaching information (textual content + image characteristic averaging), our method does a greater job of accurately retrieving the goal image.

|

| Qualitative outcomes on numerous question photos and textual content description. |

Conclusion and future work

In this text, we introduce Pic2Word, a way for mapping pictures to words for ZS-CIR. We suggest to convert the image right into a phrase token to obtain a CIR mannequin utilizing solely an image-caption dataset. Through a wide range of experiments, we confirm the effectiveness of the educated mannequin on numerous CIR duties, indicating that coaching on an image-caption dataset can construct a robust CIR mannequin. One potential future analysis path is using caption information to prepare the mapping community, though we use solely image information within the current work.

Acknowledgements

This analysis was carried out by Kuniaki Saito, Kihyuk Sohn, Xiang Zhang, Chun-Liang Li, Chen-Yu Lee, Kate Saenko, and Tomas Pfister. Also thanks to Zizhao Zhang and Sergey Ioffe for their priceless suggestions.