Medicine is an inherently multimodal discipline. When providing care, clinicians routinely interpret data from a wide range of modalities, including medical images, clinical notes, lab tests, electronic health records, genomics, and more. Over the last decade or so, AI systems have achieved expert-level performance on specific tasks within specific modalities: some AI systems process CT scans, others analyze high-magnification pathology slides, and still others hunt for rare genetic variations. The inputs to these systems tend to be complex data such as images, and they typically provide structured outputs, whether in the form of discrete grades or dense image segmentation masks. In parallel, the capacities and capabilities of large language models (LLMs) have become so advanced that they have demonstrated comprehension and expertise in medical knowledge by both interpreting and responding in plain language. But how do we bring these capabilities together to build medical AI systems that can leverage information from all these sources?

In today’s blog post, we outline a spectrum of approaches to bringing multimodal capabilities to LLMs and share some exciting results on the tractability of building multimodal medical LLMs, as described in three recent research papers. The papers, in turn, outline how to introduce de novo modalities to an LLM, how to graft a state-of-the-art medical imaging foundation model onto a conversational LLM, and first steps towards building a truly generalist multimodal medical AI system. If successfully matured, multimodal medical LLMs might serve as the basis of new assistive technologies spanning professional medicine, medical research, and consumer applications. As with our prior work, we emphasize the need for careful evaluation of these technologies in collaboration with the medical community and healthcare ecosystem.

A spectrum of approaches

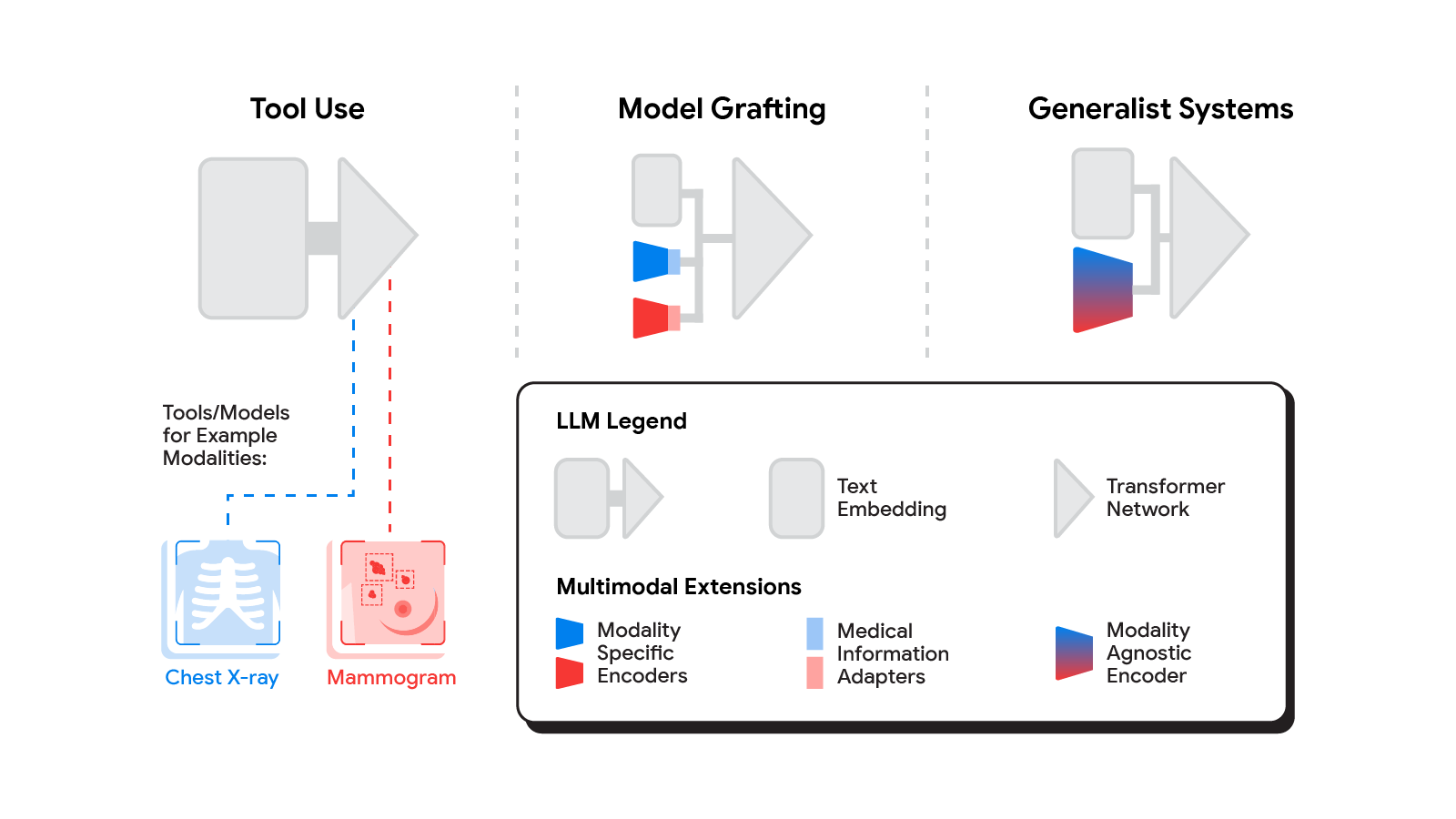

Several methods for building multimodal LLMs have been proposed in recent months [1, 2, 3], and no doubt new methods will continue to emerge for some time. For the purpose of understanding the opportunities to bring new modalities to medical AI systems, we’ll consider three broadly defined approaches: tool use, model grafting, and generalist systems.

The spectrum of approaches to building multimodal LLMs ranges from having the LLM use existing tools or models, to leveraging domain-specific components with an adapter, to joint modeling of a multimodal model.

Tool use

In the tool use approach, one central medical LLM outsources analysis of data in various modalities to a set of software subsystems independently optimized for those tasks: the tools. The common mnemonic example of tool use is teaching an LLM to use a calculator rather than do arithmetic on its own. In the medical space, a medical LLM faced with a chest X-ray could forward that image to a radiology AI system and integrate that response. This could be accomplished via application programming interfaces (APIs) offered by subsystems, or, more fancifully, by two medical AI systems with different specializations engaging in a conversation.
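To make the routing concrete, here is a minimal Python sketch that assumes a registry of tools keyed by modality. `Attachment`, `TOOLS`, `build_prompt`, and the two tool functions are hypothetical names. The tools are stubs that return placeholder text rather than real findings, and the step where the central LLM actually consumes the prompt is omitted.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Attachment:
    modality: str   # hypothetical modality label, e.g. "chest_xray"
    payload: bytes  # raw data; ignored by the stub tools below


# Hypothetical stand-ins for independently developed and validated subsystems.
# A real deployment would call each provider's API here instead.
def radiology_tool(payload: bytes) -> str:
    return "STUB radiology report"


def pathology_tool(payload: bytes) -> str:
    return "STUB pathology report"


TOOLS: Dict[str, Callable[[bytes], str]] = {
    "chest_xray": radiology_tool,
    "pathology_slide": pathology_tool,
}


def build_prompt(question: str, attachment: Attachment,
                 audit_log: List[Tuple[str, str]]) -> str:
    """Route the attachment to a tool and fold its text reply into the LLM prompt."""
    tool = TOOLS.get(attachment.modality)
    if tool is None:
        report = f"no tool registered for modality '{attachment.modality}'"
    else:
        report = tool(attachment.payload)
    # Every exchange is plain text, so it can be logged for auditing and debugging.
    audit_log.append((attachment.modality, report))
    return (f"Tool report ({attachment.modality}): {report}\n"
            f"Question: {question}\n"
            f"Answer:")


log: List[Tuple[str, str]] = []
prompt = build_prompt("Is there evidence of pneumonia?",
                      Attachment("chest_xray", b""), log)
print(prompt)
```

Because the tool's reply is ordinary text, the audit log captures exactly what the central model was told. This is the auditability benefit discussed next. It also shows the matching weakness: anything the tool does not put into words never reaches the LLM.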

This approach has some important benefits. It allows maximum flexibility and independence between subsystems, enabling health systems to mix and match products from different tech providers based on the validated performance characteristics of each subsystem. Moreover, human-readable communication channels between subsystems maximize auditability and debuggability. That said, getting the communication right between independent subsystems can be tricky: the channel can narrow the information transferred, or expose a risk of miscommunication and information loss.

Model grafting

A more integrated approach would be to take a neural network specialized for each relevant domain and adapt it to plug directly into the LLM, grafting the visual model onto the core reasoning agent. In tool use, the LLM determines which specific tool(s) to use. In model grafting, by contrast, the researchers may choose to use, refine, or develop specific models during development. In two recent papers from Google Research, we show that this is in fact feasible. Neural LLMs typically process text by first mapping words into a vector embedding space. Both papers build on the idea of mapping data from a new modality into the input word embedding space already familiar to the LLM. The first paper, “Multimodal LLMs for health grounded in individual-specific data”, shows that asthma risk prediction in the UK Biobank can be improved if we first train a neural network classifier to interpret spirograms (a modality used to assess breathing ability) and then adapt the output of that network to serve as input into the LLM.
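The core idea of mapping a new modality into the LLM's word embedding space can be sketched as a learned linear projection. The projection turns one encoder output vector into a few "soft tokens", which are then placed ahead of the embedded text prompt. This is an illustrative assumption, not the paper's exact architecture. `LinearAdapter`, `build_llm_input`, and all sizes are hypothetical. The weights are randomly initialized here and would be learned in practice.

```python
import random
from typing import List

Vector = List[float]


def matvec(W: List[Vector], x: Vector) -> Vector:
    """Multiply matrix W (rows x cols) by vector x (length cols)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class LinearAdapter:
    """Hypothetical adapter: maps one encoder output vector to `num_tokens`
    vectors in the LLM's input word-embedding space (width `d_model`)."""

    def __init__(self, d_in: int, d_model: int, num_tokens: int, seed: int = 0):
        rng = random.Random(seed)
        self.d_model = d_model
        self.num_tokens = num_tokens
        # Random initialization only; in practice these weights would be learned
        # on the downstream task.
        self.W = [[rng.gauss(0.0, 0.02) for _ in range(d_in)]
                  for _ in range(num_tokens * d_model)]

    def __call__(self, features: Vector) -> List[Vector]:
        flat = matvec(self.W, features)
        # Split the flat projection into num_tokens "soft tokens".
        return [flat[i * self.d_model:(i + 1) * self.d_model]
                for i in range(self.num_tokens)]


def build_llm_input(soft_tokens: List[Vector],
                    text_embeddings: List[Vector]) -> List[Vector]:
    """Prepend the modality tokens to the embedded text prompt."""
    return soft_tokens + text_embeddings


spirogram_features = [0.5] * 16  # hypothetical output of a spirogram classifier
adapter = LinearAdapter(d_in=16, d_model=32, num_tokens=2)
text_embeddings = [[0.0] * 32 for _ in range(5)]  # stand-in for 5 embedded prompt words
llm_input = build_llm_input(adapter(spirogram_features), text_embeddings)
print(len(llm_input), len(llm_input[0]))  # 7 32
```

From the LLM's side, the two spirogram-derived vectors have the same width as word embeddings. They can therefore sit in the input sequence as though they were extra words.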

The second paper, “ELIXR: Towards a general purpose X-ray artificial intelligence system through alignment of large language models and radiology vision encoders”, takes this same tack but applies it to full-scale image encoder models in radiology. The starting point is a foundation model for understanding chest X-rays, already shown to be a good basis for building a variety of classifiers in this modality. The paper describes training a lightweight medical information adapter that re-expresses the top-layer output of the foundation model as a series of tokens in the LLM’s input embedding space. Despite fine-tuning neither the visual encoder nor the language model, the resulting system displays capabilities it wasn’t trained for, including semantic search and visual question answering.
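One common way to build such an adapter is a small set of learned queries that cross-attend over the encoder's output, as in BLIP-2's Q-Former. The sketch below assumes that design purely for illustration. `QueryAdapter` and its parameters are hypothetical, and this is not presented as ELIXR's exact architecture. The sketch shows the property the paragraph relies on: whatever the number of image patches, the adapter emits a fixed-length series of vectors at the LLM's embedding width, and only the adapter's own parameters would be trained.

```python
import math
import random
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


class QueryAdapter:
    """Hypothetical adapter: learned query vectors attend over the image encoder's
    top-layer patch embeddings, and each pooled result is projected to the LLM
    embedding width. Key/value projections and multiple heads are omitted."""

    def __init__(self, d_enc: int, d_model: int, num_queries: int, seed: int = 0):
        rng = random.Random(seed)
        # Only these two parameter sets would be trained; the image encoder that
        # produces the patches and the LLM that consumes the tokens stay frozen.
        self.queries = [[rng.gauss(0.0, 0.02) for _ in range(d_enc)]
                        for _ in range(num_queries)]
        self.proj = [[rng.gauss(0.0, 0.02) for _ in range(d_enc)]
                     for _ in range(d_model)]

    def __call__(self, patches: List[Vector]) -> List[Vector]:
        scale = 1.0 / math.sqrt(len(patches[0]))
        tokens = []
        for q in self.queries:
            # Scaled dot-product attention weights of this query over all patches.
            weights = softmax([dot(q, p) * scale for p in patches])
            pooled = [sum(w * p[j] for w, p in zip(weights, patches))
                      for j in range(len(q))]
            tokens.append([dot(row, pooled) for row in self.proj])
        return tokens


rng = random.Random(1)
patches = [[rng.random() for _ in range(12)] for _ in range(16)]  # toy 4x4 patch grid
adapter = QueryAdapter(d_enc=12, d_model=6, num_queries=4)
tokens = adapter(patches)
print(len(tokens), len(tokens[0]))  # 4 6
```

The design choice that matters is the fixed query count. It decouples the encoder's output size from the number of tokens the LLM has to read.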

Our approach to grafting a model works by training a medical information adapter that maps the output of an existing or refined image encoder into an LLM-understandable form.

Model grafting has a number of advantages. It uses relatively modest computational resources to train the adapter layers, yet allows the LLM to build on existing highly optimized and validated models in each data domain. Splitting the problem into encoder, adapter, and LLM components can also make it easier to test and debug individual software components when developing and deploying such a system. The corresponding disadvantages are twofold. First, the communication between the specialist encoder and the LLM is no longer human readable, being a series of high-dimensional vectors. Second, the grafting procedure requires building a new adapter not just for every domain-specific encoder, but also for every revision of each of those encoders.

Generalist systems

The most radical approach to multimodal medical AI is to build one integrated, fully generalist system natively capable of absorbing information from all sources. Our third paper in this area, “Towards Generalist Biomedical AI”, does not use separate encoders and adapters for each data modality. Instead, it builds on PaLM-E, a recently published multimodal model that is itself a combination of a single LLM (PaLM) and a single vision encoder (ViT). In this setup, text and tabular data modalities are covered by the LLM text encoder, and all other data are treated as images and fed to the vision encoder.
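The split described above can be sketched as a simple routing rule. Text and tabular inputs go to the LLM side, with tabular rows flattened into text, and every other modality goes to the vision encoder. The modality labels, the `route` and `serialize_row` helpers, and the "column: value" serialization format are hypothetical. They are chosen for illustration and are not taken from the paper. The lab values are illustrative inputs only.

```python
from typing import Dict, Union

Cell = Union[str, int, float]
TEXT_MODALITIES = {"text", "tabular"}  # hypothetical labels handled by the LLM text side


def route(modality: str) -> str:
    """Text and tabular data go to the LLM; everything else is treated as an image."""
    return "llm_text" if modality in TEXT_MODALITIES else "vision_encoder"


def serialize_row(row: Dict[str, Cell]) -> str:
    """Flatten one table row into 'column: value' text the LLM can read."""
    return "; ".join(f"{name}: {value}" for name, value in row.items())


row = {"test": "hemoglobin", "value": 13.5, "unit": "g/dL"}  # illustrative values
print(route("tabular"), "|", serialize_row(row))
print(route("genomics"))
```

Everything that is not text therefore shares one encoder. This is the source of both the design's simplicity and the loss of per-domain specialization discussed below.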

Med-PaLM M is a large multimodal generative model that flexibly encodes and interprets biomedical data, including clinical language, imaging, and genomics, with the same model weights.

We specialize PaLM-E to the medical domain by fine-tuning the complete set of model parameters on the medical datasets described in the paper. The resulting generalist medical AI system is a multimodal version of Med-PaLM that we call Med-PaLM M. Its flexible multimodal sequence-to-sequence architecture allows us to interleave various types of multimodal biomedical information in a single interaction. To the best of our knowledge, it is the first demonstration of a single unified model that can interpret multimodal biomedical data and handle a diverse range of tasks using the same set of model weights across all tasks (detailed evaluations are in the paper).
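One way to picture interleaving is to flatten a mixed prompt into a single embedding sequence that one set of weights then processes. The sketch below is a toy built on several assumptions. `Text`, `Image`, `embed_text`, `encode_image`, and `build_sequence` are hypothetical, and the embeddings are placeholders rather than real model outputs. Four vectors per image is an arbitrary choice, not PaLM-E's or Med-PaLM M's actual configuration.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

Vector = List[float]


@dataclass
class Text:
    content: str


@dataclass
class Image:
    name: str  # placeholder for pixel data


Segment = Union[Text, Image]


def embed_text(text: str, d_model: int) -> List[Vector]:
    # Toy stand-in for the LLM's token embeddings: one vector per whitespace token.
    return [[float(len(tok))] * d_model for tok in text.split()]


def encode_image(image: Image, d_model: int, num_tokens: int = 4) -> List[Vector]:
    # Toy stand-in for the shared vision encoder: a fixed number of vectors per image.
    return [[0.0] * d_model for _ in range(num_tokens)]


def build_sequence(segments: List[Segment],
                   d_model: int = 8) -> Tuple[List[Vector], List[str]]:
    """Flatten interleaved text and images into one embedding sequence, in order.
    Returns the embeddings plus a parallel list recording each vector's source."""
    embeddings: List[Vector] = []
    sources: List[str] = []
    for seg in segments:
        if isinstance(seg, Text):
            vecs = embed_text(seg.content, d_model)
            label = "text"
        else:
            vecs = encode_image(seg, d_model)
            label = f"image:{seg.name}"
        embeddings.extend(vecs)
        sources.extend([label] * len(vecs))
    return embeddings, sources


prompt = [Text("Compare"), Image("xray_2021"), Text("with"), Image("xray_2023"),
          Text("and describe any change.")]
embeddings, sources = build_sequence(prompt)
print(len(embeddings))  # 14
```

Because every segment ends up in the same sequence, a single model can attend across the text and both images at once. No per-modality adapter or API boundary sits in between.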

This generalist-system approach to multimodality is both the most ambitious and the most elegant of the approaches we describe. In principle, this direct approach maximizes flexibility and information transfer between modalities. With no APIs to keep compatible and no proliferation of adapter layers, the generalist approach has arguably the simplest design. But that same elegance is also the source of some of its disadvantages. Computational costs are often higher, and with a single vision encoder serving a wide range of modalities, domain specialization or system debuggability could suffer.

The reality of multimodal medical AI

To make the most of AI in medicine, we’ll need to combine the strength of expert systems trained with predictive AI with the flexibility made possible by generative AI. Which approach (or combination of approaches) will be most useful in the field depends on a multitude of as-yet unassessed factors. Is the flexibility and simplicity of a generalist model more valuable than the modularity of model grafting or tool use? Which approach gives the highest-quality results for a specific real-world use case? Is the preferred approach different for supporting medical research or medical education vs. augmenting medical practice? Answering these questions will require ongoing rigorous empirical research and continued direct collaboration with healthcare providers, medical institutions, government entities, and healthcare industry partners broadly. We look forward to finding the answers together.