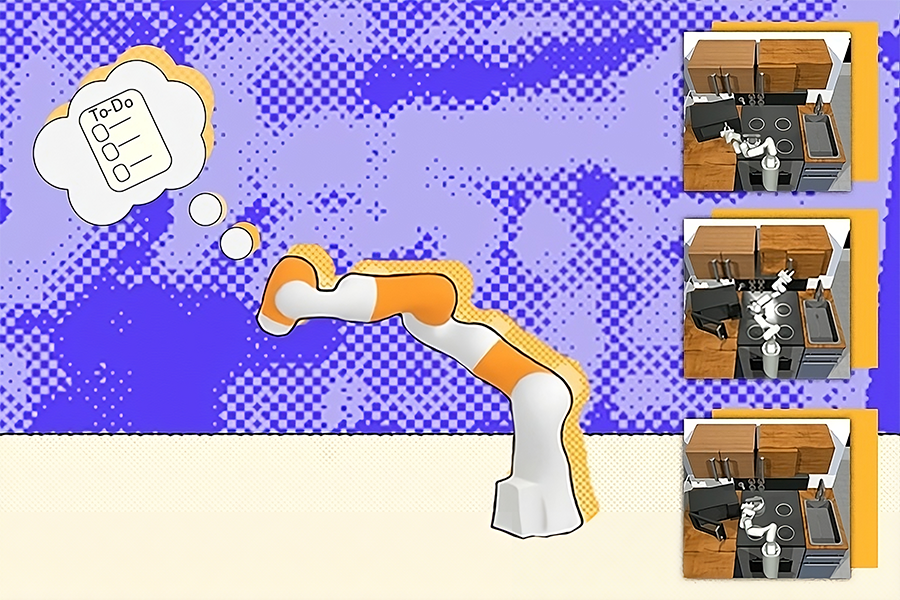

Your daily to-do list is likely pretty straightforward: wash the dishes, buy groceries, and other minutiae. It’s unlikely you wrote out “pick up the first dirty dish,” or “wash that plate with a sponge,” because each of these miniature steps within the chore feels intuitive. While we can routinely complete each step without much thought, a robot requires a complex plan that involves more detailed outlines.

MIT’s Improbable AI Lab, a group within the Computer Science and Artificial Intelligence Laboratory (CSAIL), has offered these machines a helping hand with a new multimodal framework: Compositional Foundation Models for Hierarchical Planning (HiP), which develops detailed, feasible plans with the expertise of three different foundation models. Like OpenAI’s GPT-4, the foundation model that ChatGPT and Bing Chat were built upon, these foundation models are trained on massive quantities of data for applications like generating images, translating text, and robotics.

Unlike RT2 and other multimodal models that are trained on paired vision, language, and action data, HiP uses three different foundation models, each trained on a different data modality. Each foundation model captures a different part of the decision-making process, and the models then work together when it’s time to make decisions. HiP removes the need for access to paired vision, language, and action data, which is difficult to obtain. HiP also makes the reasoning process more transparent.

What’s considered a daily chore for a human can be a robot’s “long-horizon goal,” an overarching objective that involves completing many smaller steps first and that requires sufficient data to plan, understand, and execute. While computer vision researchers have attempted to build monolithic foundation models for this problem, pairing language, visual, and action data is expensive. Instead, HiP represents a different, multimodal recipe: a trio that cheaply incorporates linguistic, physical, and environmental intelligence into a robot.

“Foundation models do not have to be monolithic,” says NVIDIA AI researcher Jim Fan, who was not involved in the paper. “This work decomposes the complex task of embodied agent planning into three constituent models: a language reasoner, a visual world model, and an action planner. It makes a difficult decision-making problem more tractable and transparent.”

The team believes that their system could help these machines accomplish household chores, such as putting away a book or placing a bowl in the dishwasher. Additionally, HiP could assist with multistep construction and manufacturing tasks, like stacking and placing different materials in specific sequences.

Evaluating HiP

The CSAIL team tested HiP on three manipulation tasks, where it outperformed comparable frameworks. The system reasoned by developing intelligent plans that adapt to new information.

First, the researchers asked it to stack different-colored blocks on each other and then place others nearby. The catch: Some of the correct colors weren’t present, so the robot had to place white blocks in a color bowl to paint them. HiP often adjusted to these changes accurately, especially compared to state-of-the-art task planning systems like Transformer BC and Action Diffuser, by adjusting its plans to stack and place each block as needed.

Another test: arranging objects such as candy and a hammer in a brown box while ignoring other items. Some of the objects it needed to move were dirty, so HiP adjusted its plans to place them in a cleaning box, and then into the brown container. In a third demonstration, the bot was able to ignore unnecessary objects to complete kitchen sub-goals such as opening a microwave, clearing a kettle out of the way, and turning on a light. Some of the prompted steps had already been completed, so the robot adapted by skipping those directions.

A three-pronged hierarchy

HiP’s three-pronged planning process operates as a hierarchy, with the ability to pre-train each of its components on different sets of data, including information outside of robotics. At the bottom of that order is a large language model (LLM), which starts to ideate by capturing all the symbolic information needed and developing an abstract task plan. Applying the common sense knowledge it finds on the internet, the model breaks its objective into sub-goals. For example, “making a cup of tea” turns into “filling a pot with water,” “boiling the pot,” and the subsequent actions required.

“All we want to do is take existing pre-trained models and have them successfully interface with each other,” says Anurag Ajay, a PhD student in the MIT Department of Electrical Engineering and Computer Science (EECS) and a CSAIL affiliate. “Instead of pushing for one model to do everything, we combine multiple ones that leverage different modalities of internet data. When used in tandem, they help with robotic decision-making and can potentially aid with tasks in homes, factories, and construction sites.”

These models also need some form of “eyes” to understand the environment they’re operating in and correctly execute each sub-goal. To augment the initial planning completed by the LLM, the team used a large video diffusion model, which collects geometric and physical information about the world from footage on the internet. In turn, the video model generates an observation trajectory plan, refining the LLM’s outline to incorporate new physical knowledge.

This process, known as iterative refinement, allows HiP to reason about its ideas, taking in feedback at each stage to generate a more practical outline. The flow of feedback is similar to writing an article, where an author may send their draft to an editor, and with those revisions incorporated, the publisher reviews for any last changes and finalizes.
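The refinement loop described above can be pictured with a minimal Python sketch. It is an illustration under stated assumptions, not the team’s implementation: `propose`, `feedback`, `toy_propose`, and `toy_feedback` are hypothetical names, and the word-overlap score is a stand-in for whatever signal a downstream model would really provide.

```python
from typing import Callable, List, Sequence


def plan_with_feedback(
    propose: Callable[[List[str]], Sequence[str]],
    feedback: Callable[[str], float],
    max_steps: int,
) -> List[str]:
    """Build a plan step by step, keeping the candidate the feedback rates highest."""
    plan: List[str] = []
    for _ in range(max_steps):
        candidates = propose(plan)  # higher level suggests possible next steps
        if not candidates:
            break  # nothing left to propose: the plan is complete
        plan.append(max(candidates, key=feedback))  # lower level picks the most feasible
    return plan


# Hypothetical stand-ins for the two levels (assumptions for illustration only).
OPTIONS = [
    ["fill the pot with water", "paint the wall"],
    ["boil the pot", "open the window"],
]
VISIBLE_OBJECTS = {"pot", "sink", "stove"}


def toy_propose(plan_so_far: List[str]) -> Sequence[str]:
    """Return the candidate sub-goals for the next step, or an empty list when done."""
    step = len(plan_so_far)
    return OPTIONS[step] if step < len(OPTIONS) else []


def toy_feedback(subgoal: str) -> float:
    """Score a sub-goal by how many visible objects it mentions."""
    return float(len(set(subgoal.split()) & VISIBLE_OBJECTS))


refined_plan = plan_with_feedback(toy_propose, toy_feedback, max_steps=5)
print(refined_plan)
```

In this toy setup, sub-goals that mention objects the robot can see score higher, so the infeasible candidates are dropped at each step.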

In this case, the top of the hierarchy is an egocentric action model, which uses a sequence of first-person images to infer which actions should take place based on the robot’s surroundings. During this stage, the observation plan from the video model is mapped over the space visible to the robot, helping the machine decide how to execute each task within the long-horizon goal. If a robot uses HiP to make tea, this means it will have mapped out exactly where the pot, sink, and other key visual elements are, and begin completing each sub-goal.
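The three levels can be sketched end to end as a small Python pipeline. Everything here is a hypothetical illustration: the class `HierarchicalPlanner`, its field names, and the toy stand-in functions are assumptions, strings stand in for image frames, and the action model is assumed to map a pair of consecutive frames to one action, which the article does not specify.

```python
from dataclasses import dataclass
from typing import Callable, List

Observation = str  # placeholder for an image frame
Action = str       # placeholder for a robot command


@dataclass
class HierarchicalPlanner:
    """Chains three separately built models: language, video, and action."""
    task_model: Callable[[str], List[str]]                        # goal -> sub-goals
    video_model: Callable[[str, Observation], List[Observation]]  # sub-goal, frame -> future frames
    action_model: Callable[[Observation, Observation], Action]    # frame pair -> action

    def plan(self, goal: str, start: Observation) -> List[Action]:
        actions: List[Action] = []
        current = start
        for subgoal in self.task_model(goal):
            # The video level imagines what completing the sub-goal should look like.
            for next_frame in self.video_model(subgoal, current):
                # The action level infers how to get from one frame to the next.
                actions.append(self.action_model(current, next_frame))
                current = next_frame
        return actions


# Toy stand-ins so the sketch runs; real models would replace these.
def toy_task_model(goal: str) -> List[str]:
    return ["fill pot", "boil pot"] if goal == "make tea" else [goal]


def toy_video_model(subgoal: str, current: Observation) -> List[Observation]:
    return [f"{subgoal}: frame {i}" for i in (1, 2)]


def toy_action_model(before: Observation, after: Observation) -> Action:
    return f"move from [{before}] to [{after}]"


planner = HierarchicalPlanner(toy_task_model, toy_video_model, toy_action_model)
tea_actions = planner.plan("make tea", "start")
for action in tea_actions:
    print(action)
```

Because each level is just a callable with a narrow interface, any one of them can be swapped for a different pre-trained model without touching the other two, which mirrors the compositional idea the researchers describe.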

Still, the multimodal work is limited by the lack of high-quality video foundation models. Once available, they could interface with HiP’s small-scale video models to further enhance visual sequence prediction and robot action generation. A higher-quality version would also reduce the current data requirements of the video models.

That being said, the CSAIL team’s approach only used a tiny bit of data overall. Moreover, HiP was cheap to train and demonstrated the potential of using readily available foundation models to complete long-horizon tasks. “What Anurag has demonstrated is proof-of-concept of how we can take models trained on separate tasks and data modalities and combine them into models for robotic planning. In the future, HiP could be augmented with pre-trained models that can process touch and sound to make better plans,” says senior author Pulkit Agrawal, MIT assistant professor in EECS and director of the Improbable AI Lab. The group is also considering applying HiP to solving real-world long-horizon tasks in robotics.

Ajay and Agrawal are lead authors on a paper describing the work. They are joined by MIT professors and CSAIL principal investigators Tommi Jaakkola, Joshua Tenenbaum, and Leslie Pack Kaelbling; CSAIL research affiliate and MIT-IBM AI Lab research manager Akash Srivastava; graduate students Seungwook Han and Yilun Du ’19; former postdoc Abhishek Gupta, who is now an assistant professor at the University of Washington; and former graduate student Shuang Li PhD ’23.

The team’s work was supported, in part, by the National Science Foundation, the U.S. Defense Advanced Research Projects Agency, the U.S. Army Research Office, the U.S. Office of Naval Research Multidisciplinary University Research Initiatives, and the MIT-IBM Watson AI Lab. Their findings were presented at the 2023 Conference on Neural Information Processing Systems (NeurIPS).