Modern neural networks have achieved impressive performance across a wide range of applications, such as language, mathematical reasoning, and vision. However, these networks often use large architectures that require substantial computational resources. This can make it impractical to serve such models to users, especially in resource-constrained environments like wearables and smartphones. A widely used approach to mitigate the inference costs of pre-trained networks is to prune them by removing some of their weights in a way that doesn't significantly affect utility. In standard neural networks, each weight defines a connection between two neurons. So after weights are pruned, the input propagates through a smaller set of connections and thus requires fewer computational resources.
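To make the last point concrete, here is a toy sketch (not from the post; all function names are hypothetical). A dense layer performs one multiply-add per weight whether or not that weight is zero, while a layer that stores only the surviving connections skips the pruned ones and does proportionally less work.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Connections = List[List[Tuple[int, float]]]


def dense_forward(weights: Matrix, x: List[float]) -> Tuple[List[float], int]:
    """Dense layer: one multiply-add per weight, even when the weight is zero."""
    out = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return out, sum(len(row) for row in weights)


def to_connections(weights: Matrix) -> Connections:
    """Store only the surviving (nonzero) connections of each output neuron."""
    return [[(j, w) for j, w in enumerate(row) if w != 0.0] for row in weights]


def sparse_forward(conns: Connections, x: List[float]) -> Tuple[List[float], int]:
    """Pruned layer: the input propagates only through the stored connections."""
    out = [sum(w * x[j] for j, w in row) for row in conns]
    return out, sum(len(row) for row in conns)


pruned = [[0.5, 0.0, -1.0], [0.0, 2.0, 0.0]]  # 3 of 6 weights removed
x = [1.0, 2.0, 3.0]
print(dense_forward(pruned, x))                   # ([-2.5, 4.0], 6)
print(sparse_forward(to_connections(pruned), x))  # ([-2.5, 4.0], 3)
```

Both layers produce the same outputs, but the sparse version performs only as many multiply-adds as there are remaining connections.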

Figure: Original network vs. a pruned network.

Pruning methods can be applied at different stages of the network's training process: after, during, or before training (i.e., immediately after weight initialization). In this post, we focus on the post-training setting: given a pre-trained network, how can we determine which weights should be pruned? One popular method is magnitude pruning, which removes the weights with the smallest magnitude. While efficient, this method doesn't directly consider the effect of removing weights on the network's performance. Another popular paradigm is optimization-based pruning, which removes weights based on how much their removal impacts the loss function. Although conceptually appealing, most existing optimization-based approaches seem to face a serious tradeoff between performance and computational requirements. Methods that make crude approximations (e.g., assuming a diagonal Hessian matrix) scale well but have relatively low performance. On the other hand, methods that make fewer approximations tend to perform better but appear to be much less scalable.
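Magnitude pruning is simple enough to state in a few lines. The sketch below (function name hypothetical) keeps the k weights with the largest absolute value and zeroes the rest; note that it never consults the loss, which is exactly the limitation described above.

```python
from typing import List


def magnitude_prune(weights: List[float], k: int) -> List[float]:
    """Keep the k largest-magnitude weights and set all others to zero.

    Ties are broken by position (the earlier index wins), an arbitrary choice.
    """
    if not 0 <= k <= len(weights):
        raise ValueError("k must be between 0 and len(weights)")
    # Indices sorted by decreasing |w|; sorted() is stable, so ties keep index order.
    order = sorted(range(len(weights)), key=lambda i: -abs(weights[i]))
    keep = set(order[:k])
    return [w if i in keep else 0.0 for i, w in enumerate(weights)]


print(magnitude_prune([0.3, -2.0, 0.05, 1.1, -0.4], k=2))
# [0.0, -2.0, 0.0, 1.1, 0.0]
```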

In "Fast as CHITA: Neural Network Pruning with Combinatorial Optimization", presented at ICML 2023, we describe how we developed an optimization-based approach for pruning pre-trained neural networks at scale. CHITA (which stands for "Combinatorial Hessian-free Iterative Thresholding Algorithm") outperforms existing pruning methods in terms of the tradeoff between scalability and performance, and it does so by leveraging advances from several fields, including high-dimensional statistics, combinatorial optimization, and neural network pruning. For example, CHITA can be 20x to 1000x faster than state-of-the-art methods for pruning ResNet and improves accuracy by over 10% in many settings.

Overview of contributions

CHITA has two notable technical improvements over popular methods:

- Efficient use of second-order information: Pruning methods that use second-order information (i.e., relating to second derivatives) achieve the state of the art in many settings. In the literature, this information is typically used by computing the Hessian matrix or its inverse, an operation that is very difficult to scale because the size of the Hessian is quadratic in the number of weights. Through careful reformulation, CHITA uses second-order information without having to compute or store the Hessian matrix explicitly, which makes it far more scalable.

- Combinatorial optimization: Popular optimization-based methods use a simple optimization technique that prunes weights in isolation, i.e., when deciding whether to prune a certain weight, they don't take into account whether other weights have been pruned. This can lead to pruning important weights, because weights deemed unimportant in isolation may become important once other weights are pruned. CHITA avoids this issue by using a more advanced, combinatorial optimization algorithm that takes into account how pruning one weight affects the others.
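A small numerical example shows why isolated scores can mislead. Assume a local quadratic model of the loss around the pre-trained weights w̄ with Hessian H, assume w̄ is a local minimum (so the gradient term vanishes), and keep the surviving weights fixed at their pre-trained values. Zeroing a set S of weights then increases the loss by ½ w̄_Sᵀ H_SS w̄_S, whereas isolated, OBD-style scores add up ½ H_ii w̄_i² and ignore the off-diagonal entries of H. The helper names and numbers below are illustrative only.

```python
from typing import List, Sequence


def joint_pruning_cost(w_bar: Sequence[float], H: List[List[float]], S: Sequence[int]) -> float:
    """Quadratic-model loss increase from zeroing every weight in S at once:
    0.5 * w_S^T H_SS w_S (assumes zero gradient and no re-fitting of kept weights)."""
    return 0.5 * sum(w_bar[i] * H[i][j] * w_bar[j] for i in S for j in S)


def isolated_pruning_cost(w_bar: Sequence[float], H: List[List[float]], S: Sequence[int]) -> float:
    """Sum of per-weight scores 0.5 * H_ii * w_i^2, each computed as if no other
    weight were pruned (the off-diagonal Hessian entries are ignored)."""
    return sum(0.5 * H[i][i] * w_bar[i] ** 2 for i in S)


# Two interacting weights: the Hessian has a nonzero off-diagonal entry.
H = [[2.0, 1.0], [1.0, 2.0]]
w_bar = [1.0, -1.0]
print(isolated_pruning_cost(w_bar, H, [0, 1]))  # 2.0
print(joint_pruning_cost(w_bar, H, [0, 1]))     # 1.0
```

Here removing both weights together costs half of what the isolated scores predict. In general the discrepancy can go either way, which is why a method that reasons about pruning decisions jointly can choose better subsets.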

In the sections below, we discuss CHITA's pruning formulation and algorithms.

A computation-friendly pruning formulation

There are many possible pruning candidates, each obtained by retaining only a subset of the weights of the original network. Let k be a user-specified parameter that denotes the number of weights to retain. Pruning can be naturally formulated as a best-subset selection (BSS) problem: among all possible pruning candidates (i.e., subsets of weights) with only k weights retained, the candidate with the smallest loss is selected.
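For a handful of weights, BSS can be solved by brute force, which makes the definition concrete. The sketch below (helper and loss names are hypothetical) enumerates every way to retain k weights, zeroes the rest, and returns the candidate with the smallest loss. The number of candidates grows as C(p, k), which is why this is hopeless at neural-network scale.

```python
from itertools import combinations
from typing import Callable, List, Optional, Sequence, Tuple


def best_subset(
    weights: Sequence[float],
    k: int,
    loss: Callable[[List[float]], float],
) -> Tuple[Tuple[int, ...], float]:
    """Exhaustive best-subset selection: try every way of retaining k weights
    (zeroing the others) and return the retained indices with the smallest loss."""
    if not 0 <= k <= len(weights):
        raise ValueError("k must be between 0 and len(weights)")
    best_idx: Optional[Tuple[int, ...]] = None
    best_loss = float("inf")
    for kept in combinations(range(len(weights)), k):
        kept_set = set(kept)
        candidate = [w if i in kept_set else 0.0 for i, w in enumerate(weights)]
        value = loss(candidate)
        if value < best_loss:  # strict: the first candidate found wins ties
            best_idx, best_loss = kept, value
    assert best_idx is not None
    return best_idx, best_loss


def toy_loss(w: List[float]) -> float:
    """Hand-made loss in which weights 0 and 1 cancel each other out."""
    return (w[0] + w[1]) ** 2 + 9.0 * (w[2] - 1.0) ** 2


print(best_subset([2.0, -2.0, 1.0], k=2, loss=toy_loss))  # ((0, 2), 4.0)
```

In this toy case, magnitude pruning would keep indices 0 and 1 (the two largest weights) and incur a loss of 9.0, while BSS finds a candidate with loss 4.0.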

Figure: Pruning as a BSS problem. Among all possible pruning candidates with the same total number of weights, the best candidate is defined as the one with the smallest loss. This illustration shows four candidates, but the number of candidates is generally much larger.

Solving the pruning BSS problem on the original loss function is generally computationally intractable. Thus, similar to previous work such as OBD and OBS, we approximate the loss with a quadratic function using a second-order Taylor series, where the Hessian is estimated with the empirical Fisher information matrix. While gradients can typically be computed efficiently, computing and storing the Hessian matrix is prohibitively expensive due to its sheer size. In the literature, it is common to deal with this challenge by making restrictive assumptions on the Hessian (e.g., that it is diagonal) and also on the algorithm (e.g., pruning weights in isolation).
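Written out in our own notation (which may differ from the paper's), with w̄ the pre-trained weights, ℓ_i the loss on the i-th of n samples, and p the number of weights, the quadratic model and the resulting BSS problem read as follows. The empirical Fisher replaces the Hessian, and the gradient is estimated from the same samples. Some methods, e.g. OBS, additionally drop the gradient term by assuming w̄ is a local minimum, and diagonal methods keep only diag(H).

```latex
\begin{aligned}
Q(w) &= \mathcal{L}(\bar w) + \nabla\mathcal{L}(\bar w)^\top (w - \bar w)
        + \tfrac{1}{2}\,(w - \bar w)^\top H\,(w - \bar w), \\
H &\approx \frac{1}{n}\sum_{i=1}^{n} \nabla\ell_i(\bar w)\,\nabla\ell_i(\bar w)^\top,
\qquad
\nabla\mathcal{L}(\bar w) \approx \frac{1}{n}\sum_{i=1}^{n} \nabla\ell_i(\bar w), \\
&\min_{w \in \mathbb{R}^p} \; Q(w) \quad \text{subject to} \quad \|w\|_0 \le k .
\end{aligned}
```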

CHITA uses an efficient reformulation of the pruning problem (BSS with the quadratic loss) that avoids explicitly computing the Hessian matrix while still using all the information in this matrix. This is made possible by exploiting the low-rank structure of the empirical Fisher information matrix. The reformulation can be viewed as a sparse linear regression problem, where each regression coefficient corresponds to a certain weight in the neural network. After obtaining a solution to this regression problem, coefficients set to zero correspond to weights that should be pruned. Our regression data matrix is (n x p), where n is the batch (sub-sample) size and p is the number of weights in the original network. Typically n << p, so storing and working with this data matrix is much more scalable than common pruning approaches that operate on the (p x p) Hessian.
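One way to see why a low-rank Fisher turns the quadratic model into least squares is the following derivation (our own, under the notation of a quadratic model around the pre-trained weights w̄; the paper's exact scaling may differ). Stack the n per-sample gradients as the rows of G (n x p) and set A = G/√n, so the empirical Fisher is AᵀA and the mean gradient is g = Aᵀ𝟙/√n. Writing d = w − w̄ and completing the square gives gᵀd + ½‖Ad‖² = ½‖y − Aw‖² − ½ with y = Aw̄ − 𝟙/√n. The sketch checks this identity numerically; only the comparison function ever builds the p x p matrix.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(M: Matrix, v: Vector) -> Vector:
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def quadratic_model_explicit(G: Matrix, w_bar: Vector, w: Vector) -> float:
    """g^T d + 0.5 d^T H d with H = G^T G / n built explicitly (p x p memory).
    The constant L(w_bar) is omitted since it does not affect which weights are pruned."""
    n, p = len(G), len(w_bar)
    H = [[sum(G[s][i] * G[s][j] for s in range(n)) / n for j in range(p)] for i in range(p)]
    g = [sum(G[s][i] for s in range(n)) / n for i in range(p)]
    d = [wi - bi for wi, bi in zip(w, w_bar)]
    return dot(g, d) + 0.5 * dot(d, matvec(H, d))


def regression_data(G: Matrix, w_bar: Vector) -> Tuple[Matrix, Vector]:
    """Build A = G / sqrt(n) (n x p) and y = A w_bar - 1/sqrt(n); H is never formed."""
    n = len(G)
    A = [[x / math.sqrt(n) for x in row] for row in G]
    y = [a - 1.0 / math.sqrt(n) for a in matvec(A, w_bar)]
    return A, y


def quadratic_model_regression(A: Matrix, y: Vector, w: Vector) -> float:
    """The same quantity written as 0.5 * ||y - A w||^2 - 0.5."""
    r = [yi - ai for yi, ai in zip(y, matvec(A, w))]
    return 0.5 * dot(r, r) - 0.5


random.seed(0)
n, p = 3, 6  # n << p: a small batch and comparatively many weights
G = [[random.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
w_bar = [random.gauss(0.0, 1.0) for _ in range(p)]
A, y = regression_data(G, w_bar)
w = [random.gauss(0.0, 1.0) for _ in range(p)]
print(quadratic_model_explicit(G, w_bar, w), quadratic_model_regression(A, y, w))  # equal up to rounding
```

The regression form only needs the n x p matrix A. For illustration, with hypothetical sizes p = 10^7 and n = 10^3, that is 10^10 entries versus 10^14 for the explicit Hessian.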

Figure: CHITA reformulates the quadratic loss approximation, which requires an expensive Hessian matrix, as a linear regression (LR) problem. The LR's data matrix is linear in p, which makes the reformulation more scalable than the original quadratic approximation.

Scalable optimization algorithms

CHITA reduces pruning to a linear regression problem under the following sparsity constraint: at most k regression coefficients can be nonzero. To obtain a solution to this problem, we consider a modification of the well-known iterative hard thresholding (IHT) algorithm. IHT performs gradient descent where, after each update, the following post-processing step is carried out: all regression coefficients outside the Top-k (i.e., the k coefficients with the largest magnitude) are set to zero. IHT typically delivers a good solution to the problem, and it does so by iteratively exploring different pruning candidates and jointly optimizing over the weights.
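The plain, textbook form of IHT for min ½‖y − Xw‖² subject to at most k nonzeros is sketched below. It is not CHITA's modified version; the function names and the constant step size are our own illustrative choices. A constant step of at most 1/λ_max(XᵀX) is a safe choice, and ‖X‖_F² is a cheap upper bound on λ_max.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def top_k(v: Vector, k: int) -> Vector:
    """Hard thresholding: keep the k largest-magnitude entries, zero the rest."""
    keep = set(sorted(range(len(v)), key=lambda i: -abs(v[i]))[:k])
    return [x if i in keep else 0.0 for i, x in enumerate(v)]


def iht(X: Matrix, y: Vector, k: int, step: float, iters: int = 200) -> Vector:
    """Constant-step IHT for min 0.5 * ||y - X w||^2 s.t. at most k nonzeros in w."""
    p = len(X[0])
    w = [0.0] * p
    for _ in range(iters):
        residual = [yi - sum(a * b for a, b in zip(row, w)) for row, yi in zip(X, y)]
        # The gradient is -X^T residual, so a descent step adds step * X^T residual.
        neg_grad = [sum(X[r][j] * residual[r] for r in range(len(X))) for j in range(p)]
        w = top_k([wj + step * gj for wj, gj in zip(w, neg_grad)], k)
    return w


X = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
y = [3.0, 0.1, -2.0]
w = iht(X, y, k=2, step=1.0 / 3.0)  # 1/3 = 1/||X||_F^2 for the 3x3 identity
print([round(v, 6) for v in w])  # [3.0, 0.0, -2.0]
```

With an identity design, the small middle coefficient is thresholded away at every iteration, and the two surviving coefficients converge to their least-squares values.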

Due to the scale of the problem, standard IHT with a constant learning rate can suffer from very slow convergence. For faster convergence, we developed a new line-search method that exploits the problem structure to find a suitable learning rate, i.e., one that leads to a sufficiently large decrease in the loss. We also employed several computational schemes to improve CHITA's efficiency and the quality of the second-order approximation, leading to an improved version that we call CHITA++.
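The post does not spell out CHITA's structure-exploiting line search. As a generic stand-in, here is a standard backtracking line search for a single IHT step on ½‖y − Xw‖²: start from a large step, shrink it until the quadratic upper-bound (sufficient-decrease) test holds, and only then accept the thresholded update. All names and defaults are hypothetical. When the current iterate already has at most k nonzeros, an accepted step never increases the objective, because the hard-thresholded point is at least as close to the gradient step as the current iterate is.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def objective(X: Matrix, y: Vector, w: Vector) -> float:
    r = [yi - sum(a * b for a, b in zip(row, w)) for row, yi in zip(X, y)]
    return 0.5 * sum(ri * ri for ri in r)


def gradient(X: Matrix, y: Vector, w: Vector) -> Vector:
    r = [yi - sum(a * b for a, b in zip(row, w)) for row, yi in zip(X, y)]
    return [-sum(X[i][j] * r[i] for i in range(len(X))) for j in range(len(w))]


def top_k(v: Vector, k: int) -> Vector:
    keep = set(sorted(range(len(v)), key=lambda i: -abs(v[i]))[:k])
    return [x if i in keep else 0.0 for i, x in enumerate(v)]


def iht_step_backtracking(
    X: Matrix, y: Vector, w: Vector, k: int,
    step: float = 1.0, shrink: float = 0.5, max_tries: int = 50,
) -> Tuple[Vector, float]:
    """One IHT step with a backtracked step size. Accept the candidate once
    f(cand) <= f(w) + g^T (cand - w) + ||cand - w||^2 / (2 * step)."""
    f0, g = objective(X, y, w), gradient(X, y, w)
    for _ in range(max_tries):
        cand = top_k([wi - step * gi for wi, gi in zip(w, g)], k)
        diff = [c - wi for c, wi in zip(cand, w)]
        bound = f0 + sum(gi * di for gi, di in zip(g, diff)) + sum(d * d for d in diff) / (2.0 * step)
        if objective(X, y, cand) <= bound:
            return cand, step
        step *= shrink
    return w, step  # no acceptable step found; keep the current iterate


X = [[1.0, 2.0, 0.0], [0.0, 1.0, 1.0], [1.0, 0.0, 3.0]]
y = [1.0, 2.0, 3.0]
w = [0.0, 0.0, 0.0]
for _ in range(20):
    w, used_step = iht_step_backtracking(X, y, w, k=2)
print(objective(X, y, w), w)
```

A practical variant would warm-start each search from the previously accepted step instead of resetting to 1.0.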

Experiments

We compare CHITA's run time and accuracy with several state-of-the-art pruning methods on different architectures, including ResNet and MobileNet.

Run time: CHITA is much more scalable than comparable methods that perform joint optimization (as opposed to pruning weights in isolation). For example, CHITA's speed-up can exceed 1000x when pruning ResNet.

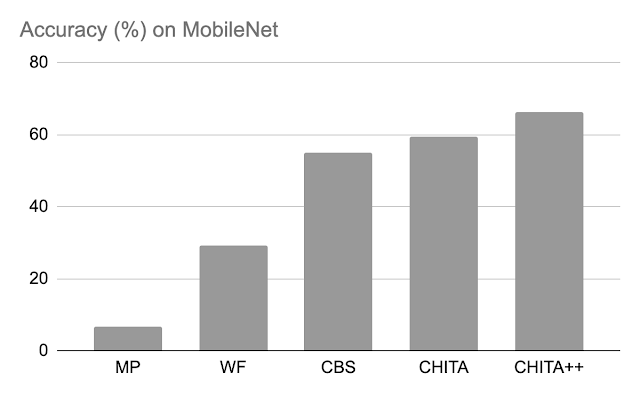

Post-pruning accuracy: Below, we compare the performance of CHITA and CHITA++ with magnitude pruning (MP), WoodFisher (WF), and Combinatorial Brain Surgeon (CBS) when pruning 70% of the model weights. Overall, CHITA and CHITA++ deliver clear improvements.

Figure: Post-pruning accuracy of various methods on ResNet20. Results are reported for pruning 70% of the model weights.

Figure: Post-pruning accuracy of various methods on MobileNet. Results are reported for pruning 70% of the model weights.

Next, we report results for pruning a larger network, ResNet50 (on this network, some of the methods listed in the ResNet20 figure couldn't scale). Here we compare with magnitude pruning and M-FAC. The figure below shows that CHITA achieves better test accuracy across a wide range of sparsity levels.

Figure: Test accuracy of pruned networks obtained using different methods.

Conclusion, limitations, and future work

We presented CHITA, an optimization-based approach for pruning pre-trained neural networks. CHITA offers scalability and competitive performance by efficiently using second-order information and drawing on ideas from combinatorial optimization and high-dimensional statistics.

CHITA is designed for unstructured pruning, in which any weight can be removed. In theory, unstructured pruning can significantly reduce computational requirements. However, realizing these reductions in practice requires special software (and possibly hardware) that supports sparse computations. In contrast, structured pruning, which removes whole structures like neurons, may offer improvements that are easier to attain on general-purpose software and hardware. It would be interesting to extend CHITA to structured pruning.
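The practical difference between the two styles shows up in the shape of the result. In the toy sketch below (hypothetical helpers operating on a single out x in weight matrix), unstructured pruning keeps the matrix shape and merely zeroes entries, so a speedup needs sparse-aware kernels. Structured pruning drops whole rows (output neurons) and leaves a smaller dense matrix that ordinary dense kernels already handle efficiently. Ranking neurons by row L2 norm is our own illustrative criterion, not something the post specifies.

```python
import math
from typing import List

Matrix = List[List[float]]


def unstructured_prune(W: Matrix, k: int) -> Matrix:
    """Keep the k largest-magnitude entries anywhere in W (ties broken arbitrarily).
    The shape is unchanged; pruned entries become zeros."""
    ranked = sorted(((abs(w), r, c) for r, row in enumerate(W) for c, w in enumerate(row)), reverse=True)
    keep = {(r, c) for _, r, c in ranked[:k]}
    return [[w if (r, c) in keep else 0.0 for c, w in enumerate(row)] for r, row in enumerate(W)]


def structured_prune_rows(W: Matrix, num_neurons: int) -> Matrix:
    """Keep the num_neurons rows (output neurons) with the largest L2 norm and
    drop the others entirely, giving a smaller dense matrix."""
    order = sorted(range(len(W)), key=lambda r: -math.sqrt(sum(w * w for w in W[r])))
    kept = sorted(order[:num_neurons])  # preserve the original row order
    return [list(W[r]) for r in kept]


W = [[0.1, -3.0, 0.2], [0.05, 0.0, 0.1], [2.0, 0.3, -1.0]]
print(unstructured_prune(W, 3))     # [[0.0, -3.0, 0.0], [0.0, 0.0, 0.0], [2.0, 0.0, -1.0]]
print(structured_prune_rows(W, 2))  # [[0.1, -3.0, 0.2], [2.0, 0.3, -1.0]]
```

In a full network, removing an output neuron would also remove the matching input column of the next layer's weight matrix; this single-matrix sketch leaves that step out.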

Acknowledgements

This work is part of a research collaboration between Google and MIT. Thanks to Rahul Mazumder, Natalia Ponomareva, Wenyu Chen, Xiang Meng, Zhe Zhao, and Sergei Vassilvitskii for their help in preparing this post and the paper. Thanks also to John Guilyard for creating the graphics in this post.