Primate Labs

Neural processing units (NPUs) have become commonplace in chips from Intel and AMD after several years of being something you’d find mostly in smartphones and tablets (and Macs). But as more companies push to do more generative AI processing, image editing, and chatbot-ing locally on-device instead of in the cloud, being able to measure NPU performance will become more important to people making purchasing decisions.

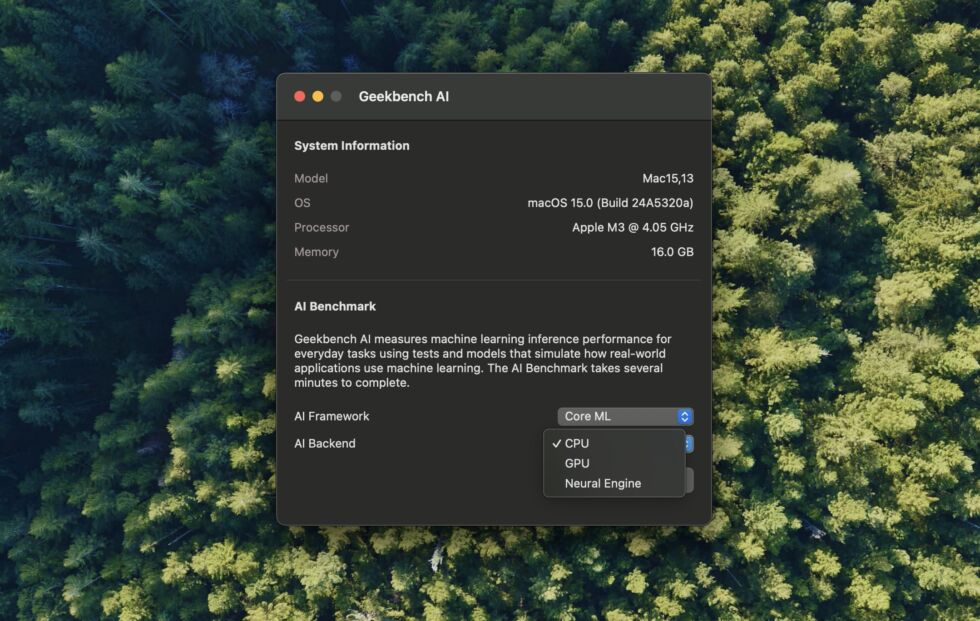

Enter Primate Labs, developers of Geekbench. The main Geekbench app is designed to test CPU performance as well as GPU compute performance, but for the past few years, the company has been experimenting with a side project called Geekbench ML (for “Machine Learning”) to test the inference performance of NPUs. Now, as Microsoft’s Copilot+ initiative gets off the ground and Intel, AMD, Qualcomm, and Apple all push to boost NPU performance, Primate Labs is bumping Geekbench ML to version 1.0 and renaming it “Geekbench AI,” a change that will presumably help it ride the wave of AI-related buzz.

“Just as CPU-bound workloads differ in how they can take advantage of multiple cores or threads for performance scaling (necessitating both single-core and multi-core metrics in most related benchmarks), AI workloads cover a range of precision levels, depending on the task needed and the hardware available,” wrote Primate Labs’ John Poole in a blog post about the update. “Geekbench AI presents its summary for a range of workload tests completed with single-precision data, half-precision data, and quantized data, covering a range used by developers in terms of both precision and purpose in AI systems.”

In addition to measuring speed, Geekbench AI also attempts to measure accuracy, which is important for machine-learning workloads that rely on producing consistent results (identifying and cataloging people and objects in a photo library, for example).

Andrew Cunningham

Geekbench AI supports several AI frameworks: OpenVINO for Windows and Linux, ONNX for Windows, Qualcomm’s QNN on Snapdragon-powered Arm PCs, Apple’s CoreML on macOS and iOS, and a number of vendor-specific frameworks on various Android devices. The app can run these workloads on the CPU, GPU, or NPU, at least when your device has a compatible NPU installed.

On Windows PCs, where NPU support and APIs like Microsoft’s DirectML are still works in progress, Geekbench AI supports Intel and Qualcomm’s NPUs but not AMD’s (yet).

“We’re hoping to add AMD NPU support in a future version once we have more clarity on how best to enable them from AMD,” Poole told Ars.

Geekbench AI is available for Windows, macOS, Linux, iOS/iPadOS, and Android. It’s free to use, though a Pro license gets you command-line tools, the ability to run the benchmark without uploading results to the Geekbench Browser, and a few other benefits. Though the app is hitting 1.0 today, the Primate Labs team expects to update the app frequently for new hardware, frameworks, and workloads as necessary.

“AI is nothing if not fast-changing,” Poole continued in the announcement post, “so anticipate new releases and updates as needs and AI features in the market change.”