Detection is a fundamental vision task that aims to localize and recognize objects in an image. However, the data collection process of manually annotating bounding boxes or instance masks is tedious and costly, which limits the modern detection vocabulary size to roughly 1,000 object classes. This is orders of magnitude smaller than the vocabulary people use to describe the visual world and leaves out many categories. Recent vision and language models (VLMs), such as CLIP, have demonstrated improved open-vocabulary visual recognition capabilities by learning from Internet-scale image-text pairs. These VLMs are applied to zero-shot classification using frozen model weights without the need for fine-tuning, which stands in stark contrast to the existing paradigms that retrain or fine-tune VLMs for open-vocabulary detection tasks.

Intuitively, to align the image content with the text description during training, VLMs may learn region-sensitive and discriminative features that are transferable to object detection. Surprisingly, features of a frozen VLM contain rich information that is both region sensitive for describing object shapes (second column below) and discriminative for region classification (third column below). In fact, feature grouping can nicely delineate object boundaries without any supervision. This motivates us to explore the use of frozen VLMs for open-vocabulary object detection, with the goal of expanding detection beyond the limited set of annotated categories.

We explore the potential of frozen vision and language features for open-vocabulary detection. The K-Means feature grouping reveals rich semantic and region-sensitive information where object boundaries are nicely delineated (column 2). The same frozen features can classify groundtruth (GT) regions well without fine-tuning (column 3).

In “F-VLM: Open-Vocabulary Object Detection upon Frozen Vision and Language Models”, presented at ICLR 2023, we introduce a simple and scalable open-vocabulary detection approach built upon frozen VLMs. F-VLM reduces the training complexity of an open-vocabulary detector to below that of a standard detector, obviating the need for knowledge distillation, detection-tailored pre-training, or weakly supervised learning. We demonstrate that by preserving the knowledge of pre-trained VLMs completely, F-VLM maintains a similar philosophy to ViTDet and decouples detector-specific learning from the more task-agnostic vision knowledge in the detector backbone. We are also releasing the F-VLM code along with a demo on our project page.

Learning upon frozen vision and language models

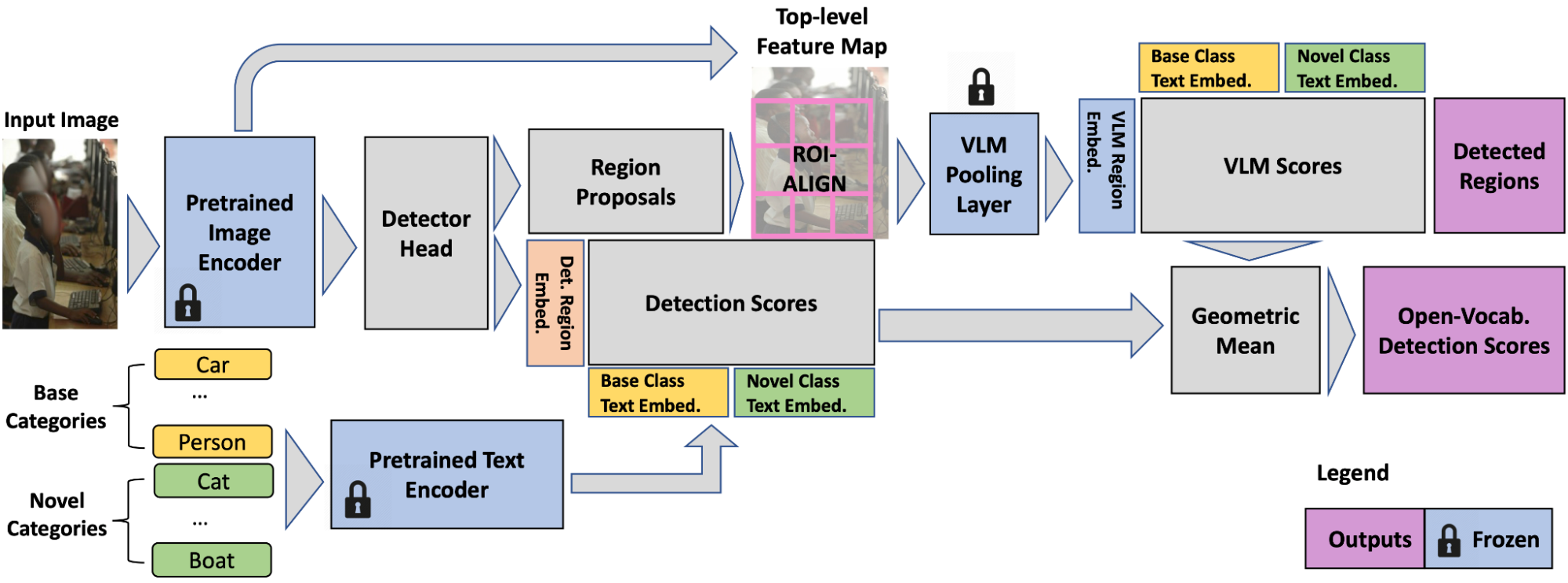

We want to retain the knowledge of pretrained VLMs as much as possible, in order to minimize the effort and cost needed to adapt them for open-vocabulary detection. We use a frozen VLM image encoder as the detector backbone and a text encoder for caching the detection text embeddings of the offline dataset vocabulary. We take this VLM backbone and attach a detector head, which predicts object regions for localization and outputs detection scores that indicate the probability of a detected box being of a certain category. The detection scores are the cosine similarity of region features (computed for the set of bounding boxes that the detector head outputs) and category text embeddings. The category text embeddings are obtained by feeding the category names through the text model of the pretrained VLM (which has both image and text models).
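As a rough illustration of the scoring step described above, the following NumPy sketch computes cosine similarities between region features and cached category text embeddings. The function names, the softmax, and the `temperature` value are assumptions made for this example; the text only states that the scores are cosine similarities.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors along `axis` to unit length."""
    norm = np.linalg.norm(x, axis=axis, keepdims=True)
    return x / np.maximum(norm, eps)

def detection_scores(region_features, text_embeddings, temperature=0.01):
    """Cosine-similarity scores between regions and category names.

    region_features: (num_regions, dim) features from the detector head.
    text_embeddings: (num_classes, dim) embeddings cached from the text encoder.
    temperature: hypothetical softmax temperature (an assumption of this sketch).
    """
    r = l2_normalize(region_features)
    t = l2_normalize(text_embeddings)
    cosine = r @ t.T                      # (num_regions, num_classes)
    logits = cosine / temperature
    logits -= logits.max(axis=-1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=-1, keepdims=True)
    return cosine, probs

# Toy usage: two regions whose features are scaled copies of class 2 and class 0.
rng = np.random.default_rng(0)
text = rng.normal(size=(3, 8))
regions = text[[2, 0]] * 5.0
cosine, probs = detection_scores(regions, text)
predicted = probs.argmax(axis=1)
```

Because the text embeddings depend only on the category names, swapping in a new vocabulary only requires recomputing `text_embeddings`; the region features are untouched.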

The VLM image encoder consists of two parts: 1) a feature extractor and 2) a feature pooling layer. We adopt the feature extractor for detector head training, which is the only step we train (on standard detection data). This allows us to use the frozen weights directly, inheriting rich semantic knowledge (e.g., long-tailed categories like martini, fedora hat, pennant) from the VLM backbone. The detection losses include box regression and classification losses.

At training time, F-VLM is simply a detector with the last classification layer replaced by base-category text embeddings.

Region-level open-vocabulary recognition

The ability to perform open-vocabulary recognition at region level (i.e., bounding box level as opposed to image level) is integral to F-VLM. Since the backbone features are frozen, they do not overfit to the training categories (e.g., donut, zebra) and can be directly cropped for region-level classification. F-VLM performs this open-vocabulary classification only at test time. To obtain the VLM features for a region, we apply the feature pooling layer on the cropped backbone output features. Because the pooling layer requires fixed-size inputs, e.g., 7×7 for the ResNet50 (R50) CLIP backbone, we crop and resize the region features with the ROI-Align layer (shown in the figure below). Unlike existing open-vocabulary detection approaches, we do not crop and resize the RGB image regions and cache their embeddings in a separate offline process, but train the detector head in one stage. This is simpler and makes more efficient use of disk storage space. In addition, we do not crop VLM region features during training because the backbone features are frozen.
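To make the crop-and-resize step concrete, here is a simplified NumPy stand-in for ROI-Align. It takes one bilinear sample at the center of each output bin, whereas real ROI-Align implementations typically average several samples per bin. The function name, the box format, and the pixel-center convention are assumptions of this sketch, and the VLM pooling layer that would consume the 7×7 output is not shown.

```python
import numpy as np

def crop_and_resize(features, box, output_size=7):
    """Bilinearly resample a box on a feature map to a fixed grid.

    features: (H, W, C) backbone output.
    box: (y0, x0, y1, x1) in feature-map coordinates, where the center of
         cell i lies at coordinate i + 0.5 (a convention chosen for this sketch).
    Returns an (output_size, output_size, C) array.
    """
    h, w, _ = features.shape
    y0, x0, y1, x1 = box
    # Coordinates of the output bin centers inside the box.
    ys = y0 + (np.arange(output_size) + 0.5) * (y1 - y0) / output_size
    xs = x0 + (np.arange(output_size) + 0.5) * (x1 - x0) / output_size
    # Convert to index space and keep samples inside the feature map.
    ys = np.clip(ys - 0.5, 0, h - 1)
    xs = np.clip(xs - 0.5, 0, w - 1)
    y_lo = np.floor(ys).astype(int)
    x_lo = np.floor(xs).astype(int)
    y_hi = np.minimum(y_lo + 1, h - 1)
    x_hi = np.minimum(x_lo + 1, w - 1)
    wy = (ys - y_lo)[:, None, None]   # vertical interpolation weights
    wx = (xs - x_lo)[None, :, None]   # horizontal interpolation weights
    top = features[y_lo][:, x_lo] * (1 - wx) + features[y_lo][:, x_hi] * wx
    bottom = features[y_hi][:, x_lo] * (1 - wx) + features[y_hi][:, x_hi] * wx
    return top * (1 - wy) + bottom * wy

# Toy usage: a feature map whose single channel equals the column index.
ramp = np.tile(np.arange(16.0)[None, :, None], (16, 1, 1))
region = crop_and_resize(ramp, box=(2, 2, 9, 9))
```

Whatever the box size, the output is always `output_size` × `output_size`, which is what lets a pooling layer with fixed-size inputs run on arbitrary regions.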

Despite never being trained on regions, the cropped region features maintain good open-vocabulary recognition capability. However, we observe that the cropped region features are not sensitive enough to the localization quality of the regions, i.e., a loosely localized box and a tightly localized box have similar features. This may be good for classification, but is problematic for detection because we need the detection scores to reflect localization quality as well. To remedy this, we apply the geometric mean to combine the VLM scores with the detection scores for each region and category. The VLM scores indicate the probability of a detection box being of a certain category according to the pretrained VLM. The detection scores indicate the class probability distribution of each box based on the similarity of region features and input text embeddings.
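The score combination can be sketched in a few lines of NumPy. The text above specifies a geometric mean; the `vlm_weight` parameter below is a hypothetical generalization added for illustration, and its default of 0.5 reduces to the plain geometric mean.

```python
import numpy as np

def combine_scores(detection_scores, vlm_scores, vlm_weight=0.5):
    """Weighted geometric mean of detector and VLM scores.

    detection_scores, vlm_scores: arrays of per-region, per-category
    probabilities with the same shape.
    vlm_weight: hypothetical weight on the VLM score (0.5 = plain geometric mean).
    """
    detection_scores = np.asarray(detection_scores, dtype=float)
    vlm_scores = np.asarray(vlm_scores, dtype=float)
    return detection_scores ** (1.0 - vlm_weight) * vlm_scores ** vlm_weight

# Toy usage: a tight box and a loose box receive the same VLM score for a
# category, but the detector scores them differently.
det = np.array([0.9, 0.1])   # tight box, loose box
vlm = np.array([0.8, 0.8])   # VLM is insensitive to localization quality
final = combine_scores(det, vlm)
```

In this toy case the combined score ranks the tight box above the loose one even though the VLM alone cannot tell them apart, which is the behavior the paragraph above asks for.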

At test time, F-VLM uses the region proposals to crop out the top-level features of the VLM backbone and compute the VLM score per region. The trained detector head provides the detection boxes and masks, while the final detection scores are a combination of detection and VLM scores.

Evaluation

We apply F-VLM to the popular LVIS open-vocabulary detection benchmark. At the system level, the best F-VLM achieves 32.8 average precision (AP) on rare categories (APr), which outperforms the state-of-the-art by 6.5 mask APr and many other approaches based on knowledge distillation, pre-training, or joint training with weak supervision. F-VLM shows strong scaling with frozen model capacity, while the number of trainable parameters is fixed. Moreover, F-VLM generalizes and scales well in transfer detection tasks (e.g., the Objects365 and Ego4D datasets) by simply replacing the vocabularies without fine-tuning the model. We test the LVIS-trained models on the popular Objects365 dataset and demonstrate that the model can work very well without training on in-domain detection data.

F-VLM outperforms the state-of-the-art (SOTA) on the LVIS open-vocabulary detection benchmark and on transfer object detection. On the x-axis, we show the LVIS metric mask AP on rare categories (APr), and the Objects365 (O365) metric box AP on all categories. The sizes of the detector backbones are as follows: Small (R50), Base (R50x4), Large (R50x16), Huge (R50x64). The naming follows the CLIP convention.

We visualize F-VLM on open-vocabulary detection and transfer detection tasks (shown below). On LVIS and Objects365, F-VLM correctly detects both novel and common objects. A key benefit of open-vocabulary detection is the ability to test on out-of-distribution data with categories given by users on the fly. See the F-VLM paper for more visualizations on the LVIS, Objects365 and Ego4D datasets.

F-VLM open-vocabulary and transfer detections. Top: Open-vocabulary detection on LVIS. We only show the novel categories for clarity. Bottom: Transfer to the Objects365 dataset shows accurate detection of many categories. Novel categories detected: fedora, martini, pennant, football helmet (LVIS); slide (Objects365).

Training efficiency

We show in the table below that F-VLM can achieve top performance with far fewer computational resources. Compared to the state-of-the-art approach, F-VLM can achieve better performance with 226x fewer resources and 57x faster wall clock time. Apart from training resource savings, F-VLM has potential for substantial memory savings at training time by running the backbone in inference mode. The F-VLM system runs almost as fast as a standard detector at inference time, because the only addition is a single attention pooling layer on the detected region features.

| Method | APr | Training Epochs | Training Cost (per-core-hour) | Training Cost Savings |
| --- | --- | --- | --- | --- |
| SOTA | 26.3 | 460 | 8,000 | 1x |
| F-VLM | 32.8 | 118 | 565 | 14x |
| F-VLM | 31.0 | 14.7 | 71 | 113x |
| F-VLM | 27.7 | 7.4 | 35 | 226x |

We provide additional results using the shorter Detectron2 training recipes (12 and 36 epochs), and show similarly strong performance by using a frozen backbone. The default setting is marked in gray.

| Backbone | Large Scale Jitter | #Epochs | Batch Size | APr |
| --- | --- | --- | --- | --- |
| R50 | | 12 | 16 | 18.1 |
| R50 | | 36 | 64 | 18.5 |
| R50 | ✓ | 100 | 256 | 18.6 |
| R50x64 | | 12 | 16 | 31.9 |
| R50x64 | | 36 | 64 | 32.6 |
| R50x64 | ✓ | 100 | 256 | 32.8 |

Conclusion

We present F-VLM, a simple open-vocabulary detection method that harnesses the power of frozen pre-trained large vision-language models to detect novel objects. This is done without a need for knowledge distillation, detection-tailored pre-training, or weakly supervised learning. Our approach offers significant compute savings and obviates the need for image-level labels. F-VLM achieves the new state-of-the-art in open-vocabulary detection on the LVIS benchmark at system level, and shows very competitive transfer detection on other datasets. We hope this study can both facilitate further research in novel-object detection and help the community explore frozen VLMs for a wider range of vision tasks.

Acknowledgements

This work was conducted by Weicheng Kuo, Yin Cui, Xiuye Gu, AJ Piergiovanni, and Anelia Angelova. We would like to thank our colleagues at Google Research for their advice and helpful discussions.