MIT researchers have created a periodic table that shows how more than 20 classical machine-learning algorithms are connected. The new framework sheds light on how scientists could fuse strategies from different methods to improve existing AI models or come up with new ones.

For instance, the researchers used their framework to combine elements of two different algorithms to create a new image-classification algorithm that performed 8 percent better than current state-of-the-art approaches.

The periodic table stems from one key idea: All these algorithms learn a specific kind of relationship between data points. While each algorithm may accomplish that in a slightly different way, the core mathematics behind each approach is the same.

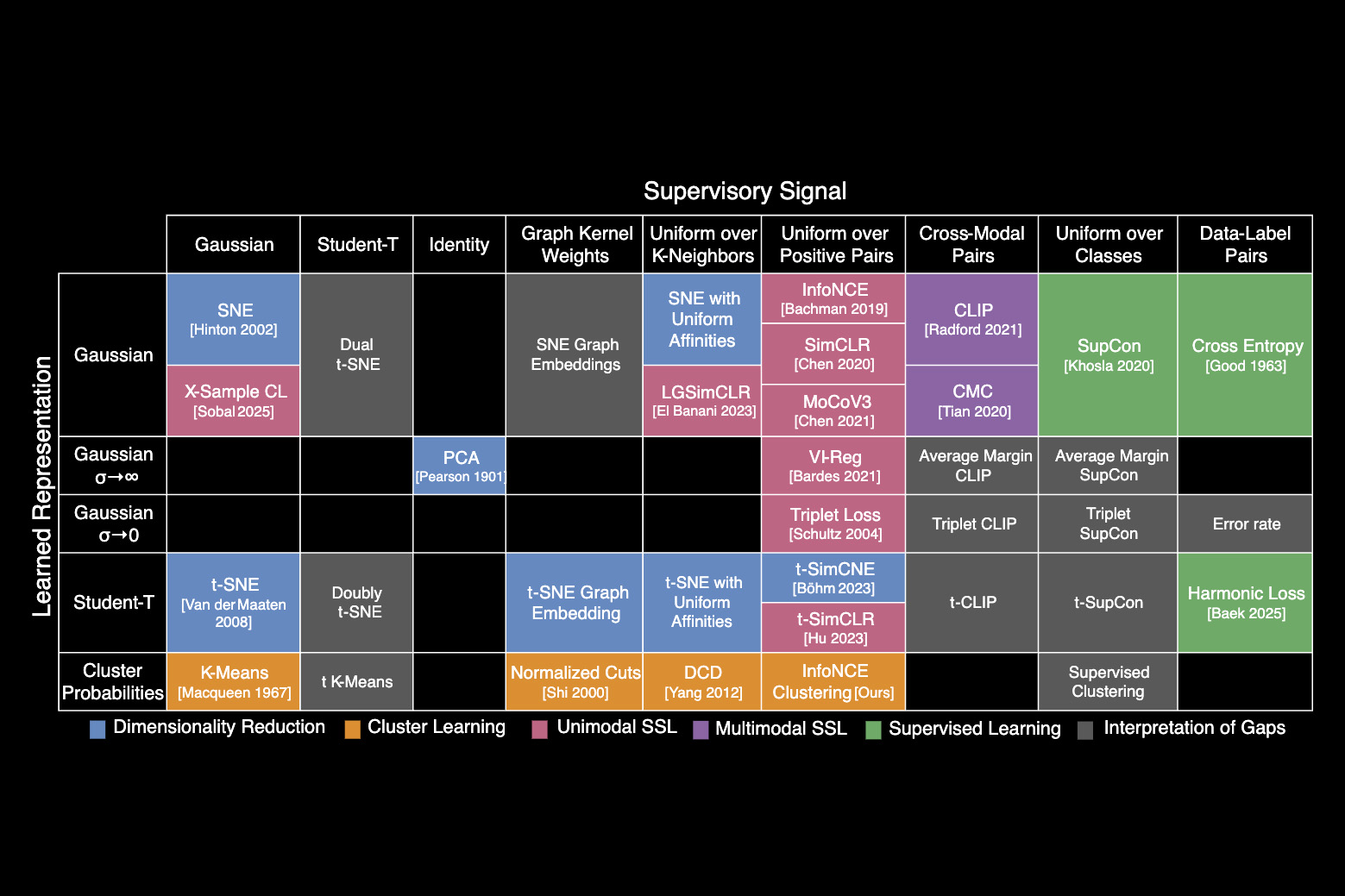

Building on these insights, the researchers identified a unifying equation that underlies many classical AI algorithms. They used that equation to reframe popular methods and arrange them into a table, categorizing each based on the approximate relationships it learns.

Just like the periodic table of chemical elements, which initially contained blank squares that were later filled in by scientists, the periodic table of machine learning also has empty spaces. These spaces predict where algorithms should exist but haven't been discovered yet.

The table gives researchers a toolkit to design new algorithms without the need to rediscover ideas from prior approaches, says Shaden Alshammari, an MIT graduate student and lead author of a paper on this new framework.

“It’s not just a metaphor,” adds Alshammari. “We’re starting to see machine learning as a system with structure that is a space we can explore rather than just guess our way through.”

She is joined on the paper by John Hershey, a researcher at Google AI Perception; Axel Feldmann, an MIT graduate student; William Freeman, the Thomas and Gerd Perkins Professor of Electrical Engineering and Computer Science and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL); and senior author Mark Hamilton, an MIT graduate student and senior engineering manager at Microsoft. The research will be presented at the International Conference on Learning Representations.

An accidental equation

The researchers didn’t set out to create a periodic table of machine learning.

After joining the Freeman Lab, Alshammari began studying clustering, a machine-learning technique that classifies images by learning to organize similar images into nearby clusters.

She realized the clustering algorithm she was studying was similar to another classical machine-learning algorithm, called contrastive learning, and began digging deeper into the mathematics. Alshammari found that these two disparate algorithms could be reframed using the same underlying equation.

“We almost got to this unifying equation by accident. Once Shaden discovered that it connects two methods, we just started dreaming up new methods to bring into this framework. Almost every single one we tried could be added in,” Hamilton says.

The framework they created, information contrastive learning (I-Con), shows how a variety of algorithms can be viewed through the lens of this unifying equation. It includes everything from classification algorithms that can detect spam to the deep learning algorithms that power LLMs.

The equation describes how such algorithms find connections between real data points and then approximate those connections internally.

Each algorithm aims to minimize the deviation between the connections it learns to approximate and the real connections in its training data.
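The article describes the equation only in words. The minimal NumPy sketch below assumes the formulation from the I-Con paper, in which the loss averages, over every anchor point i, the Kullback-Leibler divergence between a "real" neighborhood distribution p(j|i) and a learned one q(j|i). The specific choices here are illustrative assumptions, not the authors' code: p comes from class labels, q is a Gaussian-kernel softmax over embedding distances, and the function names (`label_distribution`, `embedding_distribution`, `icon_style_loss`) are hypothetical.

```python
import numpy as np

def softmax_rows(logits):
    # Row-wise softmax, shifted by each row's max for numerical stability
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def label_distribution(labels):
    # Hypothetical "real" connections p(j|i): uniform over other points with the same label
    same = (labels[:, None] == labels[None, :]).astype(float)
    np.fill_diagonal(same, 0.0)  # a point is not its own neighbor
    return same / same.sum(axis=1, keepdims=True)

def embedding_distribution(x, temperature=1.0):
    # Hypothetical learned connections q(j|i): softmax over negative squared distances
    d2 = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)
    logits = -d2 / temperature
    np.fill_diagonal(logits, -np.inf)  # exclude self-pairs
    return softmax_rows(logits)

def icon_style_loss(p, q, eps=1e-12):
    # Average over anchors i of KL(p(.|i) || q(.|i)); entries with p = 0 contribute nothing
    kl = np.where(p > 0, p * (np.log(p + eps) - np.log(q + eps)), 0.0)
    return kl.sum(axis=1).mean()

labels = np.array([0, 0, 1, 1])
x_good = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]])  # clusters match labels
x_bad = np.array([[0.0, 0.0], [5.0, 5.0], [0.1, 0.0], [5.1, 5.0]])   # clusters mix labels

p = label_distribution(labels)
print(icon_style_loss(p, embedding_distribution(x_good)))  # close to zero
print(icon_style_loss(p, embedding_distribution(x_bad)))   # much larger
```

Under this reading, swapping how p is built (labels, augmentations, nearest neighbors) or how q is parameterized (kernels, cluster assignments) is roughly what moves a method to a different cell of the table, while the KL objective stays fixed.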

They decided to organize I-Con into a periodic table to categorize algorithms based on how points are connected in real datasets and the primary ways algorithms can approximate those connections.

“The work went gradually, but once we had identified the general structure of this equation, it was easier to add more methods to our framework,” Alshammari says.

A tool for discovery

As they arranged the table, the researchers began to see gaps where algorithms could exist but hadn’t been invented yet.

The researchers filled in one gap by borrowing ideas from a machine-learning technique called contrastive learning and applying them to image clustering. This resulted in a new algorithm that could classify unlabeled images 8 percent better than another state-of-the-art approach.

They also used I-Con to show how a data debiasing technique developed for contrastive learning could be used to boost the accuracy of clustering algorithms.
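The article does not spell out the debiasing technique. One simple variant, assumed here purely for illustration and not confirmed as the paper's exact procedure, softens the target connections p(j|i) by blending each row with a uniform distribution over the other points. The intuition is that pairs the target treats as unrelated might actually be similar (false negatives), so they keep a little probability mass instead of being pushed apart at full strength. The function name `debias_targets` and the mixing weight `alpha` are hypothetical.

```python
import numpy as np

def debias_targets(p, alpha=0.1):
    # Hypothetical debiasing step: blend each target row p(.|i) with a uniform
    # distribution over the other N - 1 points, so pairs the target treats as
    # unrelated (possible false negatives) keep a small amount of probability mass.
    n = p.shape[0]
    uniform = np.full((n, n), 1.0 / (n - 1))
    np.fill_diagonal(uniform, 0.0)  # still exclude self-pairs
    return (1.0 - alpha) * p + alpha * uniform

# Example target: each point's only "real" neighbor is its pair partner
p = np.array([
    [0.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
])
p_tilde = debias_targets(p, alpha=0.3)
print(p_tilde[0])  # row 0 now places some mass on points 2 and 3
```

Because both terms are valid distributions over the other points, each blended row still sums to 1, so the result can be dropped into any KL-style objective in place of the original target.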

In addition, the flexible periodic table allows researchers to add new rows and columns to represent additional types of datapoint connections.

Ultimately, having I-Con as a guide could help machine-learning scientists think outside the box, encouraging them to combine ideas in ways they wouldn’t necessarily have thought of otherwise, says Hamilton.

“We’ve shown that just one very elegant equation, rooted in the science of information, gives you rich algorithms spanning 100 years of research in machine learning. This opens up many new avenues for discovery,” he adds.

“Perhaps the most challenging aspect of being a machine-learning researcher these days is the seemingly unlimited number of papers that appear each year. In this context, papers that unify and connect existing algorithms are of great importance, yet they are extremely rare. I-Con provides an excellent example of such a unifying approach and will hopefully inspire others to apply a similar approach to other domains of machine learning,” says Yair Weiss, a professor in the School of Computer Science and Engineering at the Hebrew University of Jerusalem, who was not involved in this research.

This research was funded, in part, by the Air Force Artificial Intelligence Accelerator, the National Science Foundation AI Institute for Artificial Intelligence and Fundamental Interactions, and Quanta Computer.