Caching is a ubiquitous idea in computer science that significantly improves the performance of storage and retrieval systems by storing a subset of popular items closer to the client based on request patterns. An important algorithmic piece of cache management is the decision policy used for dynamically updating the set of items being stored, which has been extensively optimized over several decades, resulting in several efficient and robust heuristics. While applying machine learning to cache policies has shown promising results in recent years (e.g., LRB, LHD, storage applications), it remains a challenge to outperform robust heuristics in a way that can generalize reliably beyond benchmarks to production settings, while maintaining competitive compute and memory overheads.

In “HALP: Heuristic Aided Learned Preference Eviction Policy for YouTube Content Delivery Network”, presented at NSDI 2023, we introduce a scalable state-of-the-art cache eviction framework that is based on learned rewards and uses preference learning with automated feedback. The Heuristic Aided Learned Preference (HALP) framework is a meta-algorithm that uses randomization to merge a lightweight heuristic baseline eviction rule with a learned reward model. The reward model is a lightweight neural network that is continuously trained with ongoing automated feedback on preference comparisons designed to mimic the offline oracle. We discuss how HALP has improved infrastructure efficiency and user video playback latency for YouTube’s content delivery network.

Learned preferences for cache eviction decisions

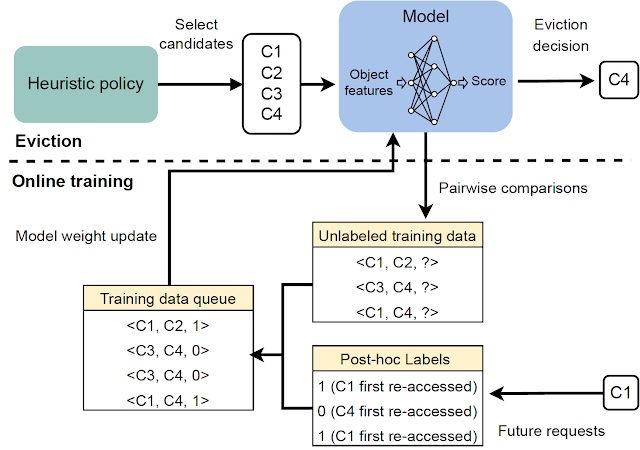

The HALP framework computes cache eviction decisions based on two components: (1) a neural reward model trained with automated feedback via preference learning, and (2) a meta-algorithm that combines a learned reward model with a fast heuristic. As the cache observes incoming requests, HALP continuously trains a small neural network that predicts a scalar reward for each item, formulating this as a preference learning problem with pairwise preference feedback. This aspect of HALP is similar to reinforcement learning from human feedback (RLHF) systems, but with two important distinctions:

- Feedback is automated and leverages well-known results about the structure of offline optimal cache eviction policies.

- The model is learned continuously using a transient buffer of training examples constructed from the automated feedback process.

The eviction decisions rely on a filtering mechanism with two steps. First, a small subset of candidates is selected using a heuristic that is efficient, but suboptimal in terms of performance. Then, a re-ranking step optimizes from within the baseline candidates via the sparing use of a neural network scoring function to “boost” the quality of the final decision.

As a production-ready cache policy implementation, HALP not only makes eviction decisions, but also subsumes the end-to-end process of sampling pairwise preference queries used to efficiently construct relevant feedback and update the model that powers eviction decisions.

A neural reward model

HALP uses a lightweight two-layer multilayer perceptron (MLP) as its reward model to selectively score individual items in the cache. The features are constructed and managed as a metadata-only “ghost cache” (similar to classical policies like ARC). After any given lookup request, in addition to regular cache operations, HALP conducts the book-keeping (e.g., tracking and updating feature metadata in a capacity-constrained key-value store) needed to update the dynamic internal representation. This includes: (1) externally tagged features provided by the user as input, along with a cache lookup request, and (2) internally constructed dynamic features (e.g., time since last access, average time between accesses) constructed from lookup times observed on each item.
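
The two dynamic features named above, and a small MLP that maps them to a scalar, can be sketched as follows. This is a minimal illustration, not HALP's implementation: the class names `ItemStats` and `TinyMLP`, the hidden width, the ReLU activation, and the initialization range are all assumptions made for the example.

```python
import random


class ItemStats:
    """Per-key metadata (hypothetical layout), kept independently of the cached bytes."""

    def __init__(self, now):
        self.last_access = now
        self.access_count = 1
        self.mean_gap = 0.0  # running average time between accesses

    def record_access(self, now):
        gap = now - self.last_access
        # access_count equals the number of gaps observed, including this one.
        self.mean_gap += (gap - self.mean_gap) / self.access_count
        self.access_count += 1
        self.last_access = now

    def features(self, now):
        # [time since last access, average time between accesses]
        return [now - self.last_access, self.mean_gap]


class TinyMLP:
    """One hidden ReLU layer plus a linear output; weights start random."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def score(self, x):
        hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        return sum(w * h for w, h in zip(self.w2, hidden)) + self.b2


stats = ItemStats(now=0)
stats.record_access(10)
stats.record_access(30)
reward = TinyMLP(n_in=2, n_hidden=4).score(stats.features(now=35))
```

A real deployment would also bound the number of tracked keys, since the metadata store is capacity-constrained.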

HALP learns its reward model fully online starting from a random weight initialization. This might seem like a bad idea, especially if the decisions are made exclusively for optimizing the reward model. However, the eviction decisions rely on both the learned reward model and a suboptimal but simple and robust heuristic like LRU. This allows for optimal performance when the reward model has fully generalized, while remaining robust to a temporarily uninformative reward model that is yet to generalize, or is in the process of catching up to a changing environment.

Another advantage of online training is specialization. Each cache server runs in a potentially different environment (e.g., geographic location), which influences local network conditions and what content is locally popular, among other things. Online training automatically captures this information while reducing the burden of generalization, as opposed to a single offline training solution.

Scoring samples from a randomized priority queue

It can be impractical to optimize for the quality of eviction decisions with an exclusively learned objective for two reasons.

- Compute efficiency constraints: Inference with a learned network can be significantly more expensive than the computations performed in practical cache policies operating at scale. This limits not only the expressivity of the network and features, but also how often these are invoked during each eviction decision.

- Robustness for generalizing out-of-distribution: HALP is deployed in a setup that involves continual learning, where a quickly changing workload can generate request patterns that are temporarily out-of-distribution with respect to previously seen data.

To address these issues, HALP first applies an inexpensive heuristic scoring rule that corresponds to an eviction priority to identify a small candidate sample. This process is based on efficient random sampling that approximates exact priority queues. The priority function for generating candidate samples is intended to be quick to compute using existing manually-tuned algorithms, e.g., LRU. However, this is configurable to approximate other cache replacement heuristics by editing a simple cost function. Unlike prior work, where the randomization was used to trade off approximation for efficiency, HALP also relies on the inherent randomization in the sampled candidates across time steps to provide the necessary exploratory diversity for both training and inference.
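
One way to picture sampling that approximates a priority queue: draw a random subset of cached keys and keep the few that the heuristic ranks as most evictable. The sketch below assumes an LRU priority (older last access means higher eviction priority); the function name `sample_candidates` and its parameters are hypothetical, and the actual sampling scheme in HALP may differ.

```python
import random


def sample_candidates(last_access, sample_size, num_candidates, rng):
    """Return up to num_candidates keys, most evictable first.

    last_access: dict mapping key -> last access time (assumed layout).
    """
    keys = list(last_access)
    sampled = rng.sample(keys, min(sample_size, len(keys)))
    # LRU cost function: the smallest last-access time is evicted first.
    # Swapping this key function approximates a different heuristic.
    sampled.sort(key=lambda k: last_access[k])
    return sampled[:num_candidates]


last_access = {"a": 5, "b": 1, "c": 9, "d": 3}
candidates = sample_candidates(last_access, sample_size=4,
                               num_candidates=2, rng=random.Random(0))
```

When `sample_size` covers the whole cache, the result matches an exact priority queue; smaller samples trade exactness for speed and also vary the candidate set from one eviction to the next.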

The final evicted item is chosen from among the supplied candidates, equivalent to the best-of-n reranked sample, corresponding to maximizing the predicted preference score according to the neural reward model. The same pool of candidates used for eviction decisions is also used to construct the pairwise preference queries for automated feedback, which helps minimize the training and inference skew between samples.
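
The best-of-n step and the reuse of the candidate pool for preference queries can be sketched in a few lines. The convention that a higher score means "more preferable to evict" is an assumption chosen to match the description above, and `choose_victim`, `preference_queries`, and the `max_queries` cap are hypothetical names.

```python
from itertools import combinations


def choose_victim(candidates, score_fn):
    """Best-of-n rerank: evict the candidate with the highest predicted score."""
    return max(candidates, key=score_fn)


def preference_queries(candidates, max_queries):
    """Pairs drawn from the same candidate pool, capped at max_queries."""
    return list(combinations(candidates, 2))[:max_queries]


scores = {"a": 0.1, "b": 0.9, "c": 0.3}  # stand-in for reward model outputs
victim = choose_victim(["a", "b", "c"], scores.get)
queries = preference_queries(["a", "b", "c"], max_queries=2)
```

Because both functions consume the same candidate list, the model is trained on the kind of items it is later asked to rank.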

An overview of the two-stage process invoked for each eviction decision.

Online preference learning with automated feedback

The reward model is learned using online feedback, which is based on automatically assigned preference labels that indicate, wherever feasible, the ranked preference ordering for the time taken to receive future re-accesses, starting from a given snapshot in time among each queried sample of items. This is similar to the oracle optimal policy, which, at any given time, evicts an item with the farthest future access from all the items in the cache.
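
To make the labeling rule concrete, the sketch below works on a fully known request trace, which is only available offline; HALP itself resolves labels incrementally as requests arrive. The function names and the trace representation (a list of keys) are assumptions for illustration.

```python
import math


def next_access_time(trace, start, key):
    """Index of the first request for key strictly after start, or infinity."""
    for t in range(start + 1, len(trace)):
        if trace[t] == key:
            return t
    return math.inf


def preference_label(trace, start, a, b):
    """Key preferred for eviction (re-accessed later), or None if undecidable."""
    ta = next_access_time(trace, start, a)
    tb = next_access_time(trace, start, b)
    if ta == tb:  # e.g. neither key is ever requested again
        return None
    return a if ta > tb else b


def oracle_victim(trace, start, cached):
    """Offline oracle: evict the cached key with the farthest future access."""
    return max(cached, key=lambda k: next_access_time(trace, start, k))


trace = ["a", "b", "c", "b", "a"]
label = preference_label(trace, start=2, a="a", b="b")
```

Here `b` is requested again before `a`, so `a` is the preferred eviction, which agrees with what the oracle would choose between the two.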

Generation of the automated feedback for learning the reward model.

To make this feedback process informative, HALP constructs pairwise preference queries that are most likely to be relevant for eviction decisions. In sync with the usual cache operations, HALP issues a small number of pairwise preference queries while making each eviction decision, and appends them to a set of pending comparisons. The labels for these pending comparisons can only be resolved at a random future time. To operate online, HALP also performs some additional book-keeping after each lookup request to process any pending comparisons that can be labeled incrementally after the current request. HALP indexes the pending comparison buffer with each element involved in the comparison, and recycles the memory consumed by stale comparisons (those in which neither item may ever be re-accessed) to ensure that the memory overhead associated with feedback generation remains bounded over time.
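
A pending-comparison buffer along these lines might look like the following sketch. It is indexed by each key involved, resolves a comparison as soon as one of its two keys is requested again, and recycles the oldest entry when full. The FIFO recycling rule, the class name `PendingComparisons`, and the `capacity` parameter (assumed to be at least 1) are illustrative assumptions, not details taken from HALP.

```python
from collections import OrderedDict, defaultdict


class PendingComparisons:
    def __init__(self, capacity):
        self.capacity = capacity          # assumed >= 1
        self.pending = OrderedDict()      # comparison id -> (a, b), oldest first
        self.by_key = defaultdict(set)    # key -> ids of comparisons involving it
        self.next_id = 0

    def _remove(self, cid):
        a, b = self.pending.pop(cid)
        for k in (a, b):
            self.by_key[k].discard(cid)
            if not self.by_key[k]:
                del self.by_key[k]

    def add(self, a, b):
        if len(self.pending) >= self.capacity:
            # Recycle the oldest unresolved comparison to bound memory.
            self._remove(next(iter(self.pending)))
        cid = self.next_id
        self.next_id += 1
        self.pending[cid] = (a, b)
        self.by_key[a].add(cid)
        self.by_key[b].add(cid)

    def on_access(self, key):
        """Resolve comparisons involving key.

        Returns (evict_preferred, keep_preferred) pairs: key was re-accessed
        first, so the other item is the better eviction.
        """
        labeled = []
        for cid in list(self.by_key.get(key, ())):
            a, b = self.pending[cid]
            other = b if a == key else a
            labeled.append((other, key))
            self._remove(cid)
        return labeled


buf = PendingComparisons(capacity=2)
buf.add("a", "b")
labels = buf.on_access("b")
```

Each resolved pair can then be appended to the transient training buffer for the reward model.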

Overview of all main components in HALP.

Results: Impact on the YouTube CDN

Through empirical analysis, we show that HALP compares favorably to state-of-the-art cache policies on public benchmark traces in terms of cache miss rates. However, while public benchmarks are a useful tool, they are rarely sufficient to capture all the usage patterns across the world over time, not to mention the diverse hardware configurations that we have already deployed.

Until recently, YouTube servers used an optimized LRU-variant for memory cache eviction. HALP increases YouTube’s memory egress/ingress (the ratio of the total bandwidth egress served by the CDN to that consumed for retrieval, or ingress, due to cache misses) by roughly 12% and memory hit rate by 6%. This reduces latency for users, since memory reads are faster than disk reads, and also improves egressing capacity for disk-bounded machines by shielding the disks from traffic.

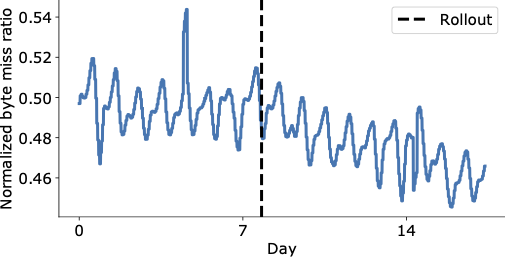

The accompanying figure shows a visually compelling reduction in the byte miss ratio in the days following HALP’s final rollout on the YouTube CDN, which is now serving significantly more content from within the cache with lower latency to the end user, and without having to resort to more expensive retrieval that increases the operating costs.

Aggregate worldwide YouTube byte miss ratio before and after rollout (vertical dashed line).

An aggregated performance improvement could still hide important regressions. In addition to measuring overall impact, we also conduct a machine-level analysis in the paper to understand HALP’s impact on different racks, and find it to be overwhelmingly positive.

Conclusion

We introduced a scalable state-of-the-art cache eviction framework that is based on learned rewards and uses preference learning with automated feedback. Because of its design choices, HALP can be deployed in a manner similar to any other cache policy without the operational overhead of having to separately manage the labeled examples, training procedure and the model versions as additional offline pipelines common to most machine learning systems. Therefore, it incurs only a small extra overhead compared to other classical algorithms, but has the added benefit of being able to take advantage of additional features to make its eviction decisions and continuously adapt to changing access patterns.

This is the first large-scale deployment of a learned cache policy to a widely used and heavily trafficked CDN, and it has significantly improved the CDN infrastructure efficiency while also delivering a better quality of experience to users.

Acknowledgements

Ramki Gummadi is now part of Google DeepMind. We would like to thank John Guilyard for help with the illustrations and Richard Schooler for feedback on this post.