Code-change reviews are a critical part of the software development process at scale, taking a significant amount of the code authors’ and the code reviewers’ time. As part of this process, the reviewer inspects the proposed code and asks the author for code changes through comments written in natural language. At Google, we see tens of millions of reviewer comments per year, and authors require a median of ~60 minutes of active shepherding time between sending changes for review and finally submitting the change. In our measurements, the active work time that the code author must spend to address reviewer comments grows almost linearly with the number of comments. However, with machine learning (ML), we have an opportunity to automate and streamline the code review process, e.g., by proposing code changes based on a comment’s text.

Today, we describe applying recent advances in large sequence models in a real-world setting to automatically resolve code review comments in the day-to-day development workflow at Google (publication forthcoming). As of today, code-change authors at Google address a substantial amount of reviewer comments by applying an ML-suggested edit. We expect that to reduce time spent on code reviews by hundreds of thousands of hours annually at Google scale. Unsolicited, very positive feedback highlights that ML-suggested code edits increase Googlers’ productivity and allow them to focus on more creative and complex tasks.

Predicting the code edit

We started by training a model that predicts the code edits needed to address reviewer comments. The model is pre-trained on various coding tasks and related developer activities (e.g., renaming a variable, repairing a broken build, editing a file). It is then fine-tuned for this specific task with reviewed code changes, the reviewer comments, and the edits the author performed to address those comments.

[Figure: An example of an ML-suggested edit of refactorings that are spread within the code.]

Google uses a monorepo, a single repository for all of its software artifacts, which allows our training dataset to include all unrestricted code used to build Google’s most recent software, as well as previous versions.

To improve the model quality, we iterated on the training dataset. For example, we compared the model performance for datasets with a single reviewer comment per file to datasets with multiple comments per file, and experimented with classifiers to clean up the training data based on a small, curated dataset, to choose the model with the best offline precision and recall metrics.

Serving infrastructure and user experience

We designed and implemented the feature on top of the trained model, focusing on the overall user experience and developer efficiency. As part of this, we explored different user experience (UX) alternatives through a series of user studies. We then refined the feature based on insights from an internal beta (i.e., a test of the feature in development), including user feedback (e.g., a “Was this helpful?” button next to the suggested edit).

The final model was calibrated for a target precision of 50%. That is, we tuned the model and the suggestions filtering so that 50% of suggested edits on our evaluation dataset are correct. In general, increasing the target precision reduces the number of shown suggested edits, and decreasing the target precision leads to more incorrect suggested edits. Incorrect suggested edits take the developers time and reduce the developers’ trust in the feature. We found that a target precision of 50% provides a good balance.
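The trade-off between target precision and the number of shown suggestions can be illustrated with a small calibration routine. This is a minimal sketch and not the calibration procedure used at Google: the `ScoredSuggestion` type, the `calibrate_threshold` function, and the toy data are all hypothetical, and it assumes each evaluation example carries a model confidence and a correctness label.

```python
from dataclasses import dataclass


@dataclass
class ScoredSuggestion:
    """Hypothetical evaluation record: model confidence plus a correctness label."""
    confidence: float
    is_correct: bool


def calibrate_threshold(scored, target_precision=0.5):
    """Pick the lowest confidence threshold that still meets the target precision.

    Returns (threshold, precision, coverage), or None if no threshold qualifies.
    Coverage is the fraction of evaluation examples that would be shown.
    """
    ranked = sorted(scored, key=lambda s: s.confidence, reverse=True)
    best = None
    correct = 0
    for shown, item in enumerate(ranked, start=1):
        correct += item.is_correct
        # Only evaluate a cutoff after the last item of a run of equal confidences.
        if shown < len(ranked) and ranked[shown].confidence == item.confidence:
            continue
        precision = correct / shown
        if precision >= target_precision:
            best = (item.confidence, precision, shown / len(ranked))
    return best


# Toy evaluation set (made up for illustration only).
evaluation = [
    ScoredSuggestion(0.9, True), ScoredSuggestion(0.8, True),
    ScoredSuggestion(0.7, False), ScoredSuggestion(0.6, True),
    ScoredSuggestion(0.5, False), ScoredSuggestion(0.4, False),
    ScoredSuggestion(0.3, False),
]
relaxed = calibrate_threshold(evaluation, target_precision=0.5)
strict = calibrate_threshold(evaluation, target_precision=0.75)
```

On this toy data, the stricter target yields a higher threshold and lower coverage than the relaxed one, which mirrors the trade-off described above.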

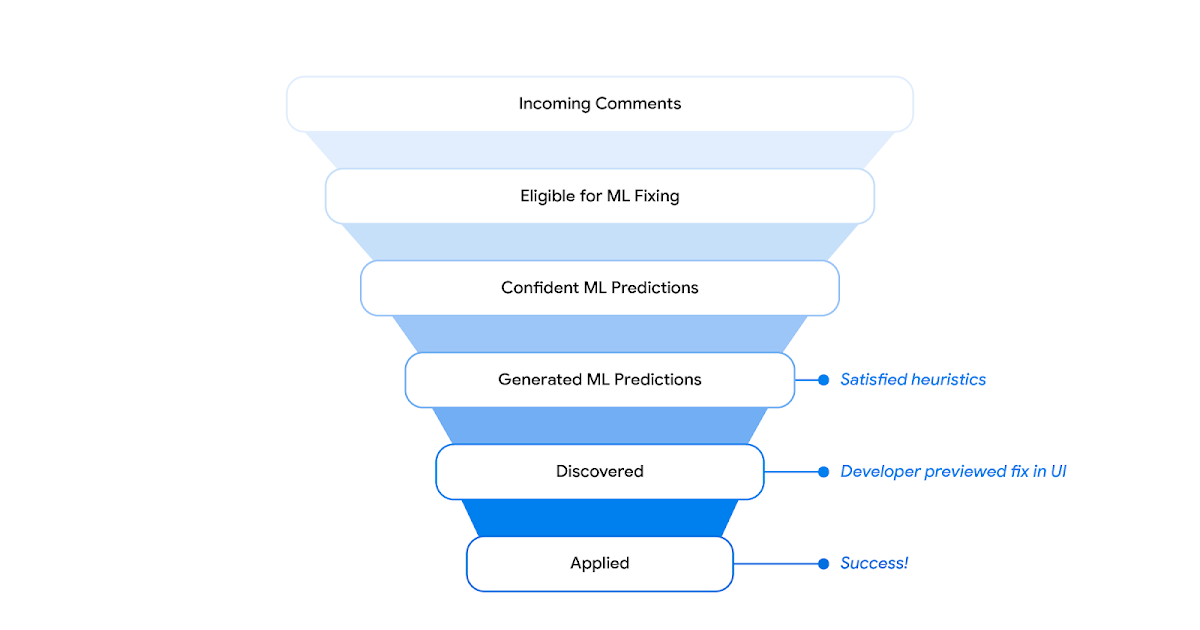

At a high level, for every new reviewer comment, we generate the model input in the same format that is used for training, query the model, and generate the suggested code edit. If the model is confident in the prediction and a few additional heuristics are satisfied, we send the suggested edit to downstream systems. The downstream systems, i.e., the code review frontend and the integrated development environment (IDE), expose the suggested edits to the user and log user interactions, such as preview and apply events. A dedicated pipeline collects these logs and generates aggregate insights, e.g., the overall acceptance rates as reported in this blog post.

[Figure: Architecture of the ML-suggested edits infrastructure. We process code and infrastructure from multiple services, get the model predictions, and surface the predictions in the code review tool and IDE.]
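The serving flow described in this section (build the input, query the model, gate on confidence and heuristics) might be sketched as follows. Every name here is an assumption made for illustration: the input format in `build_model_input`, the `is_noop` heuristic, and the `query_model` callable are hypothetical stand-ins, not Google's actual interfaces.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class ReviewComment:
    file_path: str
    code: str   # reviewed code state
    text: str   # the reviewer's natural-language comment


@dataclass
class Prediction:
    edited_code: str
    confidence: float


def build_model_input(comment: ReviewComment) -> str:
    """Serialize the comment the same way as at training time (format is hypothetical)."""
    return f"FILE: {comment.file_path}\nCODE:\n{comment.code}\nCOMMENT: {comment.text}"


def is_noop(comment: ReviewComment, prediction: Prediction) -> bool:
    """Example heuristic (an assumption): reject edits that change nothing."""
    return prediction.edited_code == comment.code


def suggest_edit(
    comment: ReviewComment,
    query_model: Callable[[str], Prediction],
    threshold: float,
    reject_heuristics: Sequence[Callable[[ReviewComment, Prediction], bool]],
) -> Optional[Prediction]:
    """Return a suggestion to send downstream, or None if it should be filtered."""
    prediction = query_model(build_model_input(comment))
    if prediction.confidence < threshold:
        return None  # the model is not confident enough
    if any(rejects(comment, prediction) for rejects in reject_heuristics):
        return None  # a serving-time heuristic filtered the prediction
    return prediction


# Usage with a stub in place of the real model.
comment = ReviewComment("a.py", "x = 1", "rename x to count")
stub_model = lambda _model_input: Prediction("count = 1", 0.9)
suggestion = suggest_edit(comment, stub_model, threshold=0.5, reject_heuristics=[is_noop])
```

Keeping the heuristics as a list of independent predicates makes it easy to add a new filter when a recurring failure pattern is found, without touching the model.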

The developer interacts with the ML-suggested edits in the code review tool and the IDE. Based on insights from the user studies, the integration into the code review tool is most suitable for a streamlined review experience. The IDE integration provides additional functionality and supports 3-way merging of the ML-suggested edits (left in the figure below), in case of conflicting local changes on top of the reviewed code state (right), into the merge result (center).

[Figure: 3-way-merge UX in the IDE.]
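To make the idea of a 3-way merge concrete, here is a minimal line-based sketch that combines a suggested edit and the author's local changes, both made against the same reviewed base. It is a simplified, diff3-style illustration built on Python's `difflib`, not the IDE's merge implementation; the function name `merge3` and the conflict-marker format are assumptions.

```python
import difflib


def _matches(base, other):
    """Map each base line index to the index of its matching line in `other`."""
    matcher = difflib.SequenceMatcher(None, base, other, autojunk=False)
    mapping = {}
    for block in matcher.get_matching_blocks():
        for k in range(block.size):
            mapping[block.a + k] = block.b + k
    return mapping


def merge3(base, suggested, local):
    """Merge two edits of `base` (lists of lines). Returns (merged_lines, has_conflict)."""
    in_suggested, in_local = _matches(base, suggested), _matches(base, local)
    # Anchors are base lines that both sides left untouched.
    anchors = [
        (i, in_suggested[i], in_local[i])
        for i in range(len(base))
        if i in in_suggested and i in in_local
    ]
    anchors.append((len(base), len(suggested), len(local)))  # end-of-file sentinel

    merged, has_conflict = [], False
    b = s = c = 0  # cursors into base, suggested, and local
    for bi, si, ci in anchors:
        base_part, sug_part, loc_part = base[b:bi], suggested[s:si], local[c:ci]
        if sug_part == base_part:
            merged += loc_part          # only the local side changed (or nobody did)
        elif loc_part == base_part or sug_part == loc_part:
            merged += sug_part          # only the suggestion changed, or both agree
        else:
            has_conflict = True         # both sides changed the same region differently
            merged += ["<<<<<<< suggested", *sug_part, "=======", *loc_part, ">>>>>>> local"]
        if bi < len(base):
            merged.append(base[bi])     # emit the shared anchor line
        b, s, c = bi + 1, si + 1, ci + 1
    return merged, has_conflict


base = ["a", "b", "c"]
merged, conflict = merge3(base, ["a", "B", "c"], ["a", "b", "c", "d"])
```

In this example the suggestion changes the middle line while the local edit appends a line, so both changes can be combined without a conflict. Overlapping changes that disagree are wrapped in conflict markers for the author to resolve.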

Results

Offline evaluations indicate that the model addresses 52% of comments with a target precision of 50%. The online metrics of the beta and the full internal launch confirm these offline metrics, i.e., we see model suggestions above our target model confidence for around 50% of all relevant reviewer comments. 40% to 50% of all previewed suggested edits are applied by code authors.

We used the “not helpful” feedback during the beta to identify recurring failure patterns of the model. We implemented serving-time heuristics to filter these and, thus, reduce the number of shown incorrect predictions. With these changes, we traded quantity for quality and observed an increased real-world acceptance rate.

[Figure: Code review tool UX. The suggestion is shown as part of the comment and can be previewed, applied, and rated as helpful or not helpful.]

Our beta launch showed a discoverability challenge: code authors only previewed ~20% of all generated suggested edits. We modified the UX and introduced a prominent “Show ML-edit” button (see the figure above) next to the reviewer comment, leading to an overall preview rate of ~40% at launch. We additionally found that suggested edits in the code review tool are often not applicable due to conflicting changes that the author made during the review process. We addressed this with a button in the code review tool that opens the IDE in a merge view for the suggested edit. We now observe that more than 70% of these are applied in the code review tool and fewer than 30% are applied in the IDE. All these changes allowed us to increase the overall fraction of reviewer comments that are addressed with an ML-suggested edit by a factor of 2 from beta to the full internal launch. At Google scale, these results help automate the resolution of hundreds of thousands of comments each year.

[Figure: Suggestions filtering funnel.]
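As a rough back-of-the-envelope illustration of how such a funnel composes, the stage rates quoted in this post can be multiplied together. This sketch assumes the stages are independent and apply to the same population of comments, which the post does not state; the post does not report the resulting end-to-end fractions, so the values computed here are illustrative only. The function name and the 45% apply rate (the midpoint of the quoted 40% to 50% range) are assumptions.

```python
def addressed_fraction(suggested_rate, preview_rate, apply_rate):
    """Fraction of comments resolved by an applied suggestion, assuming independent stages."""
    for rate in (suggested_rate, preview_rate, apply_rate):
        if not 0.0 <= rate <= 1.0:
            raise ValueError(f"rate must be within [0, 1], got {rate}")
    return suggested_rate * preview_rate * apply_rate


# Rates quoted in the post; the 0.45 apply rate is an assumed midpoint.
beta = addressed_fraction(suggested_rate=0.5, preview_rate=0.2, apply_rate=0.45)
launch = addressed_fraction(suggested_rate=0.5, preview_rate=0.4, apply_rate=0.45)
improvement = launch / beta
```

Under these assumptions, doubling the preview rate alone doubles the end-to-end fraction, which shows why discoverability can matter as much as model quality for the overall impact.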

We see ML-suggested edits addressing a wide range of reviewer comments in production. This includes simple localized refactorings and refactorings that are spread within the code, as shown in the examples throughout the blog post above. The feature also addresses longer and less formally worded comments that require code generation, refactorings, and imports.

[Figure: Example of a suggestion for a longer and less formally worded comment that requires code generation, refactorings, and imports.]

The model can also respond to complex comments and produce extensive code edits (shown below). The generated test case follows the existing unit test pattern, while changing the details as described in the comment. Additionally, the edit suggests a comprehensive name for the test that reflects the test semantics.

[Figure: Example of the model’s ability to respond to complex comments and produce extensive code edits.]

Conclusion and future work

In this post, we introduced an ML-assistance feature to reduce the time spent on code review related changes. At the moment, a substantial amount of all actionable code review comments on supported languages are addressed with applied ML-suggested edits at Google. A 12-week A/B experiment across all Google developers will further measure the impact of the feature on overall developer productivity.

We are working on improvements throughout the whole stack. This includes increasing the quality and recall of the model and building a more streamlined experience for the developer, with improved discoverability throughout the review process. As part of this, we are investigating the option of showing suggested edits to the reviewer while they draft comments, and expanding the feature into the IDE to enable code-change authors to get suggested code edits for natural-language commands.

Acknowledgements

This is the work of many people in the Google Core Systems & Experiences team, Google Research, and DeepMind. We’d like to specifically thank Peter Choy for bringing the collaboration together, and all of our team members for their key contributions and useful advice, including Marcus Revaj, Gabriela Surita, Maxim Tabachnyk, Jacob Austin, Nimesh Ghelani, Dan Zheng, Peter Josling, Mariana Stariolo, Chris Gorgolewski, Sascha Varkevisser, Katja Grünwedel, Alberto Elizondo, Tobias Welp, Paige Bailey, Pierre-Antoine Manzagol, Pascal Lamblin, Chenjie Gu, Petros Maniatis, Henryk Michalewski, Sara Wiltberger, Ambar Murillo, Satish Chandra, Madhura Dudhgaonkar, Niranjan Tulpule, Zoubin Ghahramani, Juanjo Carin, Danny Tarlow, Kevin Villela, Stoyan Nikolov, David Tattersall, Boris Bokowski, Kathy Nix, Mehdi Ghissassi, Luis C. Cobo, Yujia Li, David Choi, Kristóf Molnár, Vahid Meimand, Amit Patel, Brett Wiltshire, Laurent Le Brun, Mingpan Guo, Hermann Loose, Jonas Mattes, Savinee Dancs.