Forward-looking: Major chipmakers have been trying to stack different types of silicon components on top of each other for quite some time. Now, Samsung is reportedly ready to debut a significant advancement that integrates logic chips with high-bandwidth memory.

Samsung unveiled its latest and most advanced chip packaging technology, along with its service roadmap, during the Samsung Foundry Forum 2024 event. According to unnamed industry sources quoted by the Korea Economic Daily and to Samsung’s own statements, the new tech will debut with HBM4 high-bandwidth memory, due out in 2025.

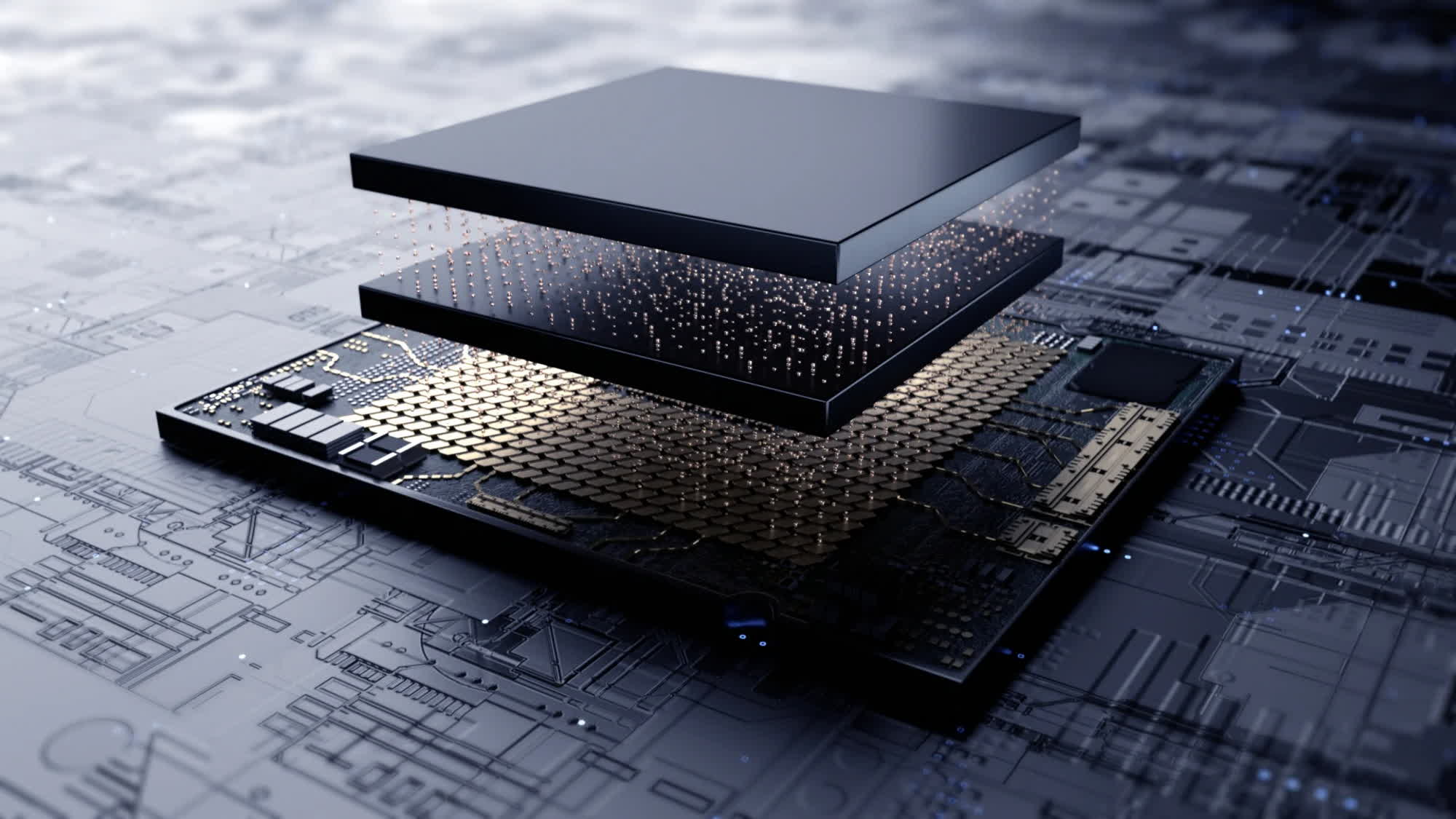

Samsung’s new stacking solution represents a significant advancement in chip design. It is the first 3D packaging technology officially unveiled by the world’s largest memory manufacturer, one of the few companies with the expertise and tools required to produce such advanced chips. Current stacking technologies are based on a “2.5D” design, where the HBM memory stacks sit beside the logic chip (mostly GPUs) and connect to it horizontally through a silicon interposer.

The 3D packaging technology developed by Samsung reportedly eliminates the need for an interposer, enabling “true” vertical stacking of the memory chip directly on the logic silicon. However, a 3D packaging design requires a new base die for HBM memory, bringing a much more complex process technology into the equation.

Samsung’s 3D packaging solution is based on a platform called SAINT, or Samsung Advanced Interconnect Technology. The tech has been in development for years and includes different approaches for different types of silicon: SAINT-S is for stacking SRAM dies on logic dies, SAINT-L is for stacking logic dies on logic dies, and SAINT-D is for stacking DRAM dies on logic dies.

Thanks to 3D packaging, future GPUs should offer faster data transfer rates, cleaner electrical signals, lower power consumption, and lower latency. However, 3D packaging will also come at a higher cost, and Samsung is reportedly interested in offering it as a “turnkey” service to sell more HBM chips to customers.

The Korean company is expected to finalize the SAINT-D process this year, with HBM4 memory technology arriving next year. Companies developing AI accelerators, particularly Nvidia, are Samsung’s main business targets for the new technology, according to the Korea Economic Daily. However, adopting SAINT-D would require a chip redesign effort that no company is publicly known to be working on yet.