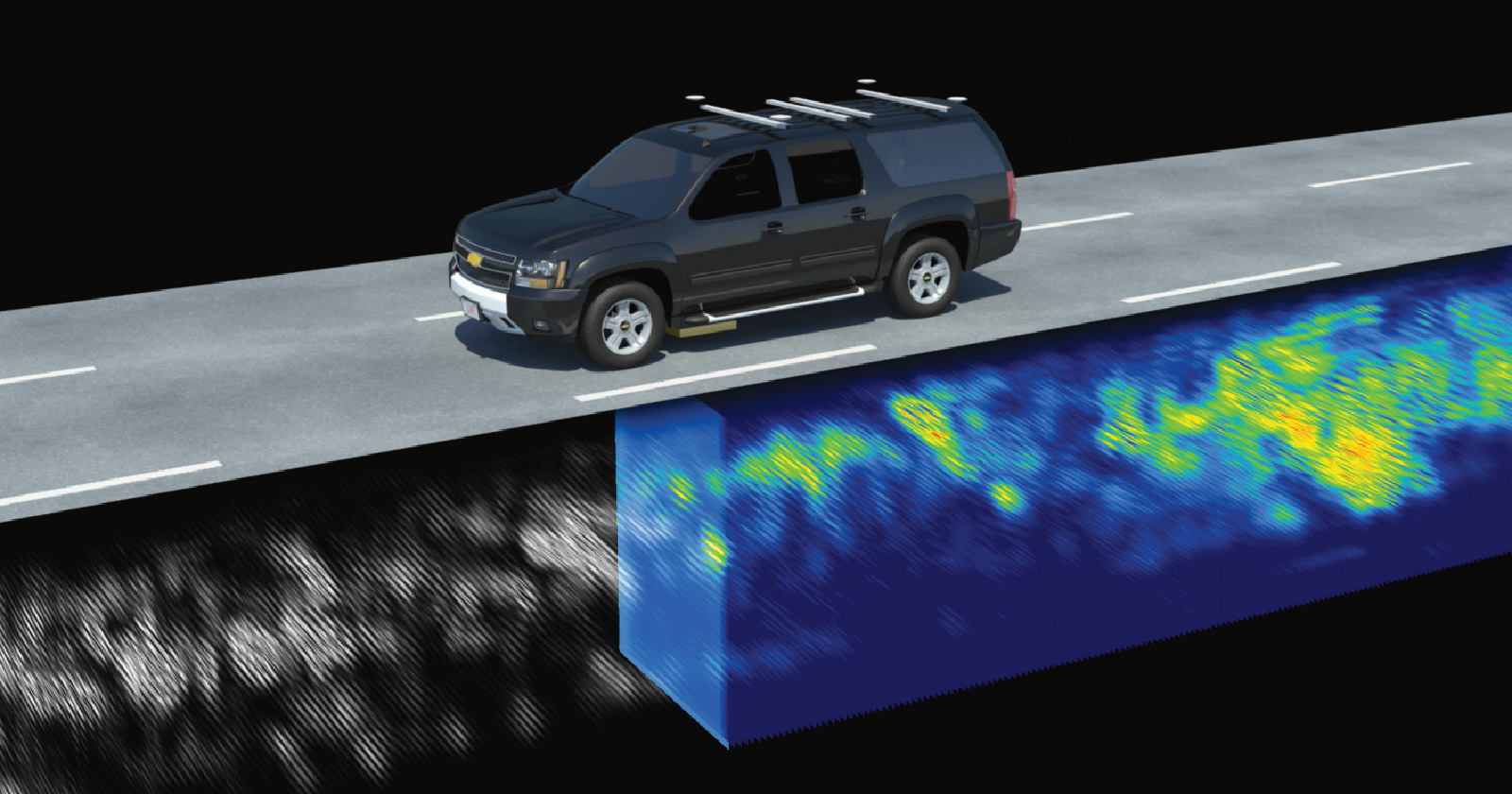

The development of fully autonomous vehicles has been compared to the moon landing, given the technological, legal, and even ethical challenges involved in putting an artificial intelligence system behind the wheel. Among all these issues, one of the most important is that the vehicle must know where it is at all times and be able to recognize its surroundings. A simple snowfall can render even the most advanced autonomous driving systems useless. For this reason, a team of researchers from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) has been developing a system that allows vehicles to map the subsoil. Their approach uses ground-penetrating radar (GPR), which offers detection capabilities that other sensors lack in these conditions. Specifically, it is a localizing GPR, or LGPR, developed by another MIT laboratory.

Until now, the standard way to give a vehicle awareness of its surroundings has been to use video cameras and LiDAR systems. LiDAR is effective at building a 3D map of the environment, but its laser beams cannot see through, for example, a blanket of snow. A GPR system, by contrast, sends electromagnetic pulses that reach up to three meters below the surface and detect the asphalt and the composition of the subsoil, as well as the presence of roots and other elements. CSAIL has used these properties to integrate the sensor into a self-driving vehicle and run tests on a closed, snow-covered track.
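What a GPR actually measures is time: the delay between sending a pulse and receiving its echo from a buried boundary. The short sketch below relates that delay to depth using the textbook approximation for low-loss, non-magnetic ground, in which the pulse travels at the speed of light divided by the square root of the material's relative permittivity. The function names and the permittivity of 6 used in the example are illustrative assumptions, not details of the MIT system; real values vary widely with material and moisture.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def wave_speed_m_s(relative_permittivity: float) -> float:
    """Approximate pulse speed in a low-loss, non-magnetic medium."""
    return SPEED_OF_LIGHT_M_S / math.sqrt(relative_permittivity)


def two_way_travel_time_ns(depth_m: float, relative_permittivity: float) -> float:
    """Time for a pulse to reach a reflector at depth_m and come back, in nanoseconds."""
    return 2.0 * depth_m / wave_speed_m_s(relative_permittivity) * 1e9


def depth_from_delay_m(delay_ns: float, relative_permittivity: float) -> float:
    """Inverse of two_way_travel_time_ns: reflector depth from the measured echo delay."""
    return wave_speed_m_s(relative_permittivity) * delay_ns * 1e-9 / 2.0


if __name__ == "__main__":
    # Assumed, illustrative permittivity of 6; real ground varies widely.
    delay = two_way_travel_time_ns(3.0, 6.0)
    print(f"echo from 3 m arrives after {delay:.1f} ns")
```

Under these assumptions, an echo from three meters down arrives roughly 49 nanoseconds after the pulse is sent.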

The project is still in the testing phase and has some obstacles to overcome. For example, the LGPR unit used in the tests is 1.5 meters wide and must be mounted on the outside of the vehicle to work properly. Even so, the researchers believe that, in the medium term, their approach could significantly improve the current capabilities of autonomous vehicles.

A virtual driving school for self-driving cars

Another of MIT's projects in the field of autonomous vehicles is a photorealistic simulation engine with a virtually unlimited range of scenarios, in which vehicles can learn how to react in a virtual environment. The problem with the simulators used until now was that their data, which came from real human trajectories, did not cover every possibility. For example, reacting to an imminent crash or to an oncoming vehicle drifting into the lane is not a common situation. MIT researchers have now used a simulator called VISTA that synthesizes an effectively unlimited number of trajectories that the vehicle could follow in the real world.

Essentially, the process starts by collecting video data of human driving. Each frame is converted into a 3D point cloud into which the virtual vehicle is placed. Whenever the vehicle changes its trajectory, the engine simulates the resulting change in perspective and renders a new photorealistic scene with the help of a neural network. Every time the virtual car crashes, the system sends it back to the starting point, which counts as a penalty. As the hours of training go by, the vehicle covers greater distances without a collision. The researchers were later able to transfer this learning to a real autonomous car.
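The crash-and-restart training loop described above can be illustrated with a deliberately tiny toy. The sketch below is not VISTA: instead of photorealistic scenes rendered from real video, the "world" is just the car's lateral offset from the lane centre, nudged by random drift, and the learner is plain tabular Q-learning, chosen here only for illustration because the article does not say which learning method MIT used. What it keeps from the description is the structure: every crash carries a penalty and sends the car back to the starting line, and over many episodes the car learns to stay on the road for longer. All names, rewards, and parameters (`train`, `evaluate`, `CRASH_PENALTY`, `MAX_STEPS`, and so on) are hypothetical.

```python
import random

# Toy stand-in for the simulator (hypothetical): the state is the car's lateral
# offset from the lane centre, an integer in [-2, 2]. Leaving that band is a crash.
ACTIONS = (-1, 0, 1)   # steer left, keep straight, steer right
MAX_STEPS = 50         # longest "distance" an episode may cover
CRASH_PENALTY = -10.0  # assumed penalty for a collision


def step(offset, action, rng):
    """Apply the steering action plus a random drift standing in for road disturbances."""
    new_offset = offset + action + rng.choice((-1, 1))
    return new_offset, abs(new_offset) > 2  # (next state, crashed?)


def greedy_action(q, offset):
    """Action with the highest estimated value in this state."""
    return max(ACTIONS, key=lambda a: q[(offset, a)])


def train(episodes=3000, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning in which every crash is penalised and resets the car.

    Returns the learned Q-table and the distance (steps survived) of each episode.
    """
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(-2, 3) for a in ACTIONS}
    distances = []
    for _ in range(episodes):
        offset, steps = 0, 0  # each episode starts back at the starting line
        while steps < MAX_STEPS:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = greedy_action(q, offset)  # exploit what has been learned
            new_offset, crashed = step(offset, action, rng)
            if crashed:
                target = CRASH_PENALTY  # a crash ends the episode, so nothing follows it
            else:
                # Reward of 1 for each step survived, plus discounted future value.
                target = 1.0 + gamma * max(q[(new_offset, a)] for a in ACTIONS)
            q[(offset, action)] += alpha * (target - q[(offset, action)])
            if crashed:
                break  # the next episode restarts from the starting line
            offset, steps = new_offset, steps + 1
        distances.append(steps)
    return q, distances


def evaluate(q, episodes=20, seed=1):
    """Drive with the learned policy (no exploration) and return each episode's distance."""
    rng = random.Random(seed)
    distances = []
    for _ in range(episodes):
        offset, steps = 0, 0
        while steps < MAX_STEPS:
            offset, crashed = step(offset, greedy_action(q, offset), rng)
            if crashed:
                break
            steps += 1
        distances.append(steps)
    return distances


if __name__ == "__main__":
    q, distances = train()
    print("mean distance, first 200 episodes:", sum(distances[:200]) / 200)
    print("mean distance, last 200 episodes: ", sum(distances[-200:]) / 200)
    print("greedy-policy distances:", evaluate(q))
```

Averaging the first and last few hundred entries of `distances` is a quick way to check whether episodes get longer as training proceeds, while `evaluate` drives with the learned policy alone, without the random exploration used during training.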

Source: MIT