Video understanding is a difficult drawback that requires reasoning about each spatial data (e.g., for objects in a scene, together with their places and relations) and temporal data for actions or occasions proven in a video. There are many video understanding functions and duties, akin to understanding the semantic content material of net movies and robotic notion. However, present works, akin to ViViT and TimeSFormer, densely course of the video and require vital compute, particularly as mannequin measurement plus video size and decision improve.

In “Rethinking Video ViTs: Sparse Video Tubes for Joint Image and Video Learning”, to be offered at CVPR 2023, we introduce a easy method that turns a Vision Transformer (ViT) mannequin image encoder into an environment friendly video spine utilizing sparse video tubes (learnable visible representations of samples from the video) to cut back the mannequin’s compute wants. This method can seamlessly course of each pictures and movies, which permits it to leverage each image and video knowledge sources throughout coaching. This coaching additional permits our sparse tubes ViT mannequin to coalesce image and video backbones collectively to serve a twin position as both an image or video spine (or each), relying on the enter. We exhibit that this mannequin is scalable, may be tailored to massive pre-trained ViTs with out requiring full fine-tuning, and achieves state-of-the-art outcomes throughout many video classification benchmarks.

|

| Using sparse video tubes to pattern a video, mixed with a regular ViT encoder, results in an environment friendly visible illustration that may be seamlessly shared with image inputs. |

Building a joint image-video spine

Our sparse tube ViT makes use of a regular ViT spine, consisting of a stack of Transformer layers, that processes video data. Previous strategies, akin to ViViT, densely tokenize the video and then apply factorized consideration, i.e., the eye weights for every token are computed individually for the temporal and spatial dimensions. In the usual ViT structure, self-attention is computed over the entire token sequence. When utilizing movies as enter, token sequences change into fairly lengthy, which may make this computation gradual. Instead, within the methodology we suggest, the video is sparsely sampled utilizing video tubes, that are 3D learnable visible representations of assorted shapes and sizes (described in additional element beneath) from the video. These tubes are used to sparsely pattern the video utilizing a big temporal stride, i.e., when a tube kernel is simply utilized to a couple places within the video, moderately than each pixel.

By sparsely sampling the video tubes, we will use the identical world self-attention module, moderately than factorized consideration like ViViT. We experimentally present that the addition of factorized consideration layers can hurt the efficiency as a result of uninitialized weights. This single stack of transformer layers within the ViT spine additionally permits higher sharing of the weights and improves efficiency. Sparse video tube sampling is finished through the use of a big spatial and temporal stride that selects tokens on a set grid. The massive stride reduces the variety of tokens within the full community, whereas nonetheless capturing each spatial and temporal data and enabling the environment friendly processing of all tokens.

Sparse video tubes

Video tubes are 3D grid-based cuboids that may have totally different shapes or classes and seize totally different data with strides and beginning places that may overlap. In the mannequin, we use three distinct tube shapes that seize: (1) solely spatial data (leading to a set of 2D image patches), (2) lengthy temporal data (over a small spatial space), and (3) each spatial and temporal data equally. Tubes that seize solely spatial data may be utilized to each image and video inputs. Tubes that seize lengthy temporal data or each temporal and spatial data equally are solely utilized to video inputs. Depending on the enter video measurement, the three tube shapes are utilized to the mannequin a number of occasions to generate tokens.

A set place embedding, which captures the worldwide location of every tube (together with any strides, offsets, and so forth.) relative to all the opposite tubes, is utilized to the video tubes. Different from the earlier realized place embeddings, this fastened one higher permits sparse, overlapping sampling. Capturing the worldwide location of the tube helps the mannequin know the place every got here from, which is particularly useful when tubes overlap or are sampled from distant video places. Next, the tube options are concatenated collectively to kind a set of N tokens. These tokens are processed by a regular ViT encoder. Finally, we apply an consideration pooling to compress all of the tokens right into a single illustration and enter to a totally linked (FC) layer to make the classification (e.g., enjoying soccer, swimming, and so forth.).

|

| Our video ViT mannequin works by sampling sparse video tubes from the video (proven on the backside) to allow both or each image or video inputs to be seamlessly processed. These tubes have totally different shapes and seize totally different video options. Tube 1 (yellow) solely captures spatial data, leading to a set of 2D patches that may be utilized to image inputs. Tube 2 (purple) captures temporal data and some spatial data and tube 3 (inexperienced) equally captures each temporal and spatial data (i.e., the spatial measurement of the tube x and y are the identical because the variety of frames t). Tubes 2 and 3 can solely be utilized to video inputs. The place embedding is added to all of the tube options. |

Scaling video ViTs

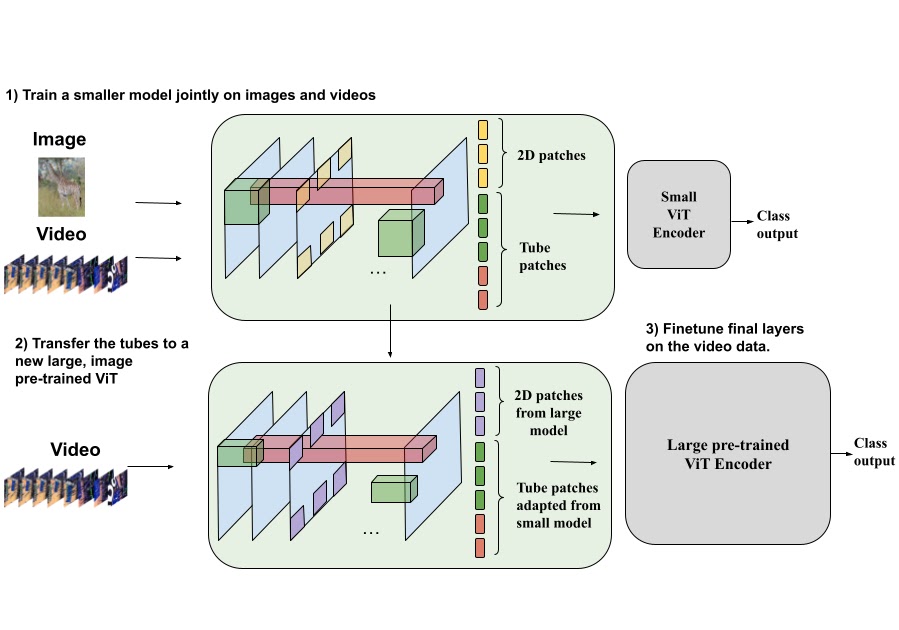

The means of constructing video backbones is computationally intensive, however our sparse tube ViT mannequin permits computationally environment friendly scaling of video fashions, leveraging beforehand educated image backbones. Since image backbones may be tailored to a video spine, massive image backbones may be become massive video backbones. More particularly, one can switch the realized video function representations from a small tube ViT to a big pre-trained image ViT and practice the ensuing mannequin with video knowledge for just a few steps, versus a full coaching from scratch.

|

| Our method permits scaling a sparse tube ViT in a extra environment friendly method. Specifically, the video options from a small video ViT (high community) may be transferred to a big, pre-trained image ViT (backside community), and additional fine-tuned. This requires fewer coaching steps to attain robust efficiency with the massive mannequin. This is useful as massive video fashions is likely to be prohibitively costly to coach from scratch. |

Results

We consider our sparse tube ViT method utilizing Kinetics-400 (proven beneath), Kinetics-600 and Kinetics-700 datasets and examine its efficiency to a protracted listing of prior strategies. We discover that our method outperforms all prior strategies. Importantly, it outperforms all state-of-the-art strategies educated collectively on image+video datasets.

|

| Performance in comparison with a number of prior works on the favored Kinetics-400 video dataset. Our sparse tube ViT outperforms state-of-the-art strategies. |

Furthermore, we check our sparse tube ViT mannequin on the Something-Something V2 dataset, which is usually used to judge extra dynamic actions, and additionally report that it outperforms all prior state-of-the-art approaches.

|

| Performance on the Something-Something V2 video dataset. |

Visualizing some realized kernels

It is attention-grabbing to know what sort of rudimentary options are being realized by the proposed mannequin. We visualize them beneath, displaying each the 2D patches, that are shared for each pictures and movies, and video tubes. These visualizations present the 2D or 3D data being captured by the projection layer. For instance, within the 2D patches, varied widespread options, like edges and colours, are detected, whereas the 3D tubes seize fundamental shapes and how they might change over time.

|

| Visualizations of patches and tubes realized the sparse tube ViT mannequin. Top row are the 2D patches and the remaining two rows are snapshots from the realized video tubes. The tubes present every patch for the 8 or 4 frames to which they’re utilized. |

Conclusions

We have offered a brand new sparse tube ViT, which may flip a ViT encoder into an environment friendly video mannequin, and can seamlessly work with each image and video inputs. We additionally confirmed that enormous video encoders may be bootstrapped from small video encoders and image-only ViTs. Our method outperforms prior strategies throughout a number of well-liked video understanding benchmarks. We consider that this straightforward illustration can facilitate way more environment friendly studying with enter movies, seamlessly incorporate both image or video inputs and successfully remove the bifurcation of image and video fashions for future multimodal understanding.

Acknowledgements

This work is carried out by AJ Piergiovanni, Weicheng Kuo and Anelia Angelova, who at the moment are at Google DeepMind. We thank Abhijit Ogale, Luowei Zhou, Claire Cui and our colleagues in Google Research for their useful discussions, feedback, and help.