Imagine for a minute that you’re a programming instructor who’s spent many hours making creative homework problems to introduce your students to the world of programming. One day, a colleague tells you about an AI tool called ChatGPT. To your surprise (and alarm), when you give it your homework problems, it solves most of them perfectly, maybe even better than you can! You realize that by now, AI tools like ChatGPT and GitHub Copilot are good enough to solve all of your class’s homework problems and affordable enough that any student can use them. How should you teach students in your classes knowing that these AI tools are widely available?

I’m Sam Lau from UC San Diego, and my Ph.D. advisor (and soon-to-be faculty colleague) Philip Guo and I are presenting a research paper at the International Computing Education Research conference (ICER) on this very topic. We wanted to know:


How are computing instructors planning to adapt their courses as more and more students start using AI coding assistance tools such as ChatGPT and GitHub Copilot?

To answer this question, we gathered a diverse sample of perspectives by interviewing 20 introductory programming instructors at universities across 9 countries (Australia, Botswana, Canada, Chile, China, Rwanda, Spain, Switzerland, United States) spanning all 6 populated continents. To our knowledge, our paper is the first empirical study to gather instructor perspectives about these AI coding tools that more and more students will likely have access to in the future.

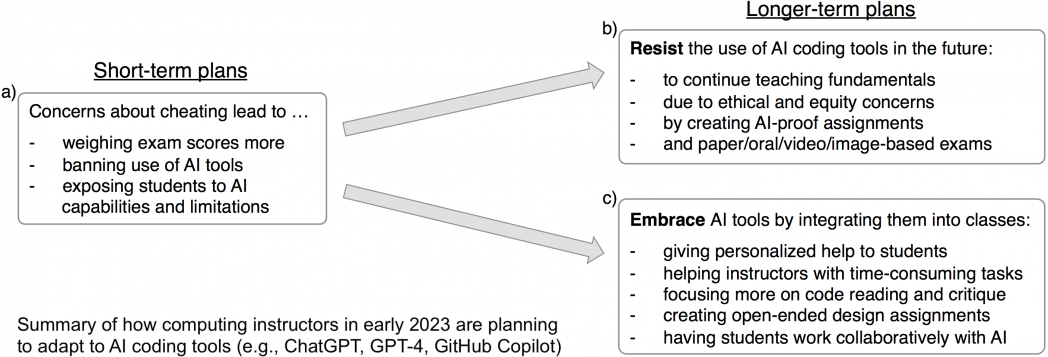

Here’s a summary of our findings:

Short-Term Plans: Instructors Want to Stop Students from Cheating

Even though we didn’t specifically ask about cheating in our interviews, all of the instructors we interviewed mentioned it as a primary reason to make changes to their courses in the short term. Their reasoning was: If students could easily get answers to their homework questions using AI tools, then they won’t need to think deeply about the material, and thus won’t learn as much as they should. Of course, having an answer key isn’t a new problem for instructors, who have always worried about students copying off each other or online resources like Stack Overflow. But AI tools like ChatGPT generate code with slight variations between responses, which is enough to fool most plagiarism detectors that instructors have available today.
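To make the point about slight variations concrete, here is a toy sketch of a similarity check. It is an illustration under stated assumptions, not how any real plagiarism detector works and not a method from our paper: the function names (`normalized_tokens`, `similarity`) and the three sample submissions are hypothetical. The sketch replaces identifiers with a placeholder so that simple renaming does not lower the score, then compares token sequences with Python’s standard `difflib`.

```python
import difflib
import io
import keyword
import tokenize

# Token types that carry layout only and are ignored in the comparison.
_SKIPPED = {
    tokenize.COMMENT, tokenize.NL, tokenize.NEWLINE,
    tokenize.INDENT, tokenize.DEDENT, tokenize.ENDMARKER,
}


def normalized_tokens(source: str) -> list:
    """Tokenize Python source, replacing every identifier with "ID"."""
    tokens = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type in _SKIPPED:
            continue
        if tok.type == tokenize.NAME and not keyword.iskeyword(tok.string):
            tokens.append("ID")  # hide variable and function names
        else:
            tokens.append(tok.string)
    return tokens


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two normalized token streams."""
    matcher = difflib.SequenceMatcher(
        None, normalized_tokens(a), normalized_tokens(b), autojunk=False
    )
    return matcher.ratio()


# Hypothetical submissions for the same homework problem.
SUBMISSION_A = "def total(xs):\n    s = 0\n    for x in xs:\n        s += x\n    return s\n"
# Same structure as A, with every name changed.
SUBMISSION_B = "def total(values):\n    result = 0\n    for v in values:\n        result += v\n    return result\n"
# Same behavior as A, written with a different structure.
SUBMISSION_C = "def total(xs):\n    return sum(x for x in xs)\n"

if __name__ == "__main__":
    print("A vs. B (renamed):     ", similarity(SUBMISSION_A, SUBMISSION_B))
    print("A vs. C (restructured):", similarity(SUBMISSION_A, SUBMISSION_C))
```

In this sketch, renaming alone leaves the score unchanged, while a structurally different solution to the same problem scores lower. A tool that can produce a fresh structural variant on every request makes this kind of comparison much less informative.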

The deeper issue for instructors is that if AI tools can easily solve problems in introductory courses, students who are learning programming for the first time might be led to believe that AI tools can correctly solve any programming task, which could cause them to grow overly reliant on them. One instructor described this as not just cheating, but “cheating badly” because AI tools generate code that’s incorrect in subtle ways that students might not be able to understand.

To discourage students from becoming over-reliant on AI tools, instructors used a mix of strategies, including making exams in-class and on-paper, and also having exams count for more of students’ final grades. Some instructors also explicitly banned AI tools in class, or exposed students to the limitations of AI tools. For instance, one instructor copied old homework questions into ChatGPT as a live demo in a lecture and asked students to critique the strengths and weaknesses of the AI-generated code. That said, instructors considered these strategies short-term patches; the sudden appearance of ChatGPT at the end of 2022 meant that instructors needed to make adjustments before their courses started in 2023, which was when we interviewed them for our study.

Longer-Term Plans (Part 1): Ideas to Resist AI Tools

In the next part of our study, instructors brainstormed many ideas about how to approach AI tools longer-term. We split up these ideas into two main categories: ideas that resist AI tools, and ideas that embrace them. Do note that most instructors we interviewed weren’t completely on one side or the other; they shared a mix of ideas from both categories. That said, let’s start with why some instructors talked about resisting AI tools, even in the long term.

The most common reason for wanting to resist AI tools was the concern that students wouldn’t learn the fundamentals of programming. Several instructors drew an analogy to using a calculator in math class: using AI tools could be like, in the words of one of our interview participants, “giving kids a calculator and they can play around with a calculator, but if they don’t know what a decimal point means, what do they really learn or do with it? They may not know how to plug in the right thing, or they don’t know how to interpret the answer.” Others mentioned ethical objections to AI. For example, one instructor was worried about recent lawsuits around Copilot’s use of open-source code as training data without attribution. Others shared concerns over bias in the training data for AI tools.

To resist AI tools in practice, instructors proposed ideas for designing “AI-proof” homework assignments, for example, by using a custom-built library for their course. Also, since AI tools are typically trained on U.S./English-centric data, instructors from other countries thought that they could make their assignments harder for AI to solve by including local cultural and language context (e.g., slang) from their countries.

Instructors also brainstormed ideas for AI-proof assessments. One common suggestion was to use in-person paper exams since proctors could better ensure that students were only using paper and pencil. Instructors also mentioned that they could try oral exams where students either talk to a course staff member in-person, or record a video explaining what their code does. Although these ideas were first suggested to help keep assessments meaningful, instructors also pointed out that these assessments could actually improve pedagogy by giving students a reason to think more deeply about why their code works rather than simply trying to get code that produces a correct answer.

Longer-Term Plans (Part 2): Ideas to Embrace AI Tools

Another group of ideas sought to embrace AI tools in introductory programming courses. The instructors we interviewed mentioned several reasons for wanting this future. Most commonly, instructors felt that AI coding tools would become standard for programmers; since “it’s inevitable” that professionals will use AI tools on the job, instructors wanted to prepare students for their future jobs. Related to this, some instructors thought that embracing AI tools could make their institutions more competitive by getting ahead of other universities that were more hesitant about doing so.

Instructors also saw potential learning benefits to using AI tools. For example, if these tools mean that students don’t need to spend as long wrestling with programming syntax in introductory courses, students could spend more time learning about how to better design and engineer programs. One instructor drew an analogy to compilers: “We don’t need to look at 1’s and 0’s anymore, and nobody ever says, ‘Wow what a big problem, we don’t write machine language anymore!’ Compilers are already like AI in that they can outperform the best humans in generating code.” And in contrast to concerns that AI tools could harm equity and access, some instructors thought that they could make programming less intimidating and thus more accessible by letting students start coding using natural language.

Instructors also saw many potential ways to use AI tools themselves. For example, many taught courses with over 100 students, where it would be too time-consuming to give individual feedback to each student. Instructors thought that AI tools trained on their class’s data could potentially give personalized help to each student, for example by explaining why a piece of code doesn’t work. Instructors also thought AI tools could help generate small practice problems for their students.

To prepare students for a future where AI tools are widespread, instructors mentioned that they could spend more time in class on code reading and critique rather than writing code from scratch. Indeed, these skills could be useful in the workplace even today, where programmers spend significant amounts of time reading and reviewing other people’s code. Instructors also thought that AI tools gave them the opportunity to give more open-ended assignments, and even have students collaborate with AI directly on their work, where an assignment would ask students to generate code using AI and then iterate on the code until it was both correct and efficient.

Reflections

Our study findings capture a rare snapshot in time in early 2023 as computing instructors are just starting to form opinions about this fast-growing phenomenon but have not yet converged on any consensus about best practices. Using these findings as inspiration, we synthesized a diverse set of open research questions regarding how to develop, deploy, and evaluate AI coding tools for computing education. For instance, what mental models do novices form both about the code that AI generates and about how the AI works to produce that code? And how do those novice mental models compare to experts’ mental models of AI code generation? (Section 7 of our paper has more examples.)

We hope that these findings, along with our open research questions, can spur conversations about how to work with these tools in effective, equitable, and ethical ways.

Check out our paper here and email us if you’d like to discuss anything related to it!

From “Ban It Till We Understand It” to “Resistance is Futile”: How University Programming Instructors Plan to Adapt as More Students Use AI Code Generation and Explanation Tools such as ChatGPT and GitHub Copilot. Sam Lau and Philip J. Guo. ACM Conference on International Computing Education Research (ICER), August 2023.