Pre-training vision-language (VL) models on web-scale image-caption datasets has recently emerged as a powerful alternative to traditional pre-training on image classification data. Image-caption datasets are considered more “open-domain” because they contain broader scene types and vocabulary words, which results in models with strong performance on few- and zero-shot recognition tasks. However, images with fine-grained class descriptions can be rare, and the class distribution can be imbalanced, since image-caption datasets do not go through manual curation. By contrast, large-scale classification datasets, such as ImageNet, are often curated and can thus provide fine-grained categories with a balanced label distribution. While it may sound promising, directly combining caption and classification datasets for pre-training is often unsuccessful, as it can result in biased representations that do not generalize well to various downstream tasks.

In “Prefix Conditioning Unifies Language and Label Supervision”, presented at CVPR 2023, we demonstrate a pre-training strategy that uses both classification and caption datasets to provide complementary benefits. First, we show that naïvely unifying the datasets results in sub-optimal performance on downstream zero-shot recognition tasks because the model is affected by dataset bias: the coverage of image domains and vocabulary words differs between the datasets. We address this problem during training through prefix conditioning, a novel, simple, and effective method that uses prefix tokens to disentangle dataset biases from visual concepts. This approach allows the language encoder to learn from both datasets while also tailoring feature extraction to each dataset. Prefix conditioning is a generic method that can be easily integrated into existing VL pre-training objectives, such as Contrastive Language-Image Pre-training (CLIP) or Unified Contrastive Learning (UniCL).

High-level idea

We note that classification datasets tend to be biased in at least two ways: (1) the images mostly contain single objects from restricted domains, and (2) the vocabulary is limited and lacks the linguistic flexibility required for zero-shot learning. For example, the class embedding of “a photo of a dog” optimized for ImageNet usually corresponds to a photo of one dog in the center of the image, as found in the ImageNet dataset. Such an embedding does not generalize well to other datasets containing images of multiple dogs in different spatial locations or a dog with other subjects.

By contrast, caption datasets contain a wider variety of scene types and vocabularies. As shown below, if a model simply learns from the two datasets, the language embedding can entangle the bias from the image classification and caption datasets, which can decrease generalization in zero-shot classification. If we can disentangle the bias of the two datasets, we can use language embeddings that are tailored for the caption dataset to improve generalization.

| Top: Language embedding entangling the bias from the image classification and caption datasets. Bottom: Language embeddings disentangling the bias of the two datasets. |

Prefix conditioning

Prefix conditioning is partially inspired by prompt tuning, which prepends learnable tokens to the input token sequences to instruct a pre-trained model backbone to learn task-specific knowledge that can be used to solve downstream tasks. The prefix conditioning approach differs from prompt tuning in two ways: (1) it is designed to unify image-caption and classification datasets by disentangling the dataset bias, and (2) it is applied to VL pre-training, whereas standard prompt tuning is used to fine-tune models. Prefix conditioning is an explicit way to steer the behavior of model backbones based on the type of dataset provided by users. This is especially helpful in production when the number of different dataset types is known ahead of time.

During training, prefix conditioning learns a text token (prefix token) for each dataset type, which absorbs the bias of the dataset and allows the remaining text tokens to focus on learning visual concepts. Specifically, it prepends the prefix token for each dataset type to the input tokens, informing the language and visual encoders of the input data type (e.g., classification vs. caption). Prefix tokens are trained to learn the dataset-type-specific bias, which enables us to disentangle that bias in language representations and to use the embedding learned on the image-caption dataset at test time, even without an input caption.
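The prepending step described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the reserved token ids, the `PREFIX_TOKEN_IDS` table, and the helper names `add_prefix` and `build_text_batch` are all hypothetical. In a real model each prefix id would index a learnable embedding that is trained jointly with the encoders.

```python
from typing import Dict, List, Tuple

# Hypothetical reserved token ids, one per dataset type (an assumption for
# illustration; the embedding row behind each id would be learned in training).
PREFIX_TOKEN_IDS: Dict[str, int] = {"classification": 1, "caption": 2}


def add_prefix(token_ids: List[int], dataset_type: str) -> List[int]:
    """Prepend the prefix token for `dataset_type` to a tokenized text."""
    if dataset_type not in PREFIX_TOKEN_IDS:
        raise ValueError(f"unknown dataset type: {dataset_type!r}")
    return [PREFIX_TOKEN_IDS[dataset_type]] + list(token_ids)


def build_text_batch(examples: List[Tuple[List[int], str]]) -> List[List[int]]:
    """Prefix every (token_ids, dataset_type) pair in a mixed training batch."""
    return [add_prefix(tokens, dataset_type) for tokens, dataset_type in examples]


# A mixed batch: one class-name prompt and one web caption (toy token ids).
batch = build_text_batch([([10, 11, 12], "classification"), ([20, 21], "caption")])
```

Because the dataset type is carried by a single leading token, the rest of the text pipeline stays unchanged, which is one reason the method is easy to add to an existing contrastive pre-training setup.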

We apply prefix conditioning to CLIP, using a language encoder and a visual encoder. At test time, we employ the prefix used for the image-caption dataset, since that dataset is expected to cover broader scene types and vocabulary words, leading to better performance in zero-shot recognition.

| Illustration of prefix conditioning. |
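As a rough sketch of how the test-time choice of prefix enters zero-shot classification, the snippet below scores an image embedding against class-name embeddings produced with a chosen prefix. Everything here is assumed for illustration: `encode_text` stands in for a trained, prefix-conditioned language encoder, and the function names are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0.0 else 0.0


def zero_shot_predict(
    image_embedding: Vector,
    class_names: List[str],
    encode_text: Callable[[str, str], Vector],  # hypothetical: (text, prefix) -> embedding
    prefix: str = "caption",  # the caption prefix is the default at test time
) -> Tuple[str, List[float]]:
    """Return the best-matching class name and the per-class similarity scores."""
    if not class_names:
        raise ValueError("class_names must not be empty")
    scores = [
        cosine_similarity(image_embedding, encode_text(name, prefix))
        for name in class_names
    ]
    best = max(range(len(scores)), key=scores.__getitem__)
    return class_names[best], scores
```

Switching `prefix` from "caption" to "classification" changes only the text embeddings, so the same image encoder output can be evaluated under either dataset's bias.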

Experimental results

We apply prefix conditioning to two types of contrastive loss, CLIP and UniCL, and evaluate their performance on zero-shot recognition tasks against models trained with only ImageNet21K (IN21K) or only Conceptual 12M (CC12M). CLIP and UniCL models trained on both datasets using prefix conditioning show large improvements in zero-shot classification accuracy.

| Zero-shot classification accuracy of models trained with only IN21K or CC12M compared to CLIP and UniCL models trained with both datasets using prefix conditioning (“Ours”). |

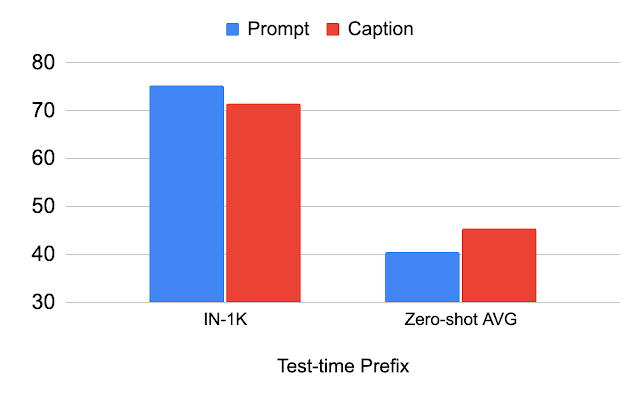
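For readers unfamiliar with the CLIP-style objective used in these experiments, the following is a minimal pure-Python sketch of a symmetric contrastive loss over a batch of paired image and text embeddings. It is an illustration under stated assumptions, not the training code behind the reported results: embeddings are assumed to be L2-normalized, the temperature of 0.07 is an assumed default, and the function names are hypothetical. UniCL additionally treats samples that share a label as positives, which this sketch does not cover. With prefix conditioning, the text embeddings would come from prefixed inputs.

```python
import math
from typing import List, Sequence


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def _log_softmax(row: List[float]) -> List[float]:
    """Numerically stable log-softmax over one row of logits."""
    m = max(row)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
    return [v - log_sum_exp for v in row]


def clip_contrastive_loss(
    image_embs: List[Sequence[float]],
    text_embs: List[Sequence[float]],
    temperature: float = 0.07,  # assumed default, not taken from the article
) -> float:
    """Symmetric cross-entropy where the i-th image matches the i-th text."""
    n = len(image_embs)
    if n == 0 or n != len(text_embs):
        raise ValueError("need equally sized, non-empty batches")
    # logits[i][j]: scaled similarity between image i and text j.
    logits = [[_dot(img, txt) / temperature for txt in text_embs] for img in image_embs]
    # Image-to-text direction: each row should pick its own column.
    i2t = -sum(_log_softmax(logits[k])[k] for k in range(n)) / n
    # Text-to-image direction: same computation on the transposed matrix.
    columns = [[logits[r][c] for r in range(n)] for c in range(n)]
    t2i = -sum(_log_softmax(columns[k])[k] for k in range(n)) / n
    return 0.5 * (i2t + t2i)
```

The loss is low when each image is most similar to its own text and high when the pairing is scrambled, which is the signal that pulls matched image and text embeddings together.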

Study on test-time prefix

The table below shows how performance changes with the prefix used at test time. Using the prefix learned for the classification dataset (“Prompt”) improves performance on the classification dataset (IN-1K). Using the prefix learned for the image-caption dataset (“Caption”) improves performance on other datasets (Zero-shot AVG). This analysis illustrates that a prefix tailored for the image-caption dataset achieves better generalization across scene types and vocabulary words.

| Analysis of the prefix used at test time. |

Study on robustness to image distribution shift

We study the shift in image distribution using ImageNet variants. We see that the “Caption” prefix performs better than “Prompt” on ImageNet-R (IN-R) and ImageNet-Sketch (IN-S), but underperforms on ImageNet-V2 (IN-V2). This indicates that the “Caption” prefix generalizes better on domains far from the classification dataset. Therefore, the optimal prefix probably depends on how far the test domain is from the classification dataset.

| Analysis of the robustness to image-level distribution shift. IN: ImageNet, IN-V2: ImageNet-V2, IN-R: art- and cartoon-style ImageNet, IN-S: ImageNet-Sketch. |

Conclusion and future work

We introduce prefix conditioning, a technique for unifying image-caption and classification datasets for better zero-shot classification. We show that this approach leads to better zero-shot classification accuracy and that the prefix can control the bias in the language embedding. One limitation is that the prefix learned on the caption dataset is not necessarily optimal for zero-shot classification. Identifying the optimal prefix for each test dataset is an interesting direction for future work.

Acknowledgements

This research was conducted by Kuniaki Saito, Kihyuk Sohn, Xiang Zhang, Chun-Liang Li, Chen-Yu Lee, Kate Saenko, and Tomas Pfister. Thanks to Zizhao Zhang and Sergey Ioffe for their valuable feedback.