Calvin Wankhede / Android Authority

When Google introduced the PaLM 2 and Gemini language models in mid-2023, the search giant emphasized that its AI was multimodal. This meant it could generate text, images, audio, and even video. Traditionally, language models like ChatGPT’s GPT-4 have only excelled at reproducing text. Google’s latest VideoPoet model challenges that notion, however, as it can convert text-based prompts into AI-generated videos.

With VideoPoet, Google has become one of the first tech giants to announce an AI capable of generating videos. And unlike prior attempts, Google says it can also generate scenes with lots of motion rather than just subtle movements. So what’s the magic behind VideoPoet, and what can it do? Here’s everything you need to know.

What is Google VideoPoet?

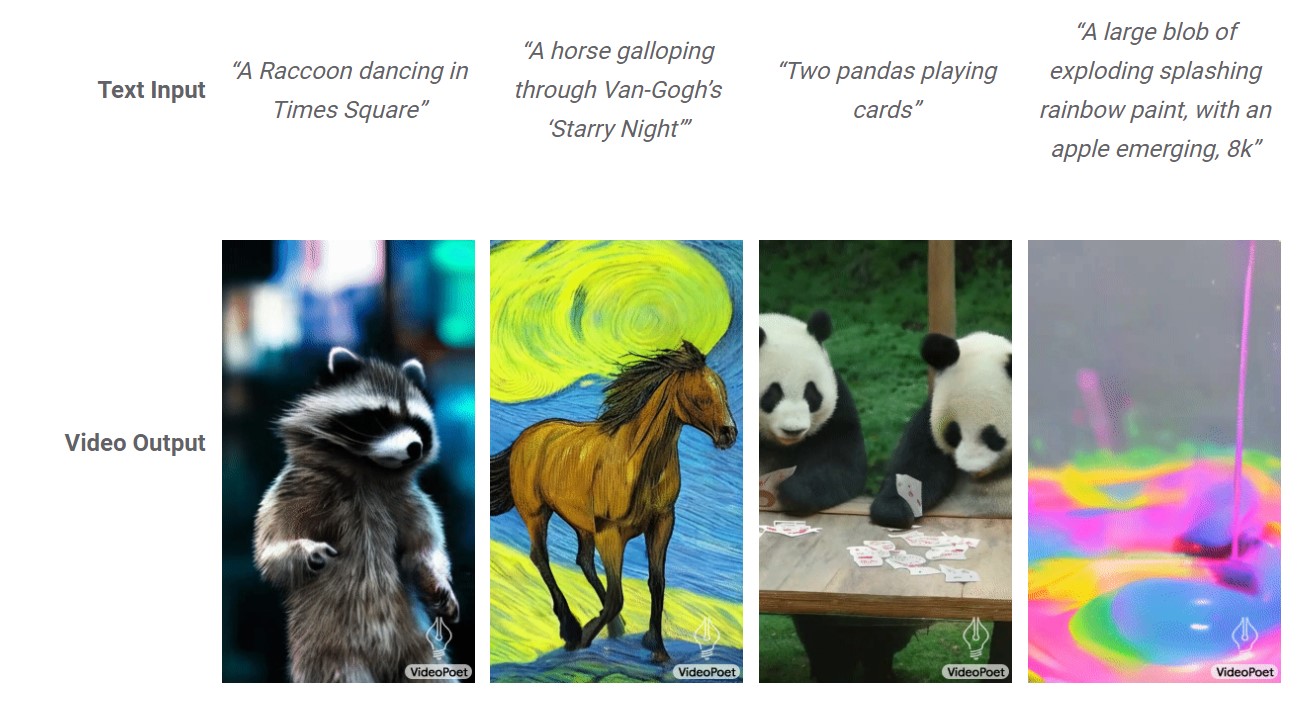

Google VideoPoet is an experimental large language model that can generate videos from a text-based prompt. You can describe a fictional scene, even one as ridiculous as “A robot cat eating spaghetti,” and have a video ready to watch within seconds. If you’ve ever used an AI image generator like Midjourney or DALL-E 3, you already know what to expect from VideoPoet.

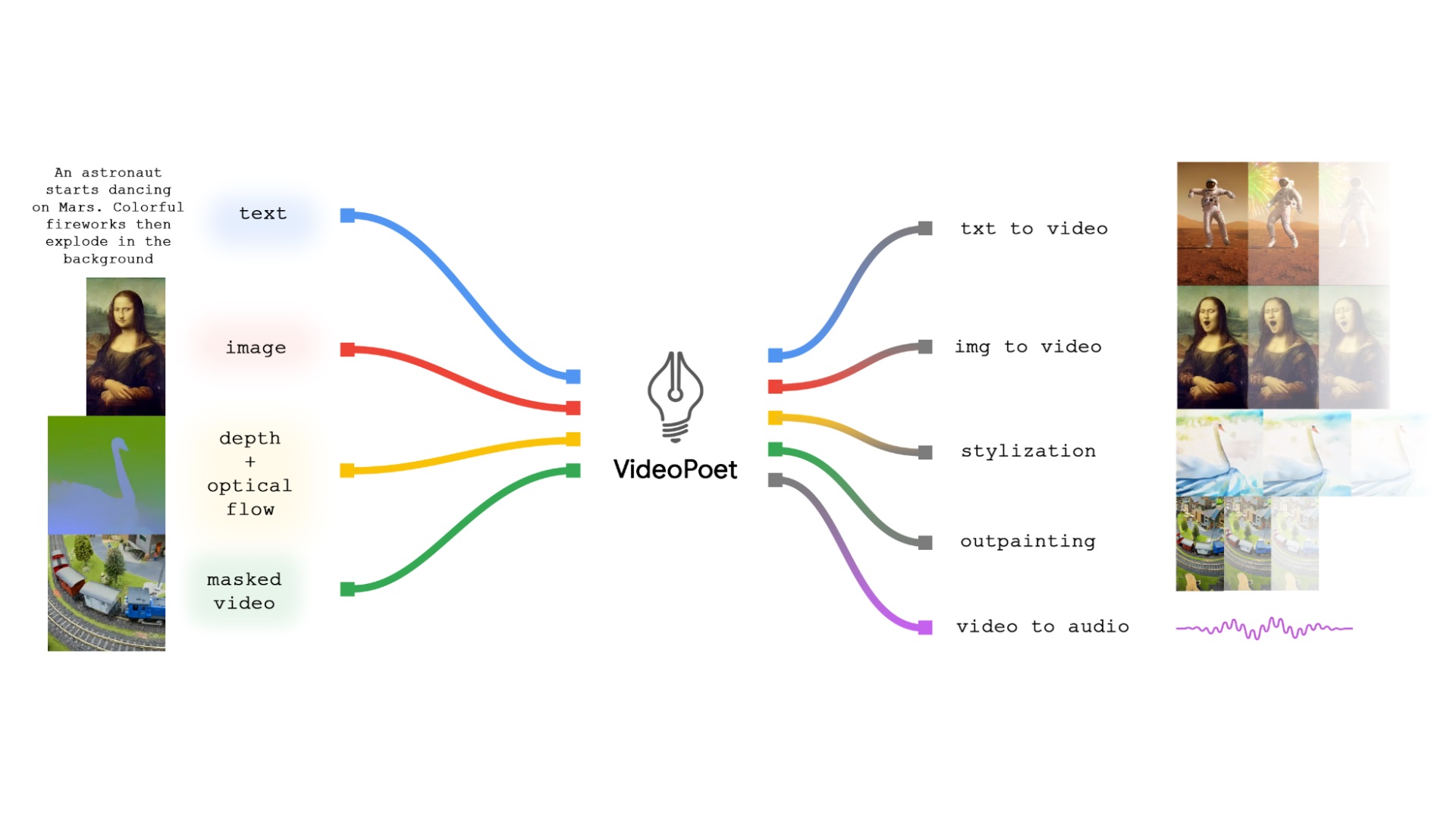

Like AI image generators, VideoPoet can also edit existing video content. For example, you can crop out a portion of the video frame and ask the AI to fill in the gap with something from your imagination instead.

Google has invested in startups like Runway that are working on AI video generation, but VideoPoet comes from the company’s internal efforts. The VideoPoet technical paper lists as many as 31 researchers from Google Research.

How does Google VideoPoet work?

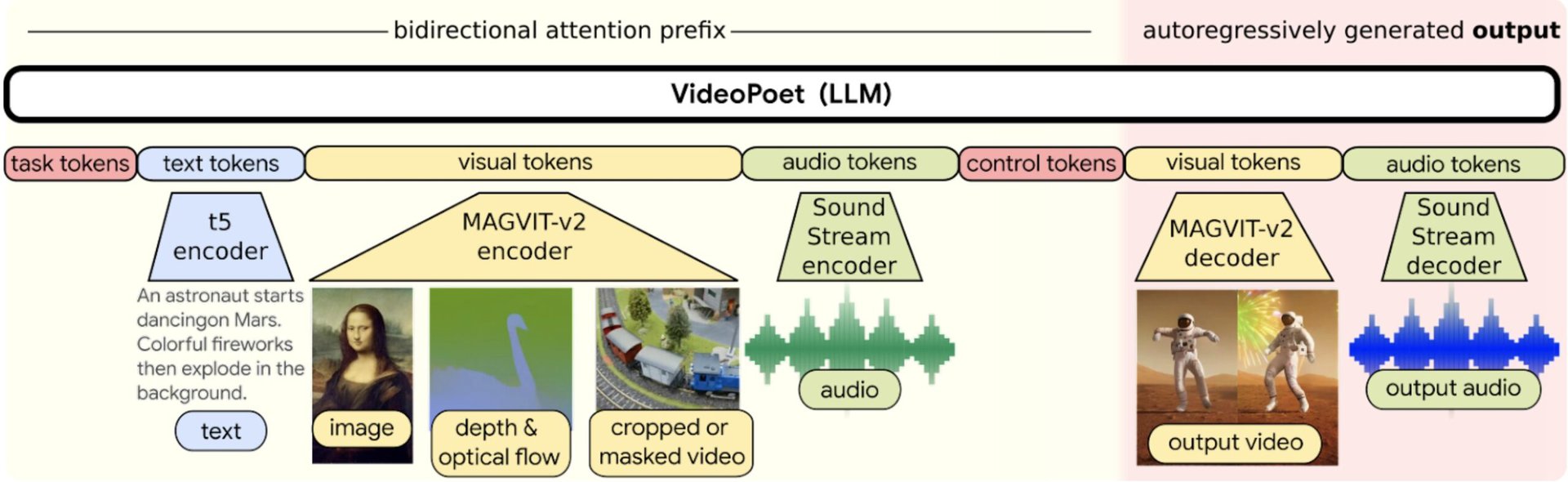

In the aforementioned paper, Google’s researchers explained that VideoPoet differs from conventional text-to-image and text-to-video generators. Unlike Midjourney, for example, VideoPoet doesn’t use a diffusion model to generate images from random noise. That approach works well for individual images but falls flat for videos, where the model needs to account for motion and consistency over time.

At its core, Google’s VideoPoet is a large language model. This means it’s based on the same technology that powers ChatGPT and Google Bard, which predicts how words fit together to form sentences. VideoPoet takes that idea a step further, because it can also predict chunks of video and audio, not just text.

VideoPoet is a large language model that generates videos instead of text.

VideoPoet required a specialized pre-training process that involved translating images, video frames, and audio clips into a common language called tokens. Put simply, the model learned how to interpret different modalities from the training data. Google says it used one billion image-text pairs and 270 million public video samples to train VideoPoet. Ultimately, VideoPoet became capable of predicting video tokens, just as a traditional LLM predicts text tokens.
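To make the idea of a shared token vocabulary more concrete, here’s a toy Python sketch. It is not Google’s code. The vocabulary sizes, the `to_shared`/`from_shared` helpers and the rule-based `toy_next_token` function (which stands in for a trained model) are all hypothetical. The sketch only shows the general pattern described above: text, video and audio each get their own slice of one ID space, and generation appends one predicted token at a time.

```python
from typing import Dict, List, Tuple

# HYPOTHETICAL vocabulary sizes per modality. These are toy numbers chosen
# for illustration; they are not VideoPoet's real tokenizer settings.
VOCAB_SIZES: Dict[str, int] = {"text": 100, "video": 50, "audio": 20}


def build_offsets(sizes: Dict[str, int]) -> Dict[str, int]:
    """Assign each modality a contiguous block of IDs in one shared vocabulary."""
    offsets: Dict[str, int] = {}
    start = 0
    for name, size in sizes.items():
        offsets[name] = start
        start += size
    return offsets


OFFSETS = build_offsets(VOCAB_SIZES)  # {"text": 0, "video": 100, "audio": 150}


def to_shared(modality: str, local_ids: List[int]) -> List[int]:
    """Map a modality's own token IDs into the shared ID space."""
    size = VOCAB_SIZES[modality]
    for i in local_ids:
        if not 0 <= i < size:
            raise ValueError(f"{modality} token {i} is out of range")
    return [OFFSETS[modality] + i for i in local_ids]


def from_shared(token: int) -> Tuple[str, int]:
    """Recover (modality, local ID) from a shared token ID."""
    for name, size in VOCAB_SIZES.items():
        start = OFFSETS[name]
        if start <= token < start + size:
            return name, token - start
    raise ValueError(f"token {token} is out of range")


def toy_next_token(context: List[int]) -> int:
    """HYPOTHETICAL stand-in for a trained model. A real LLM would output a
    probability distribution over the whole vocabulary; this deterministic
    rule just emits a video token derived from the context."""
    local = (context[-1] + len(context)) % VOCAB_SIZES["video"]
    return OFFSETS["video"] + local


def generate(prompt: List[int], steps: int) -> List[int]:
    """Autoregressive loop: each new token is predicted from all tokens so far."""
    seq = list(prompt)
    for _ in range(steps):
        seq.append(toy_next_token(seq))
    return seq


if __name__ == "__main__":
    prompt = to_shared("text", [7, 42])  # a "text prompt" of two token IDs
    output = generate(prompt, steps=3)
    print(output)  # [7, 42, 144, 147, 101]
    print([from_shared(t) for t in output[len(prompt):]])
```

Here, the two text tokens act as the prompt, and the three appended IDs fall in the video range. In a real system, a separate decoder would turn generated video tokens back into frames. That step is left out of this sketch.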

VideoPoet’s training gives it a strong foundation, which lets it perform tasks beyond text-to-video generation. For example, it can apply styles to existing videos, make edits like adding background effects, change the look of an existing video with filters, and change how a moving object moves in an existing video. Google demonstrated the latter with a raccoon dancing in different styles.

VideoPoet vs. rival AI video generators: What’s the difference?

Edgar Cervantes / Android Authority

Google’s VideoPoet differs from most of its rivals, which rely on diffusion models to turn text into videos. However, it’s not exactly the first to take this route: a smaller group of Google Brain researchers presented Phenaki last year. Likewise, Meta’s Make-A-Video project made waves in the AI community for generating varied videos without first training on video-text pairs. However, neither model has been publicly released.

Since we don’t have access to any of these video-generating models, we can only rely on the information Google has provided about VideoPoet. With that in mind, the paper’s authors assert that “In many cases, even the current leading models either generate small motion or, when producing larger motions, exhibit noticeable artifacts.” VideoPoet, on the other hand, can reportedly handle more motion.

VideoPoet can generate longer videos and handle motion more gracefully than the competition.

Google also says that VideoPoet can generate longer videos than the competition. Although it starts with an initial burst of two-second videos, it can maintain context across eight to 10 seconds of video. That may not sound like much, but it’s impressive given how much a scene can change in that time. That said, Google’s example videos include only a few dozen frames in total, far short of the 24 or 30 frames per second standard used in professional video and filmmaking.
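For a sense of scale, here’s the simple arithmetic for a clip of eight to 10 seconds at the frame rates mentioned above:

$$
8\ \text{s} \times 24\ \text{fps} = 192\ \text{frames}, \qquad 10\ \text{s} \times 30\ \text{fps} = 300\ \text{frames}
$$

In other words, matching professional frame rates over that span would take roughly 190 to 300 frames, several times more than a few dozen.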

Google VideoPoet availability: Is it free?

While Google has published dozens of example videos to demonstrate VideoPoet’s strengths, it stopped short of announcing a public rollout. In other words, we don’t know when we’ll be able to use VideoPoet, if at all.

Google hasn’t announced a product or release date for VideoPoet yet.

As for pricing, we may have to take a hint from AI image generators like Midjourney, which are only accessible via a subscription. Indeed, AI-generated images and videos are computationally expensive, so opening up access to everyone may not be feasible, even for Google. It may take a disruptive launch like OpenAI’s ChatGPT to force the search giant’s hand. Until then, we’ll simply have to wait and watch from the sidelines.