A protein located in the wrong part of a cell can contribute to several diseases, such as Alzheimer’s, cystic fibrosis, and cancer. But there are about 70,000 different proteins and protein variants in a single human cell, and since scientists can typically only test for a handful in one experiment, it is extremely costly and time-consuming to identify proteins’ locations manually.

A new generation of computational techniques seeks to streamline the process using machine-learning models that often leverage datasets containing thousands of proteins and their locations, measured across multiple cell lines. One of the largest such datasets is the Human Protein Atlas, which catalogs the subcellular behavior of over 13,000 proteins in more than 40 cell lines. But as enormous as it is, the Human Protein Atlas has only explored about 0.25 percent of all possible pairings of all proteins and cell lines within the database.
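To put that coverage figure in perspective, here is a rough back-of-the-envelope calculation. It assumes the lower bounds quoted above (13,000 proteins, 40 cell lines) as exact counts, which they are not, so the results are order-of-magnitude estimates only.

```python
# Lower bounds quoted in the article ("over 13,000", "more than 40"),
# treated here as exact counts purely for illustration.
proteins = 13_000
cell_lines = 40
coverage_percent = 0.25  # "about 0.25 percent" of pairings explored

total_pairs = proteins * cell_lines
measured_pairs = total_pairs * coverage_percent / 100
unmeasured_pairs = total_pairs - measured_pairs

print(f"possible pairings:    {total_pairs:,}")
print(f"measured (approx.):   {measured_pairs:,.0f}")
print(f"unmeasured (approx.): {unmeasured_pairs:,.0f}")
```

Under these assumptions, roughly 1,300 of about 520,000 protein and cell-line pairings have been measured, which leaves well over half a million for a predictive model to fill in.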

Now, researchers from MIT, Harvard University, and the Broad Institute of MIT and Harvard have developed a new computational approach that can efficiently explore the remaining uncharted space. Their method can predict the location of any protein in any human cell line, even when both protein and cell have never been tested before.

Their technique goes one step further than many AI-based methods by localizing a protein at the single-cell level, rather than as an averaged estimate across all the cells of a specific type. This single-cell localization could pinpoint a protein’s location in a specific cancer cell after treatment, for instance.

The researchers combined a protein language model with a special type of computer vision model to capture rich details about a protein and cell. In the end, the user receives an image of a cell with a highlighted portion indicating the model’s prediction of where the protein is located. Since a protein’s localization is indicative of its functional status, this technique could help researchers and clinicians more efficiently diagnose diseases or identify drug targets, while also enabling biologists to better understand how complex biological processes are related to protein localization.

“You could do these protein-localization experiments on a computer without having to touch any lab bench, hopefully saving yourself months of effort. While you would still need to verify the prediction, this technique could act like an initial screening of what to test for experimentally,” says Yitong Tseo, a graduate student in MIT’s Computational and Systems Biology program and co-lead author of a paper on this research.

Tseo is joined on the paper by co-lead author Xinyi Zhang, a graduate student in the Department of Electrical Engineering and Computer Science (EECS) and the Eric and Wendy Schmidt Center at the Broad Institute; Yunhao Bai of the Broad Institute; and senior authors Fei Chen, an assistant professor at Harvard and a member of the Broad Institute, and Caroline Uhler, the Andrew and Erna Viterbi Professor of Engineering in EECS and the MIT Institute for Data, Systems, and Society (IDSS), who is also director of the Eric and Wendy Schmidt Center and a researcher at MIT’s Laboratory for Information and Decision Systems (LIDS). The research appears today in Nature Methods.

Collaborating models

Many existing protein prediction models can only make predictions based on the protein and cell data on which they were trained, or are unable to pinpoint a protein’s location within a single cell.

To overcome these limitations, the researchers created a two-part method for prediction of unseen proteins’ subcellular location, called PUPS.

The first part uses a protein sequence model to capture the localization-determining properties of a protein and its 3D structure based on the chain of amino acids that forms it.

The second part incorporates an image inpainting model, which is designed to fill in missing parts of an image. This computer vision model looks at three stained images of a cell to gather information about the state of that cell, such as its type, individual features, and whether it is under stress.

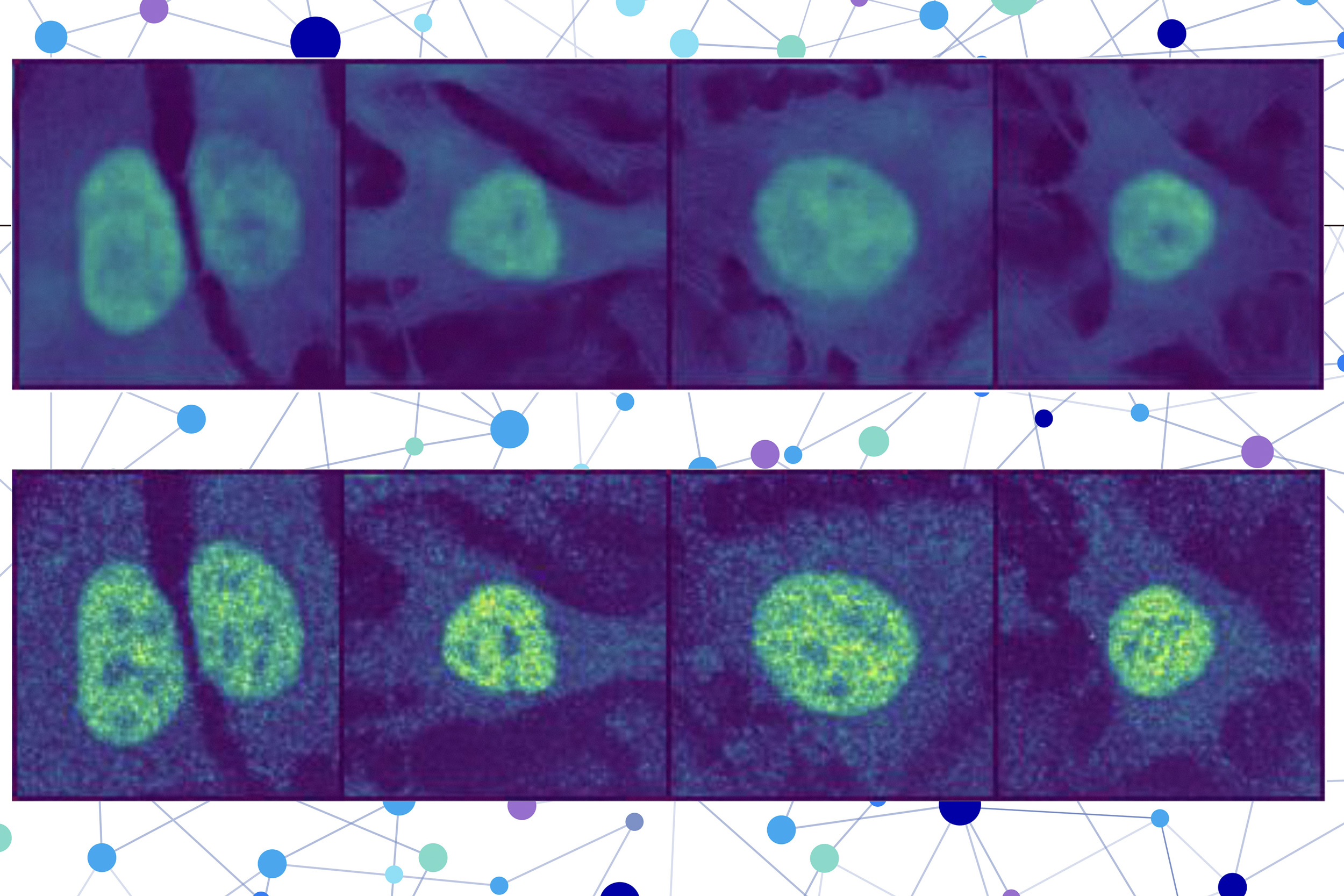

PUPS joins the representations created by each model to predict where the protein is located within a single cell, using an image decoder to output a highlighted image that shows the predicted location.

“Different cells within a cell line exhibit different characteristics, and our model is able to understand that nuance,” Tseo says.

A user inputs the sequence of amino acids that form the protein and three cell stain images: one for the nucleus, one for the microtubules, and one for the endoplasmic reticulum. Then PUPS does the rest.
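The inputs and the joining step described above can be illustrated with a toy sketch. This is not the PUPS code or its interface: the `LocalizationQuery` class, the stain key names, and both feature functions are hypothetical stand-ins, and plain concatenation is only one assumed way of joining two representations. In the real system, learned models would replace the hand-written features and an image decoder would turn the joint representation into a predicted image.

```python
from dataclasses import dataclass

# The 20 standard amino acids, as one-letter codes.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
# The three stains named in the article; these key names are hypothetical.
STAINS = ("nucleus", "microtubules", "endoplasmic_reticulum")


@dataclass
class LocalizationQuery:
    """Hypothetical container for one request: a sequence plus three stain images."""
    sequence: str
    stains: dict  # stain name -> 2D list of pixel intensities in [0, 1]

    def validate(self) -> None:
        if not self.sequence or set(self.sequence) - set(AMINO_ACIDS):
            raise ValueError("sequence must use the 20 standard one-letter codes")
        if set(self.stains) != set(STAINS):
            raise ValueError(f"expected exactly these stains: {STAINS}")
        shapes = {(len(img), len(img[0])) for img in self.stains.values()}
        if len(shapes) != 1:
            raise ValueError("all stain images must share one shape")


def protein_features(sequence: str) -> list:
    """Toy stand-in for a protein sequence model: amino-acid frequencies."""
    return [sequence.count(aa) / len(sequence) for aa in AMINO_ACIDS]


def cell_features(stains: dict) -> list:
    """Toy stand-in for an image model: mean intensity of each stain."""
    means = []
    for name in STAINS:
        pixels = [p for row in stains[name] for p in row]
        means.append(sum(pixels) / len(pixels))
    return means


def joint_representation(query: LocalizationQuery) -> list:
    """Join the two representations by concatenation (an assumption)."""
    query.validate()
    return protein_features(query.sequence) + cell_features(query.stains)


# Made-up example: a short sequence and three identical 2x2 stain images.
query = LocalizationQuery(
    sequence="MKTAYIAK",
    stains={name: [[0.0, 0.5], [0.5, 1.0]] for name in STAINS},
)
features = joint_representation(query)
print(len(features))  # 20 protein features followed by 3 cell features
```

The point of the sketch is the data flow: one vector describes the protein, another describes the individual cell, and the prediction is made from both together, which is what lets a model of this shape accept a protein or a cell it has not seen before.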

A deeper understanding

The researchers employed a few tricks during the training process to teach PUPS how to combine information from each model in such a way that it can make an educated guess on the protein’s location, even if it hasn’t seen that protein before.

For instance, they assign the model a secondary task during training: to explicitly name the compartment of localization, like the cell nucleus. This is done alongside the primary inpainting task to help the model learn more effectively.

A good analogy might be a teacher who asks their students to draw all the parts of a flower in addition to writing their names. This extra step was found to help the model improve its general understanding of the possible cell compartments.
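Training on a primary and a secondary task at once is commonly done by adding the two losses together. The sketch below shows that general pattern, not the losses PUPS actually uses, which this article does not specify. Mean squared error for the image task, cross-entropy for the compartment label, the `aux_weight` value, and the compartment names are all assumptions chosen for illustration.

```python
import math


def inpainting_loss(predicted, target):
    """Mean squared error between predicted and true protein-image pixels."""
    pairs = [(p, t) for prow, trow in zip(predicted, target) for p, t in zip(prow, trow)]
    return sum((p - t) ** 2 for p, t in pairs) / len(pairs)


def compartment_loss(probabilities, true_compartment):
    """Cross-entropy for the secondary 'name the compartment' task."""
    return -math.log(probabilities[true_compartment])


def multitask_loss(predicted, target, probabilities, true_compartment, aux_weight=0.5):
    """Primary loss plus a weighted secondary loss; aux_weight is a made-up value."""
    primary = inpainting_loss(predicted, target)
    secondary = compartment_loss(probabilities, true_compartment)
    return primary + aux_weight * secondary


# Made-up 2x2 prediction and target, plus made-up compartment probabilities.
predicted_image = [[0.9, 0.1], [0.2, 0.0]]
target_image = [[1.0, 0.0], [0.0, 0.0]]
compartment_probs = {"nucleus": 0.8, "cytosol": 0.15, "mitochondria": 0.05}

loss = multitask_loss(predicted_image, target_image, compartment_probs, "nucleus")
print(round(loss, 4))
```

Because both terms are minimized together, a model trained this way is penalized both for highlighting the wrong pixels and for naming the wrong compartment, which is one plausible reading of why the extra task improves its grasp of cell compartments.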

In addition, the fact that PUPS is trained on proteins and cell lines at the same time helps it develop a deeper understanding of where in a cell image proteins tend to localize.

PUPS can even understand, on its own, how different parts of a protein’s sequence contribute separately to its overall localization.

“Most other methods usually require you to have a stain of the protein first, so you’ve already seen it in your training data. Our approach is unique in that it can generalize across proteins and cell lines at the same time,” Zhang says.

Because PUPS can generalize to unseen proteins, it can capture changes in localization driven by unique protein mutations that aren’t included in the Human Protein Atlas.

The researchers verified that PUPS could predict the subcellular location of new proteins in unseen cell lines by conducting lab experiments and comparing the results. In addition, when compared to a baseline AI method, PUPS exhibited on average less prediction error across the proteins they tested.

In the future, the researchers want to enhance PUPS so the model can understand protein-protein interactions and make localization predictions for multiple proteins within a cell. In the longer term, they want to enable PUPS to make predictions in living human tissue, rather than cultured cells.

This research is funded by the Eric and Wendy Schmidt Center at the Broad Institute, the National Institutes of Health, the National Science Foundation, the Burroughs Wellcome Fund, the Searle Scholars Foundation, the Harvard Stem Cell Institute, the Merkin Institute, the Office of Naval Research, and the Department of Energy.