Routing in Google Maps remains one of our most helpful and frequently used features. Determining the best route from A to B requires making complex trade-offs between factors including the estimated time of arrival (ETA), tolls, directness, surface conditions (e.g., paved, unpaved roads), and user preferences, which vary across transportation mode and local geography. Often, the most natural visibility we have into travelers’ preferences comes from analyzing real-world travel patterns.

Learning preferences from observed sequential decision-making behavior is a classic application of inverse reinforcement learning (IRL). Given a Markov decision process (MDP), which formalizes the road network, and a set of demonstration trajectories (the traveled routes), the goal of IRL is to recover the users’ latent reward function. Although past research has created increasingly general IRL solutions, these have not been successfully scaled to world-sized MDPs. Scaling IRL algorithms is challenging because they typically require solving an RL subroutine at every update step. At first glance, even attempting to fit a world-scale MDP into memory to compute a single gradient step appears infeasible due to the large number of road segments and limited high-bandwidth memory. When applying IRL to routing, one needs to consider all reasonable routes between each demonstration’s origin and destination. This implies that any attempt to break the world-scale MDP into smaller components cannot consider components smaller than a metropolitan area.

To this end, in “Massively Scalable Inverse Reinforcement Learning in Google Maps”, we share the result of a multi-year collaboration among Google Research, Maps, and Google DeepMind to surpass this IRL scalability limitation. We revisit classic algorithms in this space, and introduce advances in graph compression and parallelization, along with a new IRL algorithm called Receding Horizon Inverse Planning (RHIP) that provides fine-grained control over performance trade-offs. The final RHIP policy achieves a 16–24% relative improvement in global route match rate, i.e., the percentage of de-identified traveled routes that exactly match the suggested route in Google Maps. To the best of our knowledge, this represents the largest instance of IRL in a real-world setting to date.

| Google Maps improvements in route match rate relative to the existing baseline, when using the RHIP inverse reinforcement learning policy. |

The advantages of IRL

A subtle but crucial detail about the routing problem is that it is goal conditioned, meaning that every destination state induces a slightly different MDP (specifically, the destination is a terminal, zero-reward state). IRL approaches are well suited to these types of problems because the learned reward function transfers across MDPs, and only the destination state is modified. This is in contrast to approaches that directly learn a policy, which typically require an extra factor of S parameters, where S is the number of MDP states.

Once the reward function is learned via IRL, we take advantage of a powerful inference-time trick. First, we evaluate the entire graph’s rewards once in an offline batch setting. This computation is performed entirely on servers without access to individual trips, and operates only over batches of road segments in the graph. Then, we save the results to an in-memory database and use a fast online graph search algorithm to find the highest-reward path for routing requests between any origin and destination. This circumvents the need to perform online inference of a deeply parameterized model or policy, and vastly improves serving costs and latency.

| Reward model deployment using batch inference and fast online planners. |
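The deployment pattern described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the toy graph, the segment features, the hand-written `reward_model` stand-in, and the choice of Dijkstra as the online search. It is not the production system. Rewards are assumed to be non-positive so that searching over costs equal to the negated reward is valid, and a segment's reward is charged when leaving it, so the destination contributes zero.

```python
import heapq

# Hypothetical toy road graph: segment -> successor segments.
GRAPH = {"a": ["b", "c"], "b": ["d"], "c": ["d"], "d": []}

# Hypothetical per-segment features: (travel_time_minutes, is_unpaved).
FEATURES = {"a": (1.0, 0), "b": (5.0, 0), "c": (2.0, 1), "d": (1.0, 0)}


def reward_model(features):
    """Stand-in for a learned reward; always non-positive in this sketch."""
    travel_time, unpaved = features
    return -(travel_time + 2.0 * unpaved)


def precompute_rewards(features_by_segment):
    """Offline batch step: score every segment once, independent of any trip."""
    return {seg: reward_model(f) for seg, f in features_by_segment.items()}


def best_route(graph, rewards, origin, destination):
    """Online step: Dijkstra over costs = -reward of the segment being left."""
    frontier = [(0.0, origin, [origin])]
    settled = set()
    while frontier:
        cost, seg, path = heapq.heappop(frontier)
        if seg == destination:
            return path, -cost
        if seg in settled:
            continue
        settled.add(seg)
        for nxt in graph[seg]:
            if nxt not in settled:
                heapq.heappush(frontier, (cost - rewards[seg], nxt, path + [nxt]))
    return None, float("-inf")


# Rewards are computed once and reused for every origin/destination pair.
REWARDS = precompute_rewards(FEATURES)
route, total_reward = best_route(GRAPH, REWARDS, "a", "d")
short_route, short_reward = best_route(GRAPH, REWARDS, "a", "b")
```

Note that the same `REWARDS` table serves both requests; only the destination passed to the search changes, which mirrors the goal-conditioned property discussed earlier.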

Receding Horizon Inverse Planning

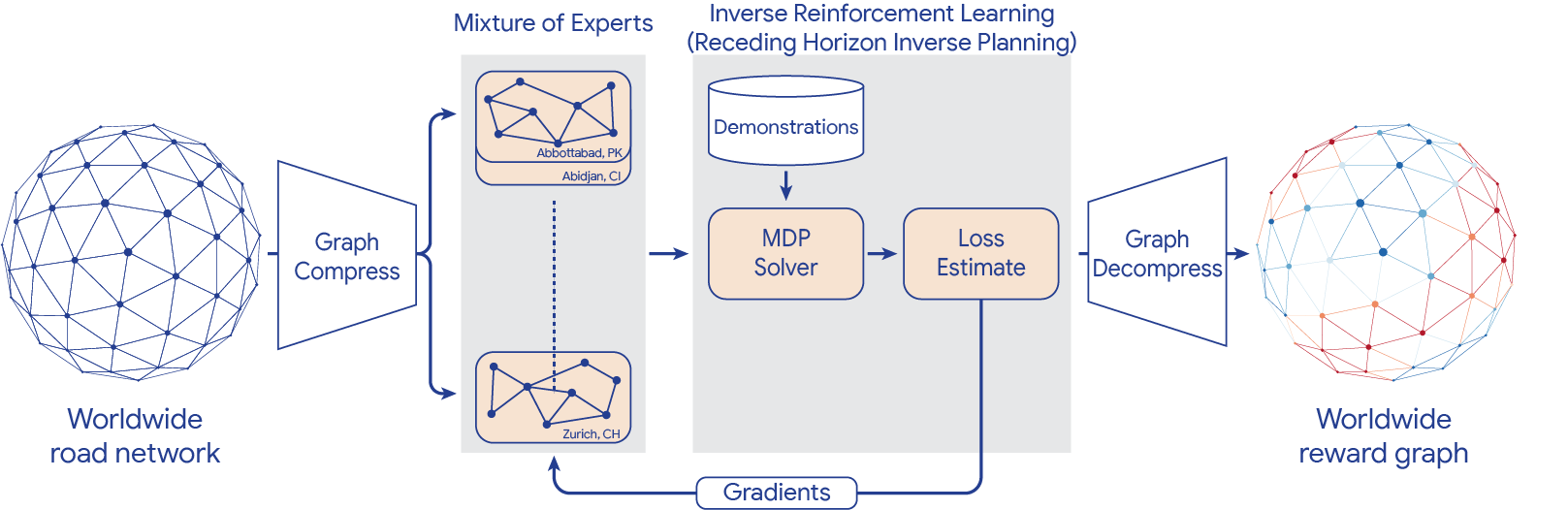

To scale IRL to the world MDP, we compress the graph and shard the global MDP using a sparse Mixture of Experts (MoE) based on geographic regions. We then apply classic IRL algorithms to solve the local MDPs, estimate the loss, and send gradients back to the MoE. The worldwide reward graph is computed by decompressing the final MoE reward model. To provide more control over performance characteristics, we introduce a new generalized IRL algorithm called Receding Horizon Inverse Planning (RHIP).

| IRL reward model training using MoE parallelization, graph compression, and RHIP. |

RHIP is inspired by people’s tendency to perform extensive local planning (“What am I doing for the next hour?”) and approximate long-term planning (“What will my life look like in 5 years?”). To take advantage of this insight, RHIP uses robust yet expensive stochastic policies in the local region surrounding the demonstration path, and switches to cheaper deterministic planners beyond some horizon. Adjusting the horizon H allows controlling computational costs, and often allows the discovery of the performance sweet spot. Interestingly, RHIP generalizes many classic IRL algorithms and provides the novel insight that they can be viewed along a stochastic vs. deterministic spectrum (specifically, for H=∞ it reduces to MaxEnt, for H=1 it reduces to BIRL, and for H=0 it reduces to MMP).

| Given a demonstration from s_o to s_d, (1) RHIP follows a robust yet expensive stochastic policy in the local region surrounding the demonstration (blue region). (2) Beyond some horizon H, RHIP switches to following a cheaper deterministic planner (red lines). Adjusting the horizon enables fine-grained control over performance and computational costs. |
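To make the role of the horizon concrete, here is a toy sketch of one way to read the description above. It is not the implementation from the paper. It assumes a tiny hypothetical graph with non-positive segment rewards, uses the deterministic planner's reward-to-go as a starting point, and then applies `horizon` soft (log-sum-exp) backups on top of it. All function names are illustrative.

```python
import math

# Hypothetical toy road graph and non-positive segment rewards (illustrative only).
GRAPH = {"a": ["b", "c"], "b": ["d"], "c": ["d"], "d": []}
REWARDS = {"a": -1.0, "b": -5.0, "c": -4.0, "d": 0.0}


def logsumexp(xs):
    """Numerically stable log(sum(exp(x))) that tolerates -inf entries."""
    m = max(xs)
    if m == float("-inf"):
        return m
    return m + math.log(sum(math.exp(x - m) for x in xs))


def deterministic_values(graph, rewards, destination):
    """Cheap planner: highest achievable reward-to-go from each segment."""
    values = {s: float("-inf") for s in graph}
    values[destination] = 0.0  # the destination is terminal with zero reward
    for _ in range(len(graph)):  # enough sweeps when rewards are non-positive
        for s, successors in graph.items():
            if s != destination and successors:
                values[s] = rewards[s] + max(values[n] for n in successors)
    return values


def rhip_values(graph, rewards, destination, horizon):
    """Apply `horizon` soft (stochastic) backups on top of the deterministic values."""
    values = deterministic_values(graph, rewards, destination)
    for _ in range(horizon):
        updated = dict(values)
        for s, successors in graph.items():
            if s != destination and successors:
                updated[s] = rewards[s] + logsumexp([values[n] for n in successors])
        values = updated
    return values


def first_step_policy(graph, rewards, destination, segment, horizon):
    """Distribution over next segments: softmax if horizon > 0, else greedy."""
    successors = graph[segment]
    tail = rhip_values(graph, rewards, destination, max(horizon - 1, 0))
    if horizon == 0:
        best = max(successors, key=lambda n: tail[n])
        return {n: 1.0 if n == best else 0.0 for n in successors}
    norm = logsumexp([tail[n] for n in successors])
    return {n: math.exp(tail[n] - norm) for n in successors}
```

In this sketch, `horizon=0` yields a purely deterministic planner, while increasing the horizon spreads probability over alternative routes near the current segment at the cost of extra backups. Each additional backup sweeps the whole graph, which is where the computational trade-off comes from.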

Routing wins

The RHIP policy provides lifts of 15.9% and 24.1% in global route match rate for driving and two-wheelers (e.g., scooters, motorcycles, mopeds), respectively, relative to the well-tuned Maps baseline. We’re especially excited about the benefits to more sustainable transportation modes, where factors beyond journey time play a substantial role. By tuning RHIP’s horizon H, we’re able to achieve a policy that is both more accurate than all other IRL policies and 70% faster than MaxEnt.
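As a reminder of how a relative lift in route match rate is computed, the following sketch uses made-up toy routes that have no connection to the reported results. The function names and data are hypothetical; a route counts as a match only if it is identical to the traveled route.

```python
def route_match_rate(traveled_routes, suggested_routes):
    """Fraction of traveled routes that exactly equal the suggested route."""
    matches = sum(1 for t, s in zip(traveled_routes, suggested_routes) if t == s)
    return matches / len(traveled_routes)


def relative_lift(new_rate, baseline_rate):
    """Relative improvement of new_rate over baseline_rate."""
    return (new_rate - baseline_rate) / baseline_rate


# Made-up toy data: each route is a list of segment identifiers.
traveled = [["a", "c", "d"], ["a", "b", "d"], ["a", "c", "d"], ["b", "d"]]
baseline = [["a", "b", "d"], ["a", "b", "d"], ["a", "b", "d"], ["b", "d"]]
candidate = [["a", "c", "d"], ["a", "c", "d"], ["a", "c", "d"], ["b", "d"]]

baseline_rate = route_match_rate(traveled, baseline)
candidate_rate = route_match_rate(traveled, candidate)
lift = relative_lift(candidate_rate, baseline_rate)
```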

Our 360M parameter reward model provides intuitive wins for Google Maps users in live A/B experiments. Examining road segments with a large absolute difference between the learned rewards and the baseline rewards can help improve certain Google Maps routes. For example:

| Nottingham, UK. The preferred route (blue) was previously marked as private property due to the presence of a large gate, which indicated to our systems that the road may be closed at times and would not be ideal for drivers. As a result, Google Maps routed drivers through a longer, alternate detour instead (red). However, because real-world driving patterns showed that users regularly take the preferred route without an issue (as the gate is almost never closed), IRL now learns to route drivers along the preferred route by placing a large positive reward on this road segment. |

Conclusion

Increasing performance via increased scale, both in terms of dataset size and model complexity, has proven to be a persistent trend in machine learning. Similar gains for inverse reinforcement learning problems have historically remained elusive, largely due to the challenges of handling practically sized MDPs. By introducing scalability advancements to classic IRL algorithms, we’re now able to train reward models on problems with hundreds of millions of states, demonstration trajectories, and model parameters, respectively. To the best of our knowledge, this is the largest instance of IRL in a real-world setting to date. See the paper to learn more about this work.

Acknowledgements

This work is a collaboration across multiple teams at Google. Contributors to the project include Matthew Abueg, Oliver Lange, Matt Deeds, Jason Trader, Denali Molitor, Markus Wulfmeier, Shawn O’Banion, Ryan Epp, Renaud Hartert, Rui Song, Thomas Sharp, Rémi Robert, Zoltan Szego, Beth Luan, Brit Larabee and Agnieszka Madurska.

We’d also like to extend our thanks to Arno Eigenwillig, Jacob Moorman, Jonathan Spencer, Remi Munos, Michael Bloesch and Arun Ahuja for helpful discussions and suggestions.